In the second SMB and ROBO HCI benchmark stage, we obtained 26.8 million IOPS, 101% of the theoretical 26.4 million IOPS, on a production-ready HCA cluster of 12 Supermicro SuperServers equipped with Intel® Xeon® Platinum 8268 processors, Intel® Optane™ SSD DC P4800X Series drives, and Mellanox ConnectX-5 100GbE NICs, all connected by Mellanox SN2700 Spectrum™ switches and Mellanox LinkX® copper cables. This is the standard hardware configuration of our production HCAs; only the CPUs were upgraded to chase the HCI industry record.

This is the third of three benchmark stages showing the performance of the 12-node production-ready StarWind HCA cluster. In this scenario, we measured storage performance over NVMe-oF.

For maximum performance, we installed four Intel® Optane™ SSD DC P4800X Series drives in each node. The software stack is likewise the fastest available: Microsoft Hyper-V (Windows Server 2019), with the StarWind Initiator service application running in Windows user-land. In this scenario, the StarWind NVMe-oF Initiator provides the storage interconnect, and SPDK NVMe-oF serves as the target.

There were certain limitations in our Enterprise HCI environment. Intel Optane NVMe drives serve as the fastest underlying storage and are connected to a virtual machine that handles IO. The storage has no block-level replication, so a highly available cluster configuration must replicate data at the VM or application level. This is due to the lack of "NVMe Reservations" functionality. This HCI configuration suits SQL AGs, SAP, and other databases whose administrators rely on their own application-level replication. We are developing NVMe Reservations functionality so that StarWind can support this configuration and make it production-ready.

Learn more about hyperconverged infrastructures powered by StarWind Virtual SAN on our website.

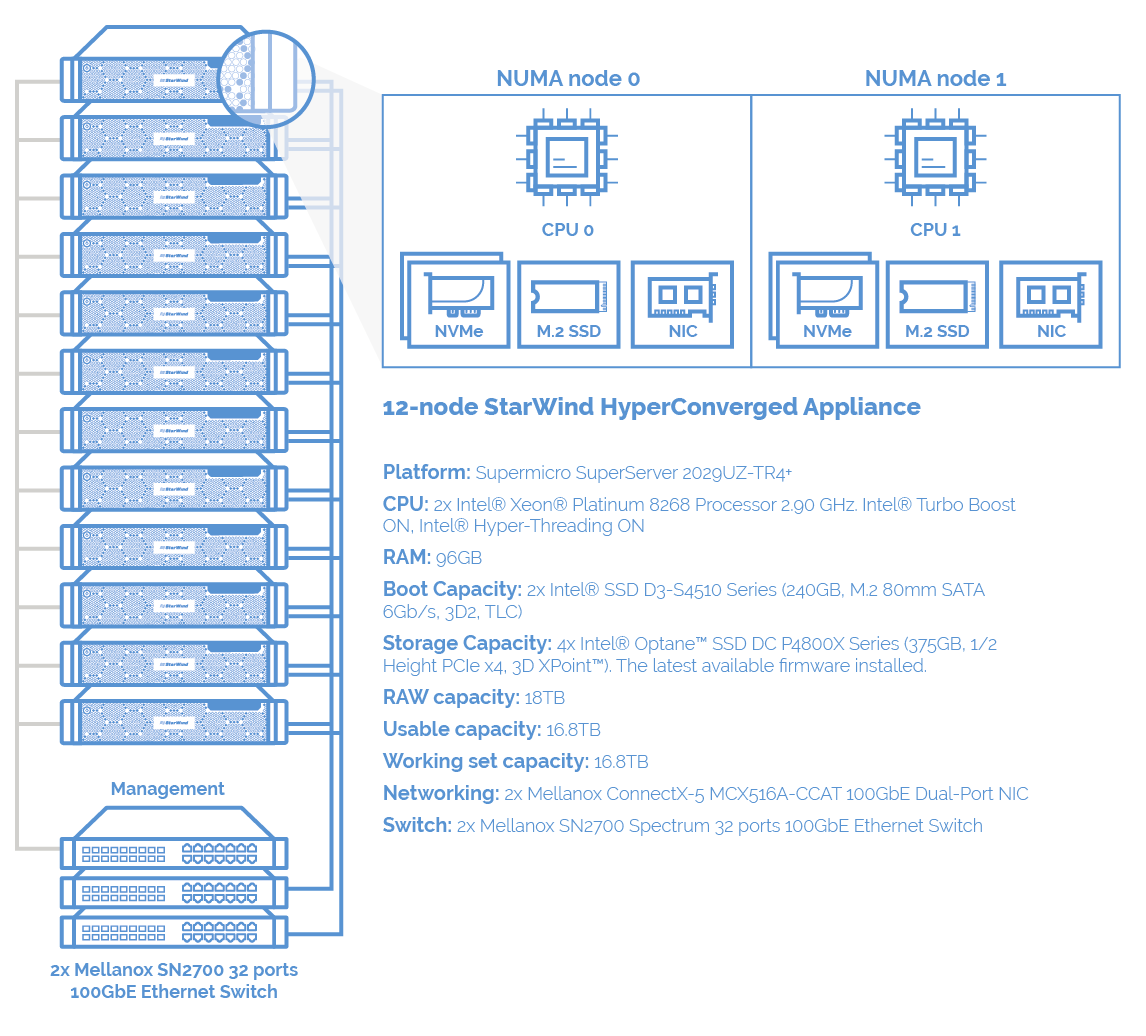

Platform: Supermicro SuperServer 2029UZ-TR4+

CPU: 2x Intel® Xeon® Platinum 8268 Processor 2.90 GHz. Intel® Turbo Boost ON, Intel® Hyper-Threading ON

RAM: 96GB

Boot Capacity: 2x Intel® SSD D3-S4510 Series (240GB, M.2 80mm SATA 6Gb/s, 3D2, TLC)

Storage Capacity: 4x Intel® Optane™ SSD DC P4800X Series (375GB, 1/2 Height PCIe x4, 3D XPoint™). The latest available firmware was installed.

RAW capacity: 18TB

Usable capacity: 16.8TB

Working set capacity: 16.8TB

Networking: 2x Mellanox ConnectX-5 MCX516A-CCAT 100GbE Dual-Port NIC

Switch: 2x Mellanox SN2700 Spectrum 32 ports 100GbE Ethernet Switch
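A quick arithmetic check of the capacity figures above: 12 nodes with four 375GB drives each gives 48 drives and 18TB of raw capacity. The sketch only reproduces the raw figure and treats GB and TB as decimal units, which is an assumption about how the numbers were quoted.

```python
# Raw-capacity and drive-count arithmetic for the 12-node cluster,
# using only the figures from the hardware list above.
NODES = 12
DRIVES_PER_NODE = 4          # Intel Optane SSD DC P4800X per node
DRIVE_CAPACITY_GB = 375      # assumed decimal gigabytes, as quoted by the vendor


def raw_capacity_tb(nodes: int, drives_per_node: int, drive_gb: int) -> float:
    """Total raw capacity in decimal terabytes."""
    return nodes * drives_per_node * drive_gb / 1000


total_drives = NODES * DRIVES_PER_NODE
raw_tb = raw_capacity_tb(NODES, DRIVES_PER_NODE, DRIVE_CAPACITY_GB)

if __name__ == "__main__":
    print(f"{total_drives} drives, {raw_tb:.1f} TB raw")  # prints 48 drives, 18.0 TB raw
```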

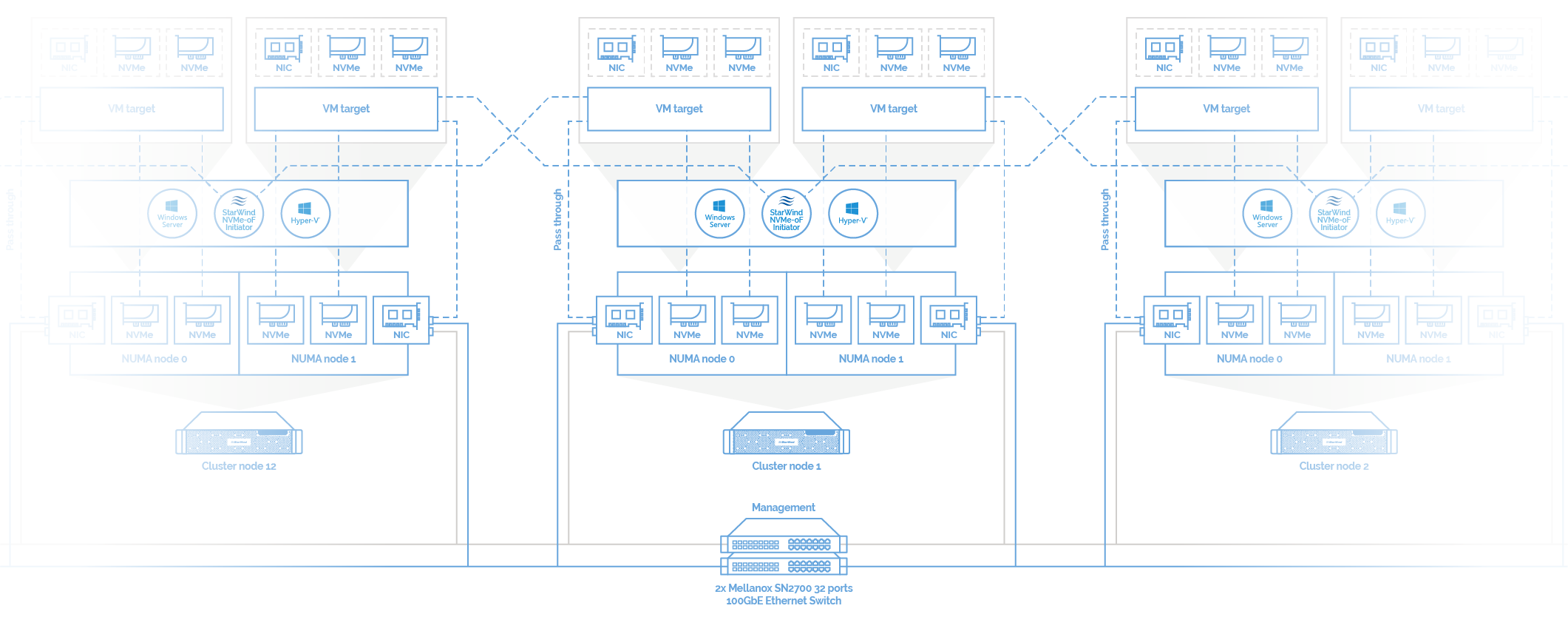

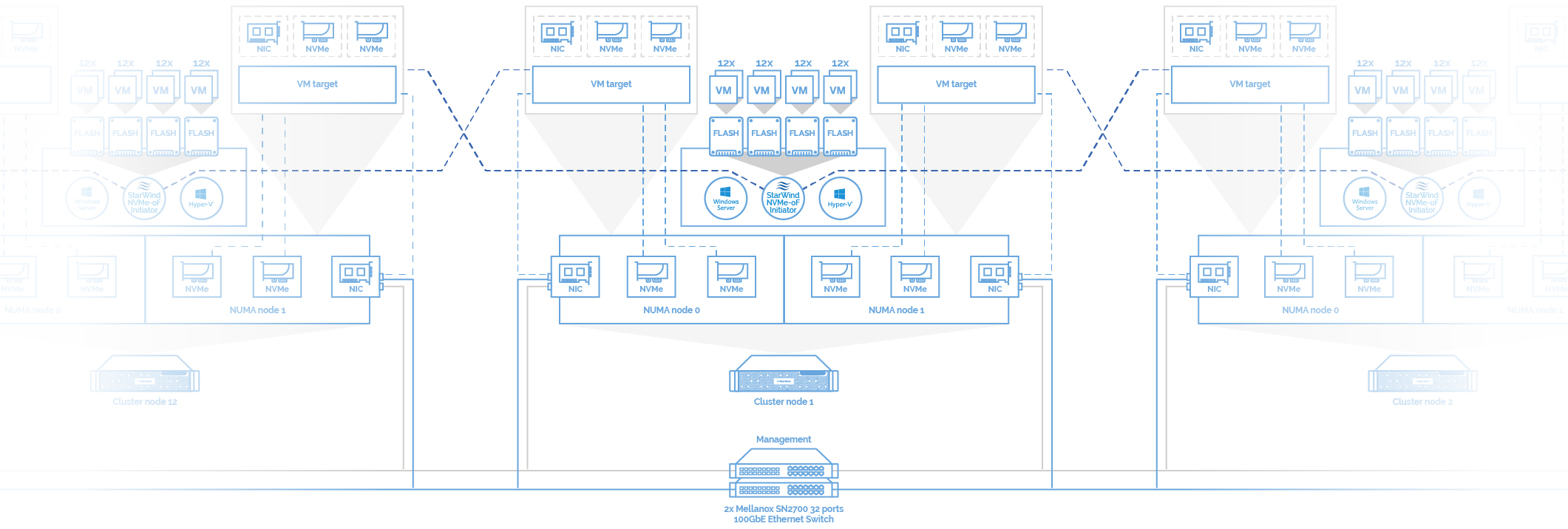

The diagram illustrates the servers’ interconnection.

Operating system. Windows Server 2019 Datacenter Evaluation version 1809, build 17763.404, was installed on all nodes with the latest updates available as of May 1, 2019. With performance in mind, we set the power plan to High Performance; all other settings, including the relevant side-channel mitigations (mitigations for Spectre v1 and Meltdown were applied), were left at their defaults.

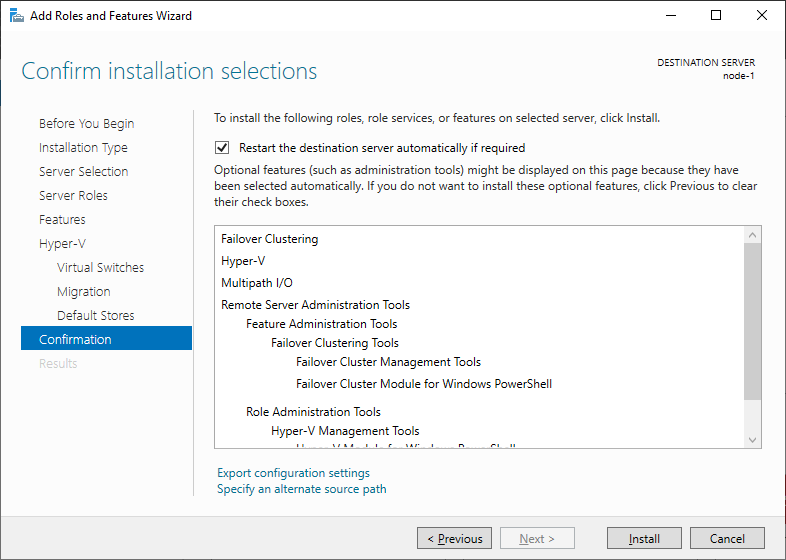

Windows Installation. Hyper-V Role, MPIO, and Failover Cluster features

To speed up deployment, we made an image of Windows Server 2019 with the Hyper-V role installed and the MPIO and Failover Cluster features enabled. The image was then deployed on all 12 Supermicro servers.

Driver installation. Firmware update

Once Windows was installed, drivers for each hardware component were installed through Windows Update, and firmware updates were applied to the Intel NVMe SSDs.

Cluster storage. The storage has no block-level replication, so a highly available cluster configuration must replicate data at the application level. The working set capacity for this setup was 16.8TB, which is all of the usable capacity out of 18TB of RAW capacity.

SPDK target VM. None of the existing Windows NVMe drivers support polling mode, so we configured Linux VMs running the SPDK NVMe-oF target. In total, we deployed 24 Linux target VMs across the cluster: two per server, one per NUMA node. Each target VM received two NVMe SSDs and one NIC port via passthrough.
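The target VM layout described above can be modeled in a few lines. This is a minimal sketch: `TargetVM`, `build_layout`, and device labels such as `srv3-numa1-ssd0` are hypothetical placeholders, not real PCI addresses or the names used in our cluster.

```python
from dataclasses import dataclass


@dataclass
class TargetVM:
    server: int        # cluster node number, 1-based
    numa_node: int     # one target VM per NUMA node
    ssds: list[str]    # passthrough NVMe SSDs (hypothetical labels)
    nic_port: str      # passthrough NIC port on the same NUMA node (hypothetical label)


def build_layout(servers: int = 12, numa_nodes: int = 2, ssds_per_vm: int = 2) -> list[TargetVM]:
    """Two target VMs per server, each owning two SSDs and one NIC port."""
    layout = []
    for s in range(1, servers + 1):
        for n in range(numa_nodes):
            ssds = [f"srv{s}-numa{n}-ssd{i}" for i in range(ssds_per_vm)]
            layout.append(TargetVM(s, n, ssds, f"srv{s}-numa{n}-port0"))
    return layout


if __name__ == "__main__":
    layout = build_layout()
    print(len(layout), "target VMs,", sum(len(vm.ssds) for vm in layout), "SSDs")
```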

Each target VM had 4 vCPUs, 6GB of RAM, and 10GB of storage. CentOS 7.6 was installed on each target VM along with SPDK 19.01.1 and DPDK 18.11.0.

Here is the nvmf.conf file listing:

```ini
[Nvmf]
# Set how often the acceptor polls for incoming connections. The acceptor is also responsible for polling existing connections that have gone idle. 0 means continuously poll. Units in microseconds.
AcceptorPollRate 10000

# One valid transport type must be set in each [Transport]. The first is the case of RDMA transport and the second is the case of TCP transport.
[Transport]
# Set RDMA transport type.
Type RDMA
# Set the maximum number of outstanding I/O per queue.
MaxQueueDepth 512
# Set the maximum number of submission and completion queues per session. Setting this to '8', for example, allows for 8 submission and 8 completion queues per session.
MaxQueuesPerSession 8

[Nvme]
# NVMe Device Whitelist. Users may specify which NVMe devices to claim by their transport id. See spdk_nvme_transport_id_parse() in spdk/nvme.h for the correct format. The second argument is the assigned name, which can be referenced from other sections in the configuration file. For NVMe devices, a namespace is automatically appended to each name in the format <name>nY, where Y is the namespace ID starting from 1 (for example, Nvme1n1).
TransportID "trtype:PCIe traddr:b0f4:00:00.0" Nvme1
# The number of attempts per I/O when an I/O fails. Do not include this key to get the default behavior.
RetryCount 4
# Timeout for each command, in microseconds. If 0, don't track timeouts.
TimeoutUsec 0
# Action to take on command time out. Only valid when Timeout is greater than 0. This may be 'Reset' to reset the controller, 'Abort' to abort the command, or 'None' to just print a message but do nothing. Admin command timeouts will always result in a reset.
ActionOnTimeout None
# Set how often the admin queue is polled for asynchronous events. Units in microseconds.
AdminPollRate 100000
# Set how often I/O queues are polled from completions. Units in microseconds.
IOPollRate 0
# Disable handling of hotplug (runtime insert and remove) events, users can set to Yes if want to enable it. Default: No
HotplugEnable No

# Namespaces backed by physical NVMe devices
[Subsystem1]
NQN nqn.2016-06.io.spdk:cnode1
Listen RDMA 100.1.2.10:4420
AllowAnyHost Yes
Host nqn.2016-06.io.spdk:init
MN SPDK_Controller
SN SPDK1111
Namespace Nvme0n1 1

[Subsystem2]
NQN nqn.2016-06.io.spdk:cnode2
Listen RDMA 100.1.2.10:4420
AllowAnyHost Yes
Host nqn.2016-06.io.spdk:init
MN SPDK_Controller
SN SPDK2222
Namespace Nvme1n1 1
```

Each target is started with the following command: `./nvmf_tgt -m [0,2] -c ./nvmf.conf`
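To sanity-check a legacy config like the one above (for example, to list which namespaces each subsystem exports and where it listens), a small parser is enough. This is a minimal sketch, not SPDK's own parser; `parse_spdk_conf` and `subsystems` are hypothetical names, and the code assumes the simple `Key value` line format shown in the listing.

```python
import re


def parse_spdk_conf(text: str) -> list[tuple[str, list[tuple[str, str]]]]:
    """Parse a legacy INI-style SPDK config into (section, [(key, value), ...]).

    Keys may repeat within a section (e.g. several Namespace lines), so
    values are kept as an ordered list instead of a dict.
    """
    sections: list[tuple[str, list[tuple[str, str]]]] = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        header = re.fullmatch(r"\[(.+)\]", line)
        if header:
            sections.append((header.group(1), []))
            continue
        if not sections:
            raise ValueError(f"key outside any section: {line!r}")
        key, _, value = line.partition(" ")
        sections[-1][1].append((key, value.strip()))
    return sections


def subsystems(sections) -> list[dict]:
    """Collect NQN, listen addresses, and namespace bdevs for each [SubsystemN]."""
    result = []
    for name, pairs in sections:
        if not name.startswith("Subsystem"):
            continue
        entry = {"nqn": None, "listen": [], "namespaces": []}
        for key, value in pairs:
            if key == "NQN":
                entry["nqn"] = value
            elif key == "Listen":
                entry["listen"].append(value)
            elif key == "Namespace":
                entry["namespaces"].append(value.split()[0])  # bdev name, drop NSID
        result.append(entry)
    return result


SAMPLE = """
[Subsystem1]
NQN nqn.2016-06.io.spdk:cnode1
Listen RDMA 100.1.2.10:4420
Namespace Nvme0n1 1
"""

if __name__ == "__main__":
    for sub in subsystems(parse_spdk_conf(SAMPLE)):
        print(sub["nqn"], sub["listen"], sub["namespaces"])
```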

Hyper-V VMs. Based on empirical testing, we ran 48 virtual machines with 1 virtual processor each, that is, 48 virtual processors per cluster node, to saturate performance. That is 576 Hyper-V Gen 2 VMs in total across the 12 server nodes. Each VM runs Windows Server 2019 Standard and is assigned 1GiB of memory and 25GB of storage.
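The VM counts above can be checked against each node's resources. This sketch treats all memory sizes as GiB and assumes 48 hardware threads per Xeon Platinum 8268 socket (24 cores with Hyper-Threading, per Intel's specification); it is a rough budget, not a sizing tool.

```python
# Per-node resource budget built from the figures in this article.
# Assumptions: memory sizes are all treated as GiB, and each Xeon Platinum
# 8268 socket exposes 48 logical processors with Hyper-Threading on.
NODES = 12
SOCKETS = 2
THREADS_PER_SOCKET = 48        # assumption from Intel's published spec
HOST_RAM_GIB = 96

TEST_VMS = 48                  # Hyper-V Gen 2 load-generating VMs per node
TEST_VM_VCPUS = 1
TEST_VM_RAM_GIB = 1

TARGET_VMS = 2                 # SPDK target VMs per node (one per NUMA node)
TARGET_VM_VCPUS = 4
TARGET_VM_RAM_GIB = 6


def node_budget() -> dict:
    """vCPUs and VM memory demanded on one node versus what the host offers."""
    vcpus = TEST_VMS * TEST_VM_VCPUS + TARGET_VMS * TARGET_VM_VCPUS
    ram = TEST_VMS * TEST_VM_RAM_GIB + TARGET_VMS * TARGET_VM_RAM_GIB
    return {
        "vcpus": vcpus,
        "logical_processors": SOCKETS * THREADS_PER_SOCKET,
        "vm_ram_gib": ram,
        "host_ram_gib": HOST_RAM_GIB,
    }


total_test_vms = NODES * TEST_VMS

if __name__ == "__main__":
    print(total_test_vms, "test VMs;", node_budget())
```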

NOTE: According to the NUMA spanning recommendations, NUMA spanning was disabled to ensure that virtual machines always ran with optimal performance.

RDMA. Mellanox ConnectX-5 NICs incorporate Resilient RoCE, which delivers best-of-breed performance with nothing more than Explicit Congestion Notification (ECN) enabled on the network switches. A lossless fabric, usually achieved by enabling Priority Flow Control (PFC), is no longer mandatory. Resilient RoCE congestion management, implemented in the ConnectX NIC hardware, delivers reliability even with UDP over a lossy network. Mellanox Spectrum Ethernet switches provide 100GbE line-rate performance and consistently low latency with zero packet loss.

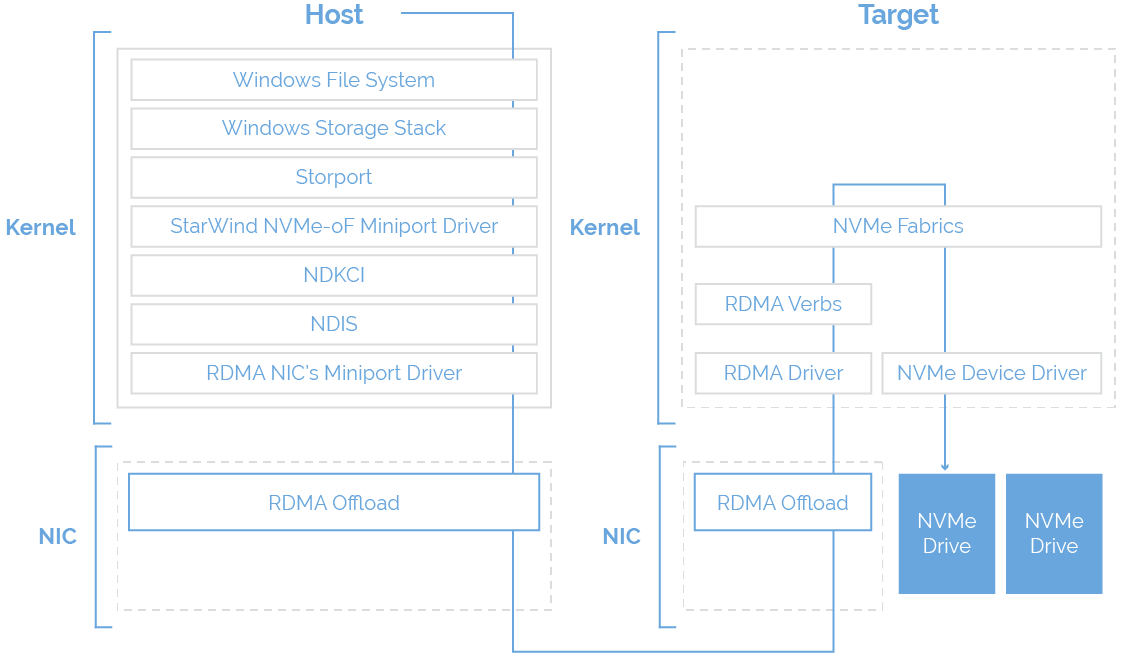

StarWind NVMe-oF Initiator. NVMe over Fabrics (NVMe-oF) is a network protocol, similar to iSCSI, used for communication between a host and a storage system over a network (the "fabric"). In this setup, it transfers data using RDMA over Converged Ethernet (RoCE). This emerging technology gives data centers unprecedented access to NVMe SSD storage. We developed our own NVMe-oF Initiator for Windows based on the NVMe Storport miniport driver model.

The StarWind NVMe-oF Initiator provides the storage interconnect, and SPDK NVMe-oF serves as the target. We wrote the initiator entirely from scratch because the Windows kernel has no SPDK equivalent: there are no polling drivers for NICs or NVMe devices. Even so, we are getting very close to the "dramatically low-latency results" seen on Linux. We believe that, to catch up with Linux, Microsoft will either rework the kernel or allow other vendors to develop a set of applications that match Linux storage performance.

NUMA node. Taking the NUMA configuration of each cluster node into account, each NVMe SSD is paired with a network adapter located on the same NUMA node. For example, on cluster node 3, an Intel Optane SSD on NUMA node 1 is paired with the Mellanox NIC on NUMA node 1. Each cluster node is connected to the target VMs using a grid model, as illustrated on the diagram below.
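The NUMA-local pairing rule can be expressed as a small function. This sketch assumes each device's NUMA node is already known and simply passed in as a dictionary; the device labels and the `pair_by_numa` name are hypothetical.

```python
from collections import defaultdict


def pair_by_numa(ssds: dict[str, int], nic_ports: dict[str, int]) -> list[tuple[str, str]]:
    """Pair every SSD with a NIC port on the same NUMA node.

    Both arguments map a device label to its NUMA node. When a node has
    more SSDs than ports, its ports are reused round-robin.
    """
    ports_by_node: dict[int, list[str]] = defaultdict(list)
    for port, node in sorted(nic_ports.items()):
        ports_by_node[node].append(port)

    used: dict[int, int] = defaultdict(int)
    pairs = []
    for ssd, node in sorted(ssds.items()):
        ports = ports_by_node.get(node)  # .get() does not create an empty entry
        if not ports:
            raise ValueError(f"no NIC port on NUMA node {node} for {ssd}")
        pairs.append((ssd, ports[used[node] % len(ports)]))
        used[node] += 1
    return pairs


if __name__ == "__main__":
    # Hypothetical inventory for one cluster node: two Optane SSDs and
    # one NIC port per NUMA node.
    ssds = {"optane0": 0, "optane1": 0, "optane2": 1, "optane3": 1}
    ports = {"cx5-port0": 0, "cx5-port1": 1}
    for ssd, port in pair_by_numa(ssds, ports):
        print(ssd, "->", port)
```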

CPU Groups. CPU Groups allow Hyper-V administrators to manage and distribute host CPU resources across guest virtual machines. They make it easy to isolate VMs that belong to different CPU groups (here, NUMA nodes) from each other, so that NICs and NVMe SSDs can be assigned to specific VMs.

Hyper-V scheduler. In Windows Server 2019, the default Hyper-V scheduler is the core scheduler. The core scheduler provides a strong security boundary for guest workload isolation but delivers lower performance. We switched to the classic scheduler, which had been the default for all versions of the Windows Hyper-V hypervisor since its inception, including Windows Server 2016 Hyper-V.

Hyper-V Virtual Machine Bus (VMBus) Multi-Channel. Hyper-V VMBus is one of the mechanisms used by Hyper-V to offer paravirtualization. In short, it is a virtual bus device that sets up channels between the guest and the host. These channels provide the capability to share data between partitions and setup synthetic devices.

In our scheme, each VM has 1 vCPU to optimize the IO workload and eliminate waiting time, and we set a 1:1 mapping between VMBus channels and vCPUs.

In virtualization and hyperconverged infrastructures, it is common to judge solution performance by the number of storage input/output (I/O) operations per second, or "IOPS": essentially, the number of reads or writes that virtual machines can perform. A single VM can generate a huge number of random or sequential reads/writes. Real production environments usually run many VMs, which makes the IO fully randomized. Hyper-V virtual machines use 4 kB block-aligned IO, so that was our IO pattern of choice.
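Because the IO size is fixed, IOPS converts directly into bandwidth. The sketch below assumes 4 kB means 4,096 bytes and reports decimal GB/s; at the RAW-device 4 kB random read result of 22,239,158 IOPS this comes to roughly 91 GB/s.

```python
def iops_to_gbps(iops: float, block_bytes: int = 4096) -> float:
    """Throughput in decimal GB/s for a fixed IO size (assumes 4 kB = 4,096 bytes)."""
    return iops * block_bytes / 1e9


if __name__ == "__main__":
    # RAW-device 4 kB random read result from the results table in this article.
    print(f"{iops_to_gbps(22_239_158):.2f} GB/s")  # prints 91.09 GB/s
```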

Hardware and software vendors often use this kind of pattern to measure the best performance in the worst circumstances.

In this article, we not only ran the same benchmarks Microsoft used to measure Storage Spaces Direct performance but also carried out additional tests for other I/O patterns commonly used in production virtualization environments.

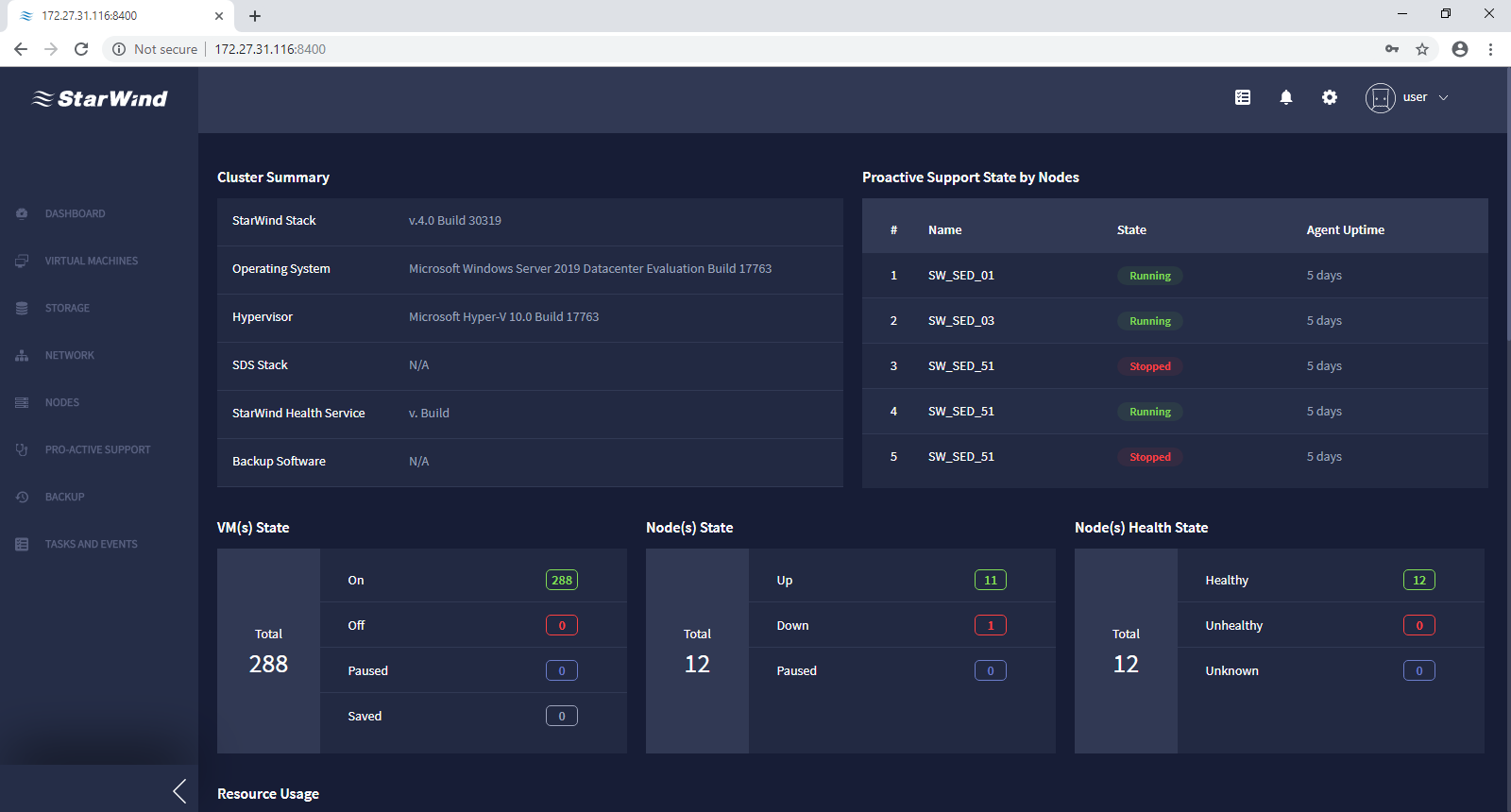

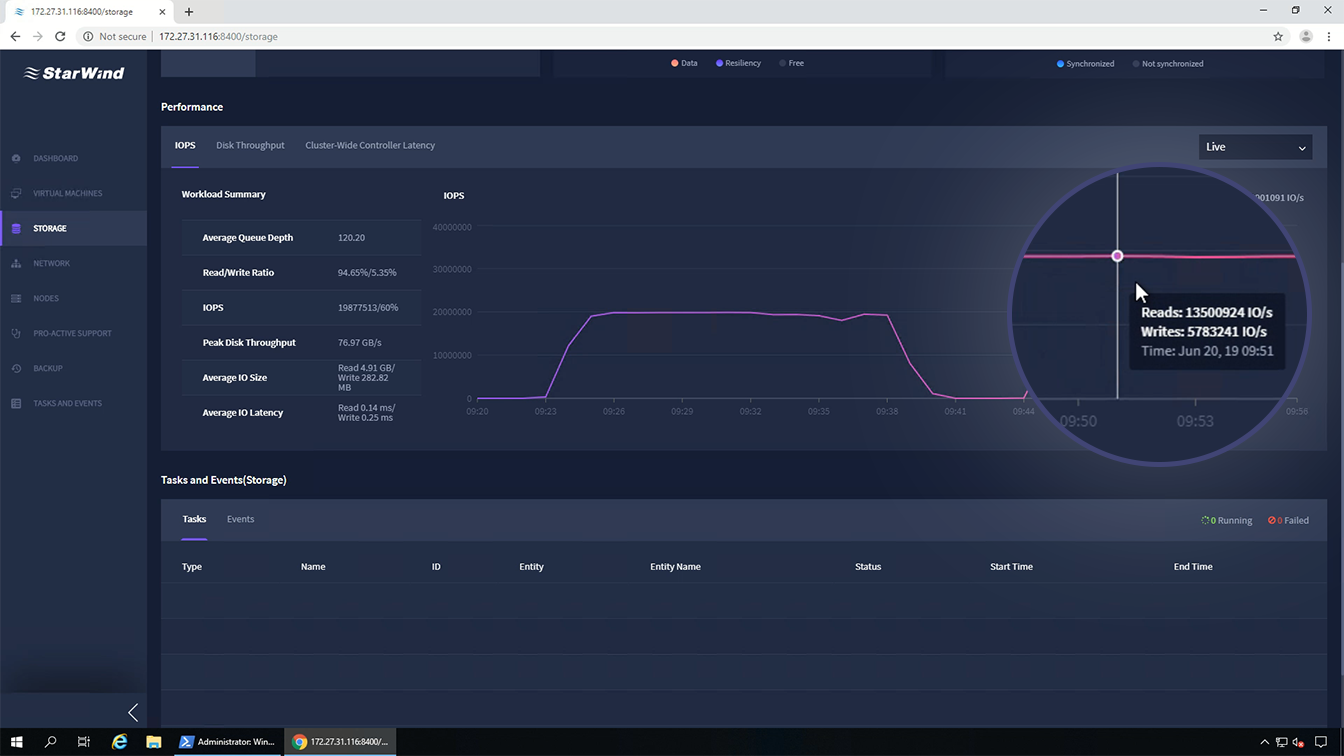

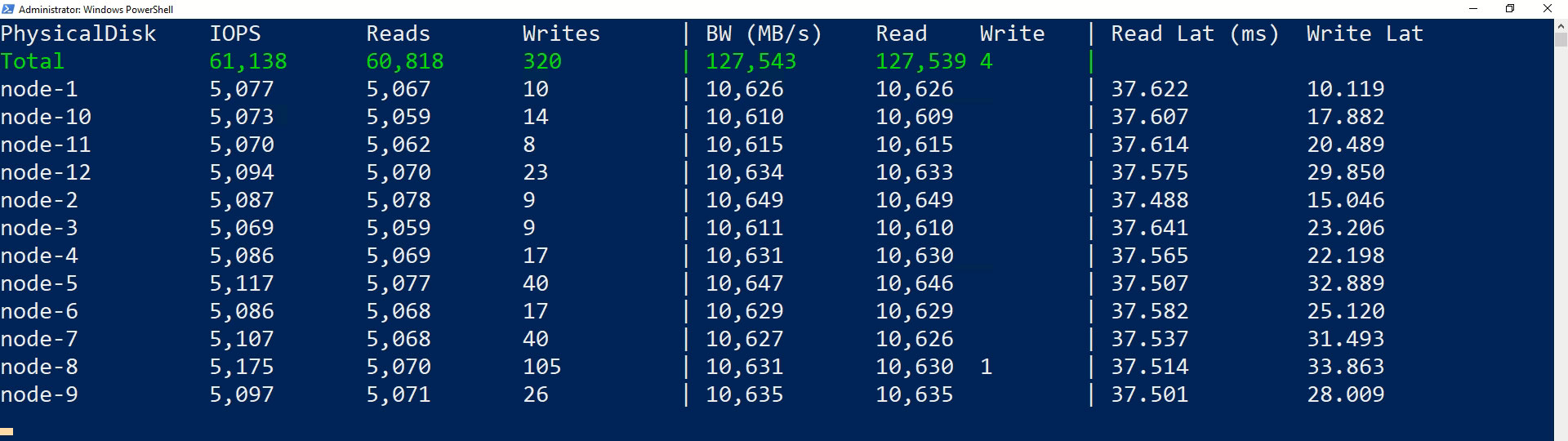

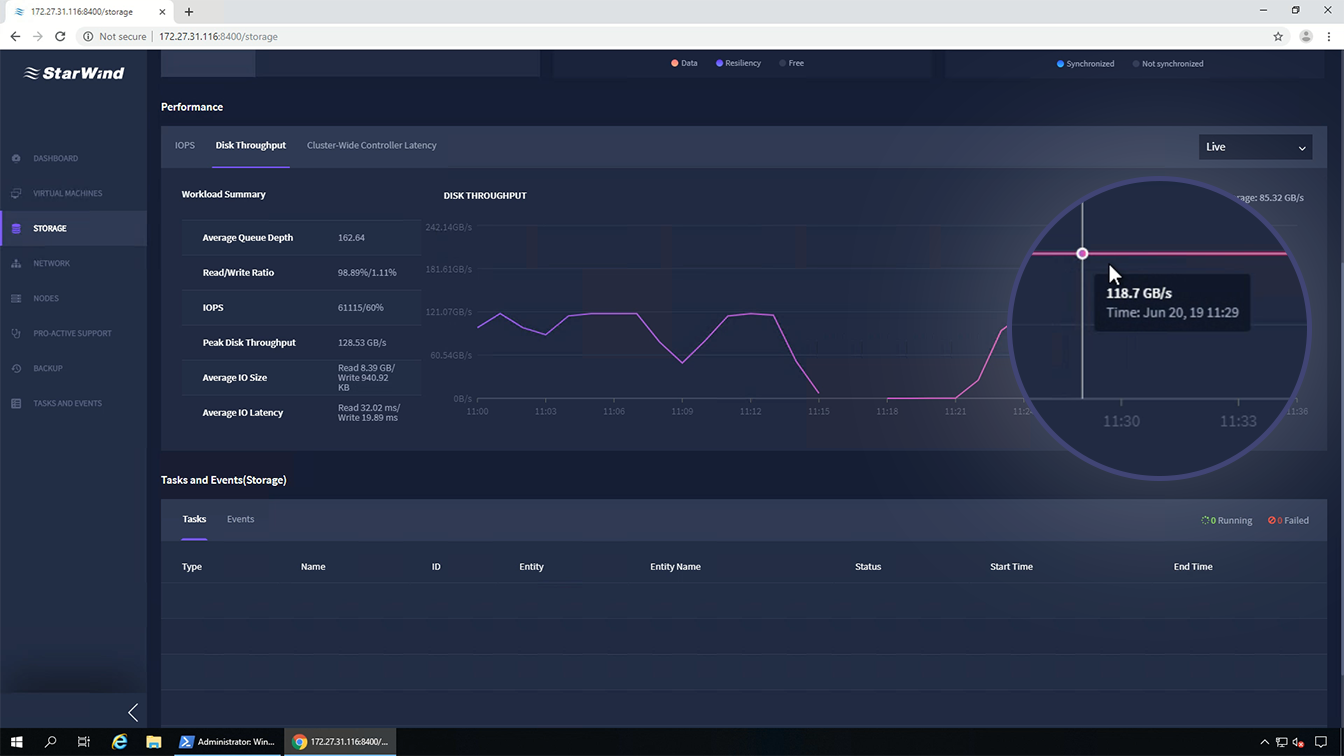

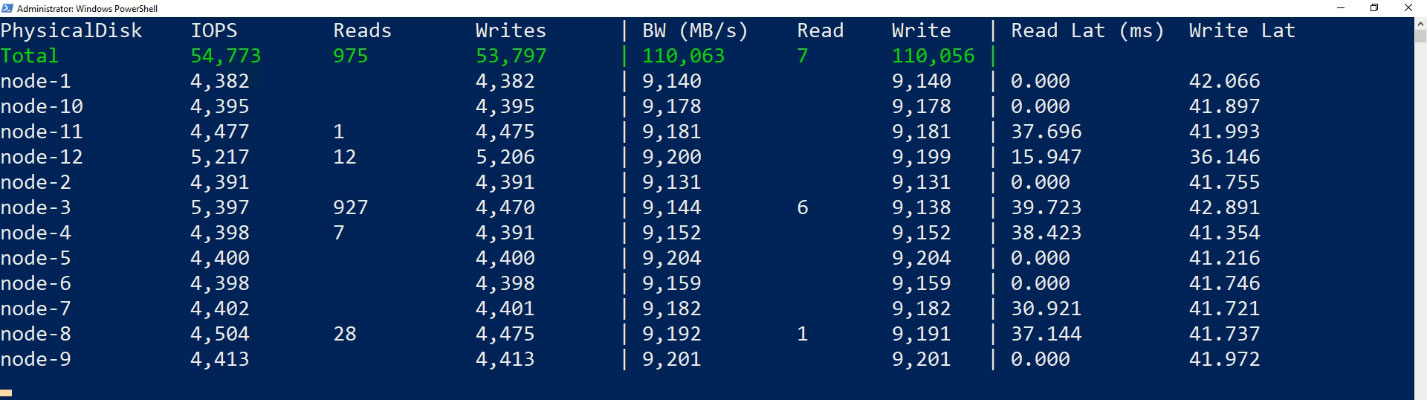

For this benchmark stage, we split testing into two phases: RAW device performance and VM-based performance. The RAW device configuration was tested with VM Fleet and FIO, and VM-based IO benchmarks were run with VM Fleet and DISKSPD. In addition to VM Fleet, StarWind Command Center was used to display performance results.

FIO is a tool that spawns a number of threads or processes doing a particular type of I/O action as specified by the user. The typical use of FIO is to write a job file matching the I/O load one wants to simulate.
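A FIO job file for a run like the 4 kB random read test might look as follows. The article does not list its FIO parameters, so the values here (the `windowsaio` engine, queue depth 16, one job, a 900-second time-based run, and the `\\.\PhysicalDrive1` target) are assumptions chosen to mirror the DISKSPD settings described below; `fio_job` is a hypothetical helper.

```python
from typing import Optional


def fio_job(name: str, filename: str, rw: str = "randread", bs: str = "4k",
            iodepth: int = 16, numjobs: int = 1, runtime_s: int = 900,
            rwmixread: Optional[int] = None) -> str:
    """Return the text of a FIO job file (all defaults are assumptions)."""
    lines = [
        "[global]",
        "ioengine=windowsaio",  # asynchronous I/O engine for Windows
        "direct=1",             # unbuffered I/O, bypassing the OS cache
        "time_based",           # keep running until runtime expires
        f"runtime={runtime_s}",
        "group_reporting",
        "",
        f"[{name}]",
        f"filename={filename}",
        f"rw={rw}",
        f"bs={bs}",
        f"iodepth={iodepth}",
        f"numjobs={numjobs}",
    ]
    if rwmixread is not None:
        lines.append(f"rwmixread={rwmixread}")  # read share for randrw jobs
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical raw-disk target; replace with the NVMe-oF disk on your host.
    print(fio_job("randread-4k", r"\\.\PhysicalDrive1"))
```

Saving the output to a file and running `fio <file>` starts the job; a 70/30 mix would use `rw="randrw"` with `rwmixread=70`.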

To benchmark raw device performance, we connected each cluster node to the target VMs using the StarWind NVMe-oF Initiator. The client was a bare-metal host, and the server was a target VM running SPDK.

VM Fleet. We used the open-source VM Fleet tool available on GitHub. VM Fleet makes it easy to orchestrate DISKSPD or FIO (Flexible I/O tester), popular micro-benchmark tools, across hundreds or thousands of Hyper-V virtual machines at once.

VM Fleet configuration for VM-based benchmarks. To saturate performance, we used 1 thread per file (`-t1`). Following Intel Optane recommendations, we kept this thread count across the storage IO tests. Performance reached its saturation point at 16 outstanding IOs per thread (`-o16`). To disable hardware and software caching, we used unbuffered IO (`-Sh`). We specified `-r` for random workloads and `-b4K` for a 4 kB block size, and varied the read/write ratio with the `-w` parameter.

Here is how DISKSPD was started: `.\diskspd.exe -b4K -t1 -o16 -w0 -Sh -L -r -d900 [...]`
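The run matrix from the results table can be turned into DISKSPD command lines with a small helper. This is a sketch: `diskspd_args` and `RUNS` are hypothetical names, target files are omitted just as in the elided command above, and applying the same `-t1 -o16` settings to the 2 MB sequential runs is an assumption, since the article only documents the 4 kB random parameters.

```python
def diskspd_args(block: str = "4K", threads: int = 1, outstanding: int = 16,
                 write_pct: int = 0, random_io: bool = True,
                 duration_s: int = 900) -> list[str]:
    """Build a DISKSPD flag list (target files intentionally left out)."""
    args = [
        f"-b{block}",          # block size
        f"-t{threads}",        # threads per target file
        f"-o{outstanding}",    # outstanding IOs per thread
        f"-w{write_pct}",      # percentage of writes
        "-Sh",                 # disable software caching and hardware write caching
        "-L",                  # capture latency statistics
    ]
    if random_io:
        args.append("-r")      # random IO; without it DISKSPD issues sequential IO
    args.append(f"-d{duration_s}")  # test duration in seconds
    return args


# (block size, write %, random?) for each row of the results table
RUNS = [
    ("4K", 0, True), ("4K", 10, True), ("4K", 30, True),
    ("2M", 0, False), ("2M", 100, False),
]

if __name__ == "__main__":
    for block, write_pct, random_io in RUNS:
        flags = diskspd_args(block, write_pct=write_pct, random_io=random_io)
        print(".\\diskspd.exe " + " ".join(flags))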

NOTE: We modified the VM Fleet scripts to benchmark storage accurately. By default, VM Fleet obtains I/O performance data from CSVFS. Since the storage was presented over NVMe-oF, we configured VM Fleet to collect performance data from local storage instead.

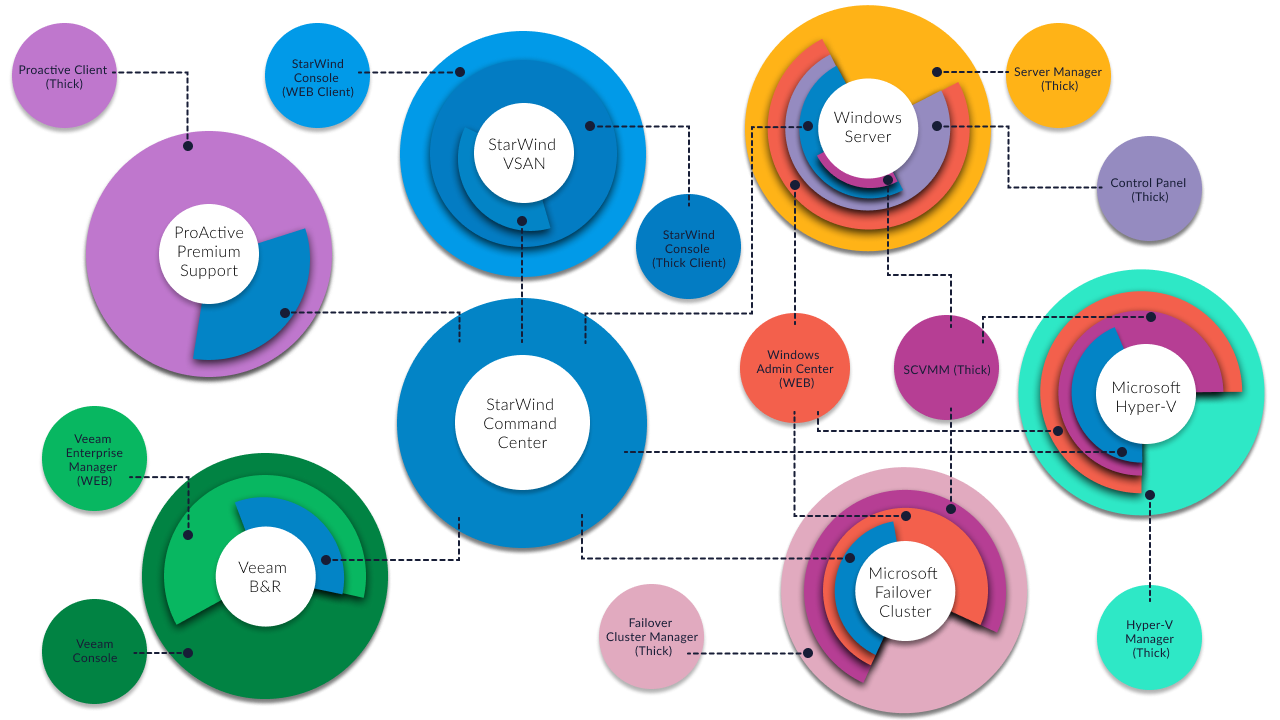

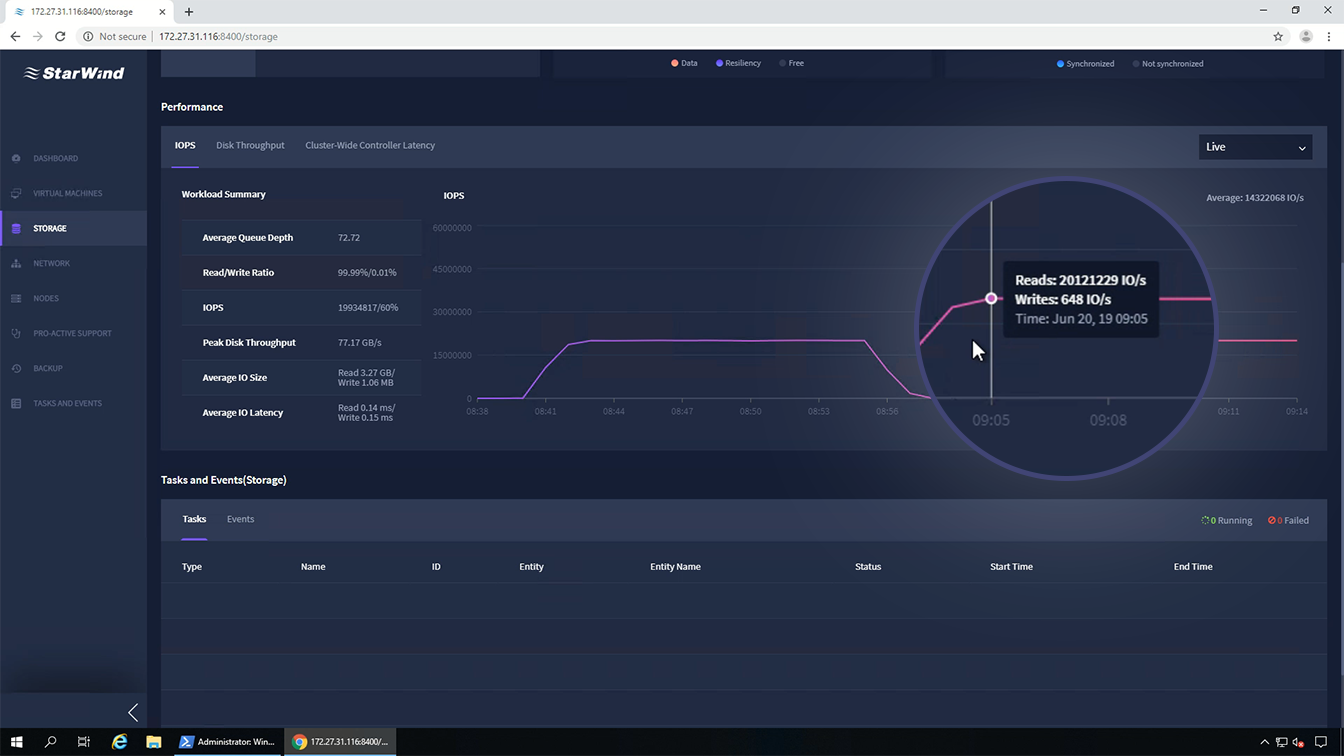

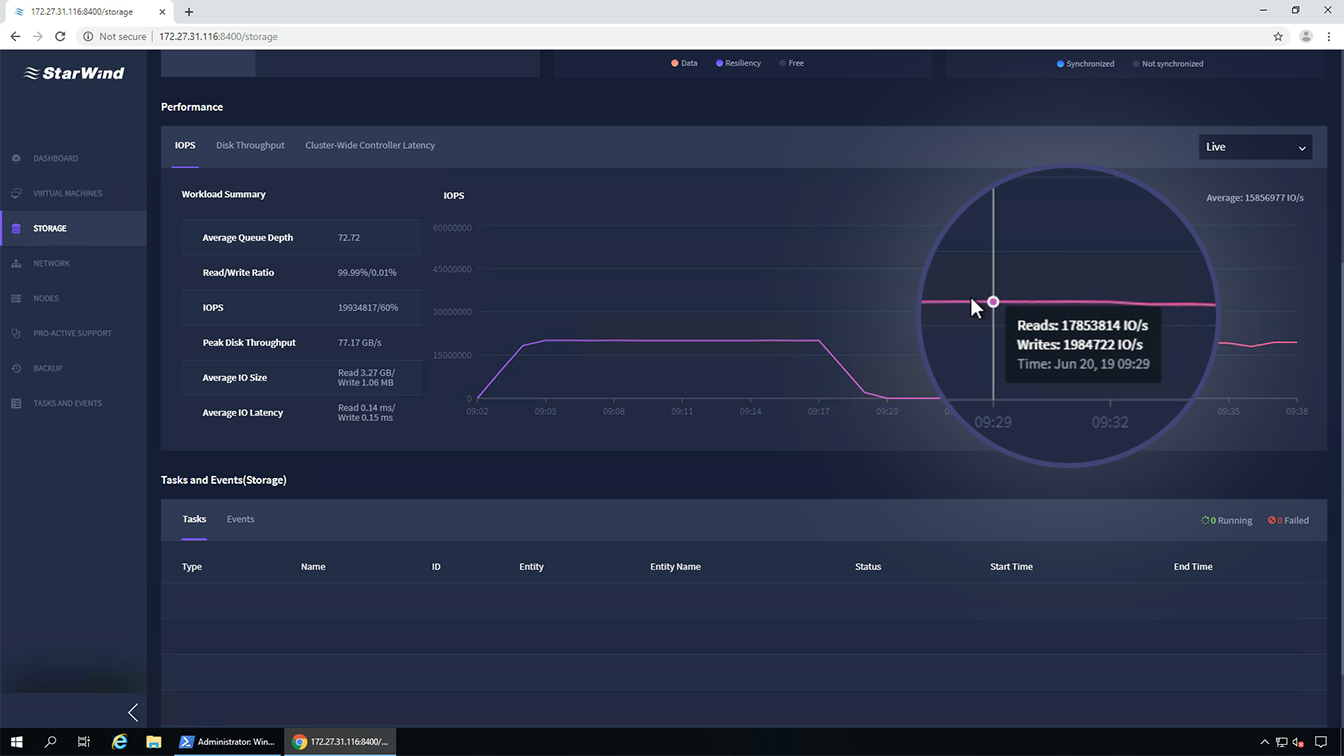

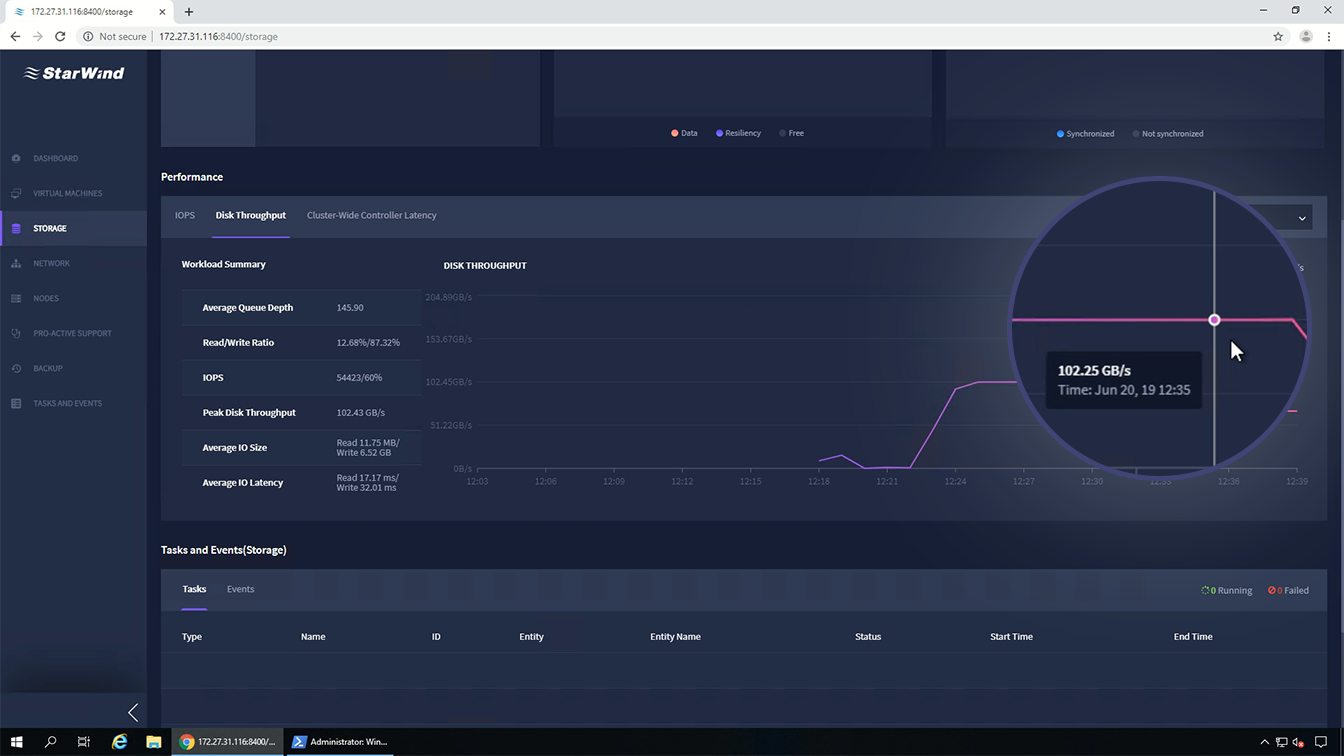

StarWind Command Center. Designed to replace the feature-limited Windows Admin Center and the bulky System Center Configuration Manager for routine tasks, StarWind Command Center consolidates sophisticated dashboards that present all the important information about the state of each environment component on a single screen.

As a single-pane-of-glass tool, StarWind Command Center lets you handle the whole range of tasks related to managing and monitoring your IT infrastructure, applications, and services. As part of the StarWind ecosystem, StarWind Command Center can manage a hypervisor (VMware vSphere, Microsoft Hyper-V, Red Hat KVM, etc.) and integrates with Veeam Backup & Replication and public cloud infrastructure. On top of that, the solution incorporates StarWind ProActive Premium Support, which monitors the cluster 24/7, predicts failures, and reacts to them before things go south.

The other side of storage performance is latency: how long an IO takes to complete. Many storage systems perform better under heavy queuing, which helps maximize parallelism and busy time at every layer of the stack. But there is a tradeoff: queuing increases latency. For example, if you can do 100 IOPS with sub-millisecond latency, you may be able to achieve 200 IOPS if you can tolerate higher latency. Latency is worth watching: sometimes the largest IOPS benchmark numbers are only possible with delays that would otherwise be unacceptable.
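Little's law (outstanding IOs = IOPS × mean latency) makes the queuing tradeoff concrete. With the VM Fleet settings used here (576 VMs, `-t1`, `-o16`), 9,216 IOs are in flight cluster-wide, so at the VM-based 4 kB random read result the implied mean latency is roughly 0.46 ms. This is an estimate that assumes every queue stays full at steady state, not a measured value; doubling the queue depth at the same IOPS would double it.

```python
def mean_latency_us(outstanding_ios: int, iops: float) -> float:
    """Little's law, L = lambda * W, solved for W and converted to microseconds."""
    return outstanding_ios / iops * 1e6


VMS = 576          # 48 VMs per node x 12 nodes
THREADS = 1        # -t1
QUEUE_DEPTH = 16   # -o16
outstanding = VMS * THREADS * QUEUE_DEPTH   # IOs in flight cluster-wide

# VM-based 4 kB random read result from the results table in this article.
estimate = mean_latency_us(outstanding, 20_187_670)

if __name__ == "__main__":
    print(f"{outstanding} IOs in flight -> ~{estimate:.0f} us mean latency (estimate)")
```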

Cluster-wide aggregate IO latency, as measured at the same layer in Windows, is plotted on the HCI Dashboard too.

Any storage system that provides fault tolerance makes distributed copies of writes, which must traverse the network and incur backend write amplification. For this reason, the largest IOPS benchmark numbers are typically associated with reads, especially if the storage system has common-sense optimizations to read from the local copy whenever possible, as StarWind Virtual SAN does.
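A simple model shows why writes cost more on the backend. Assuming reads are served from the local copy and each write is persisted on `copies` nodes, backend IO grows with the write share. This is a hypothetical model (`backend_ios` is an illustrative name); in this benchmark stage there is no block-level replication, so `copies` would be 1, and 2 is used below purely for illustration.

```python
def backend_ios(frontend_iops: float, write_fraction: float, copies: int) -> float:
    """Backend IOs per second when reads are served from the local copy and
    every write is persisted on `copies` nodes (hypothetical model)."""
    if not 0.0 <= write_fraction <= 1.0:
        raise ValueError("write_fraction must be between 0 and 1")
    if copies < 1:
        raise ValueError("copies must be at least 1")
    reads = frontend_iops * (1.0 - write_fraction)
    writes = frontend_iops * write_fraction * copies
    return reads + writes


if __name__ == "__main__":
    # Illustrative two-way replication at the read/write mixes used in this article.
    for wf in (0.0, 0.1, 0.3):
        amp = backend_ios(1_000_000, wf, copies=2) / 1_000_000
        print(f"{wf:.0%} writes -> {amp:.2f}x backend IO")
```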

NOTE: For completeness, we show both VM Fleet results and StarWind Command Center results.

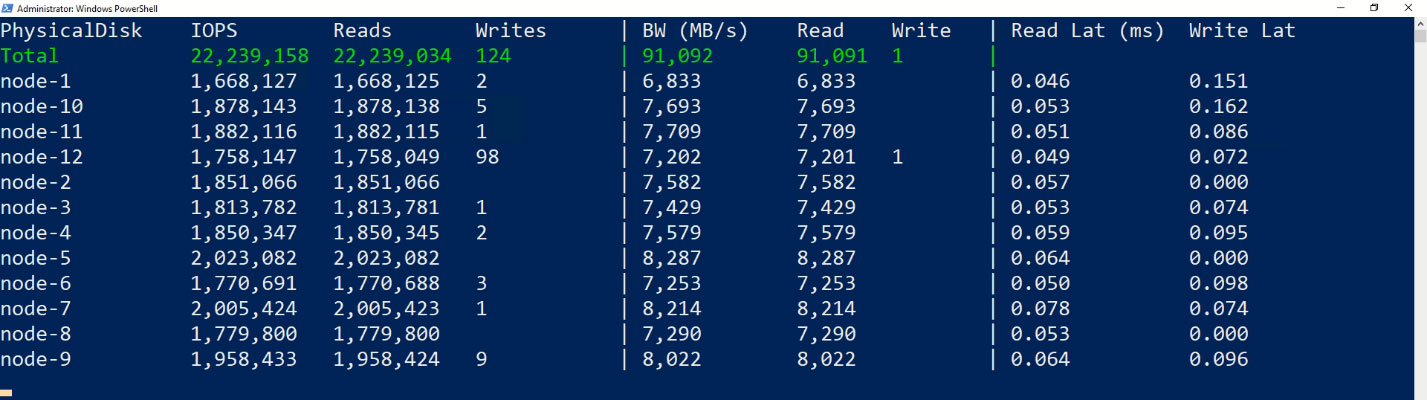

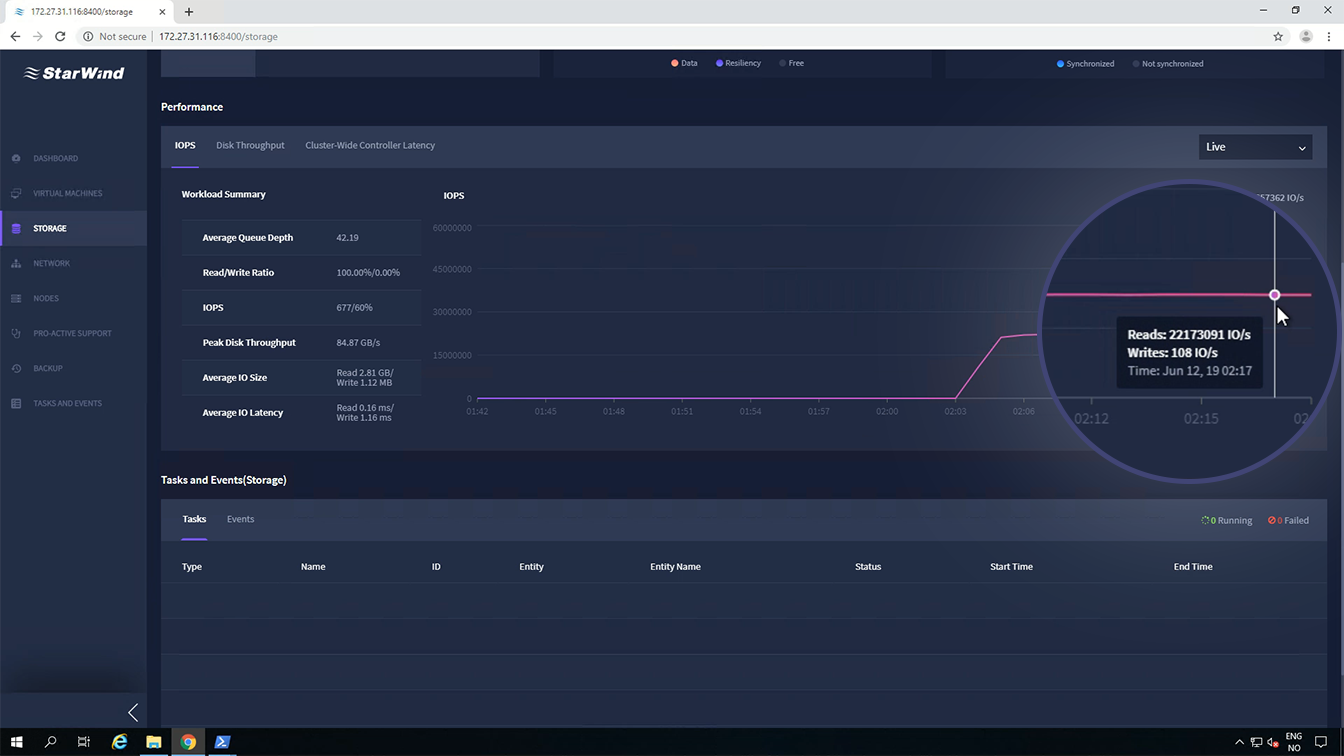

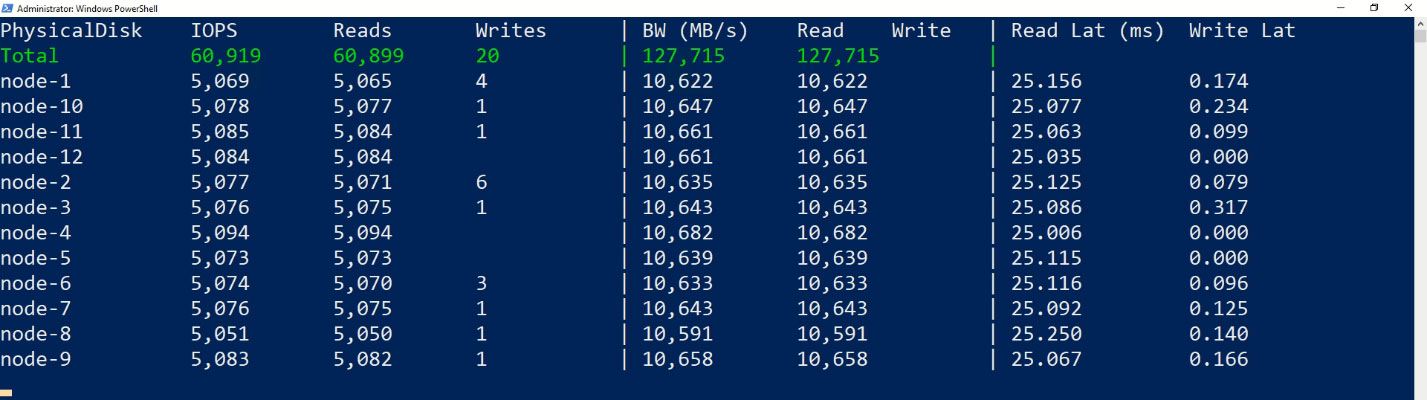

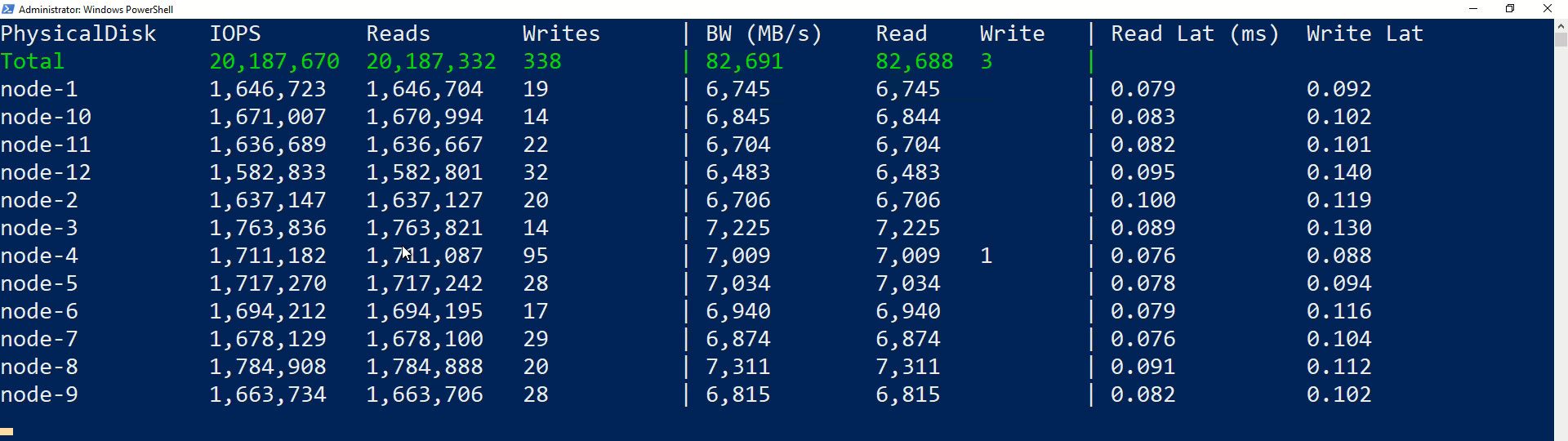

Action 1: RAW device 4K random read

Here are all the results, with the same 12-server HCI cluster:

| Run | Parameters | Result |

|---|---|---|

| RAW device, Maximize IOPS, all-read | 4 kB random, 100% read | 22,239,158 IOPS1 |

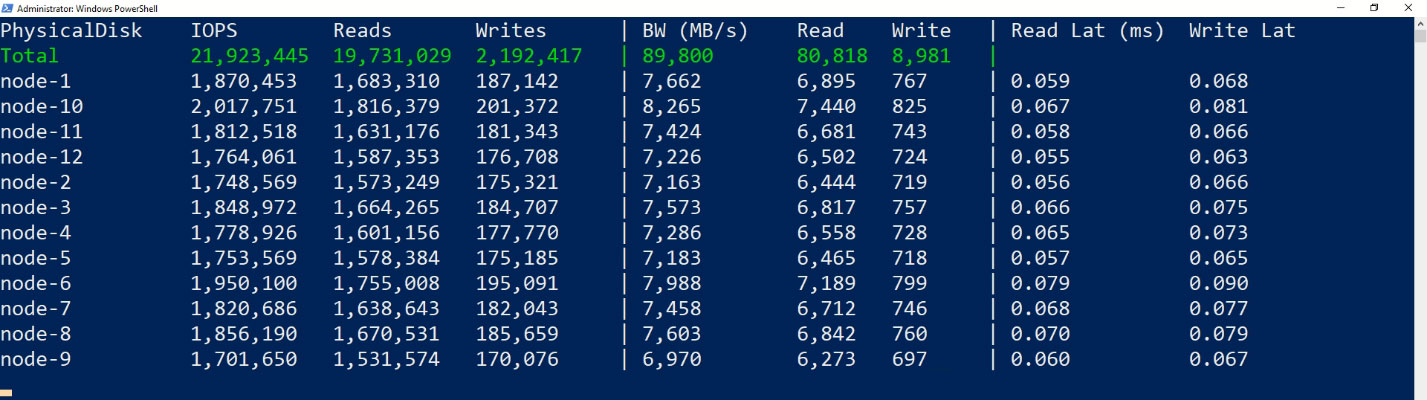

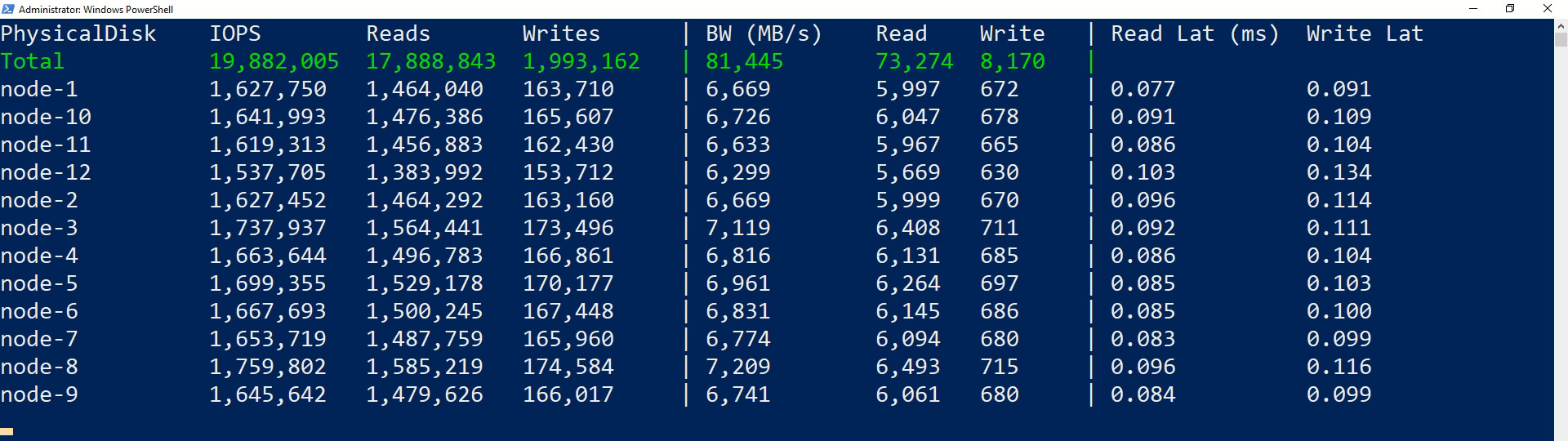

| RAW device, Maximize IOPS, read/write | 4 kB random, 90% read, 10% write | 21,923,445 IOPS |

| RAW device, Maximize IOPS, read/write | 4 kB random, 70% read, 30% write | 21,906,429 IOPS |

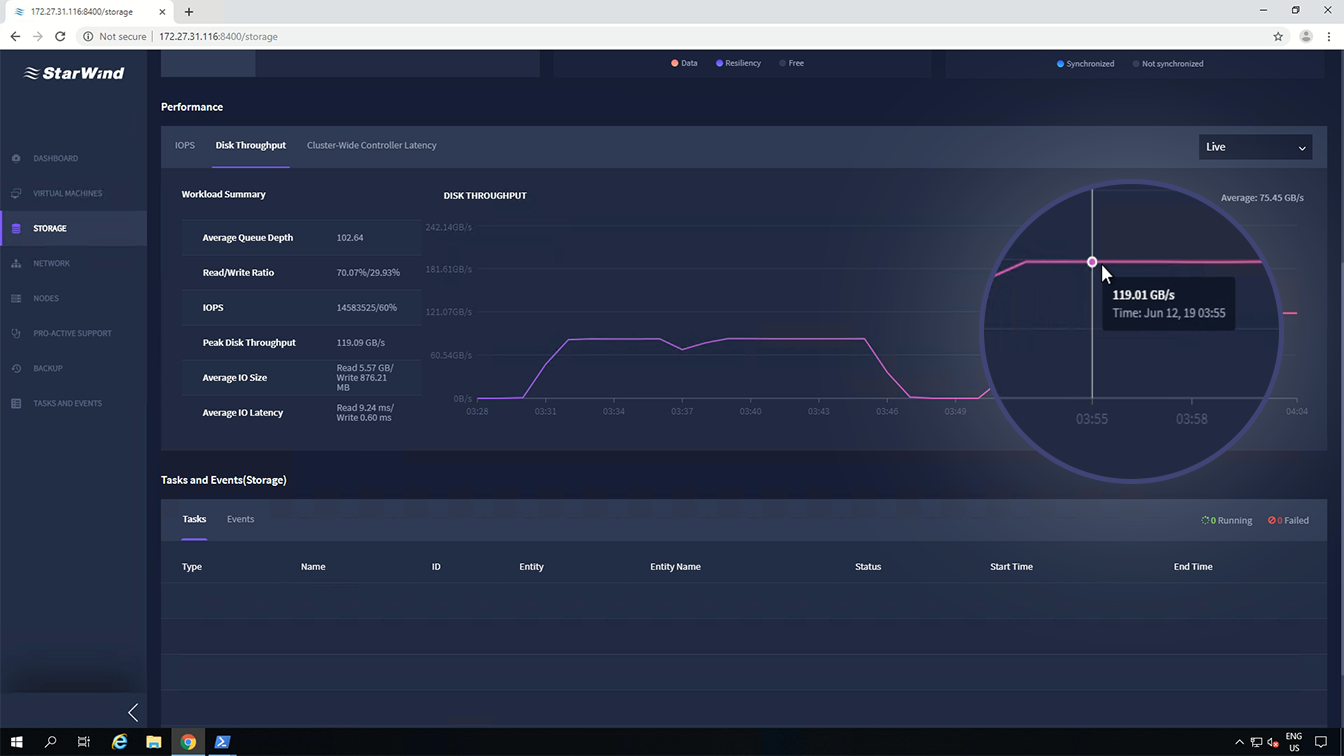

| RAW device, Maximize throughput | 2 MB sequential, 100% read | 119.01GBps22 |

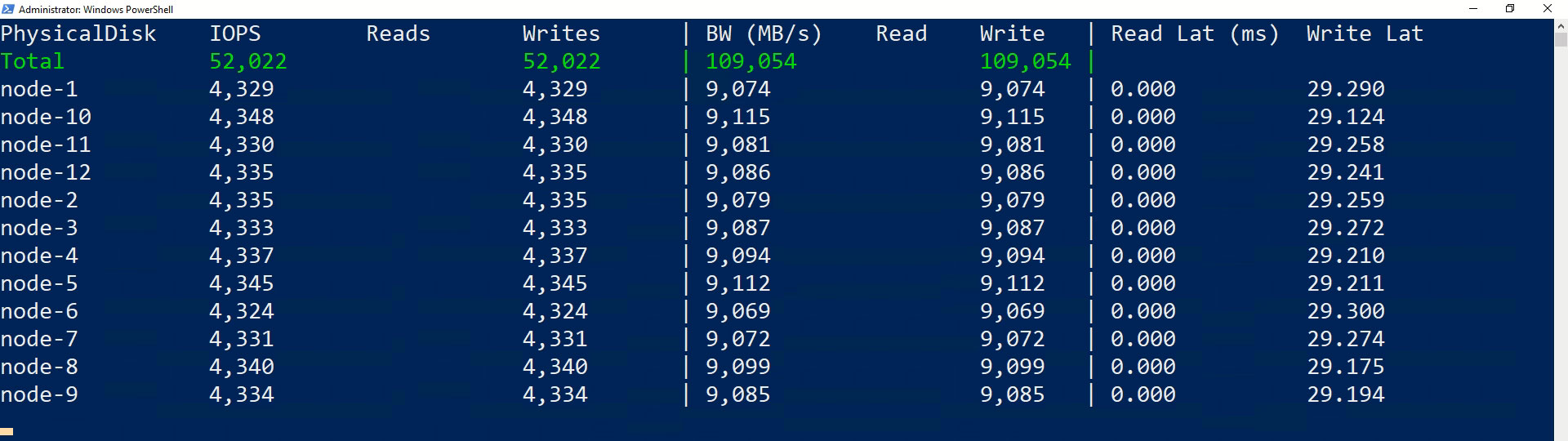

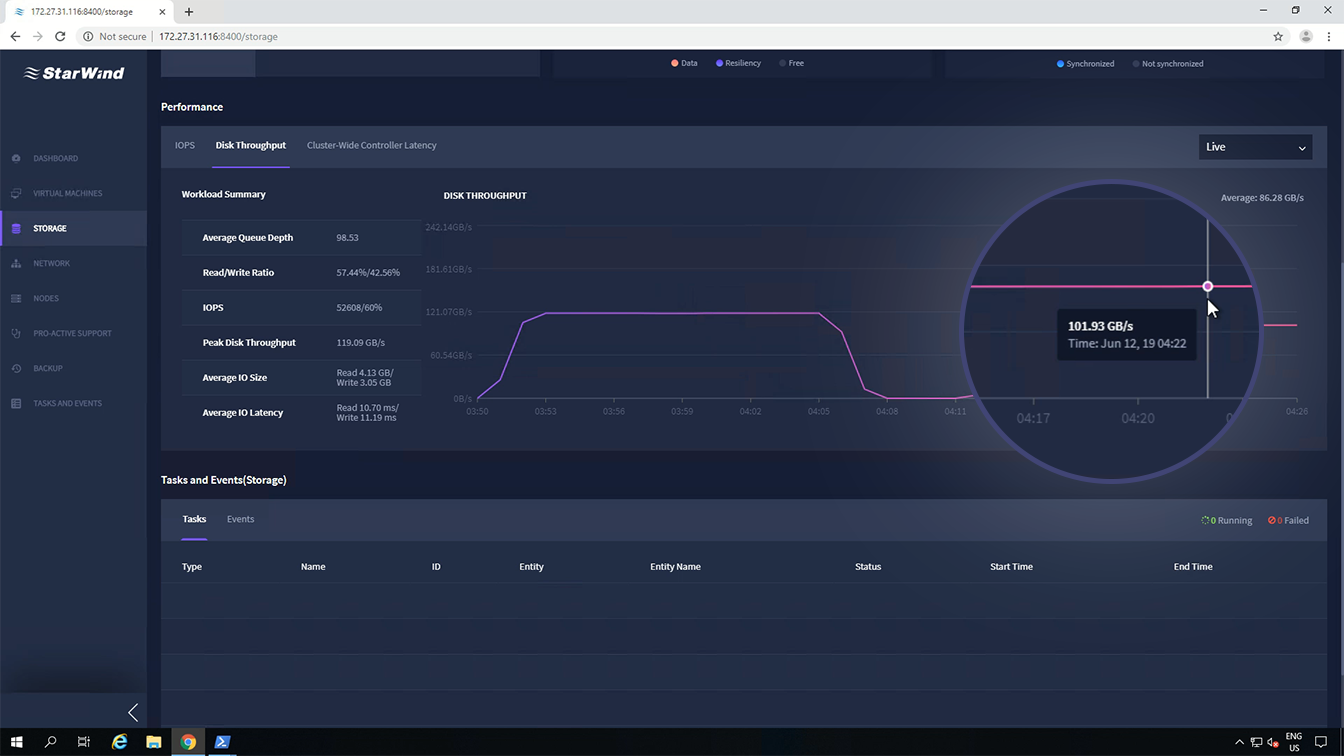

| RAW device, Maximize throughput | 2 MB sequential, 100% write | 101.93GBps |

| VM-based, Maximize IOPS, all-read | 4 kB random, 100% read | 20,187,670 IOPS3 |

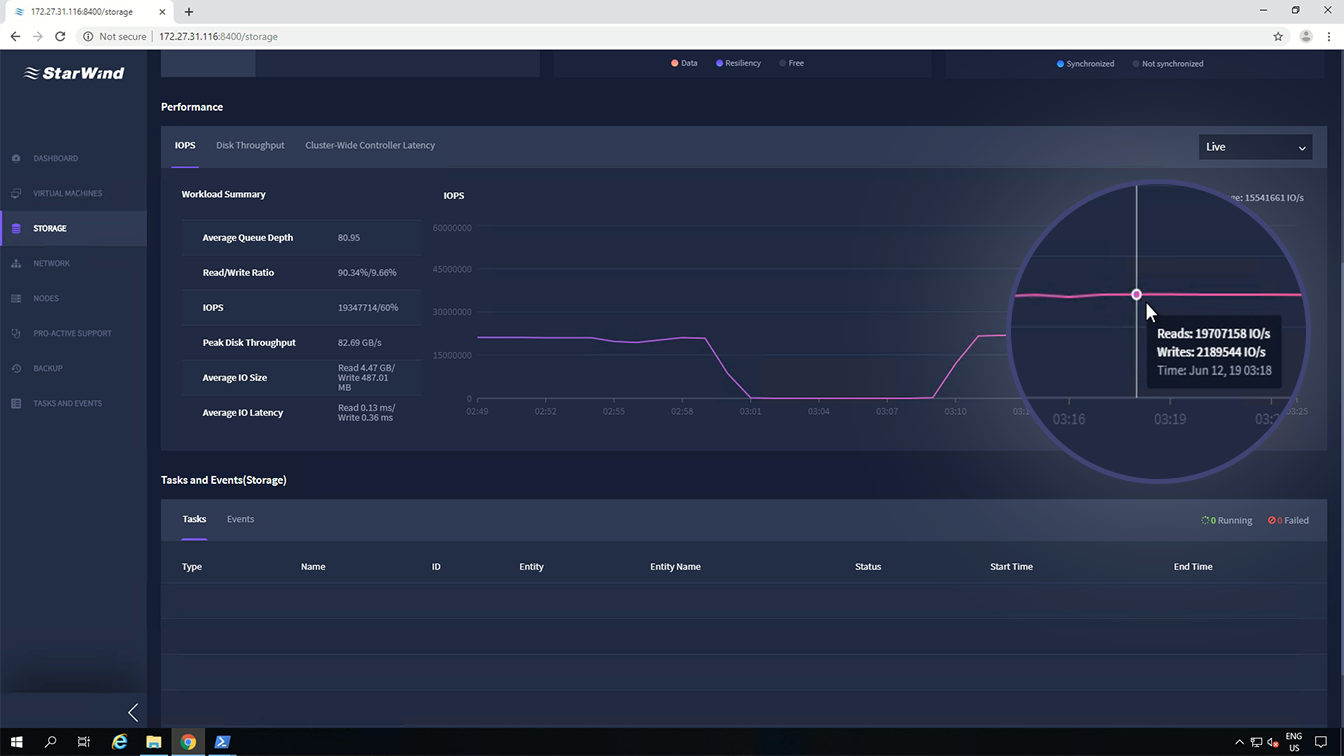

| VM-based, Maximize IOPS, read/write | 4 kB random, 90% read, 10% write | 19,882,005 IOPS |

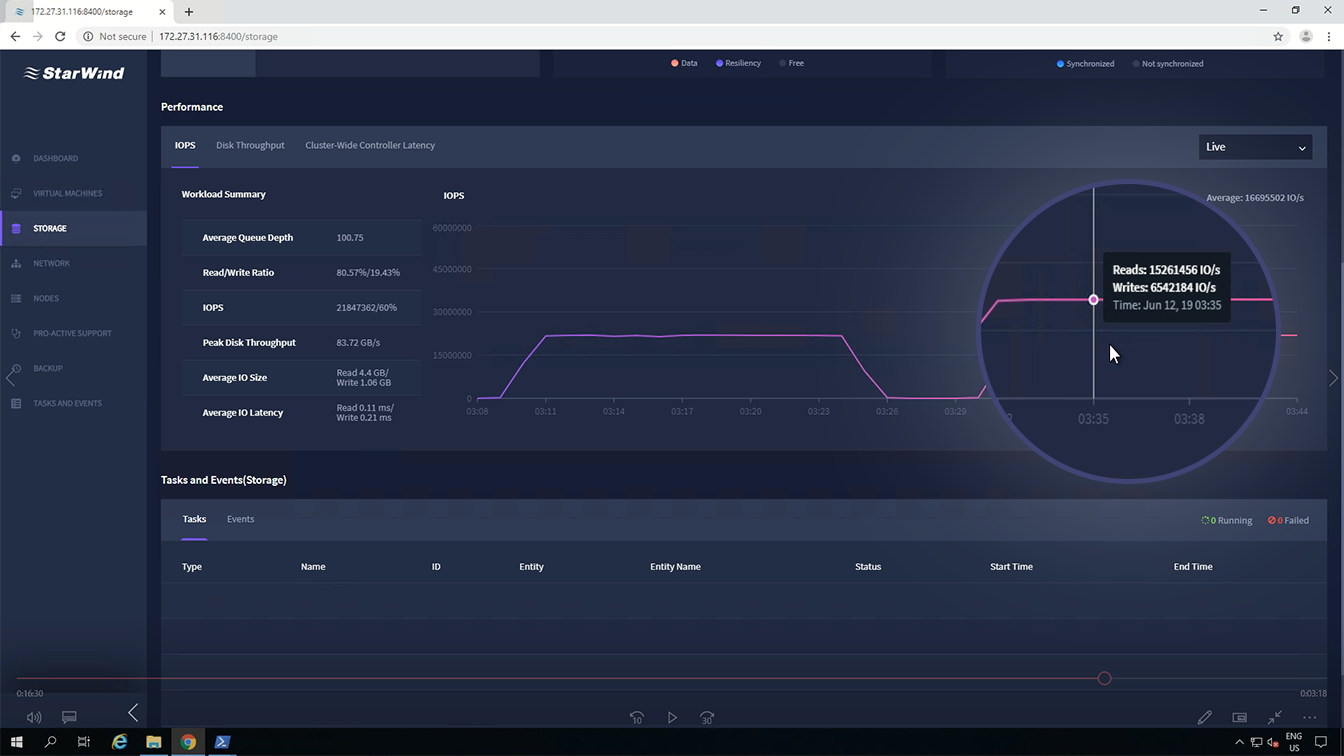

| VM-based, Maximize IOPS, read/write | 4 kB random, 70% read, 30% write | 19,229,996 IOPS |

| VM-based, Maximize throughput | 2 MB sequential, 100% read | 118.7GBps44 |

| VM-based, Maximize throughput | 2 MB sequential, 100% write | 102.25GBps |

1 - 84% performance out of theoretical 26,400,000 IOPS

2 - 106% performance out of theoretical 112.5GBps

3 - 76% performance out of theoretical 26,400,000 IOPS

4 - 105.5% performance out of theoretical 112.5GBps
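The footnote percentages can be reproduced from the table. Dividing the theoretical 26.4 million IOPS by the 48 drives gives 550,000 IOPS per drive, which appears to be the per-drive 4 kB random read rating the theoretical figure is built on; the article does not explain how 112.5 GBps was derived, so that value is simply taken as given.

```python
THEORETICAL_IOPS = 26_400_000   # figure used in the footnotes
THEORETICAL_GBPS = 112.5        # figure used in the footnotes
DRIVES = 48                     # 12 nodes x 4 Optane drives


def pct_of_theoretical(result: float, theoretical: float) -> float:
    """Measured result as a percentage of the theoretical maximum."""
    return 100.0 * result / theoretical


FOOTNOTED = {
    "RAW 4 kB random read (IOPS)": (22_239_158, THEORETICAL_IOPS),
    "RAW 2 MB sequential read (GBps)": (119.01, THEORETICAL_GBPS),
    "VM 4 kB random read (IOPS)": (20_187_670, THEORETICAL_IOPS),
    "VM 2 MB sequential read (GBps)": (118.7, THEORETICAL_GBPS),
}

per_drive_iops = THEORETICAL_IOPS // DRIVES   # implied per-drive rating

if __name__ == "__main__":
    print(f"implied per-drive rating: {per_drive_iops:,} IOPS")
    for name, (result, theoretical) in FOOTNOTED.items():
        print(f"{name}: {pct_of_theoretical(result, theoretical):.1f}%")
```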

This concludes the third benchmark stage of the 12-node production-ready StarWind HCA cluster with NVMe-oF storage. The 12-node all-flash NVMe cluster delivered 22.239 million IOPS on RAW devices (84% of the theoretical 26.4 million IOPS) and 20.187 million IOPS in VMs (76% of the theoretical 26.4 million IOPS).

This breakthrough performance came from configuring the NVMe-oF cluster correctly: the fastest NVMe storage is passed through to the SPDK NVMe-oF target VMs, and the StarWind NVMe-oF Initiator provides the storage interconnect.