Curious about RAID? Discover the technical differences and find out which one suits your needs best.

Curious about the next frontier in data storage? Discover the key aspects of NVMe over Fabrics (NVMe-oF), its benefits, and practical applications.

Broadcom’s $61 billion acquisition of VMware, announced in 2022 and completed in November 2023, has led to significant changes in the virtualization vendor’s licensing policy.

Converged infrastructure (CI) simplifies IT with pre-configured systems, while hyperconverged infrastructure (HCI) optimizes resource utilization through a unified software-defined approach. Still unsure what to choose?

Ransomware is real, and so is the need for solid data protection. Discover immutable backups and how they ensure fast recovery, compliance, and unbreakable protection.

Looking to upgrade Kemp LoadMaster in High Availability (HA) mode? Our guide focuses on simplicity and practicality.

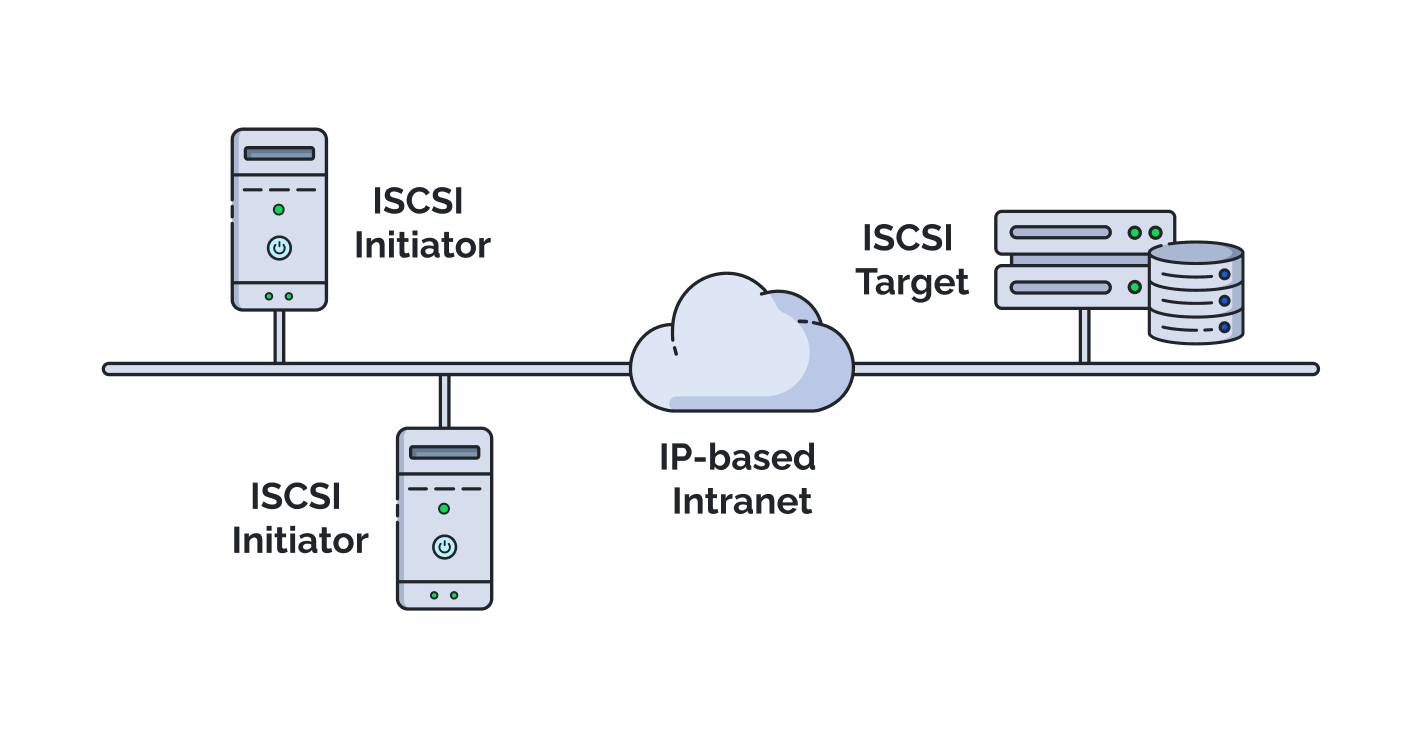

Data transfer is at the core of tech evolution, and choosing the right storage system is paramount. Learn how the iSCSI protocol revolutionizes data storage and boosts efficiency.

In today’s digital landscape, Disaster Recovery (DR) isn’t just a safety net – it’s a lifeline. It ensures data protection, business continuity, and a competitive edge.

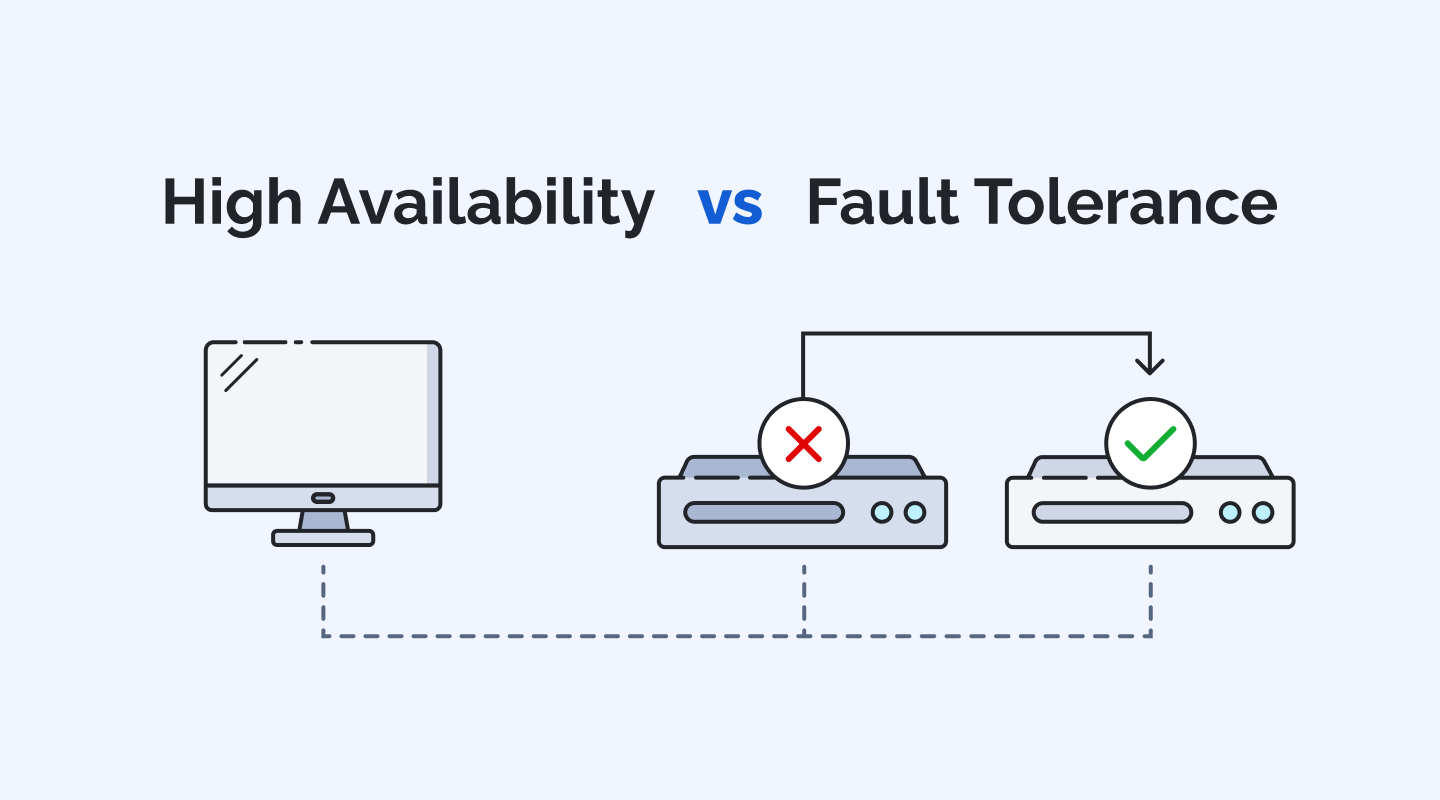

Running a company heavily reliant on IT services and wondering how to improve business continuity? Our new article explains the key differences between High Availability (HA) and Fault Tolerance (FT) and will help you choose the right IT resilience strategy.

Explore storage protocols with our performance benchmarking series! In Part 1, we configured NFS, and now, Part 2 dives deep into iSCSI configuration. Which protocol is your best fit for virtual infrastructure?