Lab review: Cost-effective iSCSI SANity

By Jack Fegreus, openBench Labs

July 24, 2009 -- As a software-only product for iSCSI storage virtualization, StarWind Enterprise Server from StarWind Software provides IT with a cost-effective means to leverage storage virtualization and help deliver critical data management services for business continuity. With StarWind's software, any 32-bit or 64-bit Windows Server can be used to provide iSCSI target devices capable of sharing access with multiple hosts. Most importantly, StarWind differs from many competitive offerings by including two nodes with a StarWind Enterprise Server license to support mirroring and replication. The company also places no limits on the storage capacity exported to clients or on the number of client connections.

The simplicity of providing virtualization with a software iSCSI SAN solution allows StarWind to fit a wide range of IT needs. For SMB sites, where direct-attached storage (DAS) is dominant, IT can leverage StarWind Enterprise Server with existing Ethernet infrastructure to adopt a SAN paradigm with minimal capital expenditure (CapEx) and operating (OpEx) costs. At large enterprise sites, IT can immediately garner a positive return on investment (ROI) by leveraging StarWind Enterprise Server to extend storage consolidation to desktop systems for improved storage utilization, on-demand storage provisioning, higher data availability, booting over the SAN, and tighter risk management.

It is the virtualization of systems via a Virtual Operating Environment (VOE), however, that now garners the lion's share of attention from CIOs. More importantly, to maximize the benefits of a VOE, such as VMware Virtual Infrastructure, shared SAN-based storage is a prerequisite. StarWind Enterprise Server furthers the SAN value proposition with integrated software for the automation of storage management functions to ensure the high availability of data and protection against application outages. StarWind Enterprise Server's data management features include thin storage provisioning, data replication and migration, automated snapshots for continuous data protection, and remote mirroring for less than $3,000.

What's more, StarWind helps IT utilize storage assets more efficiently and avoid vendor lock-in. There are substantial capital costs to be paid when the same storage management functions are licensed for every storage array. Licenses for snapshot, mirror, and replication functionality will often double the cost of an array.

By building on multiple virtualization constructs, including the notion of a space of virtual disk blocks from which logical volumes are built, StarWind Enterprise Server is able to take full control over physical storage devices. In doing so, the software provides storage administrators with all of the tools needed to automate critical functions such as thin provisioning, creating snapshots, disk replication and cloning, as well as local and remote disk mirroring.

iSCSI from desktop to VM

With server virtualization and storage consolidation the major drivers of iSCSI adoption, openBench Labs set up two servers running StarWind Enterprise Server in a VMware iSCSI SAN test scenario. We focused our tests on StarWind's ability to support a VOE featuring VMware ESX server hosting eight VMs running Windows Server 2003. With disk sharing essential for such advanced features as VMotion and VMware Consolidated Backup (VCB), the linchpin for supporting these features with StarWind is the device sharing capability, which is dubbed "clustering" in the device creation wizard.

| Using vCenter Server, we cloned an existing network of eight VMs, which was resident on an FC-based datastore, and deployed the new VMs on an iSCSI datastore that was exported by StarWind. We easily shared access to both the iSCSI and FC datastores with a Dell server running Windows Server 2003, VCB, and NetBackup. |

We began by installing StarWind Enterprise Server on a pair of Dell PowerEdge 1900 servers: one running the 64-bit version of Windows Server 2008 and the other running the 32-bit version of Windows Server 2003. We exported all iSCSI targets using a dedicated Gigabit Ethernet adapter over a private network. Both servers were configured with an 8Gbps Fibre Channel HBA, and all disk block pools for iSCSI target volumes originated on volumes imported from a Xiotech Emprise 5000 array. With two active-active 4Gbps Fibre Channel ports, the Emprise 5000 system provided a uniform storage base that eliminated back-end storage as a potential bottleneck.

StarWind Enterprise Server packages a collection of physical disk blocks and presents that package to a client system as a logical disk. There are several ways to do this within the software, which provides a complete set of tools for IT administrators to establish control of an entire iSCSI SAN infrastructure from a central point of management. The most functional of StarWind's storage virtualization methods provides thin storage provisioning, automated snapshot creation, and shared-disk access.

The openBench Labs VOE was hosted on a quad-processor HP DL580 ProLiant server, on which we installed a dual-port QLogic iSCSI HBA, the QLA4052, along with a 4Gbps Fibre Channel HBA. Similarly, we configured a quad-core Dell PowerEdge server, which ran Windows Server 2003, VMware vCenter Server (aka Virtual Center), VMware Consolidated Backup (VCB), and Veritas NetBackup 6.5.3, with both a QLogic iSCSI HBA and an 8Gbps Fibre Channel HBA. In this configuration, we were able to test StarWind's ability to provide shared access to iSCSI targets, which is critical for VCB.

| With StarWind's support for shared iSCSI targets, we implemented and integrated VCB with Veritas NetBackup (NBU) 6.5.3. During the backup process, the VCB proxy server initiated all I/O for both VCB and NBU. In the first stage of a backup, VCB read data on the shared ESX datastore, SWDataStor, and wrote a copy of the data files associated with each VM to a local directory on another iSCSI StarWind disk. With two Ethernet paths and four simultaneous VM backups in process, both reads and writes proceeded at more than 100MBps. |

Best IT practices call for classic file-level backups of VMs to be augmented with image-level backups that can be moved among VOE servers to enhance business continuity. VCB provides the framework needed to support both image-level and file-level backup operations; however, VCB requires that an ESX host share logical disks with a Windows-based server. That requirement makes VCB support an excellent test of the iSCSI SAN flexibility, ease of use, and I/O throughput provided by StarWind Enterprise Server.

The pivotal component in a VCB package is a VLUN driver, which is used to mount and read ESX-generated snapshots of VM disks. From the perspective of a VM and its business applications, the VCB backup window only lasts for the few seconds that the ESX Server needs to take and later remove a snapshot of the VM's logical disk, which is represented by a vmdk file. The Windows-based proxy server uses the VLUN driver to mount the vmdk file as a read-only drive. In this way, all data during the backup process is accessed and moved over the storage network for minimal impact on production processing.

Device provisioning

As a full-featured, software-only iSCSI target solution for Windows, StarWind Enterprise Server is a conceptually simple and deceptively complex application. Ostensibly, the purpose of installing StarWind Enterprise Server is to package collections of physical disk blocks and present those collections as logical disks to client systems. What complicates the StarWind equation is the plethora of full-featured services included with the software. The result can be a daunting initial out-of-box experience.

For IT, the business value of the solution lies in optimal resource utilization, minimal IT administration, and maximal performance. To meet that value proposition while also providing a wide array of optional features, StarWind utilizes a number of ways to package and virtualize pools of physical disk blocks.

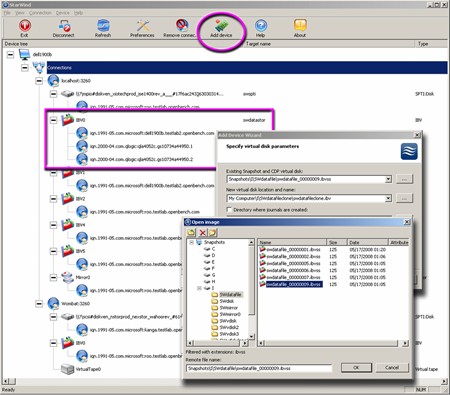

While the menu to add a new iSCSI device is feature-centric, the eight options represent three different technologies for device virtualization. The most basic level of virtualization and simplest management task for IT involves exporting a raw physical device.

If the device uses the SCSI command set, then all StarWind needs to do is route the command and data streams. All extended functionality is provided by the device itself. This device choice is dubbed a SCSI pass-through interface (SPTI) device. If the device does not utilize the SCSI command set, such as a SATA drive, StarWind offers a Disk Bridge device that virtualizes the command set so that a non-SCSI drive or array can be employed.

| On any server running StarWind Enterprise Server, IT administrators can launch the management console and manage all StarWind servers on a LAN. Provisioning a new storage device amounts to choosing a device with the appropriate support features, which range from a simple hardware SCSI pass-through interface (SPTI) device to an iSCSI disk with automated snapshots for continuous data protection. Within our VOE, all testing was done using the latter family of image-based virtual disks, which StarWind designates as IBV volumes. |

To do more than just virtualize the SCSI command set, the StarWind software needs to virtualize disk blocks and that means leveraging NTFS on the host server. The easiest way to do this is with an image file that represents the iSCSI device, which is exactly the technology found in the free version of StarWind. It is also the virtualization technology used to provide VTL and RAID-1 mirror devices.

iSCSI target replication

The most sophisticated StarWind features involve thin provisioning, automated snapshots, and replication, which is dubbed cloning. Providing those features requires the much more sophisticated StarWind image-based virtual disk (IBV) scheme. In this scheme, the software virtualizes disk blocks using separate files for disk headers (.ibvm), block data (.ibvd), and disk snapshots (.ibvss). This virtual disk image technology is what we employed in our VOE to virtualize iSCSI targets for the ESX server and the Windows VCB proxy server.

With our ESX datastore resident on a StarWind IBV device, we encountered no issues in leveraging the advanced features that StarWind offers for IBV target devices. Since our datastore would immediately be required to contain the logical disk files of eight VMs, we needed to define a volume with sufficient room to provide for the VMs and also support future growth. To meet those needs, we created a 500GB target volume with thin provisioning. Initially, this volume began with a few MBs of metadata, but it quickly swelled to consume about 155GB of real disk space as we loaded our eight VMs.
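The allocate-on-write behavior behind those numbers can be illustrated with a minimal Python sketch. This is not StarWind's implementation: the `ThinVolume` class, its method names, and the 4KB block size are all hypothetical and exist only to show why a thin volume's advertised size and its real consumption differ.

```python
class ThinVolume:
    """Toy thin-provisioned volume (hypothetical, not StarWind code).

    Backing space is consumed only for blocks that have been written.
    """

    def __init__(self, logical_size_blocks, block_size=4096):
        self.logical_size_blocks = logical_size_blocks
        self.block_size = block_size
        self._blocks = {}  # block number -> data, filled in on first write

    def _check(self, block_no):
        if not 0 <= block_no < self.logical_size_blocks:
            raise IndexError("block outside the advertised volume size")

    def write(self, block_no, data):
        self._check(block_no)
        if len(data) != self.block_size:
            raise ValueError("data must be exactly one block")
        self._blocks[block_no] = data

    def read(self, block_no):
        self._check(block_no)
        # Unwritten blocks read back as zeros and occupy no backing space.
        return self._blocks.get(block_no, bytes(self.block_size))

    @property
    def logical_bytes(self):
        return self.logical_size_blocks * self.block_size

    @property
    def allocated_bytes(self):
        return len(self._blocks) * self.block_size


vol = ThinVolume(logical_size_blocks=1000)
vol.write(10, b"\x01" * 4096)
vol.write(10, b"\x02" * 4096)   # rewriting a block allocates nothing new
vol.write(999, b"\x03" * 4096)
print(vol.logical_bytes, vol.allocated_bytes)
```

The client sees the full logical size from the start, while the backing store grows only as blocks are first written, which matches the pattern of a 500GB volume that consumed about 155GB once the VMs were loaded.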

In addition to setting up thin provisioning to garner better utilization of storage, openBench Labs also configured the IBV device for the ESX datastore with support for snapshots. IT administrators can manually create a snapshot at any time. What's more, IT can also enable automatic snapshot creation for an IBV target device.

The central issue with any automated snapshot scheme is the extent to which snapshots grow and consume resources. In our VOE testing, snapshot overhead for our ESX datastore typically was on the order of 100KB. That gives system and storage administrators a lot of leeway when deciding how to configure the frequency for automatic snapshot creation and whether to limit the number of snapshots maintained. If a limit is placed on the number of snapshots, StarWind overwrites the oldest snapshot when the limit is reached.
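The overwrite-the-oldest policy can be sketched as a fixed-size ring. The `SnapshotRing` class and the snapshot labels below are hypothetical illustrations of the retention rule described above, not StarWind's snapshot format or API.

```python
from collections import deque


class SnapshotRing:
    """Toy retention policy (hypothetical): keep at most `limit` snapshots."""

    def __init__(self, limit=None):
        # limit=None models the "no limit" setting.
        self._snaps = deque(maxlen=limit)

    def take(self, label):
        # When the deque is already full, append() silently drops the
        # oldest entry, which mirrors overwriting the oldest snapshot.
        self._snaps.append(label)

    def labels(self):
        return list(self._snaps)


ring = SnapshotRing(limit=3)
for hour in range(5):
    ring.take(f"snap-{hour:02d}")
print(ring.labels())  # ['snap-02', 'snap-03', 'snap-04']
```

With a limit of three and five snapshots taken, only the three most recent survive, so the chosen limit and snapshot frequency together determine how far back an administrator can roll.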

VM clone home

The easiest way to mount and leverage a StarWind snapshot is through the creation of a new IBV device as a "linked clone." The linked clone process utilizes a snapshot of the original device to set up pointers, rather than duplicate the device's data, to create a point-in-time replica. Once the replica is created, any changes to either the new device or the original are independent, which makes snapshots and replication powerful tools for disaster recovery.
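A rough way to picture the pointer-based approach is a disk whose unwritten blocks fall through to a frozen parent layer. The `Disk` class below is a hypothetical copy-on-write sketch under that assumption; it says nothing about how StarWind lays out its IBV files.

```python
class Disk:
    """Toy layered disk (hypothetical): reads fall through to a frozen parent."""

    def __init__(self, parent=None):
        self._own = {}          # blocks written to this layer only
        self._parent = parent   # frozen snapshot layer, never modified

    def write(self, block_no, data):
        self._own[block_no] = data

    def read(self, block_no):
        if block_no in self._own:
            return self._own[block_no]
        if self._parent is not None:
            return self._parent.read(block_no)
        return None  # block was never written

    def snapshot(self):
        """Freeze the current contents; later writes land in a fresh layer."""
        frozen = Disk(self._parent)
        frozen._own = self._own
        self._own = {}
        self._parent = frozen
        return frozen

    def own_blocks(self):
        return len(self._own)


original = Disk()
original.write(0, "boot")
original.write(1, "data-v1")

snap = original.snapshot()
clone = Disk(parent=snap)       # linked clone: pointers only, no data copied

original.write(1, "data-v2")    # the original diverges after the snapshot
clone.write(2, "clone-only")    # the clone diverges independently

print(clone.read(1), original.read(1), clone.own_blocks())
```

The clone still reads the point-in-time value of block 1 while the original sees its newer write, and the clone itself stores only the single block it changed.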

| We used StarWind's new device wizard to easily mount and access IBV device snapshots as a new IBV device. We employed the wizard to create a new device based on a snapshot of our SWdatafile device. We simply pointed to the snapshot we wanted to use and a location to create the initial files to support the new device dubbed SWdatafileclone. |

Snapshots and replication have profound implications for a VOE. Once we created the new IBV device, SWdatafileclone, we used the VI Client to rescan the iSCSI HBA in our ESX host. The new device was found, mounted, and its VMFS datastore was imported. We dubbed the datastore SWDataStorclone. We now had two 500GB datastores and 16 VMs within our ESX host; however, we had only consumed 160GB of space on our StarWind Server.

For an ESX server hosting VMs running mission-critical applications, the StarWind process of creating automatic snapshots and mounting the snapshots as linked clones is much quicker and easier than a traditional VCB-based backup. While automated snapshots cannot replace a traditional backup process, in many scenarios that involve data corruption, StarWind's automated snapshot and replication scheme provides a far superior Recovery Point Objective and a far better Recovery Time Objective. What's more, each of the VMs in the replicated datastore can be used to easily create a new VM that can be mounted and run in its own right.

In addition to being able to clone a connected on-line disk using snapshot technology, the files representing an off-line virtual disk can simply be copied to another drive on the server or another server to create a new and fully independent virtual drive. As a result, a StarWind IBV device represents a virtualized iSCSI target that is entirely independent of the underlying storage hardware and the server running the StarWind software.

| In addition to using the replicated datastore as a means of restoring the original VMs, we were also able to use the replicated datastore as a way to replicate the VMs resident in the datastore. The process involves the creation of a new VM by using the vmdk file for a system disk of one of the replicated VMs. Using the replicated system disk of oblSWVM1, we created oblSWVM1b. To complete the task, an administrator must launch the VM, change its LAN address, and join a domain under the new name. |

Desktop storage consolidation

One of the most promising areas to consolidate storage and improve resource utilization rates is on the desktop. The value proposition of iSCSI storage provisioning has always been its simplicity and low cost compared to Fibre Channel SANs. Nonetheless, it was the availability of the extra bandwidth provided by Gigabit Ethernet that launched significant adoption of iSCSI SANs.

Now, growing regulatory demands on risk management and data retention, along with the issues of green IT, are spurring the use of iSCSI SANs as a means to consolidate desktop storage resources. In particular, using an iSCSI SAN to provision desktop storage also provides IT with a means to deploy enterprise-class functions such as replication, volume snapshots, and continuous data protection. To support these aims, the new Windows 7 OS shares the Quick Connect iSCSI technology being introduced with Windows Server 2008 R2.

To assess the use of StarWind Enterprise Server as a means for IT to provision desktop systems with storage, openBench Labs utilized a Dell Precision T5400 workstation with a dual-core Xeon processor. Unlike on our server systems, we employed a dedicated 1Gbps Ethernet adapter for iSCSI traffic on the workstation, rather than an iSCSI HBA. On this system, we ran the Iometer benchmark to test the performance of a number of iSCSI virtualization technologies provided by StarWind Enterprise Server. While advanced functionality can sometimes trump performance on servers, the playing field is much more level on the desktop.

Given the simplicity and minimal overhead of SPTI devices, we chose to use several to act as base-level devices in our benchmarks. What's more, we benchmarked the Fibre Channel performance of the underlying Emprise 5000, which sustained 5,300 8KB I/O requests per second on a single DataPac and provided 700MBps sequential I/O.

As expected, sequential I/O on our Windows 7 workstation was entirely limited by the single Gigabit Ethernet connection. Equally interesting, sequential read and write throughput was statistically identical on all of our iSCSI targets, from a simple SPTI device to sophisticated image-based virtual disks. Nonetheless, running Iometer with 8KB random access I/O requests in a mix of 75% reads and 25% writes uncovered performance differences of an order of magnitude.

With an SPTI device, IOPS peaked at about 350. On the other hand, a Disk Bridge device, which also exports a full disk target but virtualizes the command set to enable the use of non-SCSI drives as simple targets, more closely reflected what we had measured with the underlying disk over Fibre Channel. In particular, when we connected to the same hardware, but as a Disk Bridge device, our StarWind iSCSI target sustained 3,250 8KB IOPS. Full-featured IBV devices also sustained that same 3,250 IOPS level on target devices used to provision our Windows 7 workstation.
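To put the random-access numbers in perspective, the IOPS figures can be converted to throughput and compared with the theoretical ceiling of a single Gigabit Ethernet link. The sketch below is back-of-the-envelope arithmetic only: it assumes 8KB means 8,192 bytes, reports decimal megabytes per second, and ignores TCP/IP and iSCSI protocol overhead. The function name is hypothetical.

```python
def iops_to_mb_per_s(iops, request_kb):
    """Convert an I/O rate to throughput in decimal MB/s.

    Assumes request_kb is in units of 1,024 bytes (an assumption,
    not something stated in the test results).
    """
    return iops * request_kb * 1024 / 1_000_000


# 1Gbps expressed in MB/s, before any protocol overhead.
GIGABIT_CEILING_MBPS = 1_000_000_000 / 8 / 1_000_000

measured = [("SPTI", 350), ("Disk Bridge and IBV", 3250)]
for name, iops in measured:
    mbps = iops_to_mb_per_s(iops, 8)
    share = mbps / GIGABIT_CEILING_MBPS
    print(f"{name}: {mbps:.1f} MB/s, {share:.0%} of the Gigabit ceiling")

print(f"Disk Bridge vs. SPTI: {3250 / 350:.1f}x")
```

Under those assumptions, even 3,250 8KB requests per second amounts to roughly 27MB/s, well below what one Gigabit link can carry, so the gap between SPTI and the other device types on random I/O reflects request handling rather than wire bandwidth.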

Jack Fegreus is CTO of openBench Labs.

OPENBENCH LABS SCENARIO

UNDER EXAMINATION: iSCSI server software

WHAT WE TESTED

StarWind Enterprise Server

-- SCSI Pass Through Device (SPTI)

-- Disk Bridge Device

-- Image File Device Media

-- Virtual Tape Device

-- Virtual DVD Device

-- RAID-1 Mirror

-- Snapshot and Thin Provisioning Device

HOW WE TESTED

(2) Dell PowerEdge 1900 server

-- Quad-core Xeon

-- Brocade 815 8Gbps HBA

-- (2) Ethernet NICs

-- Windows Server 2003

-- Windows Server 2008

-- StarWind Enterprise Server

HP ProLiant DL560 server

-- Quad-processor Xeon CPU

-- 8GB RAM

VMware ESX Server

-- (8) VM application servers

-- Windows Server 2003

-- SQL Server

-- IIS

HP ProLiant DL360 server

-- Windows Server 2003

-- VMware vCenter Server

-- VMware Consolidated Backup

-- Veritas NetBackup v6.5.3

Brocade 300 8Gbps switch

Xiotech Emprise 5000 System

-- (2) 4Gbps ports

-- (2) DataPacs

KEY FINDINGS

-- Centralize site-wide iSCSI storage provisioning via the StarWind Management Console

-- Unified SAN thin provisioning only draws upon storage capacity when data is written

-- Sequential I/O approaches wire speed with all devices

-- 3,200 IOPS sustained with all devices except SPTI