Driving HA Through Virtualization

In this analysis, openBench Labs assesses the setup of High Availability (HA) features in a StarWind Virtual Storage Area Network for a VMware® vSphere™ 5.5 environment. With StarWind Virtual SAN software, IT can create virtual iSCSI storage devices from internal direct attached storage (DAS) resources on one or more servers. These virtual iSCSI devices can be shared among multiple virtual infrastructure (VI) host servers to create cost-effective environments that eliminate single points of failure.

For technically savvy IT decision makers, this paper examines how to use StarWind to implement multiple software-defined, hyper-converged Virtual SANs that support VI high-availability features, including vMotion® in VMware vCenter™. Using two, three, or four StarWind servers, IT can configure storage platforms with differing levels of HA support, on which HA systems can be built using native VI options. More importantly, by layering HA systems support on a foundation of HA storage resources, IT can provide near fault tolerance (FT) for key business applications, which are frequently covered by a service-level agreement (SLA) addressing business continuity.

Capital Cost Avoidance

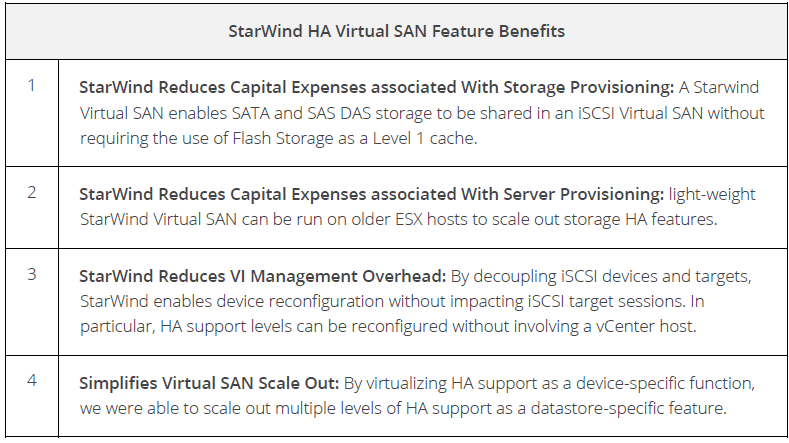

StarWind is seeking to change the old notion that high-performance shared storage with enhanced HA capabilities requires an expensive, hardware-dependent SAN. In particular, a StarWind Virtual SAN requires no capital expenditures and, by providing simple single-pane-of-glass management within VMware vCenter™ as part of virtual machine creation and deployment, avoids disruptive internal struggles between IT storage and networking support groups.

Building on a mature iSCSI software foundation, StarWind uses advanced virtualization techniques to decouple iSCSI targets from the storage devices that they represent. This decoupling lets StarWind virtualize distinct device types, which enables IT administrators to configure ESX datastores with the specialized features required by applications running on VMs whose logical disks reside on those datastores.

Building HA on Virtualized DAS Devices

By decoupling iSCSI targets from underlying devices, StarWind enables IT to meet explicit application needs by exporting datastores with different support features on virtual devices created on standard storage resources, including DAS. A StarWind Virtual SAN also provides critical heartbeat and synchronization services to iSCSI targets without requiring special hardware, such as flash devices. A StarWind Virtual SAN enables any IT site, including remote office sites, to cost-effectively implement HA for both the hosts and storage supporting VMs running business-critical applications.

Test Bed Infrastructure

Demands on IT executives continue to aggregate around projects that have a high potential to generate revenue. Typically these business applications are data rich, involve a database structure with transaction-driven I/O, and help foster current double-digit growth rates in stored data. More importantly, the enhanced availability capabilities of a VI are motivating CIOs to run more business-critical applications, including Exchange and SQL Server, on VMs. Consequently, disaster recovery (DR), HA, and near-FT data and system protection are becoming hot-button issues for CIOs in IT strategic planning.

Layering Availability for Fault Tolerance

Creating a robust near-FT environment to protect business-critical applications running on VMs is a complex multidimensional infrastructure problem. In a vSphere datacenter, for example, basic ESX host clustering leverages shared SAN-based datastores and vMotion to enhance VM availability; however, VM availability is enhanced solely with respect to VM execution issues on a vSphere host. The critical assumption underpinning a vSphere HA cluster is that stored data are constantly available to cluster members. As a result, a basic vSphere HA cluster makes no provisions for dealing with problems associated with data availability.

On the other hand, a multi-node HA StarWind Virtual SAN is exclusively dedicated to ensuring the availability of the data volumes exported to client systems. In particular, an HA StarWind Virtual SAN provides VI host servers with a very high level of data availability by synchronizing data across multiple copies of datastores, dubbed replicas, that are located on independent storage devices, such as local DAS arrays. Consequently, IT can provide business-critical applications running on a VM with a sophisticated near-FT environment simply by layering a vSphere HA cluster on top of an HA StarWind Virtual SAN.
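To make the replica idea concrete, the following Python sketch models synchronous mirroring in a deliberately simplified way. It is not StarWind's implementation, and the class names `Replica` and `SyncMirror` are hypothetical. A write is acknowledged only after every online replica stores the block, and blocks missed by an offline replica are tracked so that only those blocks are copied when it rejoins. Networking, heartbeats, and split-brain handling are ignored.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Replica:
    """One copy of a virtual device, e.g. an image file on one node's DAS (hypothetical)."""
    name: str
    online: bool = True
    blocks: dict[int, bytes] = field(default_factory=dict)


class SyncMirror:
    """Toy model of synchronous mirroring across HA replicas (hypothetical)."""

    def __init__(self, replicas: list[Replica]) -> None:
        self.replicas = replicas
        # Per-replica set of block addresses written while that replica was offline.
        self.missed: dict[str, set[int]] = {r.name: set() for r in replicas}

    def write(self, lba: int, data: bytes) -> bool:
        online = [r for r in self.replicas if r.online]
        if not online:
            return False  # no reachable copy: the write must fail
        for r in online:
            r.blocks[lba] = data
        for r in self.replicas:
            if not r.online:
                self.missed[r.name].add(lba)
        return True  # acknowledged only after every online replica holds the block

    def read(self, lba: int) -> bytes | None:
        for r in self.replicas:
            if r.online:
                return r.blocks.get(lba)
        return None

    def rejoin(self, name: str) -> int:
        """Bring a replica back online by copying only the blocks it missed.

        Assumes at least one other replica is still online to act as the source.
        """
        target = next(r for r in self.replicas if r.name == name)
        source = next(r for r in self.replicas if r.online)
        for lba in self.missed[name]:
            target.blocks[lba] = source.blocks[lba]
        copied = len(self.missed[name])
        self.missed[name].clear()
        target.online = True
        return copied
```

In use, taking one replica offline, writing a block, and calling `rejoin` copies exactly that one block back, after which all three replicas hold identical data.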

Virtual SAN Host Configuration

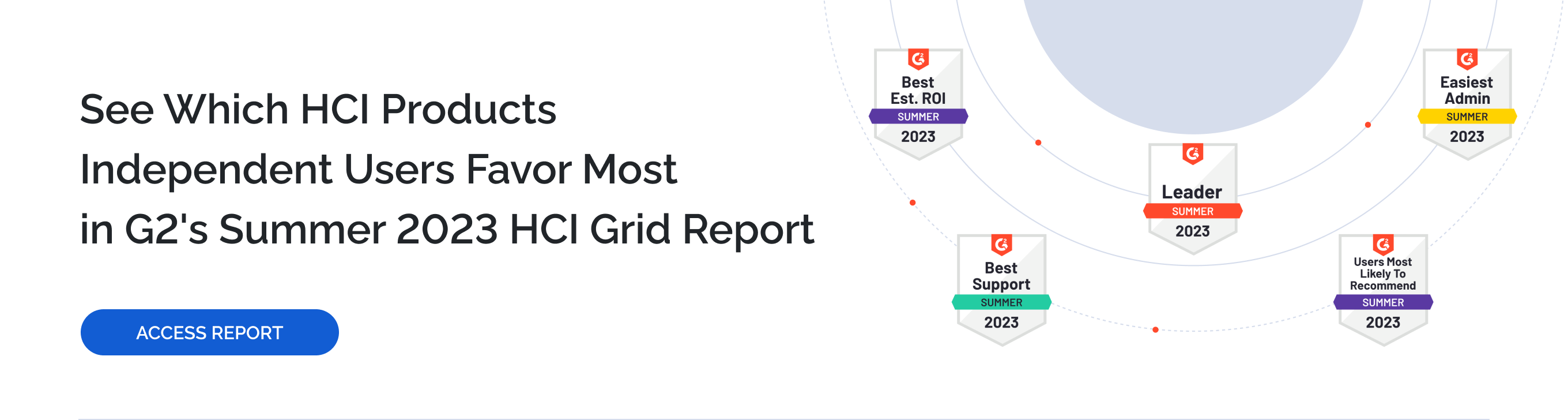

For testing a StarWind Virtual SAN, we created a 3-node vSphere 5.5 datacenter consisting of a 2-node vSphere HA cluster built on two Dell PowerEdge R710 servers, each hosting a StarWind Virtual SAN VM, and an older Dell PowerEdge 2950 server hosting a third StarWind Virtual SAN VM. This configuration gave us a VI datacenter featuring a 2-node vSphere HA cluster underpinned by a 3-node StarWind Virtual SAN cluster.
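A rough way to see why layering a 2-node compute cluster over a 3-node storage cluster helps is the standard redundancy formula, where availability with n redundant components is 1 - (1 - a)^n. The sketch below assumes independent failures and a hypothetical per-server availability of 0.99; neither is a measured value. In our test bed the R710s host both compute and a Virtual SAN VM, so failures are correlated and the result should be read as an optimistic upper bound.

```python
def at_least_one_up(component_availability: float, count: int) -> float:
    """Availability of `count` redundant components, assuming independent failures."""
    return 1.0 - (1.0 - component_availability) ** count


def layered_availability(a: float, compute_nodes: int, storage_replicas: int) -> float:
    """VMs run only if some compute node AND some data replica are available."""
    return at_least_one_up(a, compute_nodes) * at_least_one_up(a, storage_replicas)


if __name__ == "__main__":
    a = 0.99  # hypothetical per-server availability, not a measured value
    print(f"single server:      {a:.6f}")
    print(f"2-node compute:     {at_least_one_up(a, 2):.6f}")
    print(f"3-replica storage:  {at_least_one_up(a, 3):.6f}")
    print(f"layered (2 over 3): {layered_availability(a, 2, 3):.6f}")
```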

We deliberately chose to include an older Dell PowerEdge 2950 in our HA Virtual SAN to demonstrate how IT can retask older servers to scale out a StarWind Virtual SAN with minimal capital investment. In particular, we placed the Dell PowerEdge 2950 host in our vCenter datacenter, but outside the vSphere HA cluster, to leverage the server's storage handling capabilities while using vCenter tools to simplify datastore deployment and management. Keeping the older host out of the cluster preserved the functionality of the vSphere HA cluster, since adding it would have introduced vMotion compatibility issues affecting the host virtualization features available to VMs.

The StarWind Virtual SAN server VM running on the Dell PowerEdge 2950 host consistently utilized less than half of its allocated CPU resources, even while supporting datastores exported to support VMs running I/O-intensive transaction processing (TP) applications. Adding that server to the vSphere HA cluster and automating VM migration, however, would have required enabling Enhanced vMotion Compatibility (EVC) to restrict the virtualization features that VMs could utilize on any host server to the features supported by the Dell PowerEdge 2950 host.
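The effect of EVC described above is essentially a lowest common denominator: a VM that may migrate anywhere in the cluster can only rely on CPU features that every host provides. The sketch below computes that common set from illustrative feature flags that were not read from our servers. Real EVC works differently in detail: the administrator selects one of a fixed set of vendor-defined baseline modes, one per processor generation, rather than vCenter computing an intersection.

```python
from __future__ import annotations


def common_baseline(host_features: dict[str, set[str]]) -> set[str]:
    """CPU features every host supports: the most a migratable VM may see."""
    return set.intersection(*host_features.values())


def hidden_features(host_features: dict[str, set[str]]) -> dict[str, set[str]]:
    """Features each host would have to mask from VMs under the common baseline."""
    baseline = common_baseline(host_features)
    return {host: feats - baseline for host, feats in host_features.items()}


if __name__ == "__main__":
    # Illustrative feature sets only; not read from our actual servers.
    hosts = {
        "newer-host-1": {"sse3", "ssse3", "sse4_1", "sse4_2", "popcnt"},
        "newer-host-2": {"sse3", "ssse3", "sse4_1", "sse4_2", "popcnt"},
        "older-host": {"sse3", "ssse3"},
    }
    print(sorted(common_baseline(hosts)))
    print(hidden_features(hosts))
```

With the older host included, the newer hosts lose three features; leaving it out of the cluster, as we did, avoids that restriction.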

Host Storage Provisioning

Before installing the vSphere 5.5 hypervisor, we configured all local SATA drives on each host system as a single RAID-5 logical volume. With the ESXi hypervisor running on our host servers, we set up a VM running Windows Server 2012 R2 on each host. We then provisioned each StarWind Virtual SAN server VM with all of its host's DAS resources using the multi-TB logical volume, which the VM used to store all of the iSCSI device images that would be shared among the hosts as vSphere datacenter datastores.
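Sizing such a volume is simple arithmetic: RAID-5 gives up one drive's worth of capacity to parity, so usable space is (n - 1) times the drive size. The sketch also shows a consequence of replication, under the assumption that every device is replicated to every node: the smallest node volume then caps the total size of HA devices. The drive counts and sizes below are hypothetical, since the paper does not list them, and filesystem overhead is ignored.

```python
from __future__ import annotations


def raid5_usable_tb(drive_count: int, drive_tb: float) -> float:
    """RAID-5 keeps one drive's worth of parity, so usable = (n - 1) * size."""
    if drive_count < 3:
        raise ValueError("RAID-5 requires at least three drives")
    return (drive_count - 1) * drive_tb


def mirrored_device_ceiling_tb(node_volumes_tb: list[float]) -> float:
    """If every device is replicated to every node, the smallest volume caps HA capacity."""
    return min(node_volumes_tb)


if __name__ == "__main__":
    # Hypothetical drive counts and sizes; the paper does not list them.
    volumes = [raid5_usable_tb(6, 1.0), raid5_usable_tb(6, 1.0), raid5_usable_tb(4, 0.5)]
    print(volumes)                               # [5.0, 5.0, 1.5]
    print(mirrored_device_ceiling_tb(volumes))   # 1.5
```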

The only specialized hardware components used on the vSphere hosts in our test setup were QLogic dual-channel iSCSI HBAs, each of which added two independent gigabit Ethernet (1GbE) ports. On each ESX host, these HBAs provided greater MPIO support for iSCSI devices than the default vSphere configuration, which pairs an ESX kernel port and a dedicated physical NIC with a software iSCSI initiator. In particular, the QLogic HBAs doubled the number of physical device paths from each ESX host to every datastore imported from a multi-node HA StarWind Virtual SAN, which enhanced network load balancing.

Virtual SAN VM Server Configuration

We provisioned each StarWind Virtual SAN server VM with two CPUs and 8GB of memory to support aggressive provisioning of write-back data caches on iSCSI devices. While the StarWind default for an L1 RAM write-back cache is 128MB, we provisioned every logical iSCSI device with a 1GB L1 RAM cache for better performance on datastores used to support VMs running TP applications. StarWind devices can also be assigned an L2 cache from a local flash storage device.
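The value of a larger L1 RAM cache comes from write-back behavior: a write is acknowledged once it lands in RAM and reaches the device image only when it is evicted or flushed. The toy cache below illustrates that idea. StarWind's actual caching algorithms are not described in this paper, so the LRU eviction policy, the class name `WriteBackCache`, and the block-count capacity are our assumptions. Note that dirty blocks held only in RAM are exposed to a node failure, which is one reason HA replicas matter.

```python
from __future__ import annotations

from collections import OrderedDict


class WriteBackCache:
    """Toy LRU write-back cache in front of a slower backing store (hypothetical)."""

    def __init__(self, capacity: int, backing: dict[int, bytes]) -> None:
        self.capacity = capacity          # number of blocks the cache may hold
        self.backing = backing            # stands in for the device image on DAS
        self.lines: OrderedDict[int, bytes] = OrderedDict()
        self.dirty: set[int] = set()      # cached blocks not yet written to backing

    def _evict_if_full(self) -> None:
        while len(self.lines) > self.capacity:
            lba, data = self.lines.popitem(last=False)  # least recently used
            if lba in self.dirty:
                self.backing[lba] = data                 # write back on eviction
                self.dirty.discard(lba)

    def write(self, lba: int, data: bytes) -> None:
        self.lines[lba] = data
        self.lines.move_to_end(lba)
        self.dirty.add(lba)               # acknowledged before reaching the backing store
        self._evict_if_full()

    def read(self, lba: int) -> bytes | None:
        if lba in self.lines:
            self.lines.move_to_end(lba)   # cache hit refreshes recency
            return self.lines[lba]
        data = self.backing.get(lba)
        if data is not None:
            self.lines[lba] = data        # populate cache on miss
            self._evict_if_full()
        return data

    def flush(self) -> None:
        for lba in list(self.dirty):
            self.backing[lba] = self.lines[lba]
        self.dirty.clear()
```

With a capacity of two blocks, a third write evicts the oldest block and writes it back; the remaining dirty blocks reach the backing store only on `flush`.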

In addition, we configured each StarWind Virtual SAN server with four virtual NICs in order to separate the data traffic associated with the HA StarWind Virtual SAN from the standard LAN traffic associated with the VM. We dedicated three of these virtual NICs, backed by three VM ports on the ESX host's virtual switch, to implementing an HA Virtual SAN. In particular, we needed to separate Virtual SAN device heartbeat traffic from data synchronization traffic by placing them on separate subnets. Sufficient network bandwidth for data synchronization is a critical resource should a StarWind VM device or server need to be recovered after a failure.
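A back-of-the-envelope estimate shows why synchronization bandwidth matters. The sketch computes the time for a full resynchronization of a device over a dedicated sync link; the 1 TB device size and the 90% payload efficiency are assumed figures, not measurements. A partial resynchronization that copies only changed blocks would take proportionally less time.

```python
def resync_hours(device_gb: float, link_gbps: float, efficiency: float = 0.9) -> float:
    """Estimate a full resynchronization time over a dedicated sync link."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    bits = device_gb * 8e9                        # decimal gigabytes -> bits
    usable_bps = link_gbps * 1e9 * efficiency     # payload rate after protocol overhead
    return bits / usable_bps / 3600


if __name__ == "__main__":
    # Hypothetical 1 TB device on a 1GbE sync link.
    print(f"{resync_hours(1000, 1.0, efficiency=1.0):.2f} h at line rate")   # 2.22 h
    print(f"{resync_hours(1000, 1.0):.2f} h at 90% efficiency")              # 2.47 h
```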

iSCSI SAN Networking

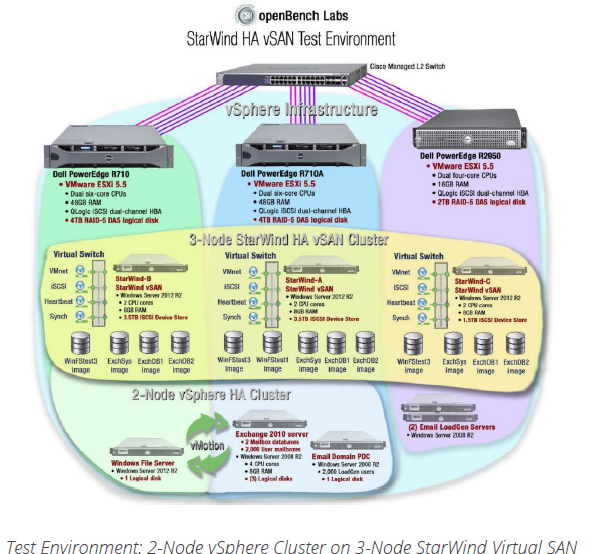

Today, a Fibre Channel (FC) SAN is assumed to have a highly fanned-out topology, with a small number of storage devices connected to a large number of systems via high-bandwidth data paths. In an FC SAN, an asymmetric logical unit access (ALUA) policy is used to minimize data path switching; however, this construct has little in common with a typical iSCSI SAN. In a 1GbE iSCSI SAN, network bandwidth is a limiting factor, and the ability to leverage multiple network paths to balance data traffic is a key objective.

To meet the real needs of an iSCSI SAN and minimize the impact of ALUA-based connection policies, StarWind defaults to configuring all network paths as optimized and active. By introducing dual-channel iSCSI HBAs on each ESX host, we doubled the number of device paths associated with each StarWind Virtual SAN in our test configuration. With its dual-channel iSCSI HBA, each of our ESX hosts had two active physical Ethernet paths to a datastore exported by a 1-node StarWind Virtual SAN and six active paths to a datastore exported by a 3-node StarWind Virtual SAN.
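The path counts above follow from pairing every initiator port with every Virtual SAN node that exports the device. The sketch enumerates those pairs, assuming each node exposes one target portal reachable from both HBA ports; additional portals per node would multiply the count further. The port and node labels are hypothetical.

```python
from __future__ import annotations

from itertools import product


def enumerate_paths(hba_ports: list[str], san_nodes: list[str]) -> list[tuple[str, str]]:
    """Every (initiator port, Virtual SAN node) pair is a distinct active path,
    assuming each node exposes one target portal reachable from every port."""
    return list(product(hba_ports, san_nodes))


if __name__ == "__main__":
    ports = ["hba-port-0", "hba-port-1"]                                  # hypothetical labels
    print(len(enumerate_paths(ports, ["vsan-1"])))                        # 2 (1-node device)
    print(len(enumerate_paths(ports, ["vsan-1", "vsan-2", "vsan-3"])))    # 6 (3-node HA device)
```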

Nonetheless, we still needed to replace the ESX host's default path selection policies, which were fixed path for single-node devices and most recently used (MRU) for multi-node HA devices, with an MPIO policy better suited to path switching in order to take full advantage of the default StarWind ALUA configuration. In particular, we used the round robin MPIO policy on each ESX host to improve iSCSI I/O load balancing by utilizing all available paths to datastores exported by an HA StarWind Virtual SAN.
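The difference between MRU and round robin is easy to model. MRU keeps sending I/O down one path until it fails, while the ESXi round robin policy (VMW_PSP_RR) rotates through all active paths, by default switching after 1,000 I/O operations; that limit is tunable. The selector below is a simplified model of that rotation, not VMware's code, and the class name is hypothetical.

```python
from __future__ import annotations

from collections import Counter
from itertools import cycle


class RoundRobinSelector:
    """Rotate across all active paths, switching after `ios_per_path` I/Os."""

    def __init__(self, paths: list[str], ios_per_path: int = 1000) -> None:
        if not paths or ios_per_path < 1:
            raise ValueError("need at least one path and ios_per_path >= 1")
        self._paths = cycle(paths)
        self._current = next(self._paths)
        self._ios_per_path = ios_per_path
        self._issued = 0  # I/Os sent down the current path

    def next_path(self) -> str:
        if self._issued == self._ios_per_path:
            self._current = next(self._paths)  # move on to the next active path
            self._issued = 0
        self._issued += 1
        return self._current


if __name__ == "__main__":
    paths = [f"path-{i}" for i in range(6)]  # six active paths, as for a 3-node device
    rr = RoundRobinSelector(paths, ios_per_path=1000)
    print(Counter(rr.next_path() for _ in range(6000)))  # 1000 I/Os per path
```

On an actual host, the policy is applied per device, either in the vSphere Client or with `esxcli storage nmp device set --device <device-id> --psp VMW_PSP_RR`.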

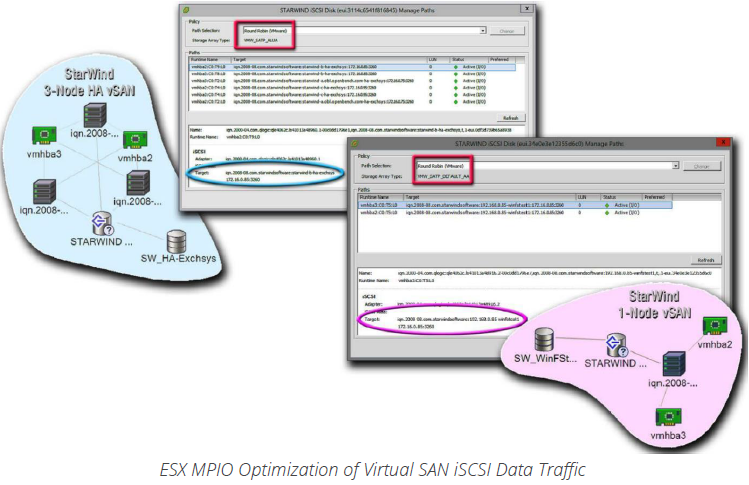

iSCSI Virtualization

Even in our relatively small test configuration, the decoupling of iSCSI targets and devices played a key role in simplifying iSCSI management tasks in our VI. In particular, we were able to use all of the iSCSI initiators deployed on ESX hosts to connect to publicly exposed targets without making any references to underlying devices. Consequently, IT administrators are able to change important characteristics of datastores exported to ESX hosts by either modifying or interchanging Virtual SAN device files on StarWind VM servers without making changes in target configurations or, more importantly, interrupting iSCSI sessions.
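The value of decoupling targets from devices is a level of indirection: initiators and their sessions refer only to a target name, and the target in turn points at a device file that can be replaced. The sketch below models that indirection with hypothetical classes and an example IQN. It does not model how data is kept consistent when a device is swapped under a live session. The 128MB default cache size is taken from StarWind's documented L1 default.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class VirtualDevice:
    image_path: str            # device image file on the Virtual SAN VM's volume
    l1_cache_mb: int = 128     # L1 RAM write-back cache (StarWind's default size)


@dataclass
class Target:
    iqn: str                   # the only name initiators ever reference
    device: VirtualDevice      # indirection: can be replaced without renaming the target


@dataclass
class Session:
    initiator: str
    target: Target             # sessions bind to the target, not to the device

    def backing_image(self) -> str:
        return self.target.device.image_path


if __name__ == "__main__":
    ds1 = Target("iqn.2008-08.com.example:vsan-ds1", VirtualDevice(r"D:\vsan\ds1.img"))
    session = Session("esx-host-1", ds1)
    # Swap in a device with a larger cache; the session and IQN are untouched.
    ds1.device = VirtualDevice(r"D:\vsan\ds1-v2.img", l1_cache_mb=1024)
    print(session.target.iqn, session.backing_image())
```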

With the StarWind Virtual SAN servers in place, we utilized the StarWind Management Console to create, attach, and manage iSCSI device files independently of target connections on multiple servers. While creating new logical device files, we were able to configure numerous datastore features, including the number and size of cache levels, data deduplication, auto defragmentation, and a log-based file system. Similarly, using the StarWind Replication Manager, we were able to extend and modify critical support characteristics of existing iSCSI device files, including the level of HA Virtual SAN support, without interrupting active application processing.

On completing the creation of an iSCSI device file, the StarWind wizard automatically created an iSCSI network target and associated the target with the device file. We were then free to use vCenter to scan for StarWind VM server addresses in order to discover iSCSI targets for ESX host servers, initiate iSCSI host sessions, and deploy StarWind iSCSI virtual devices as vSphere datastores.
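Targets discovered this way are identified by iSCSI Qualified Names (IQNs), whose format RFC 3720 defines as `iqn.` followed by a year-month date, a reversed domain name, and an optional `:`-separated unique string. The parser below is a minimal sketch of that format; it lowercases input because iSCSI names are case-insensitive, and it does not handle other name formats such as `eui.`. The example name uses the hypothetical domain example.com; StarWind's own target naming scheme is not shown in this paper.

```python
from __future__ import annotations

import re
from typing import NamedTuple, Optional

# iqn.<yyyy-mm>.<reversed domain name>[:<unique string>], per RFC 3720.
_IQN = re.compile(r"^iqn\.(\d{4})-(\d{2})\.([a-z0-9-]+(?:\.[a-z0-9-]+)*)(?::(.+))?$")


class Iqn(NamedTuple):
    year: int
    month: int
    naming_authority: str
    unique: Optional[str]


def parse_iqn(name: str) -> Iqn:
    m = _IQN.match(name.lower())  # iSCSI names are case-insensitive
    if m is None or not 1 <= int(m.group(2)) <= 12:
        raise ValueError(f"not a valid iqn-format name: {name!r}")
    year, month, authority, unique = m.groups()
    return Iqn(int(year), int(month), authority, unique)


if __name__ == "__main__":
    print(parse_iqn("iqn.2008-08.com.example:vsan-ds1"))
```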

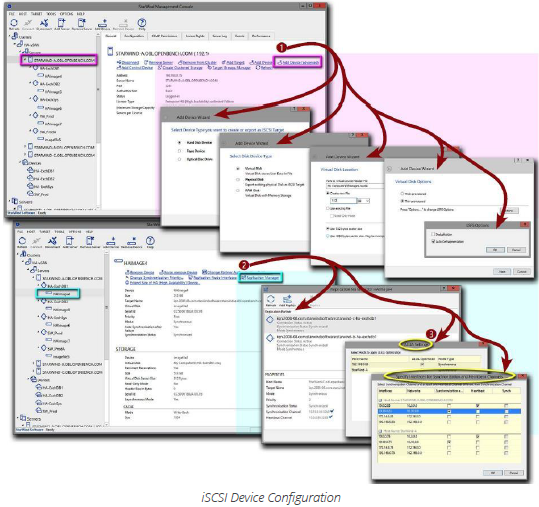

iSCSI Virtualization Wizards

We used the context-sensitive menu structure of the StarWind Management Console to drill down independently on various features and characteristics of both iSCSI targets and devices to optimize Virtual SAN performance. In particular, we were able to invoke specific ALUA options for optimizing iSCSI target paths and configure multi-level cache implementations on virtual iSCSI storage device files.

The context-sensitive configuration wizards in the StarWind Management Console take IT administrators through a step-by-step series of options to configure➊ or manage➋ virtual iSCSI devices or targets. Image files can be saved on any VM volume; we used a logical volume defined on a DAS RAID array. Attributes ascribed to a StarWind virtual device file, such as cache size, determine the performance and features of the ESX datastore that will be logically linked to the device file.

More importantly, wizards can be used to modify properties of existing devices. In particular, IT administrators can modify HA characteristics, such as ALUA target settings➌, using the Replication Manager. After choosing a StarWind VM as a device partner, an IT administrator can either utilize an existing device or automatically create a new device on the partner by cloning the original.

Application Continuity and Business Value

For CIOs, the top-of-mind issue is how to reduce the cost of IT operations. With storage volume the biggest cost driver for IT, storage management is directly in the IT spotlight.

At most small-to-medium business (SMB) sites today, IT manages multiple storage arrays from different vendors, with similar functions and unique management requirements. From an IT operations perspective, multiple arrays with multiple management systems force IT administrators to develop many sets of unrelated skills.

Worse yet, automating IT operations based on proprietary functions may leave IT unable to move data from system to system. Tying data to the functions of one storage system simplifies management at the cost of reduced purchase options and vendor choices.

By building on multiple virtualization constructs, a StarWind Virtual SAN service is able to take full control over physical storage devices. In doing so, StarWind provides storage administrators with the tools needed to automate key storage functions, including thin provisioning, disk replication, and data deduplication, in addition to providing multiple levels of HA functionality to support a near-FT environment for business continuity.