Whether you have heard it called software-defined storage (SDS), meaning a software stack that dedicates an assemblage of commodity storage hardware to a virtualized workload, or hyper-converged infrastructure (HCI), meaning a hardware appliance with an SDS stack (and perhaps a hypervisor) preconfigured and embedded, this “revolutionary” approach to building storage was widely hailed as your best hope for bending the storage cost curve once and for all. With storage accounting for a sizable share (often more than 50%) of a medium-to-large organization’s annual IT hardware budget, you probably welcomed the idea of an SDS/HCI solution when it surfaced in the trade press, in webinars, and at conferences and trade shows a few years ago.

The basic value case made sense at the time. After all, proprietary storage rigs from the likes of EMC, IBM, HP, and other big box makers had become obscenely pricey – with no respite on the horizon. Much of the cost was linked to “value-add” features – tweaks to hardware or software functionality made by vendors and embedded on their array controllers – that supposedly made the vendor’s kit superior to competing products and better suited to your workload. Even if this was true, these improvements usually came with a significant (though mostly under-examined) downside.

Vendor lock-in was a big one. Value-add functionality usually required the purchase of all gear from one vendor. Heterogeneous infrastructure “diluted” the value of the vendor’s innovation, vendors argued, and the purchase of competing software or hardware, regardless of its contribution to capacity, throughput or manageability, was discouraged.

Over time, it became apparent that value-add functionality was being leveraged as much to lock in the consumer as to differentiate products in the marketplace. Eventually, consumers came to believe that the value of value-add was offset by its tendency to limit their buying choices and to weaken their leverage when negotiating purchase or licensing contracts.

The “undiscovered country” of the value-add equation was management. Vendors offered proprietary monolithic storage arrays that, although all were constructed from the same commodity hardware, were distinguished from one another by software features. One rig “de-duplicated” the data stored on its media, while another used specialized compression techniques. One array sought to eradicate the twin problems of oversubscription and underutilization by allocating capacity piecemeal using a forecasting algorithm, while another provisioned storage efficiently by first virtualizing all available capacity into a pool of resources. Yet another rig protected data using a proprietary RAID architecture, while others eschewed RAID for a nostalgic approach to data protection based on erasure coding.

While each of these innovations may have had a specific value case to make, in aggregate they contributed to the siloing of data and introduced more obfuscation into storage management. For example, when trying to manage capacity across a heterogeneous infrastructure, admins needed to confirm whether the allocation figures reported by array X referred to actual physical capacity or to “virtual” (thinly provisioned) capacity. Array controllers could not be relied upon to report the status of the array in a way that was uniform across all products from all vendors.
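To make the thin-versus-actual distinction concrete, here is a minimal sketch of what an admin has to do by hand once the figures are in hand. It assumes, hypothetically, that each array’s report has already been translated into a common `CapacityReport` record with separate physical, used, and provisioned (logical) figures; no real vendor API is implied, and collecting and translating those figures is precisely the part that vendors never standardized. The array names, numbers, and the 80% threshold are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CapacityReport:
    """Hypothetical normalized record; real arrays report these figures
    under vendor-specific names, units, and semantics."""
    array_id: str
    physical_gb: float     # usable capacity actually installed
    used_gb: float         # physical capacity actually holding data
    provisioned_gb: float  # logical capacity promised to hosts (thin provisioning may exceed physical)


def summarize(reports):
    """Aggregate across arrays, keeping physical and logical figures separate."""
    physical = sum(r.physical_gb for r in reports)
    used = sum(r.used_gb for r in reports)
    provisioned = sum(r.provisioned_gb for r in reports)
    return {
        "physical_gb": physical,
        "used_gb": used,
        "provisioned_gb": provisioned,
        "utilization": used / physical,              # share of real capacity holding data
        "oversubscription": provisioned / physical,  # > 1.0 means capacity is overcommitted
    }


def nearly_full(reports, threshold=0.8):
    """IDs of arrays whose *physical* consumption meets or exceeds the threshold."""
    return [r.array_id for r in reports if r.used_gb / r.physical_gb >= threshold]


# Illustrative (made-up) figures for two arrays from different vendors.
reports = [
    CapacityReport("array-x", physical_gb=100_000, used_gb=40_000, provisioned_gb=180_000),
    CapacityReport("array-y", physical_gb=50_000, used_gb=45_000, provisioned_gb=50_000),
]
summary = summarize(reports)
```

The point of the sketch is that `array-x` looks 180% “allocated” if its thinly provisioned figure is mistaken for consumption, yet it is only 40% physically used, while the fully provisioned `array-y` is the one actually close to running out. Mixing the two kinds of figure in a single total produces a number that describes neither.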

This was a huge, and mostly undiscussed, problem with what we now call legacy storage. The industry made a couple of halting efforts to redress it, including the Storage Networking Industry Association’s Storage Management Initiative Specification (SMI-S) in the early 2000s, but it was obvious from the get-go that many vendors had little interest in cooperation. Why would it be in EMC’s best interest to make its gear co-manageable with an IBM or HDS rig? Perhaps if customers had demanded it, the story would have been different. But, alas, consumers never demanded a real cross-platform management solution.

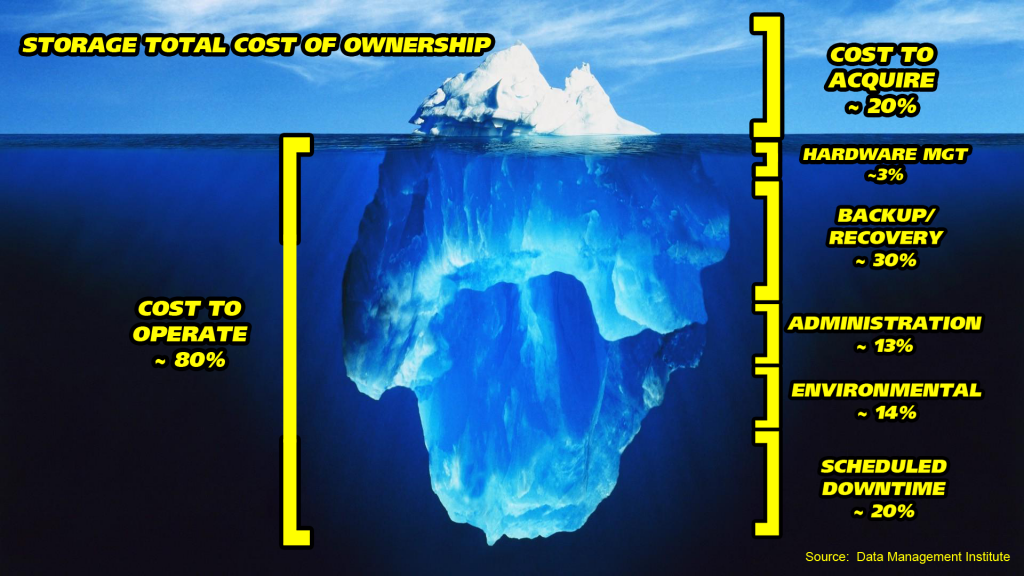

This lack of coherent enterprise storage infrastructure management has manifested as cost since the dawn of business computing. According to numerous evaluations by industry analysts, annualized administration and management costs total five to six times the annual expense for hardware acquisition. Equipping administrators with the tools (software, management protocols, etc.) to manage more capacity more efficiently has always been the holy grail of storage management.

So, with the deconstruction of monolithic legacy storage evangelized by the software-defined crowd, one would have hoped that a strong management story would be part of the revolutionary manifesto. Unfortunately, while most SDS vendors succeeded in articulating the value of separating value-add software from commodity hardware, so that the commodity components could be purchased at a much lower cost, they said little about managing the resulting infrastructure. Instead, management was left to hypervisor software vendors to deliver.

Observers should have foreseen the pitfalls of this idea after watching VMware’s shenanigans with its vStorage APIs for Array Integration (VAAI) just before the SDS movement appeared. VAAI, whatever its purported value, broke with the traditional process for changing the Small Computer System Interface (SCSI) command set, which is maintained by the ANSI-accredited T10 committee: VMware simply added nine unapproved primitives to SCSI and pressured storage vendors to adopt them or risk marginalizing themselves with customers who were increasingly moving workloads to VMware-style virtual machines. With much grumbling and gnashing of teeth, the storage industry complied with VAAI, only to be forced to make more arbitrary changes a short time later when VMware’s whims dictated.

In truth, some SDS advocates originally believed that Representational State Transfer (REST), the architectural style built on web standards such as HTTP, would provide the means to manage the software-defined storage world. RESTful web services and APIs were held up as wonderful ways to enable interoperability among hardware kit from different vendors. However, this proved overly optimistic: VMware and others, while embracing REST publicly, buried access to their REST interfaces behind several layers of proprietary APIs, mainly to complicate managing VMware environments alongside competing hypervisors from Microsoft and the open source community.

Bottom line: the SDS/HCI “revolution” has fallen short of many consumers’ expectations because it has failed to deliver real gains in cost containment. While acquisition prices for hardware kit may have declined somewhat, and while administrator efficiency has increased to the point where a single admin can manage more capacity behind a specific hypervisor and SDS stack, these savings have been offset by a commensurate increase of more than 10% in the inefficiency and cost of storage enterprise-wide. The promise of SDS is becoming a fiction told by vendors to sell siloed storage solutions that are every bit as poorly managed in total as the legacy infrastructures they replace.

Whether companies deploy legacy monoliths or stovepiped SDS infrastructure, the lack of effective storage resource management remains the Achilles’ heel of cost containment. Even if IT planners aren’t paying attention, one can be sure that someone in corporate accounting is. What is needed is an enterprise storage architecture that drives down storage hardware expense while also optimizing resource management in an intelligent and open way.