This is a comprehensive comparison of the leading products of the Software-Defined Storage market, featuring Microsoft Storage Spaces Direct, VMware Virtual SAN and VSAN from StarWind. It provides numerous use cases, based on different deployment scales and architectures, because the mentioned products all have different aims. As the market is already large enough, the vendors used to occupy different parts of it, but lately they have entered a full-scale competition, adapting their products to meet general demand. This post is an analysis of how Microsoft, VMware and StarWind fare in the Software-Defined Storage market right now. The approach is practical and all the statements are based on the experience of virtualization administrators and engineers from all over the world.

OK, here we go! First of all, I think a set of disclaimers is required here:

So, A), B) and C) summarized make me probably not the best information source on the subject, but… Let’s get started and see what we could win!

I’ll begin with a “maturity” issue where I’ll try to play “devil’s advocate” for both Microsoft and VMware. It’s quite common to hear statements (usually from Microsoft and VMware “competitors” who managed to download and compile some ZFS fork and craft some reasonably good-looking HTML5 GUI, so now they call themselves an uber-exciting hyperconverged or storage startup LOL) about Microsoft not understanding storage and VMware never having been a storage company itself, which means they have no traces of storage in their companies’ DNA, both companies having a V1.0 version of their products, and so on. I’d say neither Microsoft nor VMware is a small company, and they take the Software-Defined Storage challenge seriously for sure: teams are extraordinarily talented, partners are well-engaged, huge money is bet on success, so everything others spent years on could be done by Microsoft and VMware in a very short time (2-3 years, I think). These guys may indeed be a bit late to the SDS party (well, big guys never were good at true innovation, disruption strategies belong to lean startups, and it’s a law stronger than the law of gravity, IMHO), but they catch up very fast, and while their products may have some “holes” in the feature line (who doesn’t have them?), everything they put into an RTM-labeled version works well for sure! Finally, if some particular niche isn’t served well by them, maybe it is because VMware and Microsoft don’t really see this niche as a valuable source of income worth spending time on? In a nutshell: everything I’ll compare below is assumed to be of a similar build quality, no FUD for sure!

Now comes one very important assumption. While the topic covers VSAN from StarWind, Microsoft Storage Spaces Direct (Why didn’t Microsoft give it a “Virtual SAN” name as well? It would have saved us all A LOT of time and killed so much confusion!), and VMware Virtual SAN, I’ll extend software-only offerings to so-called “ready nodes”, which are branded servers with a pre-installed hypervisor (Microsoft Hyper-V or VMware vSphere) and a matching Software-Defined Storage solution from the listed (SDS in this context) vendors. Software-Defined Storage eventually evolved into hyperconvergence, and hyperconvergence is now mostly considered as “ready nodes” rather than software alone, and here’s why: SDS and hyperconvergence allowed reducing implementation costs (CapEx) and maintenance costs (OpEx), first by not buying any “big named” SAN or NAS (Software-Defined Storage did it), and later by not buying any DIY (Do-It-Yourself) SAN or NAS (hyperconvergence now) at all. “Ready nodes” lift hyperconvergence and the associated CapEx and OpEx savings to yet another level and save even more money upfront: the hyperconverged vendor shares some of its major hardware discount with the end user in order to make hyperconverged infrastructure more affordable, later covers all support for the cluster on its own, and eliminates the need for a “middle man” or MSP which a smaller SMB shop had to hire to support its vSphere or Hyper-V installation before. Software-Defined Storage → Hyperconvergence → HC “ready nodes”: this is what the whole virtualization evolution looks like for a typical SMB. StarWind has “ready nodes” called HCA (Hyper-Converged Appliance), VMware has them as well (VSAN “ready nodes” or VxRack from parent Dell company), and Microsoft delivers similar solutions through the network of partners.

I’ve decided to separate the customers by size and by associated most typical scenarios instead of focusing on features and some products limitations because I believe it’s hard to decide if a lack of f.e. deduplication is a deal breaker or not for somebody. My separation is still not perfect, no line drawn in the sand (and if there’s one, it’s definitely blurred), but most starting points are there.

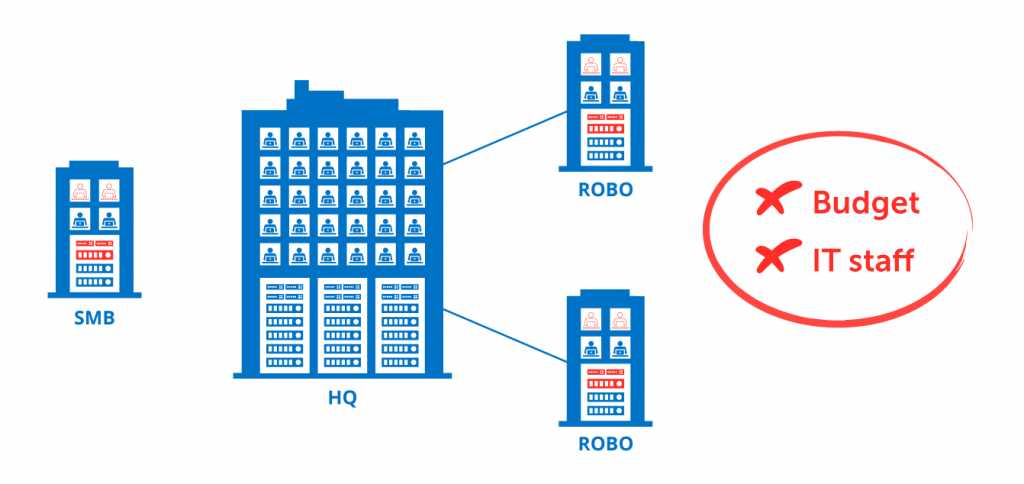

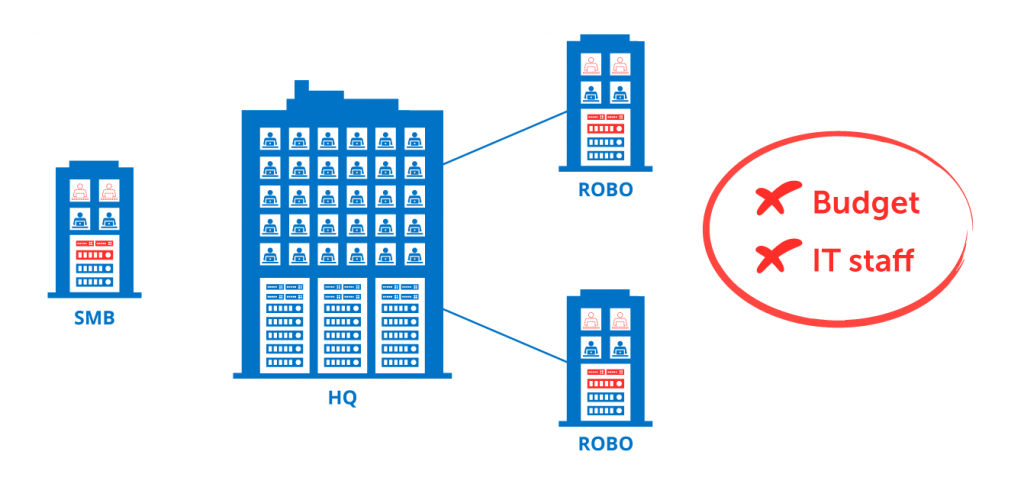

SMB and ROBO typically have limited budgets and IT staff

VMware Virtual SAN does exist in a two-node version called “ROBO Edition”, but it requires a third, data-less witness host somewhere (private or public cloud), and it’s licensed “per-VM-pack” rather than “per-CPU-socket”. Microsoft currently doesn’t support a two-node Storage Spaces Direct setup, but even if it did, its data distribution policy doesn’t allow losing more than a single hard disk even in a three-node two-way replicated S2D cluster. Moreover, Storage Spaces Direct requires a Datacenter license, making the resulting solution extremely expensive and total overkill for smaller deployments. StarWind doesn’t need any witness entities (it can utilize a heartbeat network) for a pure two-node setup, doesn’t need network switches (it doesn’t use broadcast and multicast messages like VMware Virtual SAN currently does), and doesn’t require any specially licensed Windows host (even free Hyper-V Server is absolutely OK for us, Windows Server Standard is PERFECT). The small overhead of the VM-based solution on VMware can be safely ignored (StarWind runs as a part of the hypervisor on Hyper-V, but requires a “controller” VM for vSphere) because IOPS requirements are low in this scenario. Finally, StarWind software itself as well as the hyperconverged appliances come with 24/7 support, so a shortage or a complete lack of on-site human resources is mitigated.
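
To see why a plain two-node cluster usually needs some kind of tie-breaker, here is a minimal sketch of generic majority voting. It is an illustration only: it is not how VMware Virtual SAN or StarWind actually implement their logic, the heartbeat-network approach mentioned above is a different mechanism that this sketch does not model, and the function name `has_quorum` is made up for the example.

```python
def has_quorum(votes_alive: int, votes_total: int) -> bool:
    """Return True when a strict majority of all votes is still reachable.

    Generic majority rule, used here purely for illustration.
    """
    return 2 * votes_alive > votes_total


# Two data nodes, no witness: after the link between them fails,
# each node can only see its own vote (1 of 2), so neither side has a majority.
two_node_partition = has_quorum(votes_alive=1, votes_total=2)

# Two data nodes plus a data-less witness: the node that still reaches
# the witness holds 2 of 3 votes and may keep serving storage.
with_witness = has_quorum(votes_alive=2, votes_total=3)

print(two_node_partition, with_witness)
```

Under this simple model the witness exists only to cast a third vote, which is why it can be data-less and live in a private or public cloud.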

SMB and ROBO benefit from well-supported solutions with minimum hardware

All the points mentioned above make StarWind and StarWind-based hyperconverged appliances a very natural choice here, and we’ll provide either our own Virtual SAN software alone to “fuel” virtual shared storage for the commodity servers the customer already has, or we’ll ship a complete hyperconverged appliance set with our VSAN from StarWind being used as a “data mover” layer. Our “ready nodes” are still more affordable than DIY (Do-It-Yourself) kits (StarWind has a hardware discount which is split with the customer, whereas the customer would have to spend literally millions of dollars to get a comparable discount rate), and our “ready nodes” still come with 24/7 support, while DIY is basically a “self-supported” solution.

For these particular customers Microsoft Storage Spaces Direct and VMware Virtual SAN are the best fit! Windows Server Datacenter licensing makes sense because of the number of VMs alone, so Storage Spaces Direct is there automagically, and the VMware Virtual SAN cost overhead is split between many VMs running on the same host and is, therefore, reasonable. For these guys StarWind isn’t offering any paid software for primary storage (within this cluster, I mean), but we’ll be happy to sell StarWind-branded “ready nodes” (just to drive server hardware costs down a little bit), where either Microsoft S2D or VMware VSAN will be used as a “data mover”. We’ll still use our own Virtual SAN to plug some little holes in Microsoft and VMware products in order to add even more performance, increase storage efficiency, and add some more flexibility, as StarWind isn’t forced to support one storage protocol while not supporting another, for example. For Microsoft we’ll add a RAM-based write-back cache (Microsoft’s own CSV RAM cache is read-only and limited in size), 4KB in-line deduplication (Microsoft Storage Spaces Direct requires ReFS, and ReFS has no dedupe), a log-structured file system, and a set of protocols Microsoft isn’t offering out-of-the-box (HA iSCSI including RDMA iSER and VVols extensions, failover NFS etc). VMware likewise has no RAM-based write-back cache (flash only), no dedupe for spinning disk (that means VMware dedupe is for primary storage, and the backup scenario isn’t served), and no block and file protocols (iSCSI, NFS, and SMB3) the customer could deploy immediately out-of-the-box (VMware VSAN is a “private party”, so only VMs have access to the VSAN-managed distributed storage pool). Last but not least, we’ll still wrap everything the customer gets into our own 24/7 support, so that we, rather than the customer, own the whole support and maintenance thing.

To make a long story short: StarWind has the same unchanged “hyperconverged appliance still being cheaper than a do-it-yourself kit but all covered by our premium 24/7 support your DIY doesn’t have” offer. The only difference is that for the “data mover” we’ll use Microsoft’s and VMware’s own SDS solutions, keeping our own software as a complementary SKU in order to “enhance” them and help differentiate us from other vendors shipping the same Dell or HP servers and the same S2D or VSAN based HCI SKUs.

Both Microsoft Storage Spaces Direct and VMware Virtual SAN would really be a bad choice here. The first reason is strictly financial: for a hyperconverged environment it’s simply too expensive to pay $5,000+ of licensing fees for every single host if there are too many of them. 20+ hosts will bring an associated $100,000+ price tag with them, and that is the MSRP of an exceptionally well-performing all-flash SAN covered with a super-strict SLA (Service Level Agreement) and delivering a performance and feature set Microsoft and VMware can only dream about. Tiny remark: Microsoft licensing has the benefit of an unlimited number of licensed Windows Server VMs included, but if a customer already has VMs licensed (Windows Server licenses purchased already or BYOL performed), this argument is gone, and Storage Spaces Direct and VMware Virtual SAN play on equal terms. The second reason is a hybrid of financial and architectural: if compute and storage tiers need to be sized separately from each other, the whole hyperconverged concept fades, and a more classic “compute and storage segregated” design should be deployed instead. Utilizing expensive Windows Server Datacenter licenses to build a storage-only Scale-Out File Server tier is pretty much pointless, just because dedicated storage-only server hardware plus Windows Server Datacenter licenses will outweigh the price of an all-flash SAN while still not being able to catch up with the all-flash SAN’s IOPS, features and included SLA. VMware Virtual SAN simply doesn’t support a non-hyperconverged architecture, as it has to run on every single hypervisor host where running VMs consume VSAN-managed virtual shared storage, which means “compute only” data-less VSAN-licensed nodes are supported while “storage only” VSAN-unlicensed nodes aren’t at all. The third reason is, again, architectural: it’s about multi-tenant environments where both vSphere and Hyper-V are deployed in various proportions.
VMware Virtual SAN doesn’t provide any way to export managed storage, so anybody (including a Hyper-V cluster, of course) outside of a VSAN cluster is out of the game immediately. Microsoft can expose only SMB3 reasonably well, while VMware doesn’t “understand” this protocol, asking for the more commonly adopted iSCSI and NFS, and Microsoft isn’t really good with either of them. This means Microsoft and VMware simply “talk different languages”, and, instead of having a single pool of storage being shared and consumed by either a Microsoft or a VMware cluster, the customer needs to maintain at least two separate storage pools, one for Microsoft and one for VMware. This brings the just recently buried idea of a unified central all-flash SAN back again, because even if CapEx were OK for two separate “VMware only” and “Microsoft only” solutions, OpEx would go through the roof for sure, and resource utilization would suck badly: “islands of storage” are always bad compared to a “single unified pool”, which is good.
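
The licensing arithmetic behind the first reason can be sketched in a few lines. This is only a back-of-the-envelope model: it takes the “$5,000+ per host” figure quoted above as a flat fee, ignores discounts, editions and support contracts, and the function name is made up for the example.

```python
def per_host_licensing_total(hosts: int, fee_per_host: float) -> float:
    """Total licensing bill when every host in the cluster must be licensed."""
    return hosts * fee_per_host


# Flat $5,000 per host, the lower bound quoted in the text.
FEE_PER_HOST = 5_000

for hosts in (2, 8, 20, 40):
    total = per_host_licensing_total(hosts, FEE_PER_HOST)
    print(f"{hosts:>2} hosts: ${total:,.0f}")
```

The bill grows linearly with the host count, which is why per-host licensing that looks acceptable for a small cluster becomes hard to justify at 20 hosts and beyond.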

StarWind here can offer our Virtual SAN software to run either on a non-symmetric hyperconverged cluster (all nodes provide compute power, but not all of them provide storage at the same time; say, you have a 40-node VMware vSphere cluster where only 8 nodes actually provide shared storage to the others, sort of a hyperconverged and non-hyperconverged mix) and provide it with virtual shared storage, or on a “compute and storage segregated” cluster, “powering” the storage-only tier. Unlike Microsoft and VMware, we don’t expect our software to be licensed on every single node of a cluster (or of its storage-only “sibling” part called Microsoft SOFS, if the “compute and storage segregated” model is utilized). We license consumed capacity, and how many nodes of a hyperconverged cluster actually do provide exposed storage or how many instances of a StarWind service are running and where… we don’t actually care about that! The customer now has an excellent ability to pay for exactly the storage resources consumed and is the one who decides which servers do compute, which do storage, and which do both compute and storage at the same time. Flexibility! Alternatively, we can supply the customer with hyperconverged or storage-only “ready nodes” in any combination because we not only ship HCI, but also have “storage only” SA (Storage Appliance) “building blocks”. We can provide a hyperconverged N-node cluster for just a fraction of the typical licensing costs of a VMware or Microsoft N-node cluster, and we can provide a fully packed all-flash SAN equivalent to feed shared storage to a hypervisor cluster as well. Everything is still way cheaper than trying to buy and assemble it yourself, and everything is still covered with our 24/7 support, just because after we start running the storage part of the cluster, we immediately start owning the whole big thing.
As you can see, StarWind is a very natural choice here, both in terms of hardware and as a “data mover” virtual shared storage layer if the customer is lucky (unlucky?) enough to have servers purchased already.
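
The difference between per-node and capacity-based licensing can be illustrated with the 40-node example above. The sketch below is hypothetical: the 100 TB of consumed capacity is a placeholder, the flat $5,000 per-node fee is borrowed from the earlier licensing discussion, and no actual StarWind, Microsoft or VMware price list is implied. Instead of inventing a per-TB price, it computes the break-even price at which both models would cost the same.

```python
from dataclasses import dataclass


@dataclass
class Cluster:
    total_nodes: int      # every node runs compute
    storage_nodes: int    # subset that also exposes storage
    consumed_tb: float    # capacity actually consumed (hypothetical value)


def per_node_cost(cluster: Cluster, fee_per_node: float) -> float:
    """Per-node model: every node in the cluster must be licensed."""
    return cluster.total_nodes * fee_per_node


def capacity_cost(cluster: Cluster, price_per_tb: float) -> float:
    """Capacity model: depends only on consumed TB, not on node counts."""
    return cluster.consumed_tb * price_per_tb


def break_even_price_per_tb(cluster: Cluster, fee_per_node: float) -> float:
    """Per-TB price at which both models cost the same."""
    return per_node_cost(cluster, fee_per_node) / cluster.consumed_tb


# 40-node cluster where only 8 nodes provide storage (example from the text);
# 100 TB consumed is an assumed figure for illustration.
cluster = Cluster(total_nodes=40, storage_nodes=8, consumed_tb=100.0)

print(per_node_cost(cluster, 5_000))
print(break_even_price_per_tb(cluster, 5_000))
```

Note that `storage_nodes` never enters the capacity formula: under a consumed-capacity model it makes no difference whether 8 or 40 nodes expose the storage, which is exactly the flexibility argument made above.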

VMware and Microsoft aren’t any good here. The reasons are either technical (VMware VSAN can’t be used on a non-virtualized SQL Server cluster as it doesn’t use vSphere, Microsoft can’t provide virtual shared storage to Oracle RAC as it can’t talk SMB3, VMware Virtual SAN and Storage Spaces Direct scale well with many small consumers but they don’t really shine when one or several consumers need all the IOPS from a single big unified namespace, etc.) or financial (licensing Windows Server Datacenter on every host of the cluster just for a few VMs or for a storage-only tier is a waste of money, as we discussed above).

StarWind is a perfect choice here both in terms of software (the so-called “data mover” to create a virtual shared storage pool) installed on top of a server set the customer already has and complete “ready nodes” for HCI or storage-only infrastructure. StarWind supports non-virtualized Windows Server environments, properly supports all possible storage protocols, and can provide high performance (with a decent amount of RAM even matching in-memory TPC) shared storage to a single non-virtualized or several virtualized consumers (VMs?), thanks to StarWind’s aggressive RAM write-back cache, storage optionally pinned to RAM completely, in-line 4KB dedupe, log-structuring, and the data locality concepts used. In the case of SQL Server (virtualized or not), instead of deploying a very expensive SQL Server Enterprise to build AlwaysOn Availability Groups and utilizing “in-memory” DBs, the customer can use a much cheaper SQL Server Standard and AlwaysOn Failover Clustered Instances put on top of the “in-memory” storage StarWind provides. As a result, the customer will get a much better $/TPC ratio with very similar or even better uptime metrics. The same goes for Oracle RAC and its need for an expensive Enterprise edition plus special “in-memory” licenses: StarWind replaces all of these requirements with the old licensing scheme re-used plus the in-memory storage we provide. The same goes for SAP R/3 vs SAP HANA. In case hardware is purchased from StarWind, we’ll also bring in our discount to make new hardware more affordable to the customer, and we’ll keep everything wrapped in our 24/7 support whichever route the customer chooses: software-only or HCA/storage one.

Conclusion: VSAN from StarWind is a complementary rather than competitive solution to Microsoft Storage Spaces Direct and VMware Virtual SAN. If we see that our shared customer doesn’t need Microsoft S2D or VMware VSAN, we’ll use our own software and that’s it; if we see that the customer is going to benefit from a combined solution, we’ll provide them with a stack where Microsoft S2D (or VMware VSAN) will co-exist with VSAN from StarWind on the same hardware. Technically, what we do here at StarWind is “plugging holes” in Microsoft and VMware products and positioning strategy in terms of features they miss, as well as bringing the whole hyperconvergence to another level by making good quality hardware even more affordable and setting 24/7 support as a routine and a “checkbox” feature. Yes, we’ll split our hardware discount with you to make our “ready nodes” even cheaper than anything you would build yourself on a DIY concept, and, yes, we’ll “babysit” your clusters for you, so you don’t need to do anything yourself!
