You may know that Transparent Page Sharing (TPS), the memory page deduplication technology, becomes useless with large memory pages (it’s even disabled in the latest versions of VMware vSphere). However, this doesn’t mean that TPS is headed for the trash bin: when the host server runs short of resources, ESXi may break large pages into small ones and deduplicate them afterwards. The large pages are prepared for deduplication in advance: once the memory load reaches a certain threshold, the large pages are broken into small ones, and when the load peaks, a forced deduplication cycle is triggered.

A more granular TPS control model was introduced in the latest VMware vSphere versions, including ESXi 6.0. It can be applied to separate groups of VMs (for example, servers that process the same data, where duplicate pages are common). This mechanism is called salting.

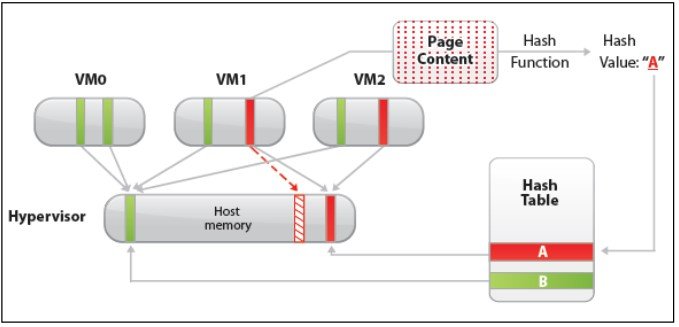

TPS hashes VM memory pages and then compares the hashes to find matching pages. When hashes match, a bit-by-bit comparison is performed to rule out false deduplication (two different pages can theoretically produce the same hash).

If the pages match completely, the memory page table is updated so that the entry for the second page simply points to the first one, and the actual duplicate is removed.

Any attempt by a VM to write to such a page creates a physical copy via the copy-on-write (CoW) mechanism. This copy is no longer shared: the writing VM uses its own private copy from then on.
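The hash, compare, share and copy-on-write steps described above can be sketched as a toy model. This is only an illustration under assumptions: the `PageStore` class, its method names and the use of SHA-1 are hypothetical and do not reflect how ESXi implements TPS internally.

```python
import hashlib


class PageStore:
    """Toy model of page sharing (hypothetical, not ESXi internals)."""

    def __init__(self):
        self.frames = {}    # frame id -> [page content, reference count]
        self.by_hash = {}   # page hash -> list of frame ids with that hash
        self.next_id = 0

    @staticmethod
    def _digest(data):
        # Assumption: SHA-1 stands in for whatever hash the hypervisor uses.
        return hashlib.sha1(data).digest()

    def _new_frame(self, data):
        fid = self.next_id
        self.next_id += 1
        self.frames[fid] = [data, 1]
        self.by_hash.setdefault(self._digest(data), []).append(fid)
        return fid

    def map_page(self, data):
        """Return a frame for data, sharing an existing identical frame if found."""
        for fid in self.by_hash.get(self._digest(data), []):
            # A hash match is only a hint; the full comparison rules out collisions.
            if self.frames[fid][0] == data:
                self.frames[fid][1] += 1
                return fid
        return self._new_frame(data)

    def write(self, fid, data):
        """Copy-on-write: a shared frame is never modified in place."""
        content, refs = self.frames[fid]
        if refs > 1:
            self.frames[fid][1] = refs - 1
            return self._new_frame(data)  # the writer gets a private copy
        # Sole owner: update in place and re-index under the new hash.
        self.by_hash[self._digest(content)].remove(fid)
        self.frames[fid][0] = data
        self.by_hash.setdefault(self._digest(data), []).append(fid)
        return fid
```

In this sketch, mapping two identical 4 KB pages returns the same frame id with a reference count of 2, and a later `write` to that frame hands the writer a new frame while the original content stays untouched for the other owner.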

In the latest VMware vSphere versions, the TPS mechanism works differently. It uses salting by default, with the VM’s UUID (the vc.uuid value) as the salt, since it is always unique within a particular vCenter Server. Before the page hash is computed, the salt is added to the initial data, and because this salt is the same only within a given VM, TPS deduplication is performed at the VM level (Intra-VM TPS).
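The effect of salting on matching can be shown with a minimal sketch. It assumes the salt is simply prepended to the page content before hashing; the `page_hash` helper, the SHA-256 choice and the salt strings are hypothetical and only illustrate the idea, not the actual ESXi algorithm.

```python
import hashlib


def page_hash(page: bytes, salt: bytes = b"") -> bytes:
    """Hash a page together with a salt; an empty salt means plain content hashing."""
    return hashlib.sha256(salt + page).digest()


page = b"\x00" * 4096  # the same 4 KB of content present in several places

# Same content and same salt (pages of one VM): the hashes match,
# so the pages are candidates for sharing.
same_vm = page_hash(page, b"vm-a") == page_hash(page, b"vm-a")

# Same content but different salts (pages of two VMs): the hashes differ,
# so no match is found and nothing is shared between the VMs.
cross_vm = page_hash(page, b"vm-a") == page_hash(page, b"vm-b")
```

Here `same_vm` is true and `cross_vm` is false, which is the Intra-VM behavior described above: identical pages are found only when they carry the same salt.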

The Salting mechanism is utilized by default, starting from the following hypervisor versions:

- ESXi 5.0 Patch ESXi500-201502001

- ESXi 5.1 Update 3

- ESXi 5.5 Patch ESXi550-201501001

- ESXi 6.0

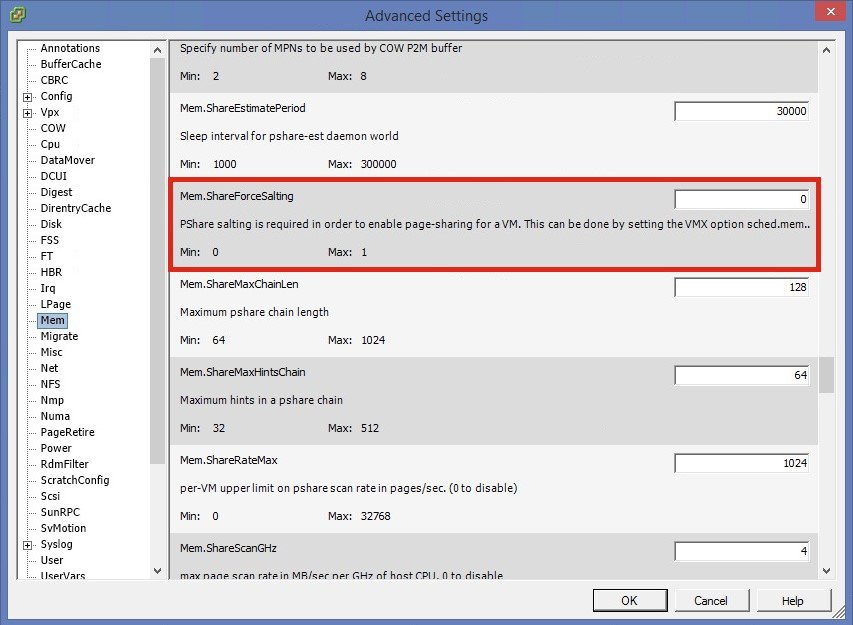

The mechanism is controlled from the Advanced Settings of the VMware ESXi host. The Mem.ShareForceSalting parameter is set to “2” by default, meaning that salting is on and TPS works in Intra-VM mode.

(Source: vblog.io)

If we set it to “0”, as seen in the screenshot above, the salt is no longer added to the hash when the TPS algorithm runs, and sharing starts working across every VM on the host (Inter-VM TPS).

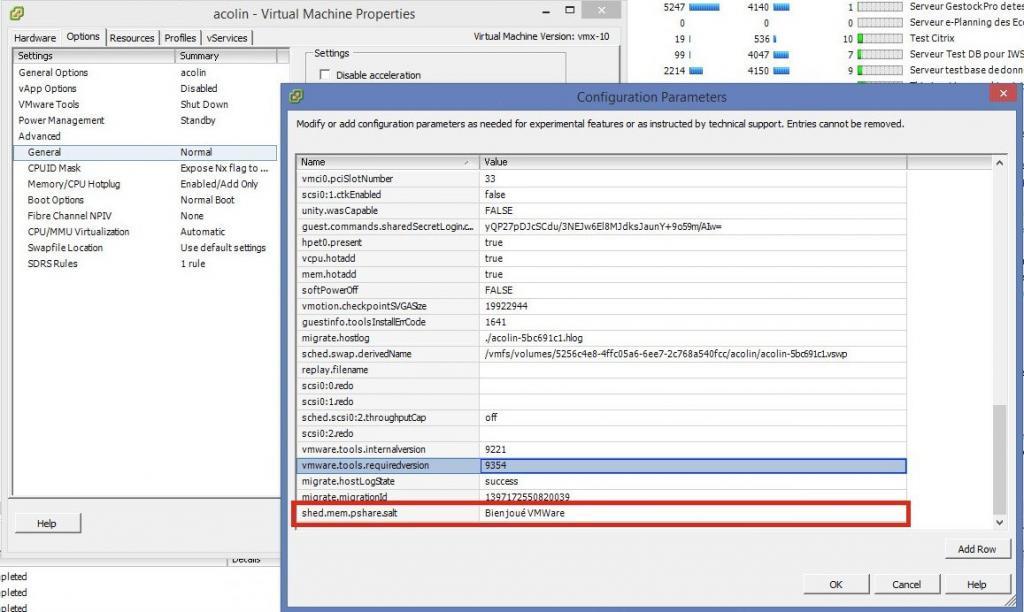

TPS can also be enabled for a separate group of VMs on the ESXi host by giving those VMs the same salt. To achieve this, the sched.mem.pshare.salt parameter must be set to the same value in the advanced settings of each VM (that is, in its vmx file).

In this case, Mem.ShareForceSalting must be set to “1” or “2”. It doesn’t really matter which, except that with “1”, Inter-VM sharing is also turned on for VMs that have no salt configured.
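The interaction between the host setting and the per-VM salt can be summarized in a short sketch. It encodes the rules as described in this article; the function names, the `(salt, uuid)` tuple layout and the use of an empty string for "no salt" are assumptions made for illustration only.

```python
from typing import Optional, Tuple


def effective_salt(share_force_salting: int, vm_salt: Optional[str], vm_uuid: str) -> str:
    """Return the salt a VM's pages would be hashed with (illustrative model).

    share_force_salting models the host's Mem.ShareForceSalting value,
    vm_salt models the VM's sched.mem.pshare.salt (None if not set),
    vm_uuid is a stand-in for the VM's unique identifier.
    """
    if share_force_salting not in (0, 1, 2):
        raise ValueError("this sketch only models the values 0, 1 and 2")
    if share_force_salting == 0:
        return ""            # salting off: every VM hashes pages the same way
    if vm_salt is not None:
        return vm_salt       # an explicit per-VM salt is used with both 1 and 2
    if share_force_salting == 1:
        return ""            # no salt configured: the VM joins the unsalted pool
    return vm_uuid           # setting 2, no salt configured: unique per VM


def can_share(setting: int, vm_a: Tuple[Optional[str], str], vm_b: Tuple[Optional[str], str]) -> bool:
    """Two VMs, each given as (salt, uuid), can share pages only if their salts match."""
    return effective_salt(setting, *vm_a) == effective_salt(setting, *vm_b)
```

For example, `can_share(2, ("web", "u1"), ("web", "u2"))` is true because both VMs carry the salt `"web"`, while two VMs without a salt share pages under setting “1” but not under setting “2”.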

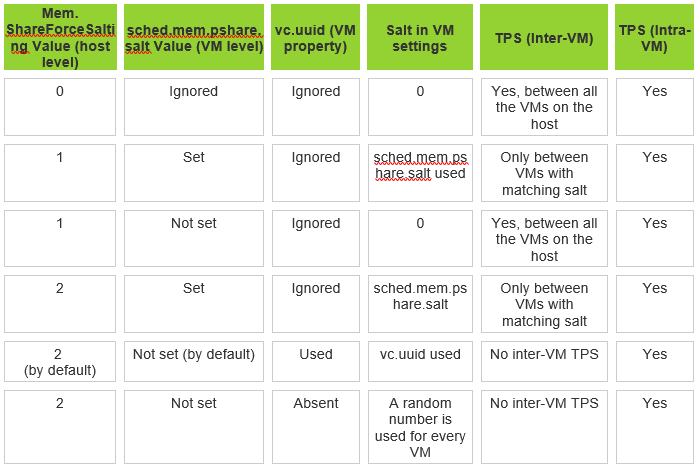

The chart below summarizes how TPS behaves for different combinations of the host’s Mem.ShareForceSalting setting and the VMs’ sched.mem.pshare.salt setting.