Not too long ago, VMware released its newest update, VMware vSphere 7 Update 3, which became available for download right before its latest conference, the online VMworld 2021. The conference focused mainly on VMware’s server and desktop product lines, as well as on the Tanzu ecosystem and its development roadmap. Judging by what was presented, there’s a lot of exciting stuff ahead. Today, however, we’re going to talk about the three main announcements for the VMware vSphere platform, the foundation of modern data centers.

So, here are the most noteworthy announcements from VMworld 2021:

Project Capitola

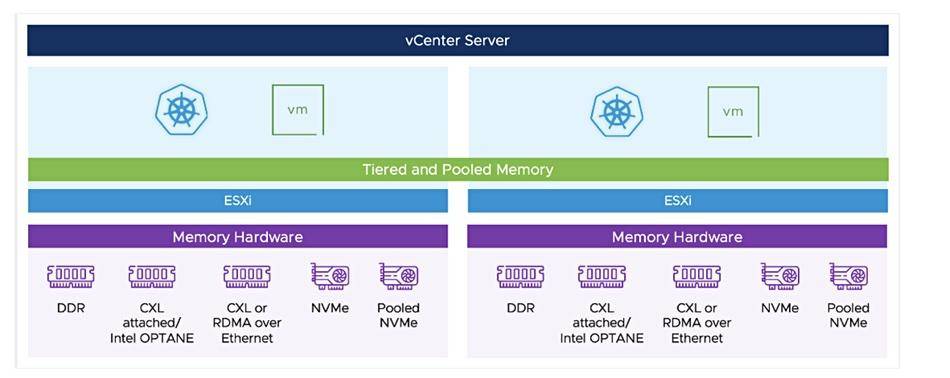

This is probably the most promising of all the announced vSphere features. As you know, the server market has long been developing a broad, multi-tier memory ecosystem that includes DRAM, SCM (Optane and Z-SSD), CXL memory modules, PMEM, and NVMe. Just as it virtualizes and aggregates networking with NSX, compute with vSphere, and storage with vSAN, VMware has now introduced a memory aggregation and virtualization layer: Project Capitola.

Basically, it’s software-defined memory for the cloud (on-premises or public), managed by vCenter at the vSphere cluster level:

All memory available on the virtualization hosts in the cluster is aggregated into a non-uniform memory access (NUMA) memory pool. This pool is then divided into tiers according to the performance characteristics (price/performance points) of the hardware that backs it.

All of this makes it possible to allocate memory to virtual machines dynamically, within the policies defined for each tier. Moreover, Project Capitola works with most of the dynamic optimization techniques currently used in virtual data centers, such as Distributed Resource Scheduler (DRS).
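To make the tiering idea more concrete, here is a toy Python model of a cluster-wide pool that aggregates per-host memory into tiers and serves VM requests according to a per-VM tier policy. This is not Capitola’s API or its allocation algorithm; the class names, tier names, capacities, and the "fastest allowed tier first, then spill to the next one" policy are all hypothetical, chosen only to illustrate the concept.

```python
from dataclasses import dataclass, field

# Hypothetical tier names, ordered from fastest/most expensive to slowest/cheapest.
TIER_ORDER = ["dram", "pmem", "nvme"]


@dataclass
class Host:
    name: str
    # Capacity in GB that this host contributes to each tier (hypothetical figures).
    capacity_gb: dict


@dataclass
class MemoryPool:
    """Toy model of a cluster-wide, tiered memory pool (not a VMware API)."""
    free_gb: dict = field(default_factory=dict)

    @classmethod
    def aggregate(cls, hosts):
        # Sum every host's per-tier capacity into one cluster-level pool.
        free = {tier: 0 for tier in TIER_ORDER}
        for host in hosts:
            for tier, gb in host.capacity_gb.items():
                free[tier] += gb
        return cls(free)

    def allocate(self, vm_gb, allowed_tiers):
        """Place vm_gb across the tiers the VM's policy allows, fastest first.

        Returns a {tier: gb} placement, or raises MemoryError if the policy
        cannot be satisfied.
        """
        placement = {}
        remaining = vm_gb
        for tier in TIER_ORDER:
            if tier not in allowed_tiers or remaining == 0:
                continue
            take = min(remaining, self.free_gb[tier])
            if take:
                placement[tier] = take
                remaining -= take
        if remaining:
            raise MemoryError(f"policy {sorted(allowed_tiers)} cannot satisfy {vm_gb} GB")
        # Commit only once the whole request fits, so a failure leaves the pool unchanged.
        for tier, gb in placement.items():
            self.free_gb[tier] -= gb
        return placement


# Usage: two hypothetical hosts with different memory hardware.
hosts = [
    Host("esxi-01", {"dram": 512, "pmem": 1024}),
    Host("esxi-02", {"dram": 512, "nvme": 2048}),
]
pool = MemoryPool.aggregate(hosts)
print(pool.free_gb)                           # {'dram': 1024, 'pmem': 1024, 'nvme': 2048}
print(pool.allocate(1200, {"dram", "pmem"}))  # {'dram': 1024, 'pmem': 176}
```

The allocation is all-or-nothing on purpose: a VM whose policy can’t be met is rejected without consuming any capacity, which keeps the pool’s bookkeeping simple in this sketch.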

However, VMware isn’t going to release it all at once: memory management at the level of individual ESXi hosts comes first, followed by the same at the cluster level.

Naturally, such technology requires hardware support, which is why VMware has already agreed to cooperate with certain vendors. On the memory side, it is partnering with Intel, Micron, and Samsung. Cooperation is also planned with server manufacturers (Dell, HPE, Lenovo, Cisco) and service providers (Equinix).

That said, VMware’s primary partner in this project is Intel, which contributes technologies such as Intel Optane PMem on Intel Xeon platforms. If you’re looking for more, all the info can be found here:

- [MCL2384] Big Memory – An Industry Perspective on Customer Pain Points and Potential Solutions

- [MCL1453] Introducing VMware’s Project Capitola: Unbounding the “Memory Bound”

Project Monterey

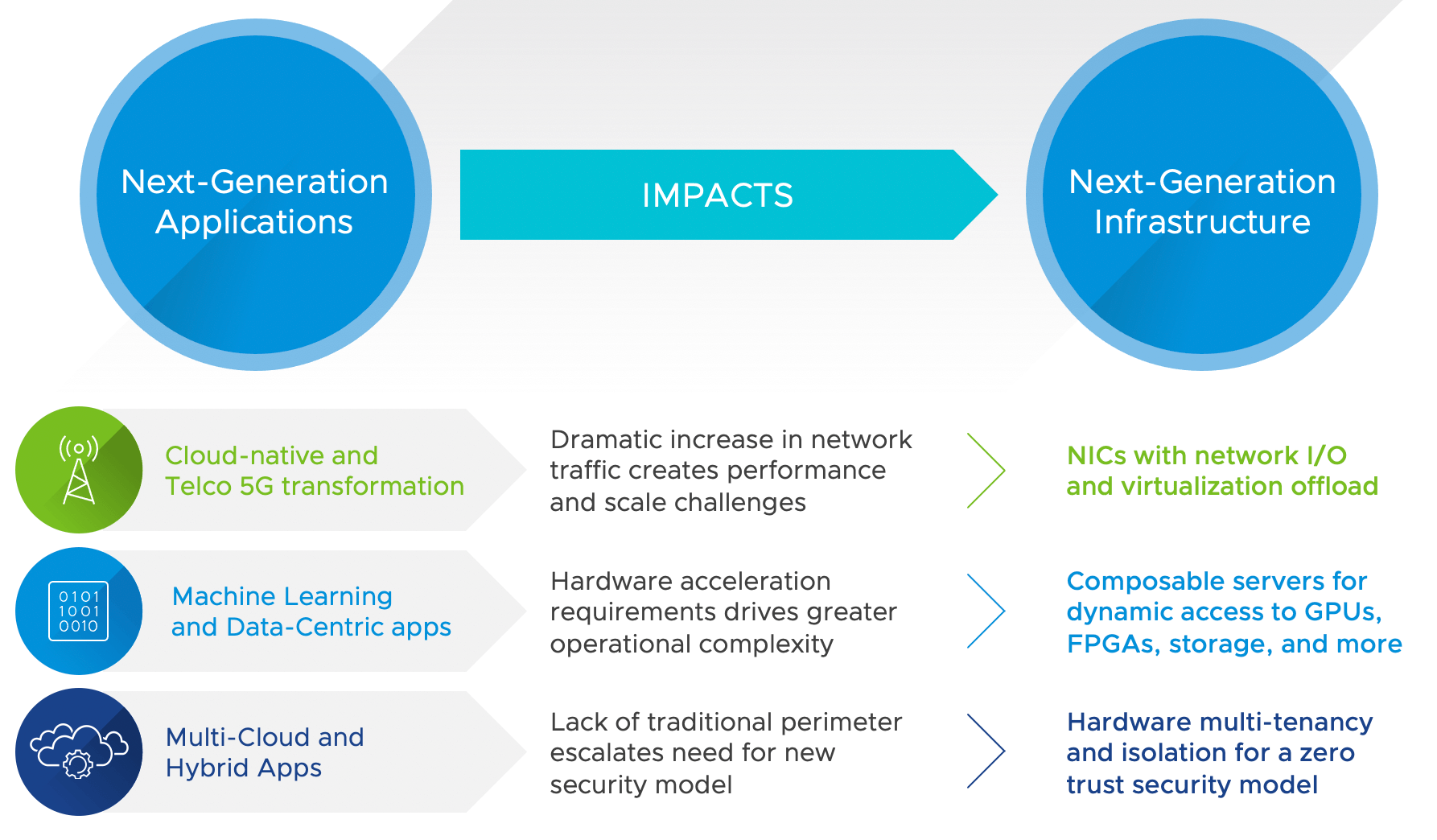

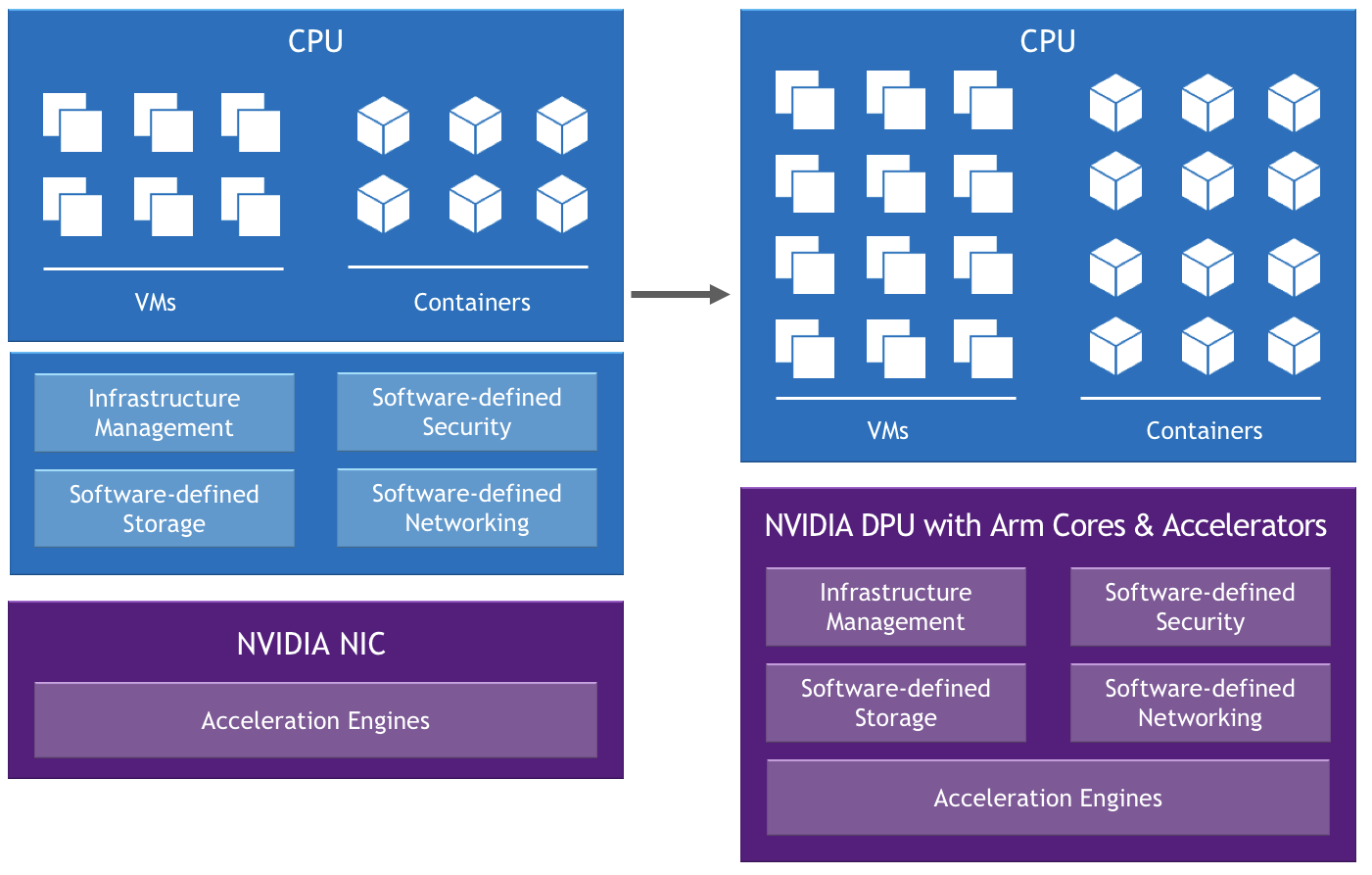

Unlike the previous item, Project Monterey isn’t exactly new: it was announced at VMworld 2020. For a long time, hardware vendors have put great effort into reducing CPU load by moving certain functions to dedicated server components (GPU modules, network cards with offload support, etc.) and isolating them as much as necessary and possible. Still, this new hardware architecture is of little use without major changes to the software platform itself.

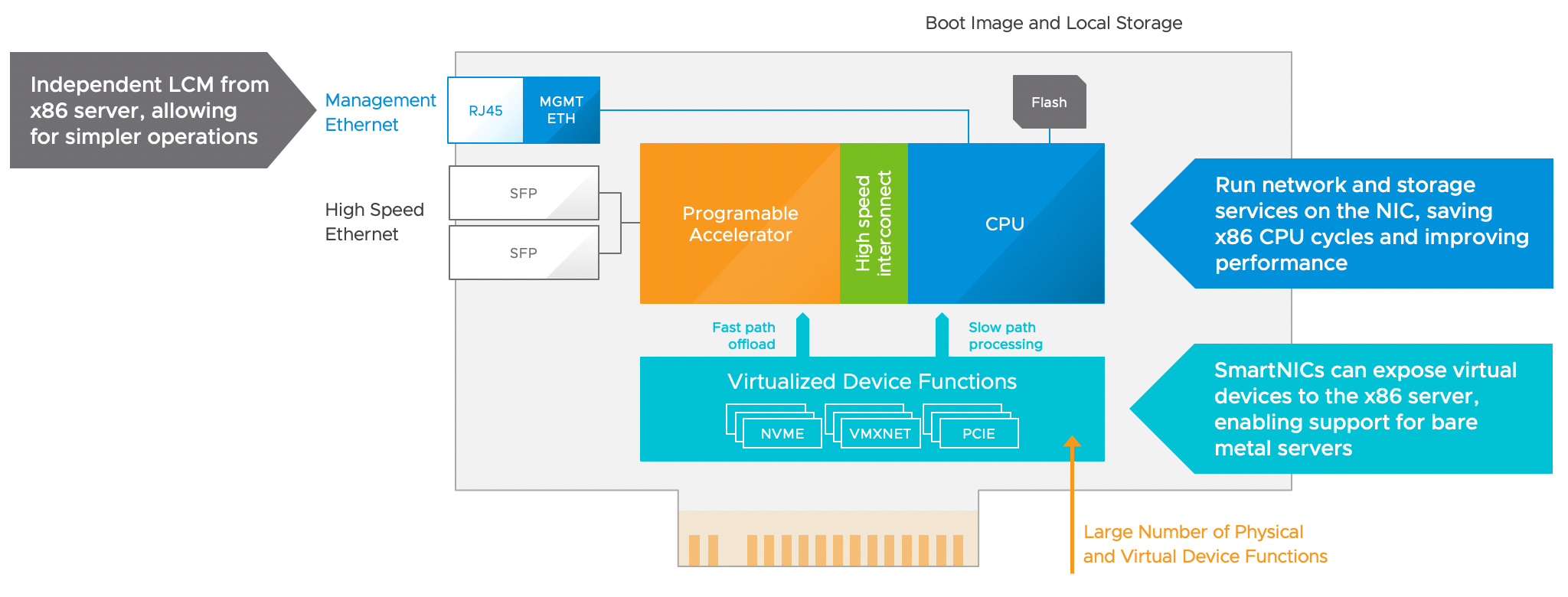

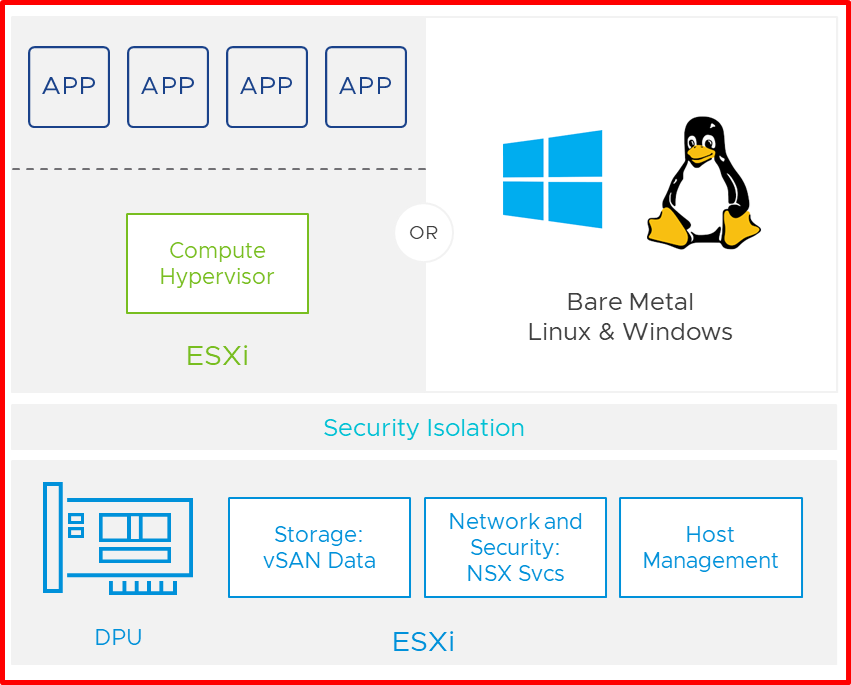

So, what actually is Project Monterey? It is a rework of the VCF architecture itself, designed to natively integrate new hardware capabilities with software components. For instance, SmartNICs provide high performance, a zero-trust security model, and easy maintenance within the VCF environment. Thanks to SmartNICs, VCF infrastructure can also support operating systems and applications on bare metal (with no hypervisor at all).

Essentially, a SmartNIC is a NIC with its own onboard CPU that takes over the core infrastructure service functions: networking, storage, and management of the host itself. Since SmartNICs are currently built mainly on Arm processors, VMware will have to bring ESXi Arm Edition from its current Tech Preview status to a vetted, enterprise-ready product.

Here are the three main things you need to know about this solution:

- Support for offloading complex network functions to the hardware level, which increases throughput and reduces latency.

- Unified operations for all apps, including bare-metal operating systems.

- Zero-trust security model that isolates applications without reducing performance (if the main ESXi host is compromised, the DPU that manages it can detect and eliminate the threat).

In essence, Monterey is a continuation of the direction set by Project Pacific, only not in terms of containers on virtual infrastructure, but from the hardware side, for VMware Cloud Foundation (VCF) infrastructure.

After announcing the initiative last year, VMware expanded its partnerships with hardware vendors (Intel, NVIDIA, and Pensando) and OEM server manufacturers (Dell Technologies, HPE, and Lenovo). It has already launched an Early Access Program for large enterprise customers. Within this program, VMware customers can test various aspects of the technology in their own data centers as a pilot project, defining their goals and expectations and discussing them with the VMware team.

The future of Project Monterey was widely discussed at the recent VMworld as well. The solution responds to growing security threats in large hybrid enterprise environments, where you must protect not only your own data center but the entire integrated cloud environment spanning the service provider and the company itself. At the same time, the load that infrastructure services place on computing resources is growing exponentially: management, security, and service systems consume more and more server CPU cycles, which leaves production workloads in the cloud with fewer and fewer resources.

Project Monterey addresses this by changing how service systems use resources. They get their own distributed infrastructure, tightly integrated with DPU modules (Data Processing Units, i.e., the same SmartNICs described above). These DPUs can take over part of the load of critical infrastructure services, such as networking, security, and storage, that is currently handled by the host CPUs. As a result, offloading these CPU cycles to dedicated hardware is expected to boost the performance of existing services.

The DPUs are also meant to become an additional control point for managing infrastructure services, alongside the existing x86 CPU infrastructure, which helps scale management functions. To do this, the DPU runs as a separate ESXi instance on the server, whose lifecycle is managed independently of the x86 environment (a second ESXi on the same server). This also lets the DPU support not only virtualization hosts but bare-metal workloads as well. This is a big deal for service providers, since they will finally be able to isolate service workload domains from production x86 systems.

Admittedly, this requires a lot of work from hardware vendors, and that work is happening right now.

NVIDIA has already started testing Project Monterey with organizations in the Early Access Program on Dell Technologies and Lenovo servers.

Project Monterey also appears set to change cluster architecture: clusters will be tied together through DPU modules that communicate with each other via a dedicated API. Infrastructure control will become more accessible, not only at the virtualization host level but on bare metal as well (which likewise requires a lot of work to adapt VCF infrastructure to the Tanzu product line).

Clearly, this is going to be a long road, since it requires a coordinated effort from several vendors and involves hardware changes. However, it also promises impressive results in terms of performance, security, and management of large enterprise environments.

Removal of SD Card/USB as a Standalone Boot Device Option

Experience shows that although booting ESXi from embedded SD cards reduces the load on a server’s disk infrastructure, its reliability is questionable. Intensive read/write activity leads to performance degradation and frequent failure of these devices. Simply put, SD cards are not built for such intense loads. As a result, VMware support is overwhelmed with requests to fix these problems (the same goes for USB boot devices for ESXi).

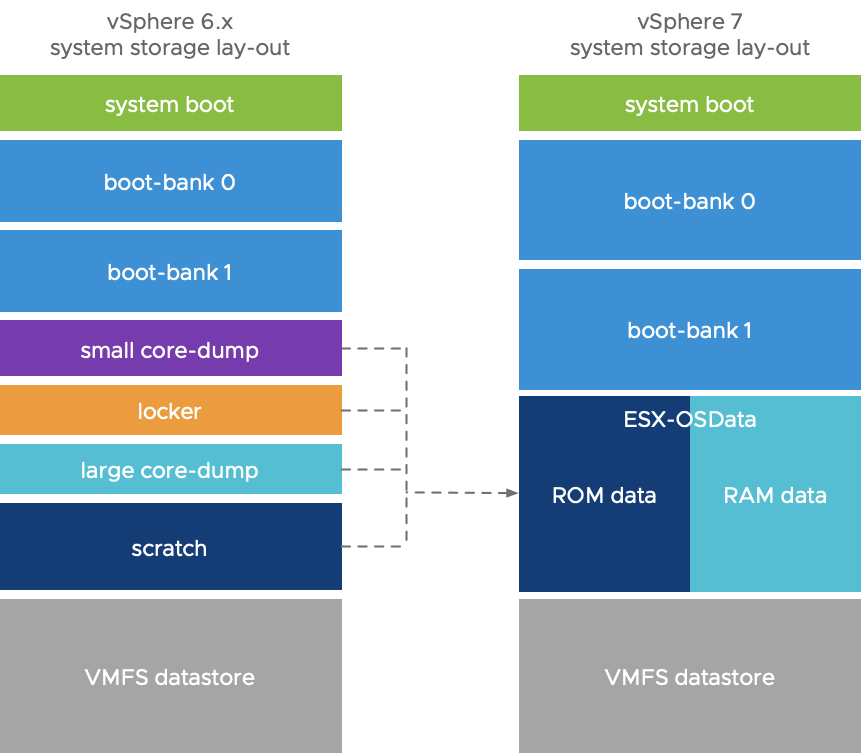

As any VMware vSphere 7 (or later) admin knows, the ESXi partition layout has changed significantly.

The new hypervisor version introduces a new set of system partitions that are now expandable, and several of them have been consolidated into the ESX-OSDATA partition. Only one partition, "system boot," has a fixed size of 100 MB; the sizes of the others depend on the size of the device ESXi is installed on. The ESX-OSDATA partition should reside on persistent storage with a long service life, since it receives a large volume of read/write operations. These requests vary in nature, for example device status queries, backup operations, and timestamp updates.

On SD cards, this leads to the following problems:

- Missing /bootbank: SD cards usually have a small queue depth, so some I/O requests time out and are never processed. This was largely fixed in vSphere 7 Update 2c, but that is hardly a complete solution, since similar problems keep occurring.

- VMFS-L locker partition corruption: under the enormous volume of read/write operations, SD cards regularly just stop working. VMware Tools, for example, generates a lot of such requests, although you can move VMware Tools to a RAM disk by enabling the ToolsRAMDisk option. This problem was also addressed in vSphere 7 Update 2c, but then again, that didn’t solve much.

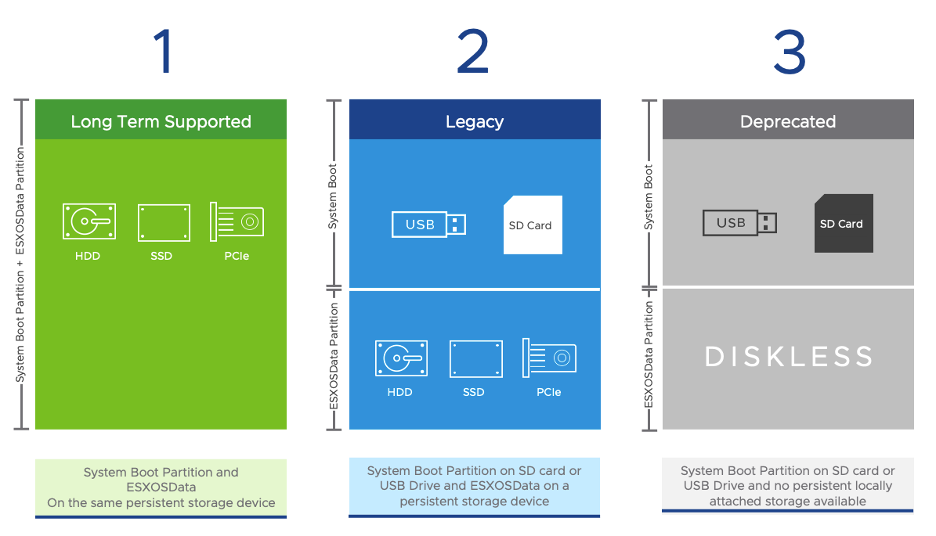

Eventually, in vSphere 7 Update 3, VMware marked placing ESX-OSDATA on SD cards and USB devices as "deprecated." The next major vSphere version will have no option to boot from an SD card alone. For now, you should upgrade hosts that boot from SD/USB devices to at least vSphere 7 Update 2c. I should also mention that a dual-SD setup is not a solution either, since it has all the same drawbacks.

In the future, SD/USB devices will be supported only as boot media of at least 8 GB, combined with a separate conventional disk that stores the ESX-OSDATA partition. In this configuration, you need to enable the ToolsRAMDisk option and place the /scratch partition on persistent storage (HDD, SSD, or FC disks), because you won’t be able to create /scratch on the SD/USB device (more details here). The /tmp partition (250 MB) can be relocated to a RAM disk.
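The guidance above boils down to a handful of rules, so here is a sketch of them as a Python pre-upgrade check. The `HostBootConfig` record, its field names, and the `(major, update, patch letter)` version tuple are hypothetical; the function does not query vSphere, so you would have to collect these facts from your hosts yourself (for example, with PowerCLI or esxcli). The 8 GB threshold, the persistent device types, the ToolsRAMDisk requirement, and the Update 2c minimum are taken from the text above.

```python
from dataclasses import dataclass

# Boot media the article treats as low-endurance.
FLASH_BOOT_TYPES = {"sd", "usb"}
# Persistent storage types the article lists as acceptable for OSDATA and /scratch.
PERSISTENT_TYPES = {"hdd", "ssd", "fc"}


@dataclass
class HostBootConfig:
    """Hypothetical description of a host's boot layout (not a vSphere API object)."""
    boot_device: str      # e.g. "sd", "usb", "ssd"
    boot_size_gb: int
    osdata_device: str    # device that holds ESX-OSDATA
    scratch_device: str   # device that holds /scratch
    tools_ramdisk: bool   # whether the ToolsRAMDisk option is enabled
    version: tuple        # hypothetical format: (7, 2, "c") means vSphere 7 Update 2c


def check_boot_config(cfg):
    """Return a list of problems with an SD/USB-booted host, per the rules above."""
    problems = []
    if cfg.boot_device not in FLASH_BOOT_TYPES:
        return problems  # booting from persistent storage: nothing to flag here
    if cfg.boot_size_gb < 8:
        problems.append("SD/USB boot media should be at least 8 GB")
    if cfg.osdata_device not in PERSISTENT_TYPES:
        problems.append("ESX-OSDATA must live on a separate persistent disk")
    if cfg.scratch_device not in PERSISTENT_TYPES:
        problems.append("/scratch must be on persistent storage (HDD/SSD/FC)")
    if not cfg.tools_ramdisk:
        problems.append("enable the ToolsRAMDisk option")
    if cfg.version < (7, 2, "c"):
        problems.append("upgrade to at least vSphere 7 Update 2c")
    return problems


# Usage: a legacy host with everything on one SD card.
legacy = HostBootConfig("sd", 8, "sd", "sd", False, (7, 2, "a"))
for problem in check_boot_config(legacy):
    print(problem)
```

Hosts that boot from an HDD, SSD, or SAN disk pass straight through, since the deprecation only concerns SD/USB boot media.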

In general, there are three ways to deploy ESXi, two of which are no longer recommended, so you should gradually move away from them.

Conclusion

That’s it for now! I’ve picked the highlights for you, but there’s much more to learn about VMware’s future plans; if you want the details, you can find them here. I hope this was useful!