INTRODUCTION

Some time ago, I wrote an article about backup storage media. Today, I’d like to talk about secondary storage.

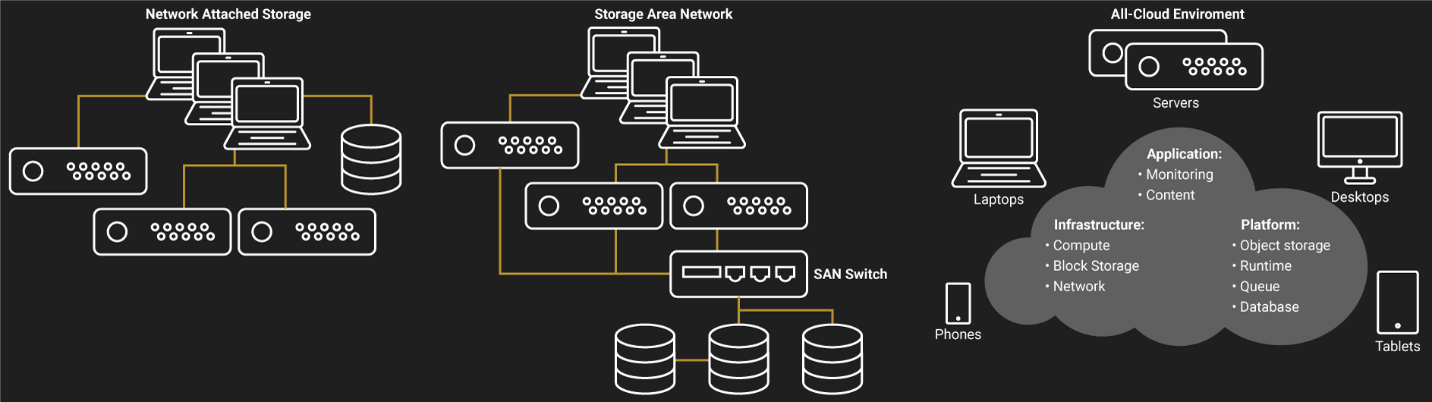

Before I move on, I want to clarify what I mean by “secondary storage” here, just to make sure that we are on the same page. Secondary storage is the storage where actively used data resides. It can be local storage, like a SAN or NAS, or a public cloud hot tier. It’s absolutely true that you can use disk arrays too, but today let’s think of them simply as NAS-like servers packed with many disks, ok? Which side you are on is entirely up to you, and there’s no one-size-fits-all solution. NAS, SAN, public cloud storage… Whatever secondary storage you choose, it has its own pros and cons. I discuss them in this article.

SO, WHAT’S THE DIFF?

SAN

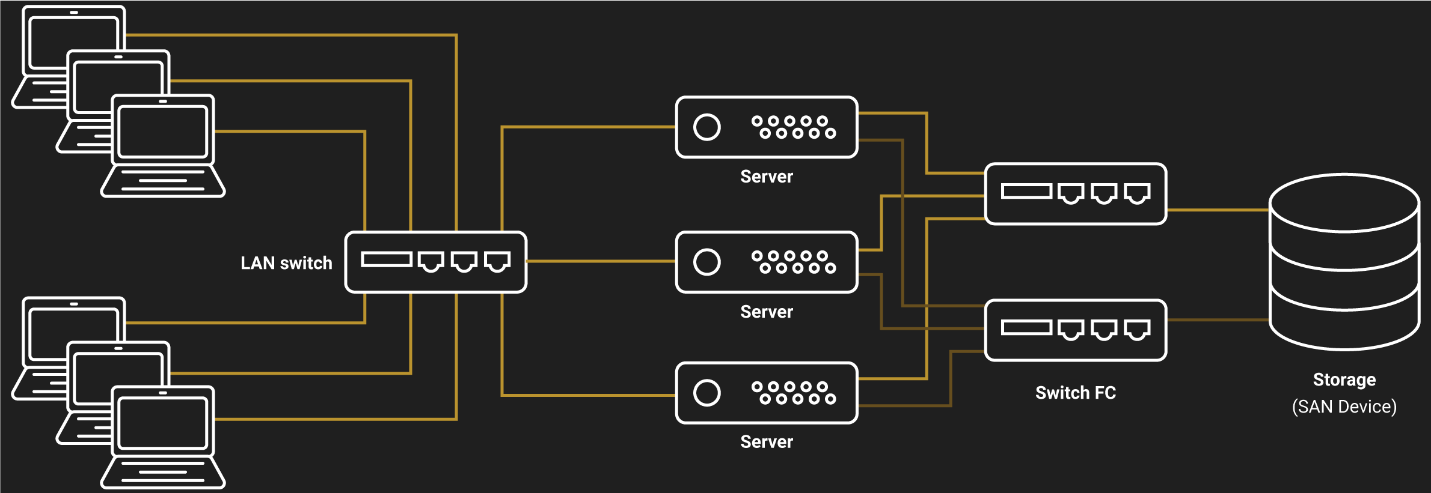

Here is what a SAN (Storage Area Network) device looks like.

A SAN is a disk array attached to clients over a dedicated network. It is a block-level storage system that can be accessed via fast protocols such as Fibre Channel, FCoE, and iSCSI.

So, why do you typically use a SAN? To achieve high performance and build shared storage for your VMs! A SAN utilizes disks better, allowing you to squeeze maximum performance out of them. It can be managed as a single entity, allowing you to slice it up into different storage pools and present them over the network as shared storage.

SANs are quick, SANs are nice, but they have several disadvantages. First, each LUN is normally accessed by a single client. It is possible to share a LUN using cluster services, but such systems require additional setup. Second, even a mediocre SAN will cost you a pretty penny. And, last but not least, managing such storage is quite an effort- and time-consuming routine. You need narrowly specialized knowledge to orchestrate this architecture. All that being said, SANs are expensive and a bit hard to manage.

Here is what SAN infrastructure typically looks like.

NAS

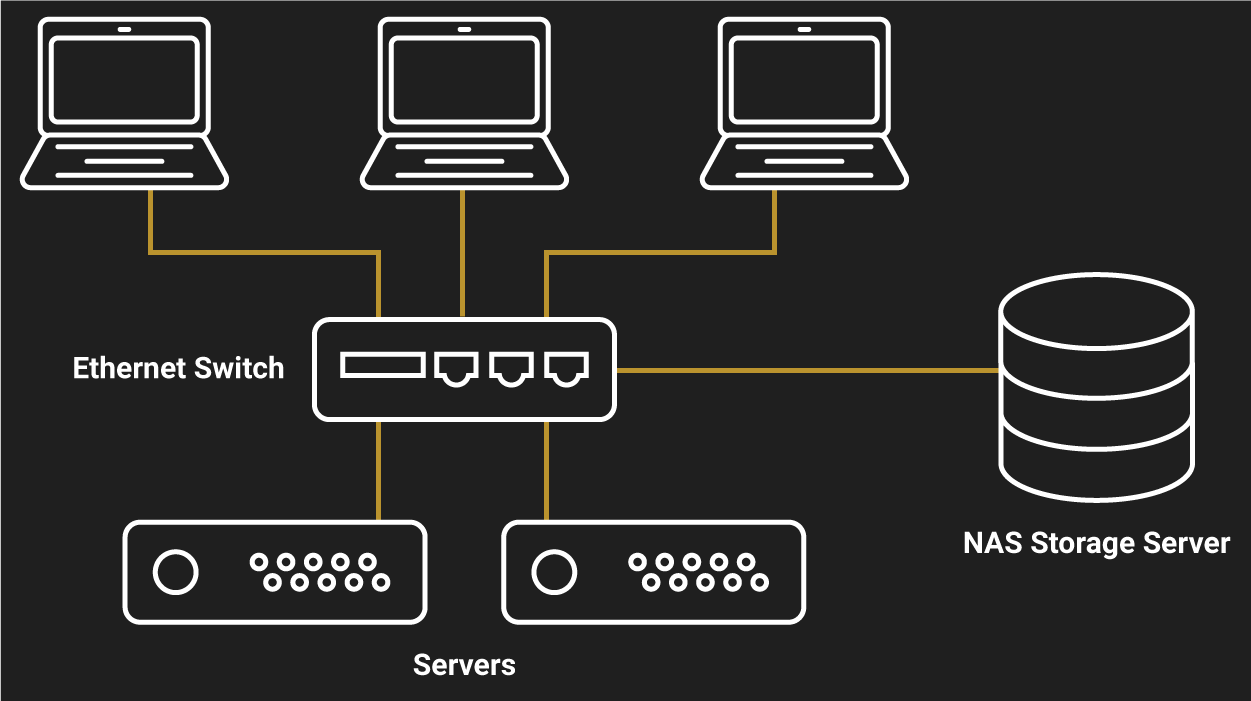

Here is what a NAS (Network Attached Storage) device looks like.

Now that I have discussed what traditional SAN architectures look like and their pros and cons, let’s talk about NAS. And, as it is another local storage option, I’d like to compare it to SAN here.

Let me explain the concept of NAS briefly. A NAS device consists of a bunch of disks arranged in a RAID to maximize overall performance and achieve the required data redundancy. These devices have their own operating systems on board. The OS does all the low-level processing and file system maintenance. The file system, in turn, shares the disks’ content over the network at the file level. NAS presents its storage over high-level protocols like SMB, NFS, AFP, etc.
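To make the block-versus-file distinction a bit more tangible, here is a minimal Python sketch. It is only a toy model, not how any real SAN or NAS is implemented: `ToyBlockDevice`, `BLOCK_SIZE`, and `file_level_roundtrip` are hypothetical names I made up for illustration. The idea is that SAN-style storage hands the client numbered blocks and leaves the file system to the client, while NAS-style storage hands the client named files and keeps the file system on the device.

```python
import os
import tempfile

BLOCK_SIZE = 512  # bytes per block; an illustrative value, not a standard


class ToyBlockDevice:
    """Hypothetical stand-in for a LUN: raw blocks addressed by number."""

    def __init__(self, num_blocks):
        self.num_blocks = num_blocks
        self.data = bytearray(num_blocks * BLOCK_SIZE)

    def _check(self, lba):
        if not 0 <= lba < self.num_blocks:
            raise IndexError("block address out of range")

    def write_block(self, lba, payload):
        """Write one block; shorter payloads are zero-padded."""
        self._check(lba)
        if len(payload) > BLOCK_SIZE:
            raise ValueError("payload larger than one block")
        start = lba * BLOCK_SIZE
        self.data[start:start + BLOCK_SIZE] = payload.ljust(BLOCK_SIZE, b"\x00")

    def read_block(self, lba):
        """Read one whole block; the device knows nothing about files."""
        self._check(lba)
        start = lba * BLOCK_SIZE
        return bytes(self.data[start:start + BLOCK_SIZE])


def file_level_roundtrip(share_dir, name, payload):
    """NAS-style access: the client names a file, the server's OS does the rest."""
    path = os.path.join(share_dir, name)
    with open(path, "wb") as f:
        f.write(payload)
    with open(path, "rb") as f:
        return f.read()


if __name__ == "__main__":
    lun = ToyBlockDevice(num_blocks=8)
    lun.write_block(3, b"hello")
    print(lun.read_block(3)[:5])

    # A temporary directory plays the role of a mounted network share.
    with tempfile.TemporaryDirectory() as share:
        print(file_level_roundtrip(share, "report.txt", b"hello"))
```

Notice that the block device always returns a full block and has no idea what a file is. That is exactly why a LUN needs a file system (and cluster services, if you want to share it) on the client side.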

NAS systems are ready-to-go hardware bundled with software that delivers shared storage. Each bundle component is easy to scale up and down. Last but not least, NAS systems bring a lot of cool features to your infrastructure, and they are basically built into the device! These include antivirus protection, file versioning, automatic backups, and many other things. Ask your NAS vendor about them!

Compared to SAN, NAS systems are easier to set up and to integrate into existing environments. NAS is typically configured as a network share and does not need any particular infrastructure. Therefore, these devices are cheaper.

But you need to view NAS systems as storage and nothing more than that. You see, some NAS devices cannot provide block storage, so you won’t be able to use them as shared storage for your VMs.

The image below depicts what typical NAS infrastructure is like.

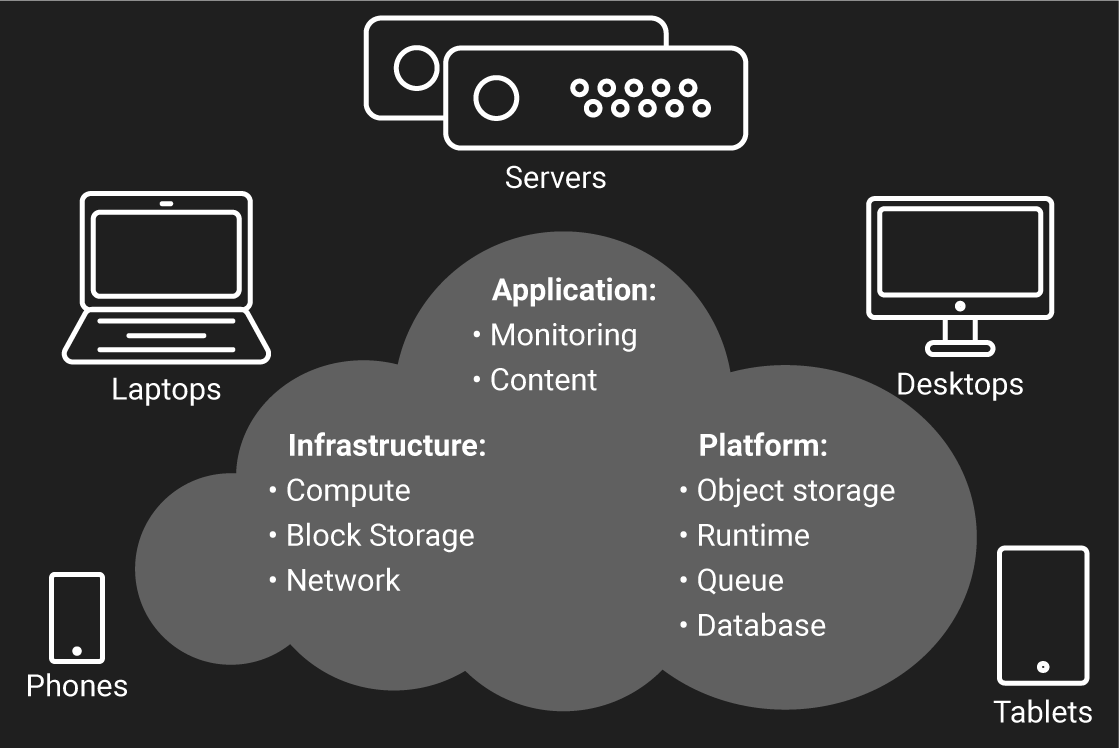

Public cloud

Public cloud is commonly used for disaster recovery and keeping archives and backups. But, if you de-stage all data to the cloud anyway, why don’t you just keep it in the cloud from the beginning?

However skeptical the guys at the IT party may be about using the public cloud as secondary storage, I’d like to start with the advantages of keeping your data in the cloud. First, you use as many resources as you need and pay only for the resources you use. You can also share data in the cloud fairly easily, which gives you room for collaboration; your colleagues and partners can access the data from any part of the globe. And you won’t be the guy who has to manage and take care of the storage infrastructure ;-).

Scale as you want, pay as you go; looks nice to me! Second, your data is always available. If you run an all-cloud environment in Azure, production stays up and running no matter what happens to your main site. In addition, Azure provides the compute power and block storage that you need for your VMs. Looks good for guys running online shops and other seasonal businesses.

On the other hand, the public cloud has some disadvantages. I bet that you already know them: security and speed.

You see, each time you upload data to the cloud, you literally give it to some guys you don’t know. For security reasons, it may still be wise to keep some data on-prem. Second, the public cloud is slower. It takes a while to deliver requests to the cloud provider’s server room and get the response back. That’s where the latency between the storage and users or applications comes from.
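A rough way to see how much that latency hurts is a back-of-the-envelope model: every request pays one network round trip, and then the payload moves at whatever bandwidth the link gives you. The Python sketch below does just that. The function name `transfer_time_seconds` and all the numbers in the example are my own assumptions for illustration, not measurements of any provider.

```python
def transfer_time_seconds(size_bytes, bandwidth_bytes_per_s, rtt_s, requests=1):
    """Estimate total time: one round trip per request plus raw transfer time.

    This is a deliberately simple model; it ignores protocol overhead,
    parallel requests, caching, and congestion.
    """
    if bandwidth_bytes_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    if size_bytes < 0 or rtt_s < 0 or requests < 0:
        raise ValueError("size, round-trip time, and requests must be non-negative")
    return requests * rtt_s + size_bytes / bandwidth_bytes_per_s


if __name__ == "__main__":
    # Hypothetical workload: 10,000 small requests moving 40 MB in total.
    total_bytes = 40_000_000
    n_requests = 10_000

    # Assumed figures: a local array with a 0.5 ms round trip on a ~1 GB/s link
    # versus a cloud endpoint with a 40 ms round trip on a ~100 MB/s link.
    local = transfer_time_seconds(total_bytes, 1_000_000_000, 0.0005, n_requests)
    cloud = transfer_time_seconds(total_bytes, 100_000_000, 0.040, n_requests)
    print(f"local: {local:.2f} s, cloud: {cloud:.2f} s")
```

With many small requests, the round-trip term dominates, so chatty applications feel the distance to the cloud far more than bulk uploads do.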

Furthermore, your cloud provider may have a pretty slow Time to First Byte for cold data, that is, the time you need to wait until the download actually starts. So, before de-staging your data to the cold tier, figure out how long you will have to wait to retrieve it. And, last but not least, you need to be aware of hidden payments. You always need to keep an eye on what resources you use in the cloud. Public cloud flexibility may play a trick on you: you may overuse capacity and compute resources and end up with a huge sum at the bottom of the bill. Well, here services for managing subscriptions come into play, but I guess that they require a separate article.
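Keeping an eye on spending does not have to be fancy. Here is a minimal sketch of one simple approach, a straight-line projection of the month-to-date spend to the end of the month, compared against a budget. The function names and figures are hypothetical, and real bills are rarely this linear, so treat it as an early-warning check rather than a forecast.

```python
def projected_month_end_spend(spend_to_date, day_of_month, days_in_month):
    """Extrapolate month-to-date spend linearly to the end of the month."""
    if not 1 <= day_of_month <= days_in_month:
        raise ValueError("day_of_month must be within the month")
    if spend_to_date < 0:
        raise ValueError("spend_to_date must be non-negative")
    daily_average = spend_to_date / day_of_month
    return daily_average * days_in_month


def over_budget(spend_to_date, day_of_month, days_in_month, budget):
    """Return True if the linear projection exceeds the monthly budget."""
    projected = projected_month_end_spend(spend_to_date, day_of_month, days_in_month)
    return projected > budget


if __name__ == "__main__":
    # Assumed example: 100 currency units spent by day 10 of a 30-day month,
    # checked against a budget of 250.
    print(projected_month_end_spend(100.0, 10, 30))
    print(over_budget(100.0, 10, 30, budget=250.0))
```

You would feed it the month-to-date figure from your provider’s billing export or portal; where exactly that number comes from depends on the provider, so I leave it out here.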

Here is a scheme of what an all-Azure environment looks like.

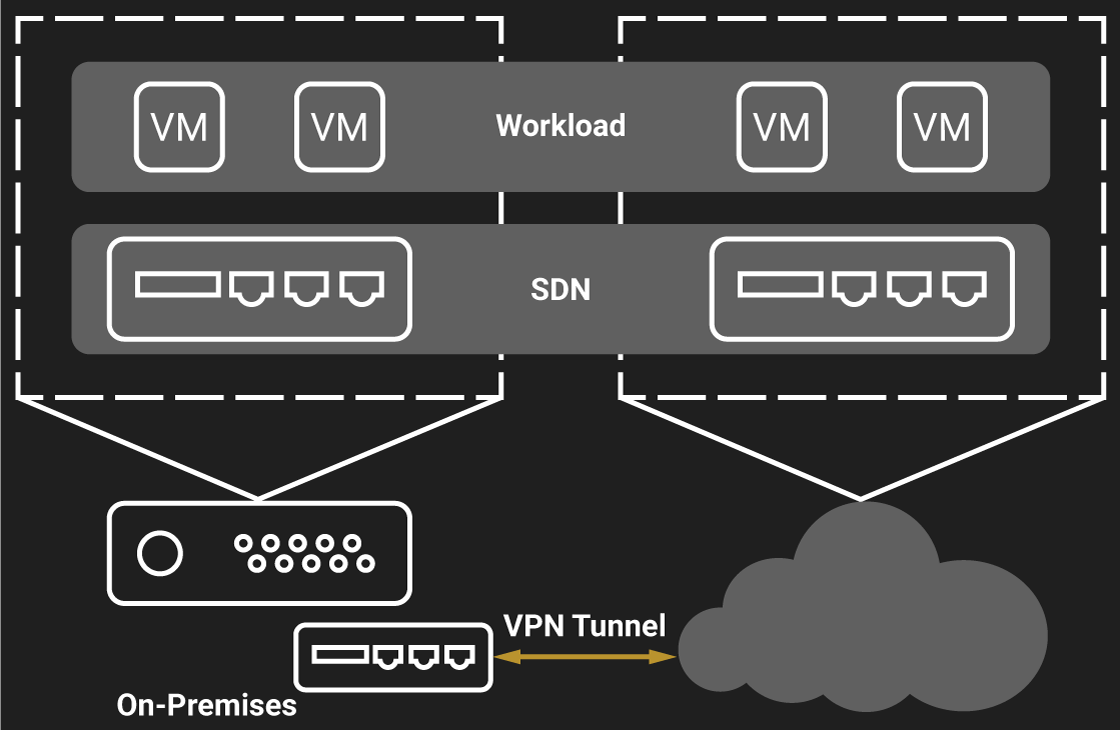

If you are unsure about going entirely to the cloud, try a hybrid-cloud scenario. In that scenario, you can use the cloud as a disaster recovery site. Here is what a hybrid cloud deployment looks like.

Run Hyper-V servers on-premises and migrate VMs to Azure (or any other cloud) whenever you need.

USE CASES

Now, let’s look at some things closer to real life. Namely, let me briefly discuss how you can apply the technologies discussed above.

File server

The file server scenario seems to be the most common way to use storage. Here, NAS and the public cloud seem to be the best options because of their multi-user sharing capabilities. Both support backups and versioning, which are handy file server features. But if you run a startup that can hardly afford NAS devices, why not use the public cloud until better times?

Why is SAN not good enough for building shared storage in this scenario? First, you need more hardware to build shared storage. Second, connections become a bit more complicated. As a result, you end up spending more money. The diagram below shows the difference in access between the architectures.

Applications and databases

NAS is good, but when it comes to providing block-level storage, it does not always work. Let’s say you need block-level storage to present as a datastore for your VMs. In that case, you choose SAN (or run instances in the public cloud). It’s absolutely true that some NAS devices provide block-level access, but in this article I talk about things in general, so, unfortunately, I need to disregard such good devices. Sorry.

Database logs and tables are files, so you can use either a NAS or the cloud. There is a good article showing how databases work with NAS:

https://blogs.msdn.microsoft.com/sqlblog/2006/10/03/sql-server-and-network-attached-storage-nas/

But you must keep in mind that network errors and storage OS performance may compromise database integrity. For that reason, a SAN seems to be the best option again.

Backup and recovery

NAS devices are good repositories for files. You can set up their OS as a backup server and have the job done once the need arises.

Yet, the public cloud seems to be the best backup repository. I wrote an article on backup media earlier. Check it out:

https://www.starwindsoftware.com/blog/hyper-v/keep-backups-lets-talk-backup-storage-media/

ALL ROADS LEAD TO… PUBLIC CLOUD!

You may object to the claim that all roads lead to the public cloud: after all, cloud providers keep your data on physical storage too. However, for ordinary users, the claim holds. Sure, you can use tapes for archives, but the public cloud seems to be a better solution for backup and archiving purposes in 2018.

When it comes to uploading data to the public cloud, you need some intermediate software (a gateway) to do that. There’s actually a bunch of them available, but the one I know (and have used) is Cloudberry. It gives you some freedom to choose where to back up and has a pretty intuitive interface. Another nice thing is that the solution integrates with Veeam Backup & Replication. It allows not only local-to-cloud replication but also cloud-to-local and cloud-to-cloud replication.

Cloudberry provides a 14-day trial version. Well, I guess it is always nice to try a thing before you buy it. Here’s the configuration guide:

https://www.cloudberrylab.com/download/Backup_Installation_and_Configuration_Guide.pdf

CONCLUSION

In this article, I reviewed popular secondary storage options. Along with the traditional SAN and NAS architectures, I also discussed the public cloud in the context of being used as secondary storage. I hope I was not advocating for the public cloud too much in this article (I do like cloud computing) :). Still, I hope I made you less skeptical about the public cloud.