At the recent VMware Explore 2023 conference, VMware announced many interesting products and technologies due to appear in the near future. One of the main headlines was the imminent release of the second update to its flagship virtualization platform, VMware vSphere 8 Update 2.

During the conference, VMware shared a video summarizing the platform's new features, several of which are especially useful for administrators of large virtual infrastructures.

Since ChatGPT was introduced in November 2022, interest in generative AI across the industry has surged. By January 2023, ChatGPT had become the fastest-growing consumer software application in history, with over 100 million users. As a result, GenAI is now a strategic priority for many organizations. vSphere has been at the forefront of AI since VMware introduced the AI-Ready Enterprise Platform in March 2021, and with this release VMware continues to scale and improve its GPU virtualization technology. Alongside the AI-related improvements, VMware is also making Data Processing Unit (DPU) technology available on more hardware platforms, so that more customers can benefit from its performance gains.

Let’s take a look at the new features related to hardware introduced in VMware vSphere 8 Update 2:

1. Virtual Hardware version 21

We will start, oddly enough, with a software improvement, although it affects the hardware as well.

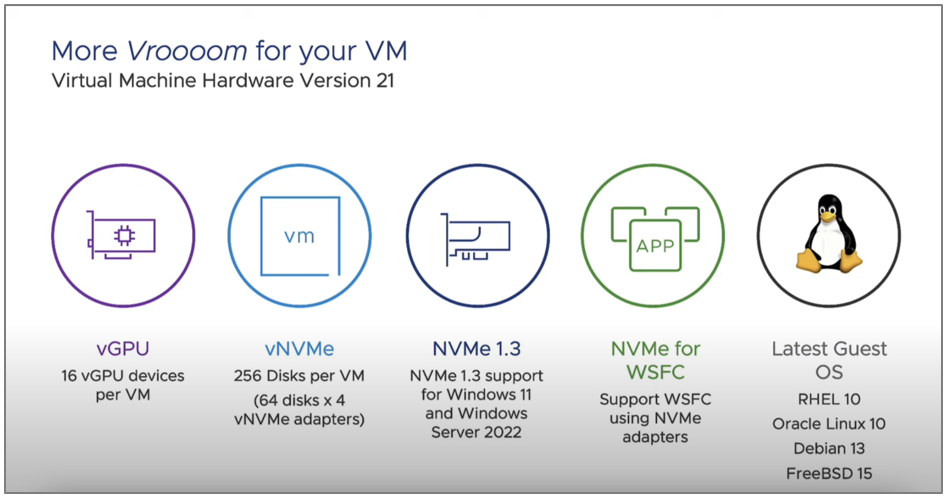

With Virtual Hardware version 21, virtual machines gain the following capabilities:

- The maximum number of vGPU devices per VM increases from 8 to 16

- Up to 256 NVMe disks can be connected to a VM

- Support for the NVMe 1.3 specification for Windows guests, and for Windows Server Failover Clustering with NVMe disks

- Compatibility checks for new guest operating systems: Red Hat 10, Oracle 10, Debian 13 and FreeBSD 15

Remember that to take full advantage of these features, you need both vSphere 8 Update 2 and Virtual Hardware version 21.
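To make these limits concrete, here is a minimal sketch that checks a planned VM configuration against them. It assumes the vSphere-style `vmx-NN` hardware version string and uses only the numbers quoted in this article (8 and 16 vGPUs, 256 NVMe disks); the `PlannedVM` type and the `check_plan` helper are hypothetical and not part of any VMware SDK.

```python
import re
from dataclasses import dataclass

# Limits quoted in this article for Virtual Hardware 21 (vSphere 8 Update 2).
VHW21_MAX_VGPUS = 16
VHW21_MAX_NVME_DISKS = 256
PRE_VHW21_MAX_VGPUS = 8  # the previous per-VM vGPU limit mentioned above


@dataclass
class PlannedVM:  # hypothetical planning record, not a VMware type
    name: str
    hw_version: str  # vSphere-style string such as "vmx-21"
    vgpus: int
    nvme_disks: int


def hw_version_number(hw_version: str) -> int:
    """Extract the numeric part of a "vmx-NN" hardware version string."""
    match = re.fullmatch(r"vmx-(\d+)", hw_version)
    if match is None:
        raise ValueError(f"unexpected hardware version: {hw_version!r}")
    return int(match.group(1))


def check_plan(vm: PlannedVM) -> list[str]:
    """Return human-readable problems; an empty list means the plan fits."""
    problems = []
    version = hw_version_number(vm.hw_version)
    if vm.vgpus > VHW21_MAX_VGPUS:
        problems.append(f"{vm.name}: {vm.vgpus} vGPUs exceeds {VHW21_MAX_VGPUS}")
    elif vm.vgpus > PRE_VHW21_MAX_VGPUS and version < 21:
        problems.append(f"{vm.name}: more than {PRE_VHW21_MAX_VGPUS} vGPUs needs vmx-21")
    if vm.nvme_disks > VHW21_MAX_NVME_DISKS:
        problems.append(f"{vm.name}: {vm.nvme_disks} NVMe disks exceeds {VHW21_MAX_NVME_DISKS}")
    # The pre-VHW21 NVMe disk limit is not stated in the article, so it is not checked.
    return problems


print(check_plan(PlannedVM("trainer-01", "vmx-20", vgpus=12, nvme_disks=32)))
print(check_plan(PlannedVM("trainer-02", "vmx-21", vgpus=16, nvme_disks=256)))
```

The first plan is flagged because it asks for more than 8 vGPUs on an older hardware version; the second fits within the Virtual Hardware 21 limits.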

Heavy workloads, especially AI, keep demanding more GPU power. In this release, the maximum number of vGPU devices that can be assigned to a single VM has been raised to 16, doubling the GPU capacity available to a single large workload. For AI, this can shorten training times for AI/ML models and make it possible to run the largest models on larger datasets.

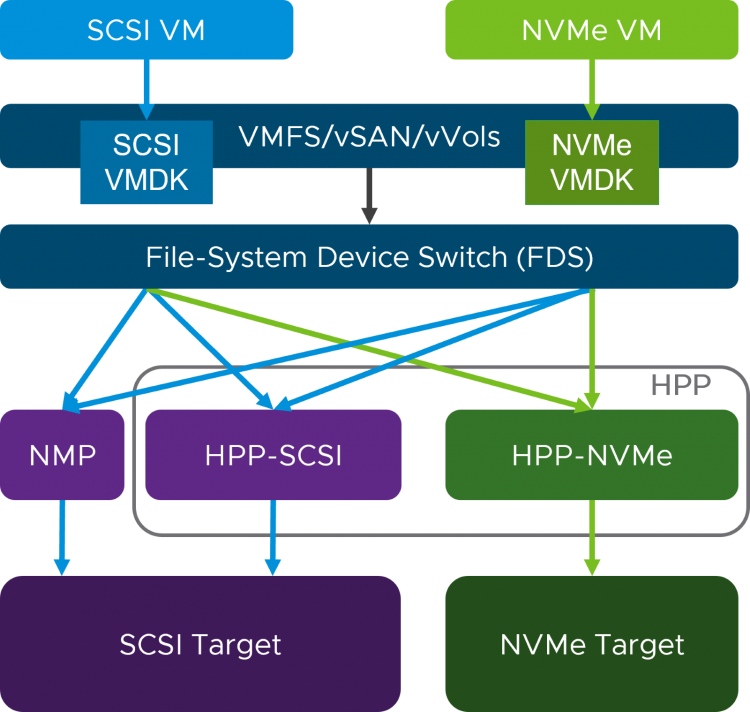

It is also worth noting that Update 1 introduced storage protocol translation for virtual machines, with full backward compatibility. For any combination of virtual controller (SCSI or vNVMe) and target device (SCSI or NVMe), the storage stack can translate the I/O path. This lets customers move between SCSI and NVMe storage without changing a virtual machine's storage controller. Likewise, a virtual machine with either a SCSI or a vNVMe controller works on both SCSI and NVMe-oF storage.
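The controller/backend matrix described above can be illustrated with a small Python model that enumerates every pairing. The "native" and "translated" labels are a simplification chosen for illustration, not VMware terminology, and the model says nothing about how the translation is actually implemented in the ESXi storage stack.

```python
from itertools import product

CONTROLLERS = ("SCSI", "vNVMe")         # virtual controller seen by the guest
BACKENDS = ("SCSI", "NVMe", "NVMe-oF")  # protocol of the target storage device


def backend_family(backend: str) -> str:
    """Group local NVMe and NVMe over Fabrics together as the NVMe family."""
    return "NVMe" if backend.startswith("NVMe") else "SCSI"


def io_path(controller: str, backend: str) -> str:
    """Label one combination as native or translated (illustrative only)."""
    guest_family = "NVMe" if controller == "vNVMe" else "SCSI"
    if guest_family == backend_family(backend):
        return "native"
    return f"translated {guest_family}->{backend_family(backend)}"


# Every combination is usable; only the I/O path differs.
for controller, backend in product(CONTROLLERS, BACKENDS):
    print(f"{controller:6} on {backend:8}: {io_path(controller, backend)}")
```

The point of the sketch is that none of the six combinations is rejected, which is why the VM's controller does not have to change when the underlying storage does.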

Now, in Update 2, up to 256 NVMe disks can be connected to a VM, which should meet the needs of almost all VMware enterprise customers.

2. Workload placement and GPU-aware load balancing in DRS

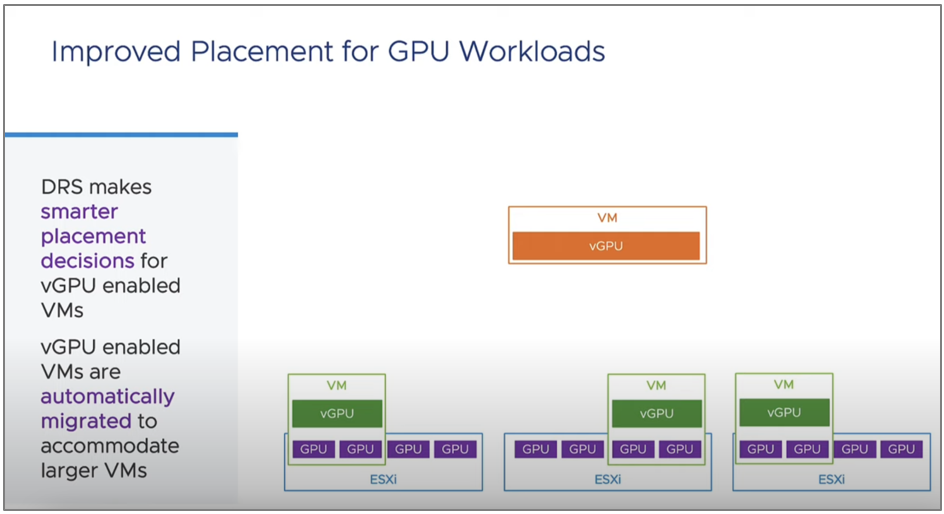

In earlier vSphere releases, VMware added support for vMotion live migration of GPU-intensive workloads. This was a big step forward for users running AI and machine learning workloads in virtual machines, because those VMs need to be moved around to balance load evenly across hardware resources.

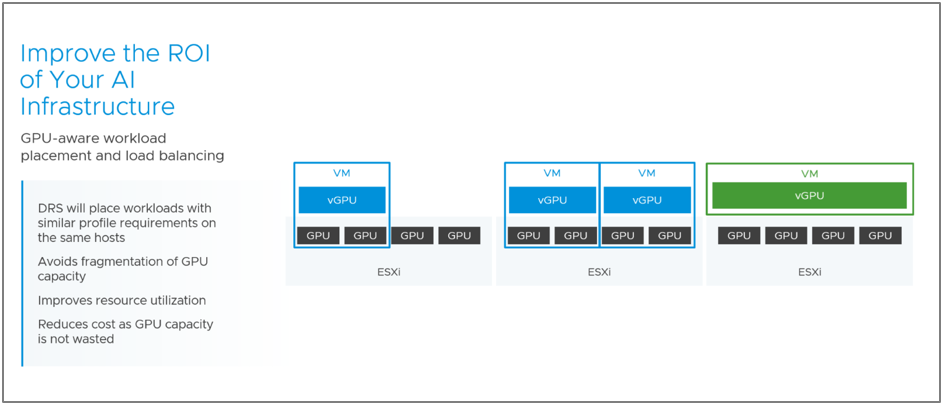

In addition, based on customer feedback, VMware identified scenarios in which workloads could not fully use the available GPU resources, and improved the load-balancing mechanism to address them. DRS now considers vGPU profile sizes and tries to consolidate vGPU workloads of the same size on one host. This also helps with initial placement when vGPU-enabled VMs are powered on, avoiding loss of usable GPU capacity through fragmentation.
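To illustrate why consolidating same-size vGPU workloads reduces fragmentation, here is a toy placement heuristic. It is not the actual DRS algorithm, which is not documented at this level of detail; the scoring rule (prefer a host already running VMs of the same size, then the tightest fit) is an assumption chosen to mirror the behavior described above, and `Host` and `pick_host` are hypothetical names.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Host:
    name: str
    total_gpus: int
    vm_sizes: list[int] = field(default_factory=list)  # GPU count of each VM on the host

    @property
    def free_gpus(self) -> int:
        return self.total_gpus - sum(self.vm_sizes)


def pick_host(hosts: list[Host], size: int) -> Host | None:
    """Toy GPU-aware initial placement (an assumption, not the real DRS algorithm)."""
    candidates = [h for h in hosts if h.free_gpus >= size]
    if not candidates:
        return None
    # Prefer hosts already running VMs of the same size, then the tightest fit,
    # so larger blocks of free GPUs stay available on other hosts.
    return min(candidates, key=lambda h: (size not in h.vm_sizes, h.free_gpus - size))


hosts = [Host("esx-a", 4, [2]), Host("esx-b", 4, [1]), Host("esx-c", 4, [])]
for size in (2, 1, 4, 5):
    chosen = pick_host(hosts, size)
    print(size, "->", chosen.name if chosen else "no host")
# 2 -> esx-a, 1 -> esx-b, 4 -> esx-c, 5 -> no host
```

Note how the empty host `esx-c` is kept free for a 4-GPU request instead of being consumed by small VMs.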

Look at the picture above. Initially, three VMs each use two of the four GPUs on their host, so every host has two free GPUs but none has four. In previous releases, a new VM that needed four GPUs would simply fail to power on for lack of capacity, even though enough GPUs were free across the cluster as a whole.

Now DRS uses vMotion to move one of the 2-GPU VMs to another host, freeing all four GPUs on its original host so that the large workload can run.

In short, workload placement and load balancing now take into account the number of physical GPUs available in the cluster. DRS also tries to place workloads with similar profile requirements on the same host when they fit. This raises GPU utilization and lowers costs, because fewer physical GPUs are needed to reach the desired level of performance.

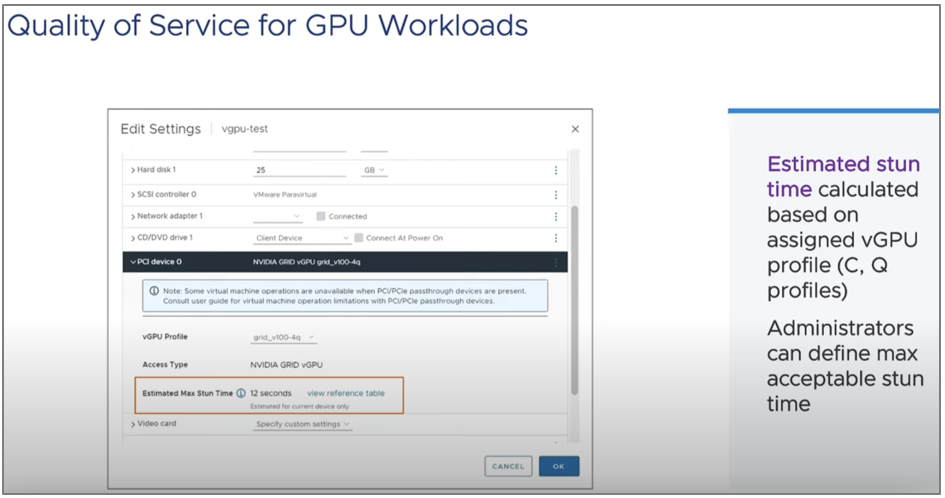
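The migration in this example can be sketched as a toy defragmentation planner: if no host has enough free GPUs, it checks whether evacuating VMs from one host onto the others would free the required capacity, and returns the vMotion moves that would do it. This is an assumption-based illustration, not VMware's DRS logic; `Host` and `plan_defrag` are hypothetical, and the usage reproduces the scenario above under the assumption that each 2-GPU VM runs on its own 4-GPU host.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Host:
    name: str
    total_gpus: int
    vms: dict[str, int] = field(default_factory=dict)  # VM name -> GPUs it uses

    @property
    def free_gpus(self) -> int:
        return self.total_gpus - sum(self.vms.values())


def plan_defrag(hosts: list[Host], needed: int) -> tuple[str, list[tuple[str, str, str]]] | None:
    """Toy defragmentation planner (an assumption, not VMware's DRS logic).

    Returns (target host, [(vm, from_host, to_host), ...]) or None if impossible.
    The input hosts are not modified; only a plan is computed.
    """
    for h in hosts:
        if h.free_gpus >= needed:
            return h.name, []  # fits already, no vMotion needed
    for target in hosts:
        free = {h.name: h.free_gpus for h in hosts}
        moves: list[tuple[str, str, str]] = []
        # Try to evacuate VMs from this host, smallest first (easiest to fit elsewhere).
        for vm, size in sorted(target.vms.items(), key=lambda kv: kv[1]):
            if free[target.name] >= needed:
                break
            dest = next((h for h in hosts if h is not target and free[h.name] >= size), None)
            if dest is None:
                break
            moves.append((vm, target.name, dest.name))
            free[dest.name] -= size
            free[target.name] += size
        if free[target.name] >= needed:
            return target.name, moves
    return None


cluster = [Host("esx-a", 4, {"vm1": 2}), Host("esx-b", 4, {"vm2": 2}), Host("esx-c", 4, {"vm3": 2})]
print(plan_defrag(cluster, 4))  # ('esx-a', [('vm1', 'esx-a', 'esx-b')])
```

One migration is enough: moving `vm1` onto `esx-b` fills that host and leaves all four GPUs on `esx-a` free for the large VM.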

3. Quality of Service for GPU workloads

With heavy vGPU workloads, the “stun time” during migration (the period when the virtual machine is paused and not executing) can be significant. vSphere 8 Update 2 gives administrators a useful tool for estimating the maximum stun time for vGPU-enabled VMs.

The estimate is based on the vMotion network speed and the vGPU memory size:

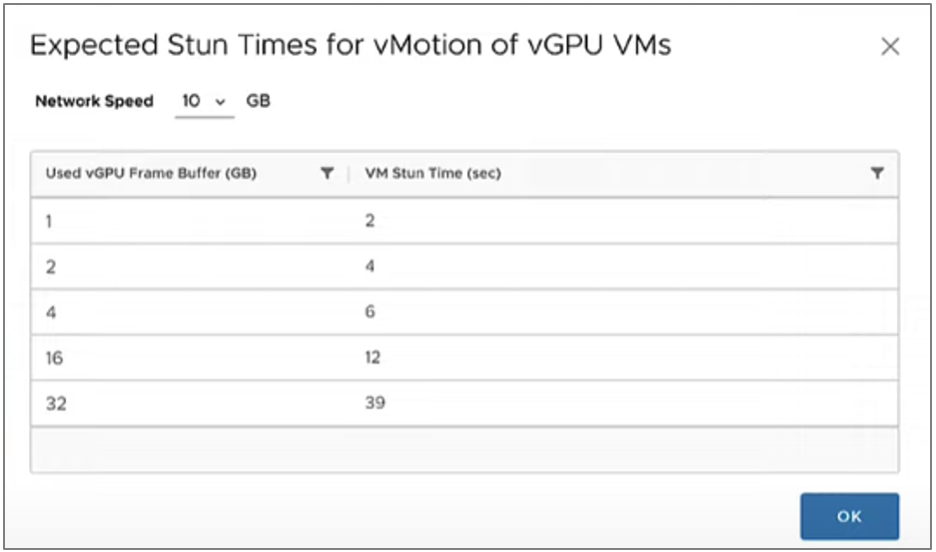

You can now use a reference table to find out how long a virtual machine will pause, depending on the vMotion link speed between the ESXi hosts and the vGPU frame buffer size in gigabytes:

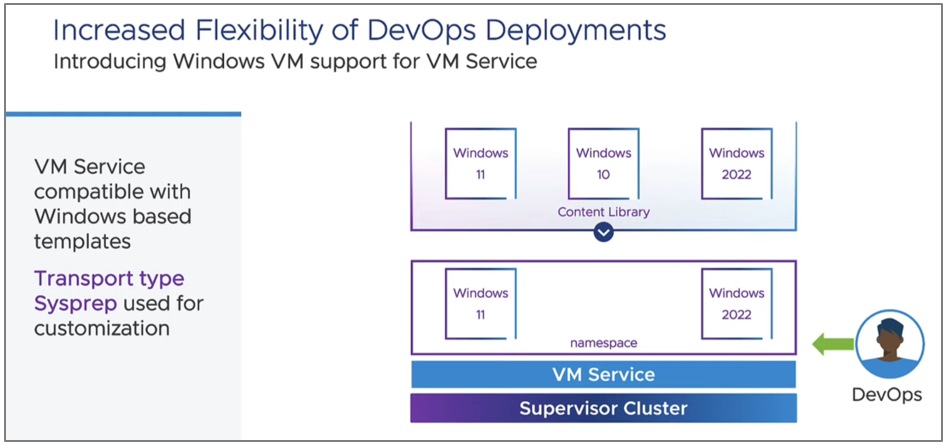
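A rough lower bound for the pause follows from the two inputs named above: copying a frame buffer of F gigabytes over a link of B Gbit/s takes at least t ≈ 8 × F / B seconds. The sketch below computes that idealized figure. It is not VMware's estimation formula and ignores protocol and checkpoint overhead; the `efficiency` parameter is an assumed derating factor, so the table in vSphere remains the authoritative reference.

```python
def estimate_stun_seconds(frame_buffer_gb: float, link_gbps: float,
                          efficiency: float = 1.0) -> float:
    """Idealized lower bound: time to copy the vGPU frame buffer over the vMotion link.

    frame_buffer_gb uses decimal gigabytes (1 GB = 8 gigabits); efficiency is an
    assumed fraction of line rate actually achieved (1.0 means a perfect link).
    """
    if frame_buffer_gb < 0 or link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("invalid input")
    return frame_buffer_gb * 8 / (link_gbps * efficiency)


# Print a small table comparable in shape to the one above.
for fb in (16, 24, 48):
    for link in (10, 25, 100):
        print(f"{fb:>3} GB over {link:>3} Gbps: {estimate_stun_seconds(fb, link):6.2f} s")
```

For example, a 24 GB frame buffer over a 10 Gbps link gives about 19.2 s, and over 100 Gbps about 1.9 s, which shows why faster vMotion networks matter so much for large vGPU profiles.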

4. VM Service support for Windows and GPU machines

VM Service lets you deploy VMs declaratively through the Kubernetes API (for example, with kubectl), so VMs and containers can be managed side by side in a single environment.

VM Service is a convenient way to provide VMs in self-service mode, but it was previously limited to Linux VMs and specific configurations. Update 2 removes these limitations.

VM Service can now deploy Windows VMs as well as Linux ones. VMs can also be deployed with any virtual hardware, security settings, devices, multi-NIC configuration, and passthrough devices supported in vSphere, giving them full parity with traditional vSphere VMs. Notably, VM Service can now deploy VMs that require GPUs.

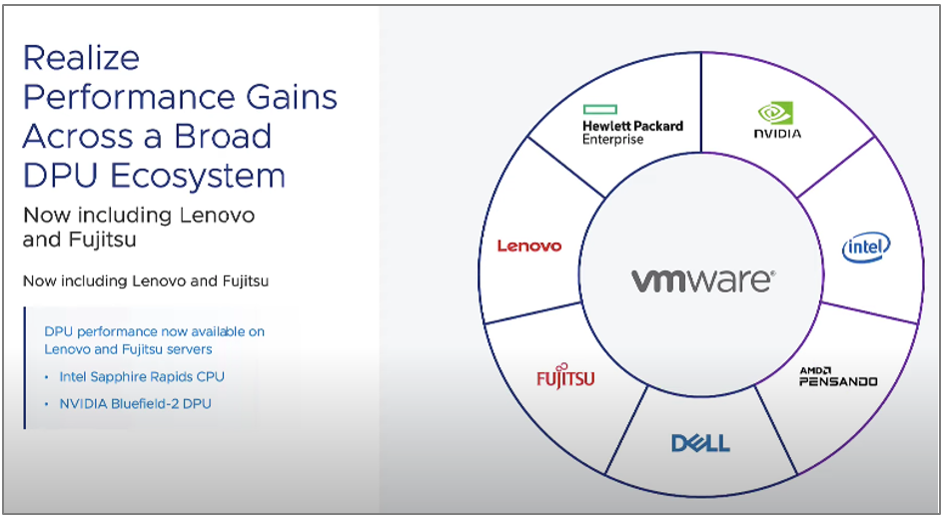
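With VM Service, a VM is requested by applying a `VirtualMachine` custom resource to a Supervisor namespace. The sketch below builds such a manifest in Python and prints it as JSON. It assumes the `vmoperator.vmware.com/v1alpha1` API version and its field names, which may differ in other releases; the image, VM class, storage class, network and namespace names are all hypothetical placeholders.

```python
import json


def vm_service_manifest(name: str, namespace: str, image: str, vm_class: str,
                        storage_class: str, network: str) -> dict:
    """Build a VirtualMachine custom resource for VM Service (illustrative).

    Assumes the vmoperator.vmware.com/v1alpha1 schema; check the API version
    and fields exposed by your Supervisor before using anything like this.
    """
    return {
        "apiVersion": "vmoperator.vmware.com/v1alpha1",
        "kind": "VirtualMachine",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "imageName": image,        # VM image published to the namespace
            "className": vm_class,     # VM class; a GPU-enabled class would carry the vGPU
            "storageClass": storage_class,
            "powerState": "poweredOn",
            "networkInterfaces": [{"networkName": network}],
        },
    }


manifest = vm_service_manifest(
    name="win-gpu-01",             # all values here are hypothetical
    namespace="ai-team",
    image="windows-server-2022",
    vm_class="gpu-a100-40c",
    storage_class="vsan-default",
    network="primary",
)
# kubectl accepts JSON as well as YAML, e.g. `kubectl apply -f vm.json`.
print(json.dumps(manifest, indent=2))
```

Keeping the manifest in code like this makes it easy to template many similar Windows or GPU VMs, which is the self-service pattern VM Service is designed for.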

5. Expanded DPU support to include Lenovo and Fujitsu server hardware

A year ago, vSphere 8 introduced DPU support, allowing customers to offload infrastructure workloads from the CPU to a dedicated DPU and thereby improve the performance of business workloads.

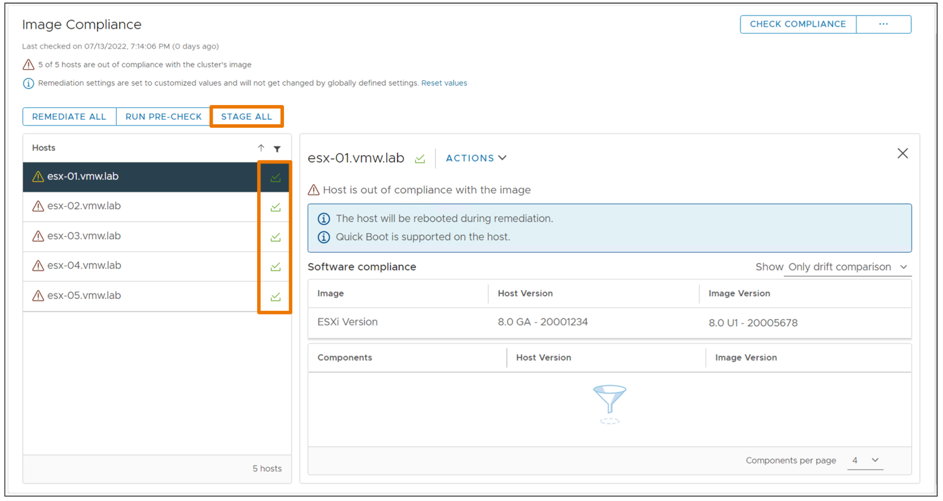

vSphere 8 also added DPU support to vSphere Lifecycle Manager (vLCM), so ESXi can be updated automatically on these servers. In addition, vLCM gained support for staging updates and upgrades, parallel remediation, and standalone hosts, completing the transition from the legacy vSphere Update Manager.

vSphere Lifecycle Manager can stage updates in advance for later rollout in production, and staging does not require putting hosts into maintenance mode. Firmware updates can also be staged through integration with the server vendor's Hardware Support Manager.

With the release of vSphere 8 Update 2, customers using Lenovo or Fujitsu servers will now be able to take advantage of new vSphere DPU integration features and performance benefits.

These DPU capabilities are supported on servers with Intel Sapphire Rapids CPUs and NVIDIA BlueField-2 DPUs.

VMware plans to extend DPU support to server hardware from more vendors, so if your server is not supported yet, support may arrive in a later release.

Conclusion

VMware is keeping pace with the times and with users' growing demand for enterprise AI workloads. Such workloads are likely to become very common, so the infrastructure for them needs to be prepared in advance, and that is exactly what VMware and hardware manufacturers are doing now. The new GPU capabilities and the expanded DPU support in this release are evidence of this.

Going forward, we can expect tighter integration of the vSphere platform with new hardware technologies, as well as a stronger focus on supporting AI and machine learning workloads.