Introduction

One of my go-to Veeam backup target storage solutions is the DELL R740XD2. It holds 26 3.5″ HDDs, with a choice of 8TB, 12TB, 14TB, and 16TB disk sizes. You can apply the configuration choices in this article to your own hardware. Do note that solutions with 2.5″ disk bays don't work well for this use case unless we use flash. The reason is that 2.5″ HDDs do not exist in large enough sizes to meet our capacity requirements. On the other hand, 2.5″ SSDs are overkill for backups in many environments. On top of that, large SSDs (7.5/15/30TB) are a costly option and might not be available for such servers. I have DELL EMC R740 servers with 15TB SSDs, but that is a different use case and not a default solution. You can read more about that solution in A compact, high capacity, high throughput, and low latency backup target for Veeam Backup & Replication v10.

Figure 1: DELL EMC R740XD2 (image courtesy of DELL)

Let me share my thoughts and, maybe more useful, my configuration choices. In the end, this is what I use, and it is my best effort within a specific budget and for the needs I encounter. Testing all possible permutations is time-consuming and often not feasible. You cannot reproduce every environment in a lab, and even if you could, you would not get the time to do so. However, what you read here is a good start for many (most?) of you. It might be all you need, or you can test and tweak it further. I am using these servers as the building blocks for Veeam Scale-Out Backup Repositories that leverage hardened repositories as extents with immutability on XFS. Read my three-part article series for more info about that.

The DELL R740XD2 BOM

Realize that hardware evolves fast, so this BOM is meant to give you an idea; it will change over time. A great tip is to work with your TAM to get the best components you can. Not all options and possibilities show up in the online configurators.

| Quantity | Item |
|---|---|
| 2 | Intel Xeon Silver 4214R 2.4G, 12C/24T, 9.6GT/s, 16.5M Cache, Turbo, HT (100W) DDR4-2400 |
| 1 | Chassis Config 1, 24×3.5″ HDD + 2×3.5″ Rear HDD, Single PERC, for Riser Config 2 or 3 |
| 1 | Riser Config 2, 2xLP, Dual CPU, R740xd2 |
| 12 | 16GB RDIMM (2400-3200MT/s, Dual Rank) |
| 1 | iDRAC9, Enterprise |
| 24 | 7.2K RPM SAS ISE 12Gbps 512e 3.5in HDD (8TB/12TB/14TB/16TB) |
| 2 | 7.2K RPM SAS ISE 12Gbps 512e 3.5in HDD (8TB/12TB/14TB/16TB) in Flex Bay |
| 1 | BOSS controller card with 2 M.2 Sticks 480GB (RAID1) |
| 1 | PERC H740P, Mini Monolithic |
| 1 | Dual, Hot-plug, Redundant Power Supply, 1100W |
| 1 | Trusted Platform Module 2.0 |
| 1 | Broadcom 57414 Dual Port 25GbE SFP28 LOM Mezz Card |
| 1 | On-Board LOM dual 1Gbps |

In most environments today, I have or deploy 25Gbps or better. My switches of choice are the Dell EMC S52XXF-ON models (25-100Gbps). See Networking S Series 25 100GbE Switches. Don't bother buying 10Gbps switches anymore, bar the ones you already have running. Scale-out solutions and backups require bandwidth.

Figure 2: PowerSwitch S Series (Image courtesy of Dell EMC)

How did I arrive at this BOM?

As with all things in life, it is about balance: finding the sweet spot that fully meets your capacity and performance needs, doesn't cost a fortune, and keeps things simple yet elegant. The result depends on the requirements and environment at the time you configure the systems, and it will evolve as time goes by. That, ladies and gentlemen, is how I came to this BOM for the R740XD2. But let's answer some questions in a bit more detail.

Server network connectivity

Go for 25Gbps or better; 10Gbps is a LOM thing nowadays. I went with the Broadcom 57414 dual-port 25GbE SFP28 LOM Mezzanine card. These are cost-effective, quality cards. Naturally, don't forget to buy the SFP28 modules as well. Why two NIC ports? That is how they come, and it matches fine with the environments I protect. They run Hyper-V, S2D, or Azure Stack HCI clusters. These systems already have redundant, switch-independent 25/50/100Gbps SMB networks for storage-related traffic. We piggyback on these for backups.

I put the dual on-board 1Gbps LOM NICs in a NIC team (bond) on Ubuntu. I use active-backup (mode 1) for switch-independent configurations. There are lots of options, but I don't need anything fancy for the management network. Choose an option that applies to your situation.
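On Ubuntu 20.04, netplan is the usual way to define such a bond. The sketch below is a hypothetical helper that only prints a netplan configuration for an active-backup bond. The function name, the interface names (eno1, eno2), and the address (192.0.2.10/24, a documentation range) are placeholders, and gateway and DNS settings are left out. Treat it as a starting point rather than a tested production configuration.

```shell
# Hypothetical helper: print a netplan config for an active-backup (mode 1) bond.
# Interface names and the address are placeholders; gateway/DNS are omitted.
netplan_mgmt_bond() {
  nic1=$1
  nic2=$2
  addr=$3
  cat <<EOF
network:
  version: 2
  renderer: networkd
  ethernets:
    ${nic1}: {}
    ${nic2}: {}
  bonds:
    bond0:
      interfaces: [${nic1}, ${nic2}]
      addresses: [${addr}]
      parameters:
        mode: active-backup
        primary: ${nic1}
        mii-monitor-interval: 100
EOF
}

netplan_mgmt_bond eno1 eno2 192.0.2.10/24
```

After reviewing the output, you could save it under /etc/netplan/ and test it with sudo netplan try, which rolls the change back automatically if you do not confirm it.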

I am not diving into networking details here, but I need to mention that we define the 25Gbps backup networks in “Preferred Networks” in Veeam Backup & Replication. Also, note that Veeam does not do bandwidth aggregation for you. If you need more bandwidth, you have to team or bond the NICs in some way or use bigger pipes. My dual backup networks here provide redundancy but not failover for existing jobs, nor do they provide bandwidth aggregation.

What and how many CPUs do I need?

The world runs on, and optimizes for, dual sockets, so that choice is easy. We want lots of cores (for concurrent tasks) but don't want to break the bank. That resulted in the selection (at the time) of the Intel Xeon Silver 4214R 2.4G, 12C/24T, 9.6GT/s, 16.5M Cache, Turbo, HT (100W) DDR4-2400. You can get better processors, but don't get lower-spec ones. If you like AMD and can get it in your systems, be my guest. And as these servers will run Ubuntu 20.04 LTS, I do not need to worry about core-based licensing costs! I have a total of 24 cores (48 threads). That is a nice balance.

How much memory do I need?

I went with 192GB of RAM. That is plenty. 192GB fills the 12 memory slots (6 per CPU socket) with 16GB DIMMs for an optimal performance configuration with the dual-socket CPU layout. That size and number of DIMMs do not break the bank.

More memory (larger DIMMs) is overkill. Fewer 16GB DIMMs don't save enough money to warrant a less optimal configuration from a performance perspective. I am also not going to drop to 96GB with smaller DIMMs while still filling the memory slots for performance reasons. That doesn't put you on the safe side memory-wise and will not save money at the time of writing. Veeam Backup & Replication V11 (or later) does a superb job of optimizing memory utilization. As a result, 192GB (12*16GB) is the sweet spot that gives you spare capacity and optimal performance in the most cost-effective manner. That's it; as a straightforward answer, it does not get any better. 192GB in total, all memory slots filled, is a safe bet (you have a nice margin) and is optimized for performance. Just do it.
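The sizing logic boils down to sockets × DIMMs per socket × DIMM size. The trivial helper below (hypothetical name) makes the comparison between the two balanced layouts explicit. It assumes one DIMM per memory channel and six channels per socket, which is what the Xeon Silver 4214R offers.

```shell
# Sketch: total RAM for a balanced layout with one DIMM per memory channel.
# Assumption: 6 memory channels per socket, as on the Xeon Silver 4214R.
total_mem_gb() {
  sockets=$1
  dimms_per_socket=$2
  dimm_gb=$3
  echo $((sockets * dimms_per_socket * dimm_gb))
}

total_mem_gb 2 6 16   # the article's choice: 192
total_mem_gb 2 6 8    # same layout with 8GB DIMMs: 96
```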

Use RAID 60, not RAID 6.

I do not want to build RAID 6 groups larger than 14 disks. That is my maximum. Why? Because I need to consider the risk of losing multiple disks in the same RAID 6 group, and the more disks in one group (say 24 or 48), the higher the odds. With our 24 disks per R740XD2 server (plus two global hot spares), a single RAID 6 group would exceed that number. With RAID 60, the controller stripes data across multiple RAID 6 groups of 12 disks, which addresses that concern. It also reduces the impact of rebuilds on the system's performance, a nice added benefit. The two global hot spares give us time to replace a failed disk before becoming too nervous. Remember that rebuilds can take a while, especially with larger HDDs. RAID 60 comes at the cost of more spindles to meet your capacity needs, but in the risk/cost balance, it is worth doing.
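The 14-disk rule of thumb translates into a simple calculation: find the smallest number of equal-size RAID 6 spans that keeps each span at or below the limit. The hypothetical helper below does that in POSIX shell. It assumes a limit of at least 1 and does not check the 4-disk minimum of a RAID 6 span or what your controller supports, so treat it as a back-of-the-envelope sketch.

```shell
# Hypothetical helper: smallest number of equal RAID 6 spans with at most
# max_per_span disks each (14 is the article's own limit).
raid60_spans() {
  disks=$1
  max_per_span=$2
  spans=1
  # Keep adding spans until the disks divide evenly and each span fits the limit.
  while [ $((disks % spans)) -ne 0 ] || [ $((disks / spans)) -gt "$max_per_span" ]; do
    spans=$((spans + 1))
  done
  echo "$spans"
}

raid60_spans 24 14   # the R740XD2 disk group: 2 spans of 12 disks
raid60_spans 48 14   # a 48-disk group would need 4 spans of 12 disks
```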

RAID 60 gives you real-world usable storage from roughly 145TiB (8TB HDDs) to roughly 290TiB (16TB HDDs), with dual parity on 24 disks and two global hot spare disks. That is 20 data disks, or 160TB to 320TB in the decimal units disk vendors use.
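These figures are easy to check. A RAID 60 built from 24 disks in two RAID 6 spans of 12 loses two disks per span to parity, leaving 20 data disks. The helper name below is hypothetical, disk sizes are the vendor's decimal terabytes, and file system overhead is ignored, so the TiB values are upper bounds rather than exactly what df will report.

```shell
# Hypothetical helper: usable capacity of a RAID 60 disk group (hot spares excluded).
# disks:   disks in the disk group
# spans:   RAID 6 spans the controller stripes across
# size_tb: vendor (decimal) disk size in TB
# File system overhead is ignored.
raid60_usable() {
  disks=$1
  spans=$2
  size_tb=$3
  per_span=$((disks / spans))
  data_disks=$((spans * (per_span - 2)))       # each RAID 6 span gives 2 disks to parity
  tb=$((data_disks * size_tb))
  tib=$((tb * 1000000000000 / 1099511627776))  # TB -> TiB (2^40 bytes), rounded down
  echo "${data_disks} data disks: ${tb}TB (~${tib}TiB)"
}

for disk_tb in 8 12 14 16; do
  raid60_usable 24 2 "$disk_tb"
done
```

Calling raid60_usable 24 1 8 shows what a single 24-disk RAID 6 group would offer: two extra data disks, in exchange for the larger failure domain discussed above.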

Large disks mean you can still have sufficient capacity in a server when using RAID 60. Also, remember that most of the time, these servers are building blocks for a Scale-Out Backup Repository (SOBR). That means that more nodes help spread the load and reduce the impact of losing a node on both performance and capacity. So, it is all good.

Is one RAID Controller enough?

Yes. You can have two, but one PERC H740P with 8GB of cache easily handles the 26 HDDs, so two controllers are often not needed here. Only if you want the absolute best throughput will two controllers help, and many of you will not need that. The side effect of two controllers is that you have at least two disk groups. In this server, that gives you 12 disks per group, with one global hot spare per group. That is over-optimizing. Also, if you require a single volume with two controllers, you will need LVM to create it in Ubuntu 20.04. If this system had 52 disks, I would go for two controllers, but not now. Remember, these servers provide backup repository extents as SOBR building blocks, so you have scale-out here. From an N+1 design perspective, you are better off with two, three, or four servers than with one or two larger-capacity servers.

Do I lose disks for the OS?

No. I use the DELL BOSS controller option and boot the system from its M.2 SSDs in RAID 1. The performance is more than adequate for the OS, and at 240GB or 480GB, the size is more than enough. Choose 480GB if you need the space for the NFS cache. Here, I don't: this is a hardened repository that only stores immutable backup files and does not handle any other roles.

Figure 3: DELL BOSS controller with M.2 SSDs

In future versions, you can expect the BOSS controller and disks to be “hot-swappable.”

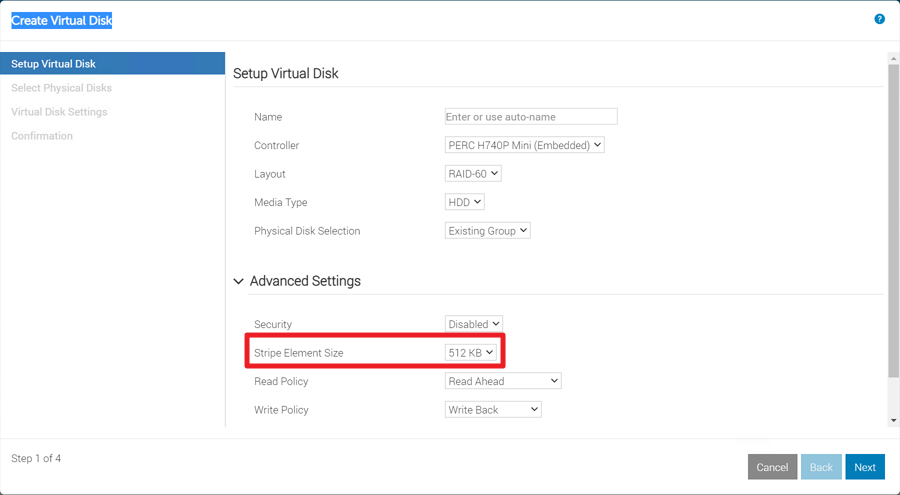

Any RAID tweaking and settings to share? Virtual disk stripe size!

The PERC H740P allows for some tweaking. The most significant tweak is the stripe size of the virtual disks. I chose 512K as the fastest option for our purpose, so that is what I use. If you have a controller that only allows 128K or 256K, don't sweat it; it will do fine.

Figure 4: Mind your stripe size!

But if you can, go for 512K. That way, it aligns with the blocks Veeam writes when you use the default “Local target” storage optimization: that option uses a 1024K block size, but an average compression ratio of 50% turns that into roughly 512K on the repository. See Your Veeam backups are slow? Check the stripe size! – Virtual to the Core for more info.
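The alignment argument is simple arithmetic. The sketch below assumes Veeam's documented block sizes of 1024K for “Local target” and 4096K for “Local target (large blocks)” and uses the 50% average compression mentioned above as a rule of thumb. Real compression varies per workload, so the results are approximations, and the helper name is hypothetical.

```shell
# Sketch: approximate size of a Veeam block once it lands on the repository.
# stored_pct is the share of the source block left after compression (50 = 2:1).
stored_block_kb() {
  src_kb=$1
  stored_pct=$2
  echo $((src_kb * stored_pct / 100))
}

stripe_kb=512   # the PERC virtual disk stripe size used in this article
for block_kb in 1024 4096; do   # "Local target" and "Local target (large blocks)"
  echo "${block_kb}K block -> ~$(stored_block_kb "$block_kb" 50)K on disk (stripe ${stripe_kb}K)"
done
```

With “Local target”, the ~512K result matches the 512K stripe; with large blocks, it lands around 2048K, which is why a larger stripe size is worth testing with that option.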

See Veeam backup job storage optimization later in this article for more on this.

Does the 8GB cache size matter, or can I get a cheaper controller?

The PERC H740P has 8GB of cache with battery backup. That means the cache content is not lost in case of power loss and still gets written to disk. The RAID controller cache optimizes physical write operations to the disks and is the secret sauce for performance with HDDs, so more usable cache is better. The quality of the RAID controller makes or breaks the solution here. Don't skimp on it!

Finally, and I hope this is obvious, do not turn the controller cache off. Combined with the other virtual disk settings, below are all the settings I use.

- Disk group: 24 disks (plus 2 disks as global hot spares)

- RAID type: RAID 60

- Disk cache policy: Enabled

- Write policy: Write Back

- Read policy: Read ahead

- Stripe Size element: 512K

Other tips?

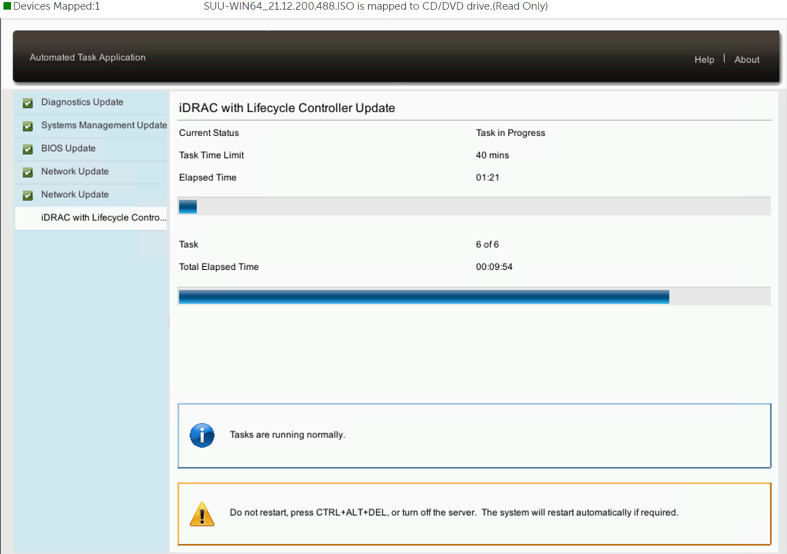

Yes, keep your BIOS, firmware, and drivers up to date. The same goes for the OS, Ubuntu 20.04.

Figure 5: Keep your BIOS, firmware, and drivers up to date

Ubuntu 20.04 LTS

What file system and with what settings?

XFS, baby! If this server ran Windows Server 2019 or 2022, I would happily use ReFS on local Storage Spaces with Mirror Accelerated Parity. But this is a Linux host running Ubuntu 20.04 LTS. Only when our OEM supports 22.04 LTS can we consider using it or upgrading. For Veeam hardened repositories, the file system of choice is XFS. XFS provides not just immutability (with the hardened repository) but also better performance for synthetic operations (with Fast Clone). So, it is the best choice for our requirements.

Important. You want XFS with data block sharing (Reflink) enabled for Veeam’s Fast Clone to work. So mind the following:

- size=4096 sets the file system block size to 4096 bytes (max supported),

- reflink=1 enables Reflink for XFS (disabled by default),

- crc=1 enables checksums, which is required when Reflink is enabled (enabled by default).

Also, with the RAID 60 virtual disk on our PERC H740P RAID controller, I had to specify the maximum log stripe unit, or I got the following message when formatting the volume. You will not see that issue in virtual machine labs with XFS on Hyper-V. XFS is smart enough to want to align its log with the RAID virtual disk's stripe size of 512K.

“log stripe unit (524288 bytes) is too large (maximum is 256KiB) log stripe unit adjusted to 32KiB.”

Formatting does not fail; mkfs.xfs just falls back to a 32KiB log stripe unit. But I set the log stripe unit to the maximum supported because backup files are written sequentially and because it aligns more closely with my RAID controller's virtual disk stripe size of 512K. I let mkfs.xfs decide the best settings for all the other options. It is better at this than I am. That is an educated guess, and I might come back to this if it turns out badly.

So, as an example, for /dev/sda that gives us:

```shell
# 4K blocks, log stripe unit capped at the 256KiB maximum, reflink and CRC for Fast Clone
sudo mkfs.xfs -b size=4096 -l su=256k -m reflink=1,crc=1 /dev/sda
```
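The log stripe unit choice can be captured in a small hypothetical helper that builds the mkfs.xfs command line from the RAID stripe size, capping the log stripe unit at the 256KiB maximum from the warning message above. It only prints the command, so nothing gets formatted by accident; the function name and parameters are illustrative.

```shell
# Hypothetical helper: print (not run) an mkfs.xfs command for a RAID-backed device.
# The log stripe unit follows the RAID stripe size but is capped at 256KiB,
# the maximum mkfs.xfs accepts for the log.
xfs_mkfs_cmd() {
  dev=$1
  raid_stripe_kb=$2
  max_log_su_kb=256
  log_su_kb=$raid_stripe_kb
  if [ "$log_su_kb" -gt "$max_log_su_kb" ]; then
    log_su_kb=$max_log_su_kb
  fi
  echo "mkfs.xfs -b size=4096 -l su=${log_su_kb}k -m reflink=1,crc=1 ${dev}"
}

xfs_mkfs_cmd /dev/sda 512   # 512K PERC stripe -> log stripe unit capped at 256k
```

After formatting and mounting the volume, running xfs_info against the mount point should list reflink=1 and crc=1, confirming that Fast Clone can work.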

Any Veeam optimizations?

Yes, some of which I have blogged about before.

Veeam Preferred networks

Read the following two blog posts of mine.

Make Veeam Instant Recovery use a preferred network – Working Hard In IT

Veeam backup job storage optimization

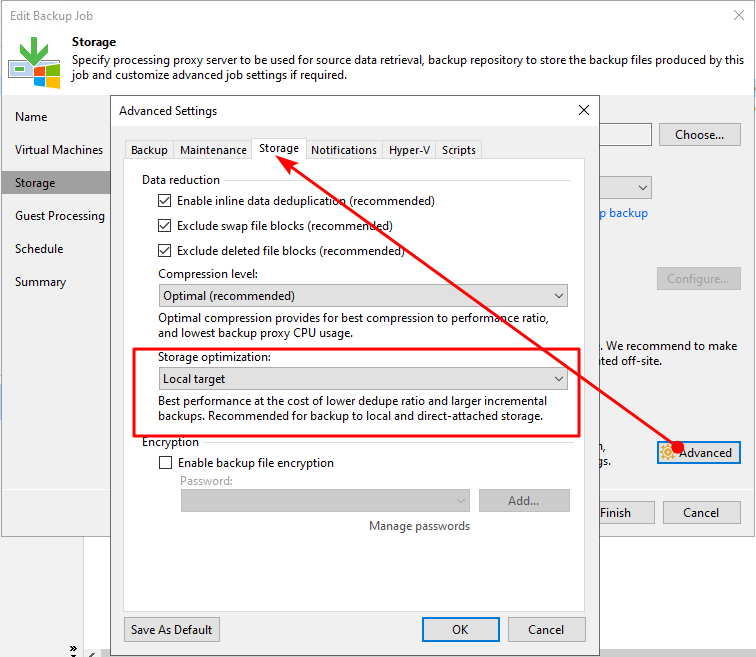

In Veeam Backup & Replication, in the settings of a backup job under Storage, Advanced, on the Storage tab, under storage optimization, you can play with both “Local target” and “Local target (large blocks).”

Figure 6: Checks and balances. Take your pick.

See what suits your needs best. Want to squeeze out the last drop of performance at the cost of some capacity? See what “Local target (large blocks)” does for you. If that suits your needs better, maybe try a 1024K RAID virtual disk stripe size. On the other hand, do you want or need to optimize capacity usage and keep your incremental backups a bit smaller? Then “Local target” is the option you want. You decide; both options will serve you well. I chose “Local target” for the reasons described in “Any RAID tweaking and settings to share? Virtual disk stripe size!”

Use per-VM backup files

Yes. Only rarely will not using that option bring you anything that outweighs its benefits. Multiple write streams are required to utilize the full capabilities of your system. Let's say a Veeam backup write stream achieves 1GB/s. With a compression ratio of about 50%, that means around 2GB/s of source data throughput. Per-VM backup files allow you to use multiple write streams (8 to 16 concurrent tasks) to go up to 5GB/s or higher. Tweak the parallelism (the number of VMs and their virtual disks backing up simultaneously inside a backup job) versus the number of concurrent backup jobs until you no longer see improvements. Then, back off to settings just below that point. Don't push your system over its limits. If you don't use per-VM backup files, you can only tweak the number of concurrent jobs to get multiple write streams.
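The throughput reasoning can be written down as a small model: aggregate write speed grows with the number of streams until the repository becomes the bottleneck, and compression turns written MB/s into a higher rate of source data processed. The helper and all input values below are hypothetical illustrations, not measurements from these servers.

```shell
# Sketch: effective source throughput from parallel write streams.
# All inputs are hypothetical illustration values, not measurements.
# per_stream_mb: MB/s one write stream achieves on the repository
# streams:       concurrent write streams (per-VM backup files make these possible)
# cap_mb:        MB/s the repository can absorb in total
# stored_pct:    percentage of source size left after compression (50 = 2:1)
source_throughput_mb() {
  per_stream_mb=$1
  streams=$2
  cap_mb=$3
  stored_pct=$4
  written=$((per_stream_mb * streams))
  if [ "$written" -gt "$cap_mb" ]; then
    written=$cap_mb            # the repository becomes the bottleneck
  fi
  echo $((written * 100 / stored_pct))
}

source_throughput_mb 1000 1 5000 50   # one 1GB/s stream at 50% -> 2000 MB/s of source data
source_throughput_mb 1000 8 5000 50   # capped by the assumed 5000 MB/s repository limit -> 10000
```

Once adding streams no longer raises the result, you have hit the limit, which mirrors the advice to back off to settings just below that point.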

Conclusion

That was it. You have now read how I configure Dell servers to use with XFS for Veeam hardened repositories. I hope it is of use. For your designs, what do you do? Care to share and discuss this in the comments?