Introduction

One of the pleasures I find in my job and even in my spare time is designing and testing solutions for the networking requirements of applications. Of course, that entails catering to the needs of the architects and developers. But it also means complying with security policies and the realities of how networking works. The latter sounds obvious, but it isn’t. Many Azure application designs or architecture drawings that come across my desk have magical connectivity “assumed” where they state X should talk to Y. There are no specifics or details, not even a mention of how that would happen.

Figure 1: Networking (photo by Brett Sayles)

Now, granted, software-defined networking in Azure takes care of many things for you under the hood. However, that does not mean you can always hope for network traffic to flow as you think it should automatically. It requires design and the validation of that design. But you cannot design what you do not understand or know. That takes reading, learning, and lab work to understand.

Finally, you want to plan for the future and ensure you don’t paint any stakeholders into a corner. Doing things fast, with minimal effort, and without thinking them through can lead to costly and disruptive redesigns.

Communication is key!

When designing solutions, I usually make many drawings and build lab environments to test my assumptions to ensure I get everything right. I use colored pens, markers, draft paper, or a whiteboard. Such drawings tend to be busy as they contain and communicate much information. Nowadays, a digital whiteboard or an interactive touch monitor makes it possible to share with the entire world, even from the comfort of my home office. Tools like Excalidraw or Microsoft Whiteboard make the difference here.

Figure 2: Whiteboarding – an excellent tool for designing & communicating options/solutions

I then translate that whiteboard work into Visio or diagrams.net (formerly known as draw.io) documents that describe the patterns. There are many patterns and variations of those patterns. I do not make drawings for each one, just the ones I need. But over time, you collect quite a few of them. Then, when discussing solutions with the developers or architects, I can fall back on those patterns or leverage them as a base for specific designs.

Routing application gateway traffic to a private endpoint.

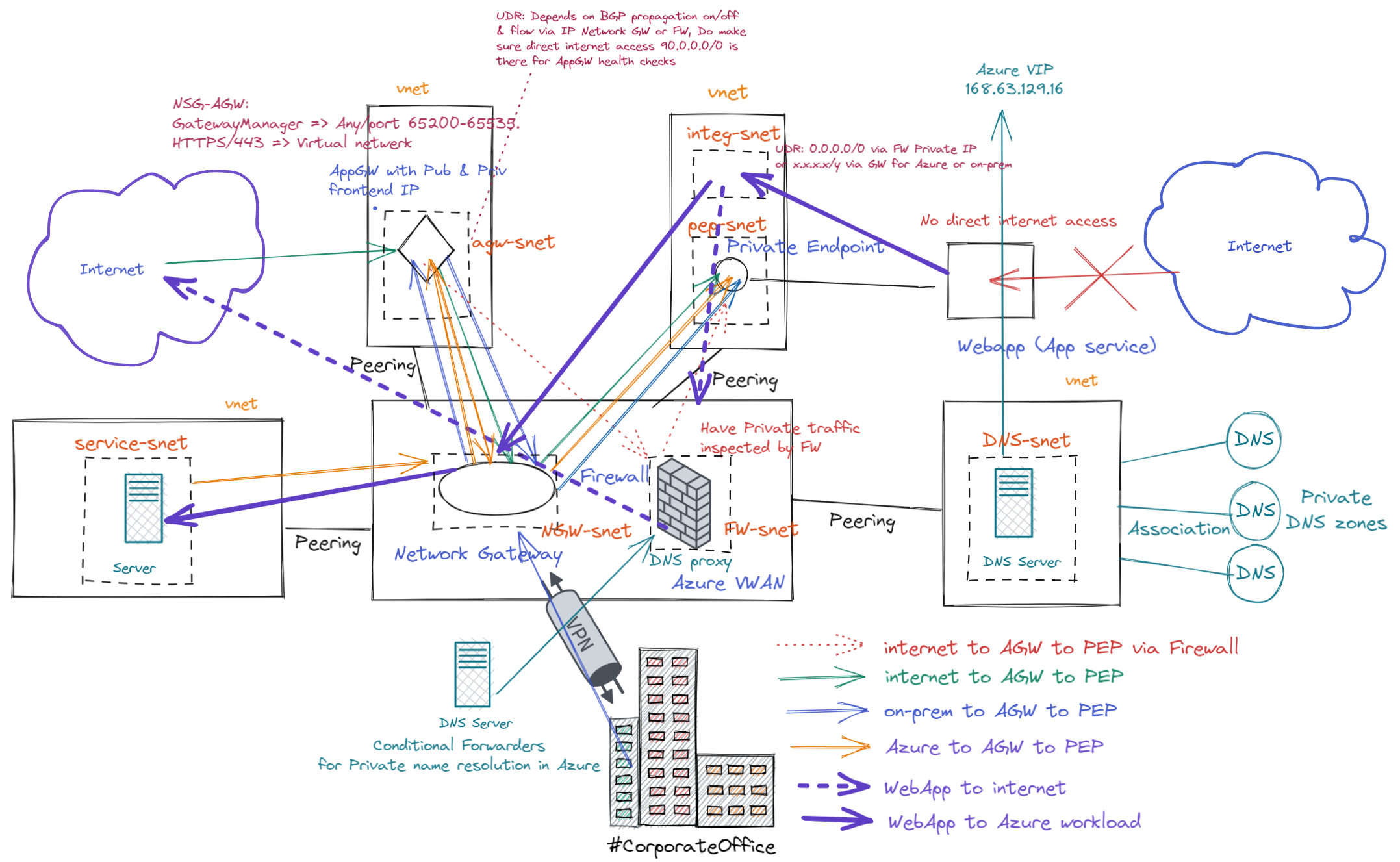

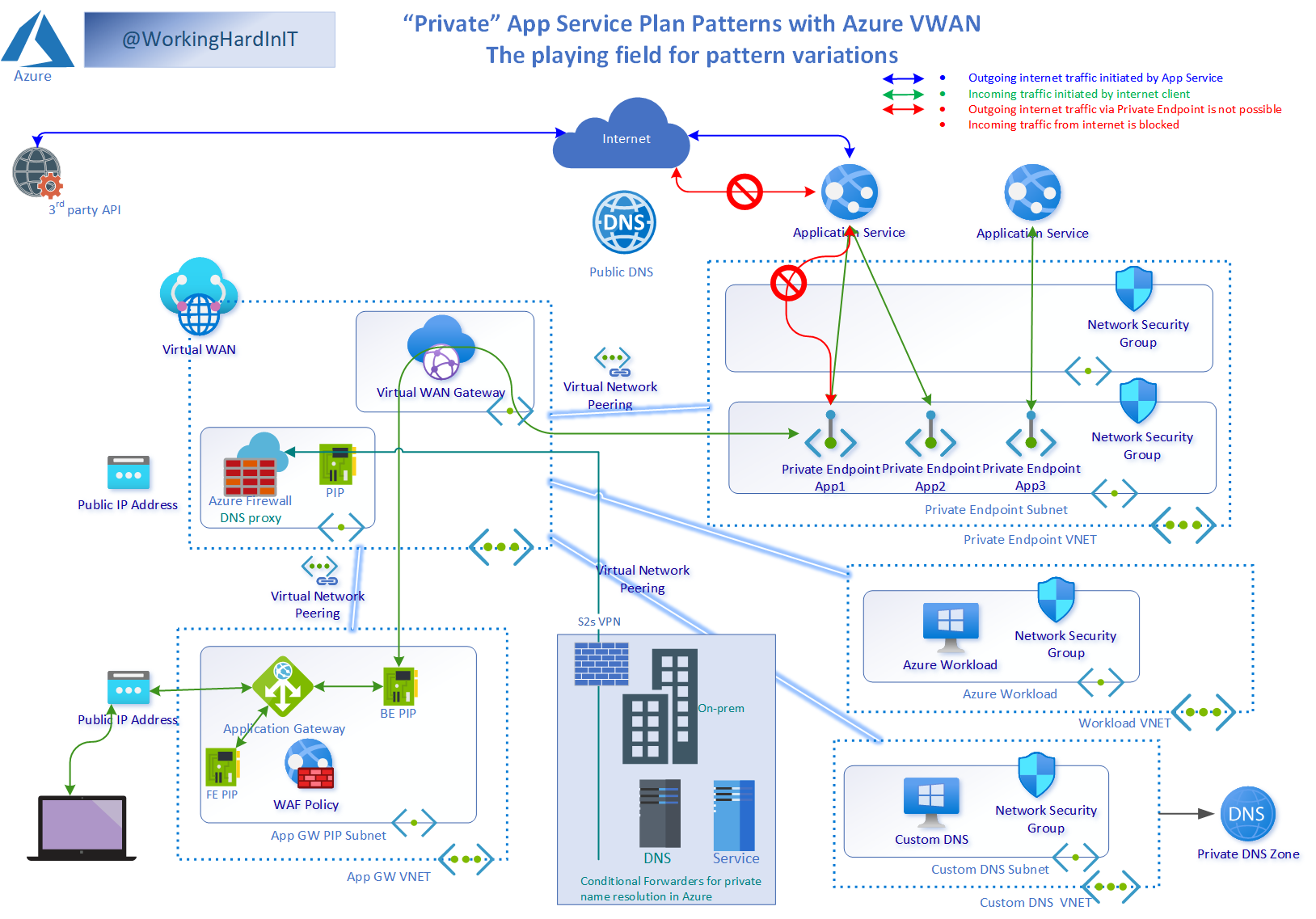

Here I will discuss some basic patterns I have when routing traffic via an application gateway to a private endpoint of a backend application in an Azure VWAN environment with a secured hub.

The challenge we always face is creating maintainable solutions that can be rolled out as Infrastructure as Code via a DevSecOps pipeline. That means we must develop a limited set of options we can leverage for most use cases without too much customization. You can’t support 25 patterns and hope not to drown in the effort of maintaining those. On the other hand, there is no “one size fits all.” We must strike a balance between the needs and wants of developers, application requirements, maintainability, and complexity of the solution. So we tend to come up with some patterns we’ll use. That means we can still be flexible but avoid needing 25 solutions for 25 services or applications.

The Azure VWAN environment

Setting the stage

Before discussing the patterns, we should look at the Azure VWAN environment. That will give you a visual overview of the landscape. Think of it as a map with the geographical landmarks marked on it that will help you navigate your network traffic to where and in the way it is required.

For simplicity’s sake, I have only drawn an Azure Virtual WAN with one secured hub, i.e., a virtual hub with a firewall. Yes, we can draw up patterns for multiple secured HUBs, which leads to some interesting findings regarding inter-hub routing, but that is a subject for another article.

With Azure Virtual WAN, we have transitive peering with all the connected VNETs by default unless we leverage custom route tables and the firewall to manipulate traffic flow. While we can certainly do that, we will not discuss this here.

Figure 3: The Azure VWAN environment with Web App and App Gateway

Here we have a run-of-the-mill Azure Virtual WAN with a secured hub, meaning we deployed an Azure Firewall in it. When you create a private endpoint for the web app, public access to that app is blocked automatically. So we need another way to get to our web app, and that is the Application Gateway (AGW). Via the public IP of the AGW (front end), we can access our web app (App Service) over its private endpoint (back end).

Deciding where the private endpoints and AGW live

As you might notice, the AGW has its own VNet/Subnet, just like the Private endpoints. I follow Microsoft’s preferred solution (see Use Azure Firewall to inspect traffic destined to a private endpoint – Azure Private Link | Microsoft Docs). I combine scenarios 1 and 4 from the above article as I also have on-premises access. And I use an Azure VWAN. However, if you dedicate an AGW to a single app or several apps in a service plan under the control of the same developers, you can most certainly place the private endpoints in a subnet of the AGW VNet. That fits perfectly into the DevOps mindset, where they control as much as possible for easy working and flexibility and have as few dependencies as possible on a centralized AGW managed by an Ops team. Perfect, right? Yes, it is an excellent approach.

However, that is different from Microsoft’s favorite. That is a solution where we put the private endpoints in their own VNet/Subnet(s). It fits perfectly into the hub/spoke networking model, scales well, and avoids running into the 400 routes limit of a routing table, as we can add a route to the subnet(s) where the private endpoints live. The dedicated VNet/Subnet can belong to a DevOps team that manages it for all the different applications and services that share the AGW. That leaves them in control. In a shared fashion, where multiple groups share the same resources, the SecOps team manages the private endpoint VNet/Subnet(s). Such an environment requires good DevSecOps integration regarding pull requests and pipelines to keep things flowing smoothly. As there is some peering involved, it introduces an extra cost. Cynically, some argue that many of Microsoft’s best practices incur additional charges. On the flip side, there is a benefit to them as well. It is up to you to decide what fits your needs and budgets best.

Decentralized, Centralized, or Hybrid AGW

For an AGW with or without private endpoints, I see several options. One option is to create a central AGW managed by Ops that serves all developer teams. That is the old-school IT (on-prem?) approach, and it does work. However, it makes Devs dependent on the Ops team. Also, security (policy) wise, some people might want all the private endpoints ending up in the same VNet for that central AGW. But we can avoid this (as we discussed before) by deploying the private endpoints in their separate VNet(s)/subnet(s). Doing so per application or DevOps team keeps them in charge of their endpoints. In larger environments, a central AGW might lead to scalability issues. That central AGW also becomes a single point of failure, and a problem with it could impact all apps. So this is fine for a small environment but not when you plan for growth and complexity. I have one AGW like this in large environments to cater to small projects with no AGW and networking skills. They want to consume connectivity and not be bothered by any details.

The second option is an AGW dedicated to a dev team or business unit. That keeps the DevOps benefits we discussed before, and as the apps all belong to the same DevOps team, they can control it if they want, and then it is up to them to decide where to place the endpoints. If they put them in the VNET of the AGW, they can avoid some network configuration required to make things work. DevSecOps can collaborate, and you optimize for the best of both worlds, depending on the environment.

Finally, a dedicated AGW for a single application is what I do when the need for isolation is very high or the service is so demanding that it is warranted. In that case, the DevOps team does not share the environment, and you can put the private endpoints in the AGW VNet.

As a final note, because I am using an Azure Virtual WAN, and part of that is managed as a global resource by MSFT, I do not deploy an AGW in the hub. Instead, it is in a spoke of the Azure Virtual WAN Hub.

The default network traffic flow

The default network traffic flow will be as seen in the image above. A client on the Internet connects to the AGW public IP address, the WAF policy runs if enabled, and if all is good, the traffic flows over the private IP of the AGW via the VWAN Hub to the private endpoint. All thanks to the transitive peering the VWAN provides. Very convenient.

Note: When you need to connect to an Application Gateway from inside your network, be it in Azure or on-prem via an S2S VPN, you must create an Application Gateway with a public and private frontend IP. Remember, you cannot make one with only a private frontend IP. However, you can block internet traffic from connecting to the public frontend IP. Also worth mentioning is that creating a private endpoint for an AGW is in public preview at the time of writing.

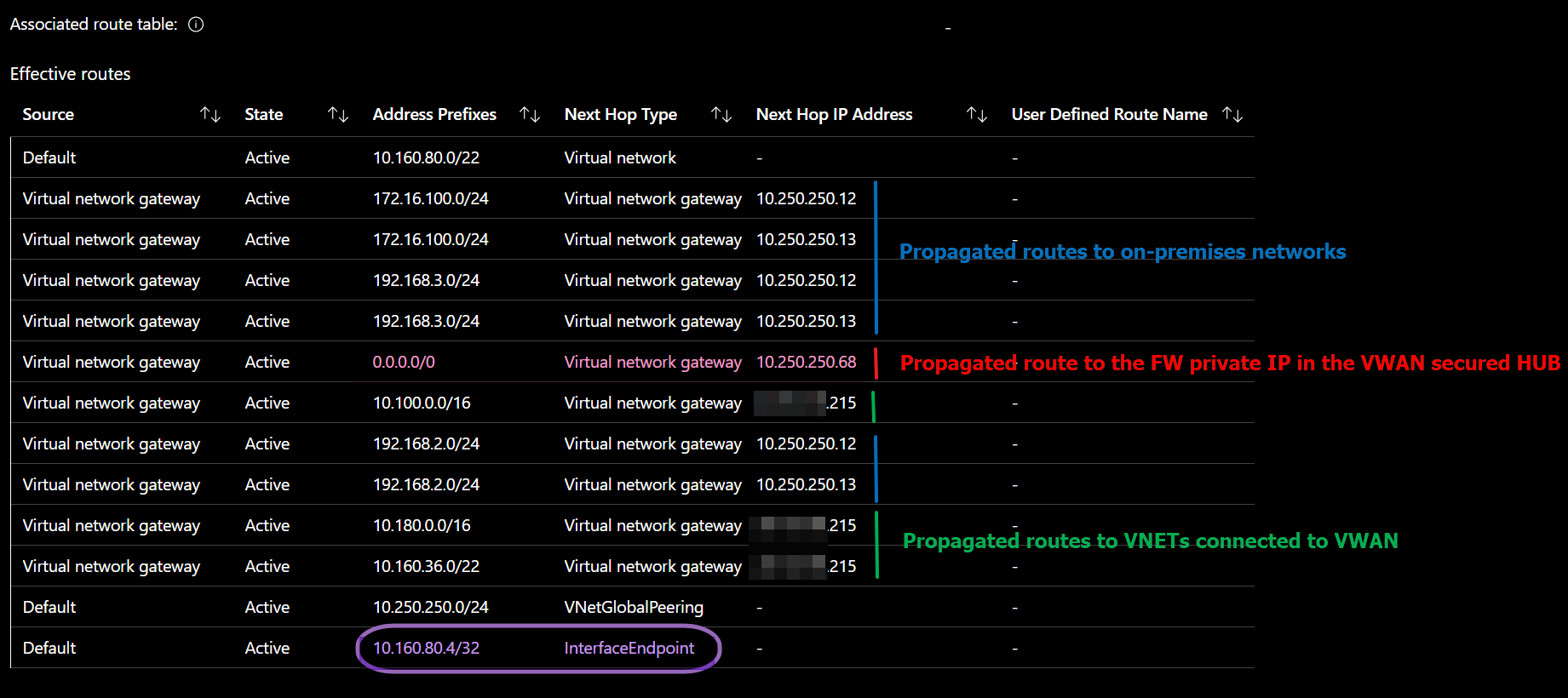

When using directly peered VNETs, the routing for traffic to the private endpoints can become a bit more involved, especially with many of them. That is because a /32 route towards a next hop type of “InterfaceEndpoint” for every private endpoint IP is created. Behind that InterfaceEndpoint, Microsoft performs its software-defined routing for the endpoint to reach its service.

Azure creates those /32 routes in the routing table of the subnets in the VNET where the private endpoints live. Additionally, they appear in system route tables of every directly peered VNET. So with the AGW and the Private Endpoints in separate VNETS, which we transitively peer via the VWAN, we can avoid much of that maintenance overhead. In addition, UDR support for Private Endpoints helps prevent us from hitting the 400 route limit. More on that later.
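To make the route-count argument a bit more tangible, here is a minimal Python sketch using only the standard library `ipaddress` module. The subnet and the number of endpoints are made up for illustration and do not come from the environment in this article. It only shows that a single route for the private endpoint subnet covers every per-endpoint /32 prefix.

```python
import ipaddress

# Hypothetical private endpoint subnet, chosen for illustration only.
PEP_SUBNET = ipaddress.ip_network("10.10.1.0/24")

# Pretend 40 private endpoints received consecutive addresses starting at .4.
endpoint_ips = [PEP_SUBNET.network_address + offset for offset in range(4, 44)]

# One /32 prefix per private endpoint, as seen in the effective routes.
per_endpoint_routes = [ipaddress.ip_network(f"{ip}/32") for ip in endpoint_ips]


def covered_by(prefix, routes):
    """Return True when every route in `routes` falls inside `prefix`."""
    return all(route.subnet_of(prefix) for route in routes)


if __name__ == "__main__":
    print(f"/32 routes to maintain individually: {len(per_endpoint_routes)}")
    print(f"covered by one route for {PEP_SUBNET}: "
          f"{covered_by(PEP_SUBNET, per_endpoint_routes)}")
```

With 40 endpoints this is a convenience. With hundreds of endpoints spread over several subnets, it is the difference between staying under the route limit and hitting it.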

Figure 4: A peek at a routing table in an Azure VWAN environment

In the routing table above of a VM in the subnet where the Private Endpoint lives, note the /32 route for the IP address sent to a “Next Hop Type” of “InterfaceEndpoint” (purple). Also, note the propagated routes for the on-prem networks via the active-active S2S VPN tunnels (blue), the route for internet traffic via the firewall private IP address (red), and the routes for the connected VNETs (green).
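Why the /32 route matters becomes clearer when you remember that Azure picks a route by longest prefix match. The Python sketch below is a simplified model of that selection. The prefixes, next hop types, and IP addresses are illustrative assumptions, not the values from the figure, and the sketch ignores tie-breaking between route sources and the private endpoint network policies.

```python
import ipaddress

# Illustrative effective routes: (prefix, next hop type, next hop IP or None).
# All values are hypothetical.
ROUTES = [
    ("0.0.0.0/0", "VirtualAppliance", "10.0.0.132"),    # internet via firewall private IP
    ("10.10.0.0/16", "VirtualNetworkGateway", None),    # connected VNet learned from the hub
    ("192.168.0.0/16", "VirtualNetworkGateway", None),  # on-prem network over the S2S VPN
    ("10.10.1.4/32", "InterfaceEndpoint", None),        # private endpoint
]


def select_route(destination, routes=ROUTES):
    """Return the matching route with the longest prefix for `destination`."""
    dest = ipaddress.ip_address(destination)
    matches = [
        (ipaddress.ip_network(prefix), hop_type, hop_ip)
        for prefix, hop_type, hop_ip in routes
        if dest in ipaddress.ip_network(prefix)
    ]
    # The default route always matches, so `matches` is never empty here.
    return max(matches, key=lambda match: match[0].prefixlen)


if __name__ == "__main__":
    for target in ("10.10.1.4", "10.10.2.7", "8.8.8.8"):
        prefix, hop_type, _ = select_route(target)
        print(f"{target} -> {prefix} ({hop_type})")
```

The private endpoint address matches three routes in this model, but the /32 wins because it is the most specific.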

We now have a map of the environment. We also better understand the content of the routing table in such an environment. Armed with this, we can discuss patterns for manipulating traffic flows to and from the Private Endpoints of our web apps.

Routing the traffic flow

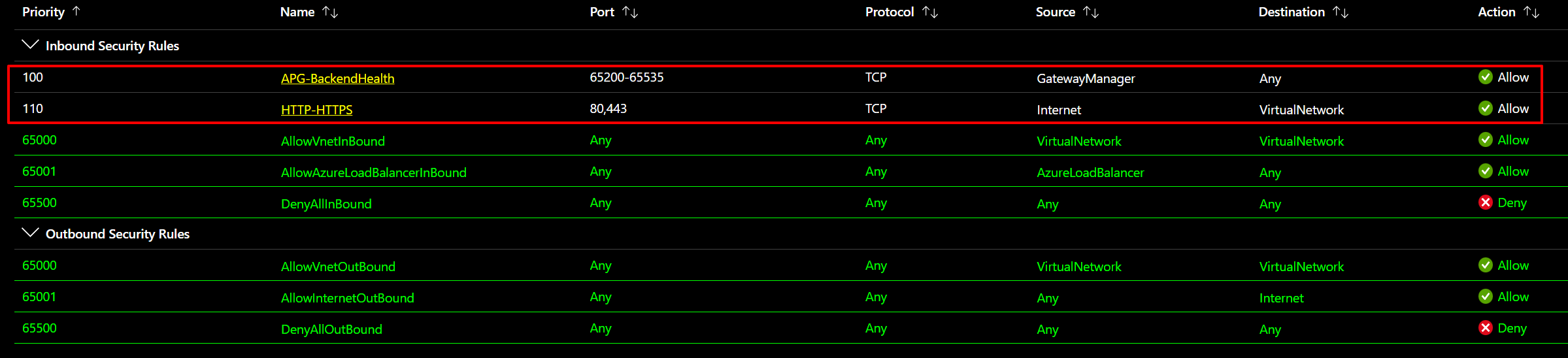

For our AGW scenario, we will use Network Security Groups (NSG) and User Defined Routes (UDR) to help secure and route network traffic.

One variation to look at is sending traffic from the AGW destined for a Private Endpoint via the firewall. And vice versa, have outgoing traffic from our web app flow via the firewall.

Apart from network connectivity and routing rules, how do we get traffic from the AGW private IP address to route to the Private Endpoint IP address in the private endpoint subnet and arrive at our web app? Well, first of all, we need a DNS name resolution that can resolve name queries for Private Endpoints.

DNS

There are two options for DNS when it comes to Private Endpoints. These are a custom DNS server solution or an Azure DNS Private Resolver.

These are the ones that will allow resolving DNS queries from on-premises locations as well as enable easy centralization of the DNS solution. In this environment, we use a custom DNS server solution since we have traffic that might come in from on-premises, and we are leveraging an Azure Virtual WAN with a secured Hub, i.e., the hub has a firewall. That is important as we will use the DNS proxy capability of the Azure Firewall.

So the above deserves some further explanation. First, on-prem DNS servers cannot conditionally forward to the Azure VIP (168.63.129.16) directly, so those queries must pass via a custom DNS server or the Firewall DNS proxy. On top of that, we cannot link a secured hub to a private DNS zone. That is because Microsoft manages the secured virtual hub, not you! Furthermore, since we might be using the firewall in the VWAN to filter traffic from either on-premises or VNET clients going to services exposed via Private Endpoints in a Virtual WAN-connected virtual network, the firewall needs name resolution.

Finally, the custom DNS server can conditionally forward DNS queries for on-prem or handle them in various ways (manually maintained forward lookup zones, secondary DNS servers of the on-premises DNS servers, or domain controllers with integrated DNS). The custom DNS server in the drawing represents all these options. In addition, the various DNS design possibilities deserve their dedicated article(s).
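As a rough illustration of what conditional forwarding on the custom DNS server boils down to, here is a small Python sketch. It is only a model of the decision logic, not a DNS server. The on-prem zone name and the on-prem DNS server IP are hypothetical. The `privatelink.azurewebsites.net` zone is the private DNS zone used for web app private endpoints, and 168.63.129.16 is the Azure DNS virtual IP.

```python
# Azure-provided DNS virtual IP, reachable only from inside Azure VNets.
AZURE_DNS_VIP = "168.63.129.16"
# Hypothetical on-prem DNS server, for illustration only.
ONPREM_DNS = "192.168.10.10"

# Conditional forwarders: DNS suffix -> forwarder IP.
FORWARDERS = {
    "privatelink.azurewebsites.net": AZURE_DNS_VIP,
    "corp.example.com": ONPREM_DNS,  # hypothetical on-prem zone
}
DEFAULT_FORWARDER = AZURE_DNS_VIP


def pick_forwarder(qname):
    """Return the forwarder for `qname`, preferring the longest matching suffix."""
    name = qname.rstrip(".").lower()
    best = None
    for suffix in FORWARDERS:
        if name == suffix or name.endswith("." + suffix):
            if best is None or len(suffix) > len(best):
                best = suffix
    return FORWARDERS[best] if best else DEFAULT_FORWARDER


if __name__ == "__main__":
    for query in ("myapp.privatelink.azurewebsites.net",
                  "dc01.corp.example.com.",
                  "www.example.org"):
        print(f"{query} -> {pick_forwarder(query)}")
```

Because this logic runs on a server inside an Azure VNet, it can reach the Azure VIP, which is exactly what the on-prem DNS servers cannot do themselves.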

Last but not least, the Azure DNS Private Resolver is also an option. An Azure DNS Private Resolver does not require custom DNS servers. So if you don’t have Active Directory extended into your solution and, as such, have no need for custom DNS servers anyway, this is your new first choice. If you do have custom DNS servers, the resolver can integrate with them, but I am not sure it is always worth the additional cost in such a scenario.

UDR and NSG, as well as some firewall details

To route traffic from the AGW to the Private Endpoint, we need to use a UDR that we will assign to the AGW subnet. With a UDR, you can propagate the gateway routes or not. Both options are valid. It just depends on what you want to achieve and how. So we will look at both.

But before we do that, you might already wonder why we need a UDR at all, as we are using an Azure VWAN with transitive peering. Shouldn’t the AGW subnet and the PEP subnet be able to talk to each other via their VNET connections to the VWAN, as long as I did not block that traffic via some custom route table in the VWAN or in the firewall?

So why do we need that UDR? Well, that has to do with the AGW backend health checks for which the AGW needs to reach the Internet directly. Since we have a secured Hub in our Azure VWAN, the default route for the Internet passes over the firewall and does not get to the Internet directly from the AGW. That breaks the Azure infrastructure communication for the backend health checks because that traffic must be able to communicate directly to the Internet. The return traffic must access the AGW over ports 65200-65535.

That also means you must open ports 65200-65535 on any NSG bound to the AGW subnet. In case you are worried about that traffic, don’t. Azure protects those endpoints with certificates, and they are not usable by external parties or yourself for anything else. So, add an inbound rule with a service tag as the source and use “GatewayManager” as the service tag. First, fill out * for the source port ranges. Leave the destination on any and select custom for the service type. Next, fill out 65200-65535 for the destination port range and choose TCP for the protocol. Give it a priority and a descriptive name. Remember to allow inbound traffic for HTTP/HTTPS as well. After all, the aim is to connect to a web app.
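The inbound rules described above can be modeled in a few lines of Python, which is handy for reasoning about what the NSG lets through before you deploy it. This is a simplified sketch and not the Azure API. The rule names and priorities are assumptions, and the built-in default NSG rules are reduced to "no match means deny."

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InboundRule:
    name: str
    priority: int      # lower number is evaluated first
    source: str        # service tag or "*"
    protocol: str      # "Tcp", "Udp", or "*"
    port_start: int
    port_end: int
    access: str = "Allow"


# Hypothetical rule set for the AGW subnet NSG.
RULES = [
    InboundRule("Allow-GatewayManager", 100, "GatewayManager", "Tcp", 65200, 65535),
    InboundRule("Allow-HTTPS", 110, "Internet", "Tcp", 443, 443),
    InboundRule("Allow-HTTP", 120, "Internet", "Tcp", 80, 80),
]


def allows(rules, source, port):
    """Return True if the first matching rule (by priority) allows TCP traffic."""
    for rule in sorted(rules, key=lambda r: r.priority):
        source_ok = rule.source in (source, "*")
        protocol_ok = rule.protocol in ("Tcp", "*")
        port_ok = rule.port_start <= port <= rule.port_end
        if source_ok and protocol_ok and port_ok:
            return rule.access == "Allow"
    return False  # simplification: anything unmatched is denied


if __name__ == "__main__":
    print(allows(RULES, "GatewayManager", 65200))  # infrastructure traffic
    print(allows(RULES, "Internet", 443))          # web traffic
    print(allows(RULES, "Internet", 65300))        # high ports from the Internet
```

Note that the high port range is only open for the “GatewayManager” service tag, not for the Internet as a whole.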

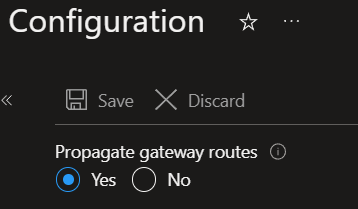

We can also disable BGP on the routing table. You do this by setting “Propagate gateway routes” to “No” under the configuration of your route table. Note that you can block BGP propagation from the VNET connection on the VWAN. However, that affects all subnets in the VNET, which might differ from what you need.

Anyway, this blocks the AGW subnet from seeing all the routes the (default) route table in the VWAN propagates. It helps keep the network layout from prying eyes. As a side effect, the AGW can reach the Internet directly. However, we need to use a UDR with a route telling the AGW how to get to the Private Endpoint (hint: via the firewall’s private IP). If you don’t have a firewall, you could select one of the hub’s router IP addresses. That works, but you lose redundancy.

Furthermore, you cannot use “VirtualNetworkGateway” as the “next hop type.” Either the VWAN propagates the IP address via BGP, or you configure this via a local virtual network gateway. The latter is not the case here. The gateway is not local but lives in the Azure VWAN.
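To make both UDR variants concrete, here is a Python sketch that builds a route table definition shaped like an ARM `Microsoft.Network/routeTables` resource. The function name, route names, prefixes, and IP addresses are my own assumptions for illustration. The second route in the "propagate" variant reflects my reading of the health check requirement above, namely that the AGW subnet then needs an explicit route to the Internet.

```python
import json


def build_agw_route_table(name, pep_prefix, firewall_ip, propagate_gateway_routes):
    """Build a route table definition for the AGW subnet (illustrative only)."""
    routes = [
        {
            # Send traffic for the private endpoint subnet via the firewall.
            "name": "to-private-endpoints",
            "properties": {
                "addressPrefix": pep_prefix,
                "nextHopType": "VirtualAppliance",
                "nextHopIpAddress": firewall_ip,
            },
        }
    ]
    if propagate_gateway_routes:
        # With propagation on, the hub's default route points at the firewall,
        # so override it to keep the AGW's path to the Internet direct.
        routes.append(
            {
                "name": "internet-direct",
                "properties": {"addressPrefix": "0.0.0.0/0", "nextHopType": "Internet"},
            }
        )
    return {
        "type": "Microsoft.Network/routeTables",
        "name": name,
        "properties": {
            # "Propagate gateway routes: No" in the portal maps to True here.
            "disableBgpRoutePropagation": not propagate_gateway_routes,
            "routes": routes,
        },
    }


if __name__ == "__main__":
    # Hypothetical values: PEP subnet 10.10.1.0/24, firewall private IP 10.0.0.132.
    table = build_agw_route_table("rt-agw", "10.10.1.0/24", "10.0.0.132", False)
    print(json.dumps(table, indent=2))
```

Generating the definition from a function like this fits the Infrastructure as Code approach: the two variants differ in one parameter instead of two hand-maintained templates.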

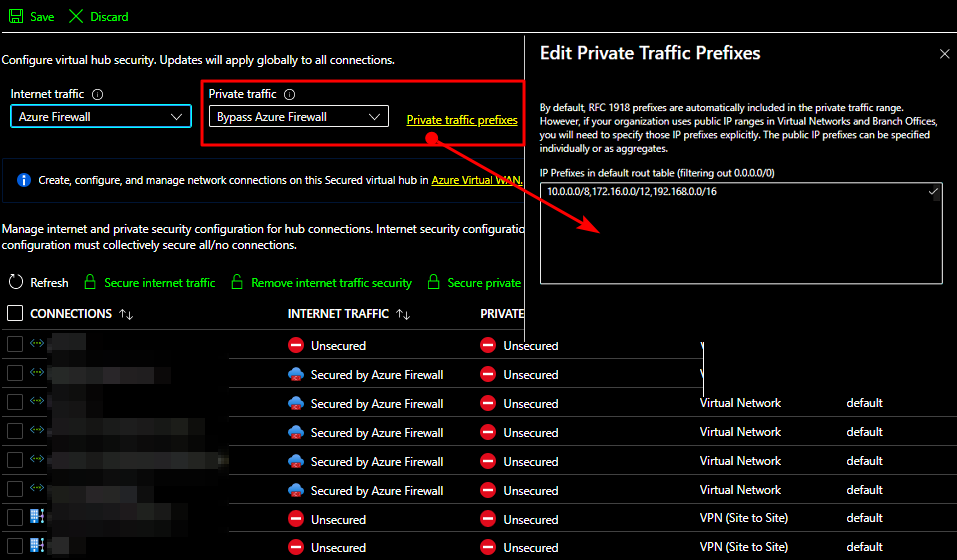

Luckily, a firewall is often a hard requirement, so that is not a big issue. But what if, for some reason, you do not want to inspect that traffic via the firewall at all? Well, that is the default setting. Even if you send the traffic over the secured HUB Firewall’s private IP address, the private traffic bypasses the firewall by default. So you need to configure the private traffic for inspection by the firewall. Before support for UDR with Private Endpoints arrived, which makes life much easier now, you had to enter the /32 prefixes for the private endpoints. While you can change this in the firewall configuration, note that this affects all VNETs. If that is not what you desire, stick with a UDR on the subnet. But, in that case, you must “micro-manage” the routing yourself. Naturally, we also have to configure the firewall rules to allow the traffic, or it will be blocked. For HTTP/HTTPS traffic, FQDN rules (application or network rules) will work just fine.

Figure 5: Remember, the private traffic bypasses the Azure firewall by default.

Firewall specifics

Certificates

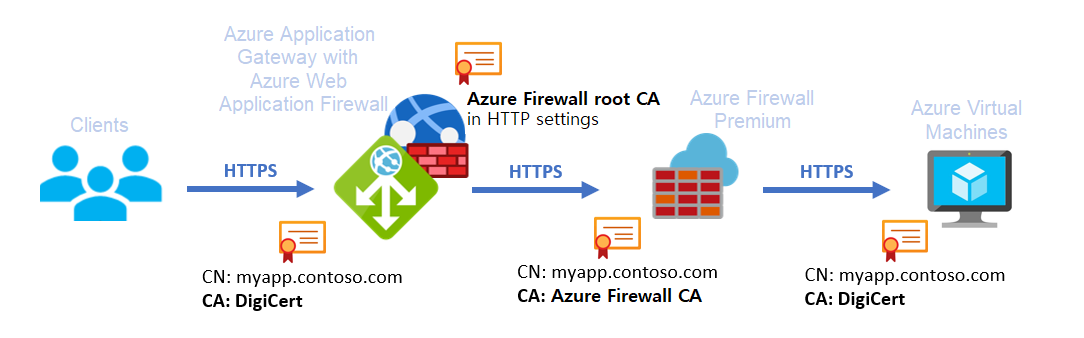

I will not go into certificate configuration here. There are many ways to skin a cat, but you will need a trusted cert from a CA for the Application Gateway. Next, you need a cert for the App service. For this certificate, you can use an automatically provisioned (DigiCert) one or another public/commercial one. Your choice depends on your needs and ability to manage and automate the process around certificate life cycle management. But if you leverage the Azure firewall, you’ll need the AGW to trust the firewall certificate, and for that, you upload the root cert for the private CA that issues the firewall certificates.

Figure 6: Image courtesy of Microsoft Zero-trust network for web applications with Azure Firewall and Application Gateway – Azure Architecture Center | Microsoft Docs

People with hands-on experience using certificates in anything more than a one- or two-tier application involving the decryption and re-encryption of TLS traffic know that certificate lifecycle management can be very challenging. It imposes a considerable operational burden, while full end-to-end process automation is often near impossible. Not just for technical reasons but often due to separation of duties, security policies, politics, and lack of resources to achieve this. That challenge is constantly reinforced by “design by committee” URL naming schemes where people for whom function follows form instead of the other way around come up with elaborate standards that trip up many automation scenarios as they seem to change quite often, despite being a “standard.”

But despite the above challenges, and no matter what, it is paramount to get monitoring and alerting processes around certificate lifecycle management in order. A smooth flow for creating, renewing, revoking, and deploying certificates is critical to avoiding service outages.
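As a starting point for such monitoring, here is a minimal Python sketch of the check at the heart of it: how many days are left before a certificate expires. It uses only the standard library. The 30-day threshold and the function names are assumptions, and fetching the certificate itself (from the AGW listener, Key Vault, or the App Service) is deliberately left out.

```python
import ssl
from datetime import datetime, timezone


def days_until_expiry(not_after, now):
    """Days left before expiry.

    `not_after` uses the text format found in the 'notAfter' field returned by
    ssl.SSLSocket.getpeercert(), e.g. "Jun 30 12:00:00 2030 GMT".
    `now` must be a timezone-aware datetime.
    """
    expires = datetime.fromtimestamp(ssl.cert_time_to_seconds(not_after), tz=timezone.utc)
    return (expires - now).days


def needs_renewal(not_after, now, threshold_days=30):
    """Return True when the certificate expires within `threshold_days`."""
    return days_until_expiry(not_after, now) <= threshold_days


if __name__ == "__main__":
    sample_not_after = "Jun 30 12:00:00 2030 GMT"  # made-up expiry date
    today = datetime.now(timezone.utc)
    print(days_until_expiry(sample_not_after, today))
    print(needs_renewal(sample_not_after, today))
```

Run something like this on a schedule against every certificate in the chain (AGW, firewall, App Service) and alert well before the threshold, since renewals often need more than one team.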

The application gateway sits in front of the firewall.

Why did I put the Application gateway in front of (or in parallel with) the firewall? Well, the application gateway takes care of inbound TLS inspection. The Azure firewall provides outbound and East-West traffic TLS inspection. That means Azure to Azure or on-prem to Azure traffic and vice versa over private networks.

It is essential to realize that the Azure firewall cannot do inbound TLS inspection from the Internet. So by putting the Azure Application Gateway in front of the Azure Firewall, we can let the WAF do its job. Additionally, we allow for the firewall’s TLS inspection as we send the traffic from the application gateway to the private IP of the firewall, which makes it East-West traffic. See Azure Firewall Premium features for more information on this. Also, see Firewall, App Gateway for virtual networks – Azure Example Scenarios for more information on how to let network traffic flow. It is an exciting topic.

Sure, other scenarios exist, and they exist for valid reasons. However, this setup seems to satisfy most security policies and fit into most environments.

Application rules and network rules in Azure firewall

When reading up on Azure Firewall and protecting Private Endpoints in Use Azure Firewall to inspect traffic destined to a private endpoint – Azure Private Link | Microsoft Docs, we find it is better to use application rules rather than network rules. That is because this helps maintain flow symmetry. With network rules, we need to SNAT the traffic destined for private endpoints, or we’ll run into asymmetric routing issues.

Closing thoughts

There is a lot of ground to cover, and I did not go into detail about DNS, Application Gateway, Azure Firewall, and certificates. And as this is an article and not a book, you’ll need to read up on this. In the future, I might have some articles on these subjects. In a follow-up article, I hope to look at some patterns in-depth and discuss their configuration in more detail. That should help you when testing this out in the lab.

You might think this whole story is (overly) complex. Well, it is complex. Overly? Well, no. Things should be as simple as possible but not so simple that you don’t comply with the requirements anymore. Technology is not magic, and security requirements come at a cost. It requires resources (decrypting/encrypting), leading to latency, management overhead, SNAT, and routing, as data flows through the multiple parts that make up the solution. That makes troubleshooting more challenging and more tedious. Tooling might help here, but it is not a panacea.