In an Azure Stack HCI cluster, the network is the most important part. If the network is not well designed or implemented, you can expect poor performance and high latency. All software-defined solutions rely on a healthy network, whether it is Nutanix, VMware vSAN or Microsoft Azure Stack HCI. When I audit an Azure Stack HCI configuration, most of the time the issue comes from the network. This is why I wrote this topic: how to design the network for an Azure Stack HCI cluster.

Network requirements

The following statements come from the Microsoft documentation:

Minimum (for small scale 2-3 node)

- 10 Gbps network interface

- Two or more network connections from each node recommended for redundancy and performance

Recommended (for high performance, at scale, or deployments of 4+ nodes)

- NICs that are remote-direct memory access (RDMA) capable, iWARP (recommended) or RoCE

- Two or more NICs for redundancy and performance

- 25 Gbps network interface or higher

As you can see, for an Azure Stack HCI cluster of four nodes or more, Microsoft recommends a 25 Gbps network. I think it is a good recommendation, especially for an all-flash configuration or when NVMe is implemented. Because Azure Stack HCI uses SMB for communication between nodes, RDMA can be leveraged through SMB Direct.
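To make the two tiers above concrete, here is a minimal Python sketch that checks one node's NIC inventory against them. The `Nic` class, the `check_node` function and the sample adapters are hypothetical names made up for illustration. The sketch also assumes that the "recommended" tier is triggered by node count alone, which simplifies the wording of the Microsoft documentation.

```python
from dataclasses import dataclass


@dataclass
class Nic:
    name: str
    speed_gbps: int
    rdma: bool = False


def check_node(nics, node_count):
    """Return warnings for one node's NICs, based on the two tiers above."""
    warnings = []
    # Assumption: 4+ nodes means the "recommended" tier (25 Gbps, RDMA).
    recommended = node_count >= 4
    min_speed = 25 if recommended else 10
    if len(nics) < 2:
        warnings.append("fewer than two NICs: no redundancy")
    for nic in nics:
        if nic.speed_gbps < min_speed:
            warnings.append(
                f"{nic.name}: {nic.speed_gbps} Gbps is below {min_speed} Gbps"
            )
        if recommended and not nic.rdma:
            warnings.append(f"{nic.name}: not RDMA capable")
    return warnings


nics = [Nic("pNIC1", 10, rdma=True), Nic("pNIC2", 10, rdma=True)]
print(check_node(nics, node_count=2))
print(check_node(nics, node_count=4))
```

With this sample inventory, the 2-node check returns no warning, while the 4-node check flags both adapters as too slow.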

RDMA: iWARP and RoCE

Do you remember DMA (Direct Memory Access)? This feature allows a device attached to a computer (like an SSD) to access memory without going through the CPU. Thanks to this feature, we achieve better performance and reduce CPU usage. RDMA (Remote Direct Memory Access) is the same thing but across the network: it allows a remote device to access the local memory directly. Thanks to RDMA, CPU usage and latency are reduced while throughput is increased. RDMA is not mandatory for Azure Stack HCI, but it’s recommended. Microsoft has stated that RDMA increases Azure Stack HCI performance by about 15% on average. So, I strongly recommend implementing it if you deploy an Azure Stack HCI cluster.

Two RDMA implementations are supported by Microsoft: iWARP (Internet Wide-area RDMA Protocol) and RoCE (RDMA over Converged Ethernet). And I can tell you one thing about these implementations: this is war! Microsoft recommends iWARP while a lot of consultants prefer RoCE. In fact, Microsoft recommends iWARP because it requires less configuration than RoCE, and RoCE generated a high number of Microsoft support cases. But consultants prefer RoCE because Mellanox is behind this implementation. Mellanox provides valuable switches and network adapters with great firmware and drivers. Each time a new Windows Server build is released, a supported Mellanox driver/firmware is also released.

Switch Embedded Teaming

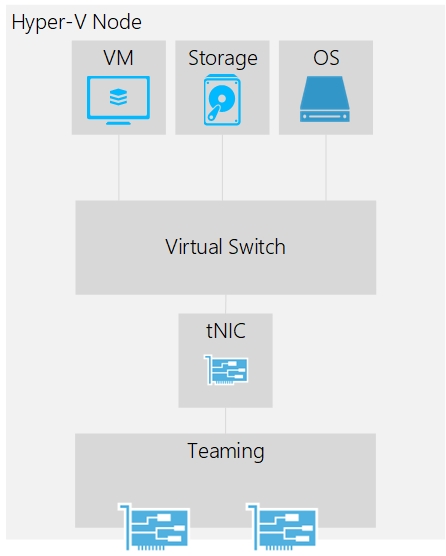

Before choosing the right switches, cables and network adapters, it’s important to understand the software side. In Windows Server 2012 R2 and prior, you had to create a NIC team. When the team was created, a tNIC was created with it. The tNIC is a sort of virtual NIC connected to the team. Then you were able to create the virtual switch connected to the tNIC. After that, the virtual NICs for management, storage, VMs and so on were added.

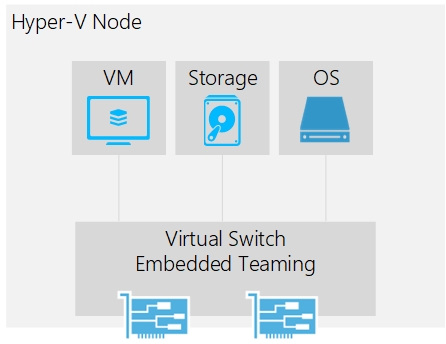

In addition to its complexity, this solution prevents the use of RDMA on virtual network adapters (vNICs). This is why Microsoft improved this part in Windows Server 2016, where you can implement Switch Embedded Teaming (SET). The teaming depicted above is now deprecated. Today you must use SET.

This solution reduces the network complexity and vNICs can support RDMA. However, there are some limitations with SET:

- Each physical network adapter (pNIC) must be the same (same firmware, same drivers, same model)

- Maximum of 8 pNICs in a SET

- The following load balancing modes are supported: Hyper-V Port and Dynamic. Hyper-V Port is the mode recommended by Microsoft to achieve the best performance.
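The three limitations above can be checked before creating the team. The following Python sketch is only an illustration: the `PNic` class, the `validate_set` function and the model, driver and firmware strings are hypothetical, and the adapter inventory is assumed to have been collected elsewhere.

```python
from dataclasses import dataclass

SUPPORTED_MODES = {"HyperVPort", "Dynamic"}
MAX_SET_MEMBERS = 8


@dataclass(frozen=True)
class PNic:
    name: str
    model: str
    driver: str
    firmware: str


def validate_set(pnics, load_balancing="HyperVPort"):
    """Return the list of SET limitations violated by this team definition."""
    errors = []
    if not pnics:
        errors.append("a SET needs at least one pNIC")
    if len(pnics) > MAX_SET_MEMBERS:
        errors.append(f"{len(pnics)} pNICs exceeds the maximum of {MAX_SET_MEMBERS}")
    # Every member must share the same model, driver and firmware.
    signatures = {(p.model, p.driver, p.firmware) for p in pnics}
    if len(signatures) > 1:
        errors.append("pNICs differ in model, driver or firmware")
    if load_balancing not in SUPPORTED_MODES:
        errors.append(f"unsupported load balancing mode: {load_balancing}")
    return errors


team = [
    PNic("pNIC1", "ExampleNIC-25G", "1.0", "14.2"),
    PNic("pNIC2", "ExampleNIC-25G", "1.0", "14.1"),  # firmware mismatch
]
print(validate_set(team))
```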

For more information about load balancing modes, Switch Embedded Teaming and its limitations, you can read this documentation. Switch Embedded Teaming brings another great advantage: you can create an affinity between a vNIC and a pNIC. Consider a SET with two pNICs as members. On this vSwitch, you create two vNICs for storage purposes. You can create an affinity between the first vNIC and the first pNIC, and another between the second vNIC and the second pNIC. This ensures that each pNIC is used.
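The affinity idea can be sketched as a simple round-robin pairing. This is only a planning aid in Python, not the way Windows implements it, and the function and adapter names are hypothetical.

```python
def map_affinity(vnics, pnics):
    """Pair each storage vNIC with a pNIC, cycling through the pNICs in order."""
    if not pnics:
        raise ValueError("at least one pNIC is required")
    return {vnic: pnics[i % len(pnics)] for i, vnic in enumerate(vnics)}


def unused_pnics(mapping, pnics):
    """Return the pNICs that no vNIC is mapped to."""
    return sorted(set(pnics) - set(mapping.values()))


pnics = ["pNIC1", "pNIC2"]
mapping = map_affinity(["SMB1", "SMB2"], pnics)
print(mapping)
print(unused_pnics(mapping, pnics))
```

With two storage vNICs and two pNICs, each pNIC carries exactly one storage vNIC and none is left unused.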

The designs presented below are based on Switch Embedded Teaming.

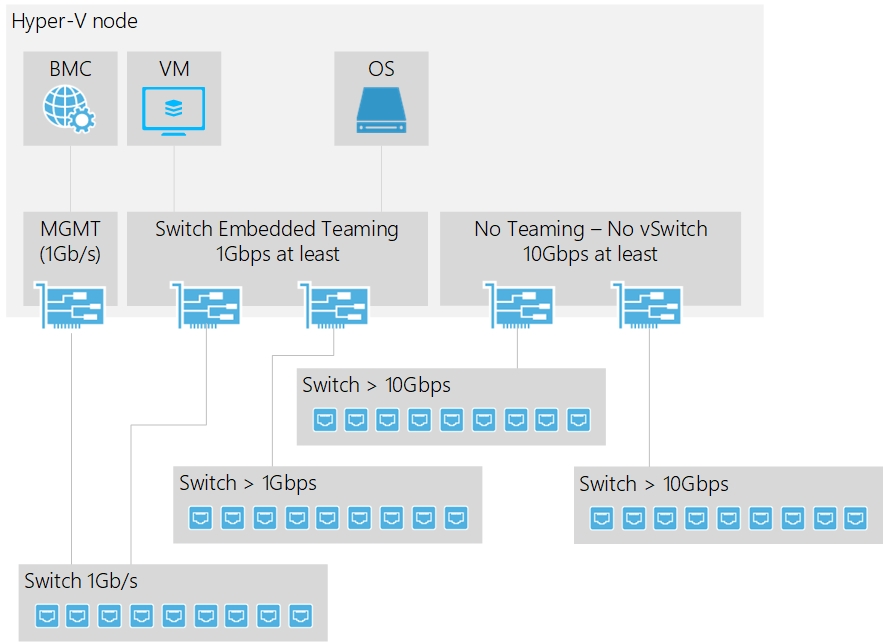

Network design: VM traffic and storage separated

Some customers want to separate VM traffic from storage traffic. The first reason is that they want to connect VMs to a 1 Gbps network. Because the storage network requires at least 10 Gbps, the two must be separated. The second reason is that they want to dedicate devices, such as switches, to storage. The following diagram introduces this kind of design:

If you have 1 Gbps network ports for VMs, you can connect them to 1 Gbps switches while the network adapters for storage are connected to 10 Gbps switches.

Whatever you choose, the VMs will be connected to the Switch Embedded Teaming (SET) and you have to create a vNIC for management on top of it. So, when you connect to the nodes through RDP, you will go through the SET. The physical NICs (pNICs) that are dedicated to storage (those on the right of the diagram) are not teamed. Instead, we leverage SMB Multichannel, which allows the use of multiple network connections simultaneously. So, both network adapters will be used to establish SMB sessions.

Thanks to Simplified SMB Multichannel, both pNICs can belong to the same network subnet and VLAN. Live Migration is configured to use this network subnet and to leverage SMB.
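If you plan the storage addresses in advance, a quick check confirms that both adapters really land in the same subnet. This is a minimal Python sketch using the standard `ipaddress` module. The addresses are made-up examples and `same_subnet` is a hypothetical helper.

```python
import ipaddress


def same_subnet(interfaces):
    """True when every 'address/prefix' string belongs to one IP network."""
    networks = {ipaddress.ip_interface(i).network for i in interfaces}
    return len(networks) == 1


# Two storage pNICs of one node, planned in the same /24.
print(same_subnet(["10.10.0.11/24", "10.10.0.12/24"]))
# A typo in the third octet puts the second adapter in another subnet.
print(same_subnet(["10.10.0.11/24", "10.10.1.12/24"]))
```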

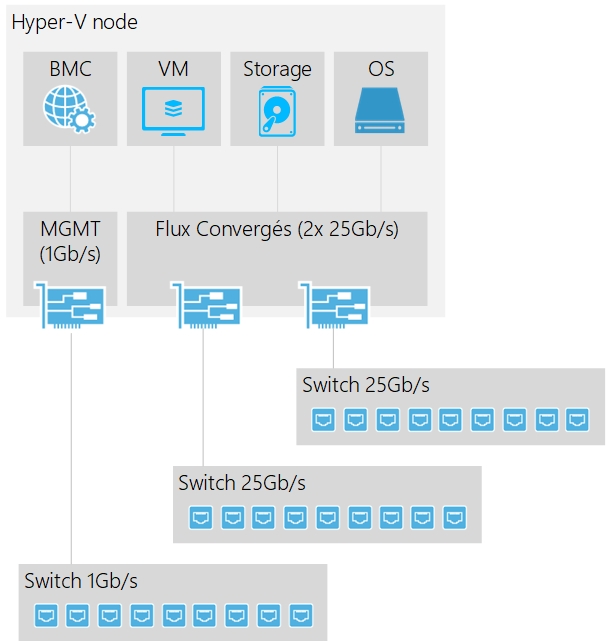

Network Design: Converged topology

The following picture introduces my favorite design: a fully converged network. For this kind of topology, I recommend at least a 25 Gbps network, especially with NVMe or all-flash. In this case, only one SET is created with two or more pNICs. Then we create the following vNICs:

- 1x vNIC for host management (RDP, AD and so on)

- 2x vNIC for Storage (SMB and Live-Migration)

The storage vNICs can belong to the same network subnet and VLAN thanks to Simplified SMB Multichannel. Live Migration is configured to use this network and the SMB protocol. RDMA is enabled on these vNICs as well as on the pNICs if they support it. Then an affinity is created between vNICs and pNICs.
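To show how these pieces fit together, the Python sketch below generates the PowerShell commands I would expect to run for this converged layout. Treat it as a starting point under assumptions: the switch, vNIC and pNIC names are hypothetical, the cmdlets and parameters are written from my understanding of the Hyper-V and NetAdapter modules and should be verified against the Microsoft documentation, and VLAN, IP addressing and QoS settings are left out.

```python
def converged_commands(switch, pnics, storage_vnics, mgmt_vnic="Management"):
    """Build the PowerShell command strings for one SET, one management vNIC
    and the storage vNICs, each storage vNIC pinned to a pNIC in turn."""
    if not pnics:
        raise ValueError("at least one pNIC is required")
    quoted = ",".join(f'"{p}"' for p in pnics)
    cmds = [
        f'New-VMSwitch -Name "{switch}" -NetAdapterName {quoted} '
        f'-EnableEmbeddedTeaming $true -AllowManagementOS $false',
        f'Add-VMNetworkAdapter -ManagementOS -SwitchName "{switch}" -Name "{mgmt_vnic}"',
    ]
    for i, vnic in enumerate(storage_vnics):
        pnic = pnics[i % len(pnics)]  # affinity: cycle through the pNICs
        cmds += [
            f'Add-VMNetworkAdapter -ManagementOS -SwitchName "{switch}" -Name "{vnic}"',
            f'Enable-NetAdapterRdma -Name "vEthernet ({vnic})"',
            f'Set-VMNetworkAdapterTeamMapping -ManagementOS '
            f'-VMNetworkAdapterName "{vnic}" -PhysicalNetAdapterName "{pnic}"',
        ]
    return cmds


cmds = converged_commands("SETswitch", ["pNIC1", "pNIC2"], ["SMB1", "SMB2"])
for line in cmds:
    print(line)
```

Generating the commands rather than running them keeps the sketch safe to execute anywhere and lets you review the output before applying it to a node.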

I love this design because it is simple. You have one network adapter for the BMC (iDRAC, iLO, etc.) and only two or more network adapters for cluster and VM traffic. So, the physical installation in the datacenter and the software configuration are easy. However, for highly intensive clusters, it can limit performance. In addition, in some companies the network topology is complex, and it is easier to move cluster traffic (SMB, Live Migration) to dedicated switches.

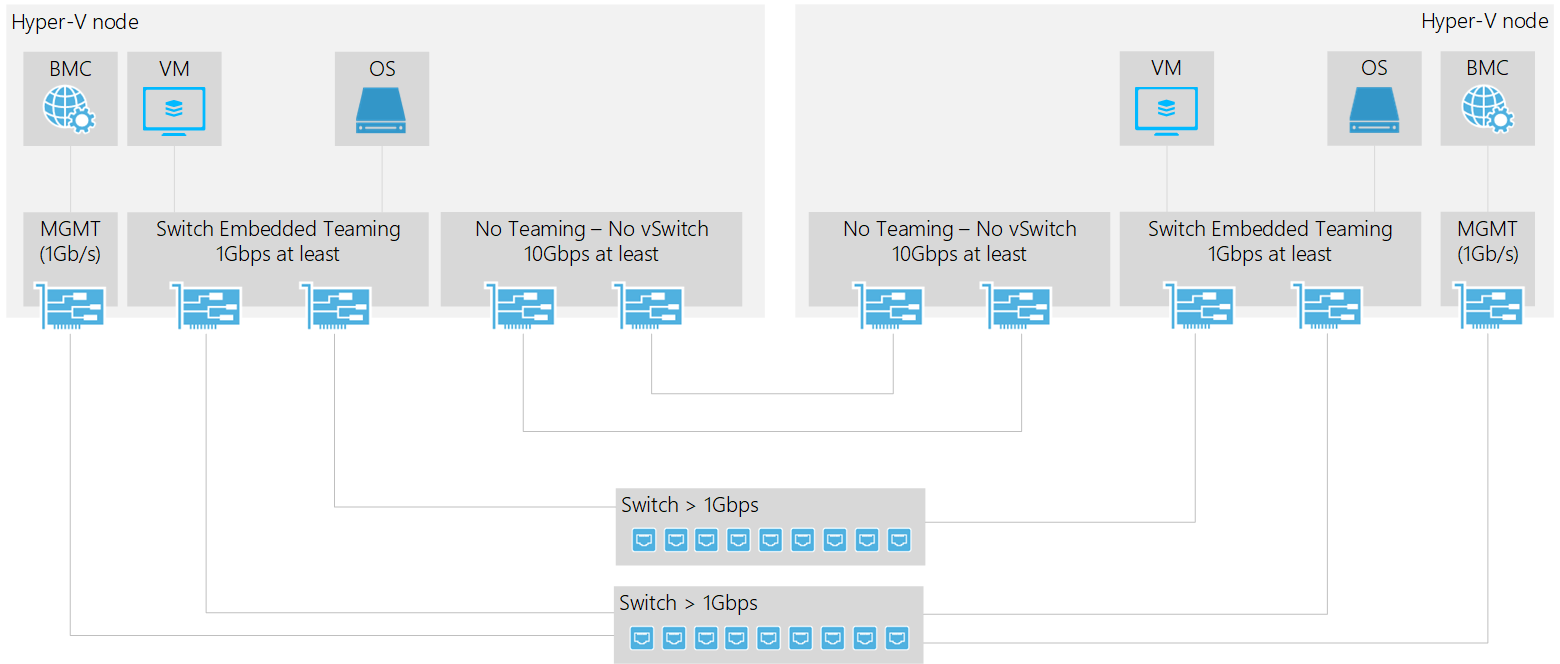

Network Design: 2-node Azure Stack HCI cluster

Because we are able to direct-attach both nodes in a 2-node configuration, you don’t need a switch for storage. However, the virtual machines and the host management vNIC require connectivity, so switches are still required for these uses. But they can be 1 Gbps switches, which drastically reduces the cost of the solution.