Introduction

In the spring of this year, VMware released VMware vSphere 7 Update 2, which brings a whole range of new features and improvements. The headline ones are probably known to every system admin out there. There are also a few lesser-known details that are just as impressive.

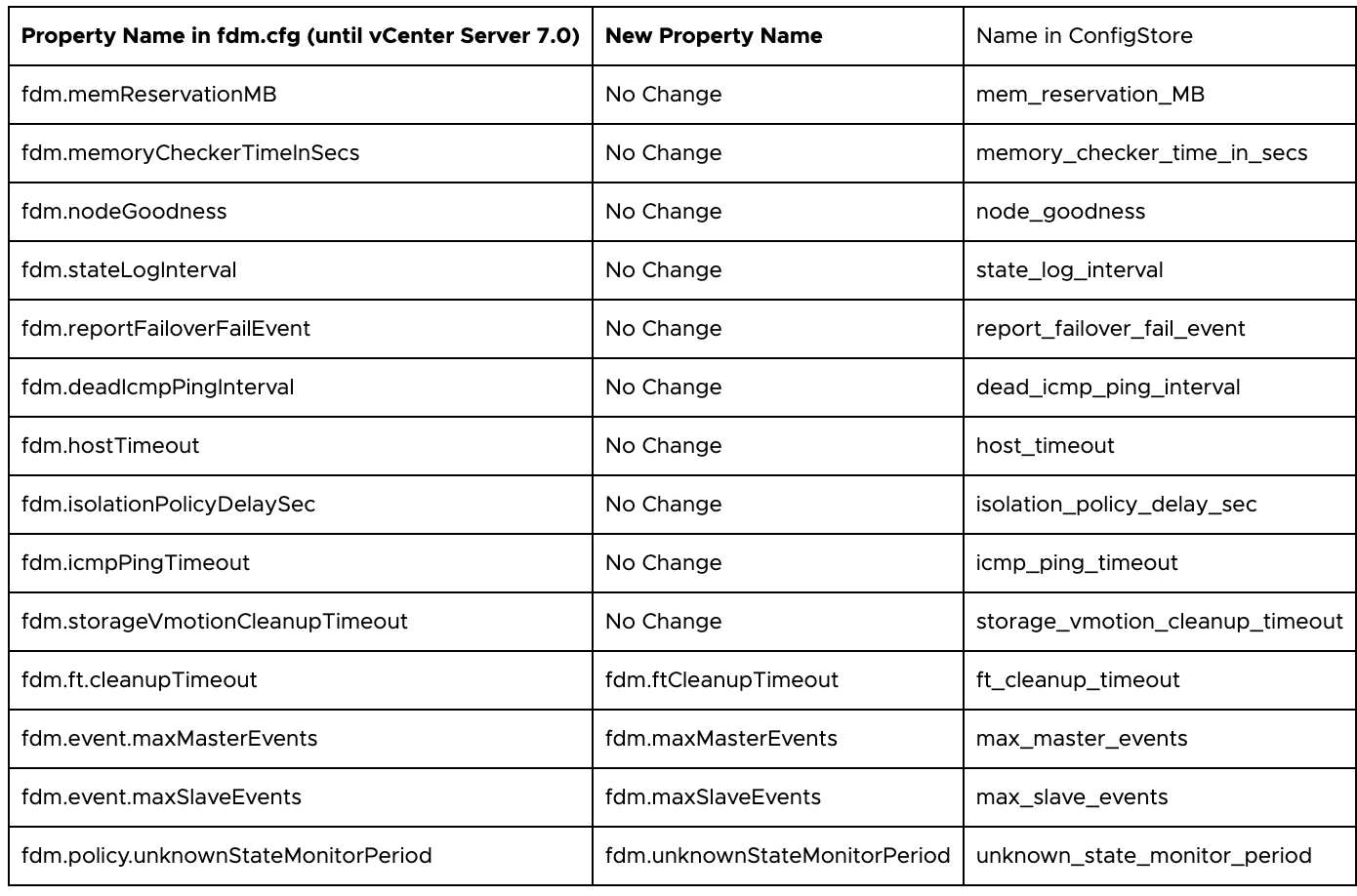

VMware ESXi Advanced Settings Now in ConfigStore

Update 2 surprised a lot of VMware vSphere 7 admins right after its release: many advanced settings were moved out of esx.conf and other configuration files. And that’s not all! For instance, the global settings of FDM (Fault Domain Manager, the vSphere HA agent) used to be stored in the /etc/opt/vmware/fdm/fdm.cfg file. Guess what? They’re not there anymore either.

Many settings from different files now reside in ConfigStore, an internal configuration store, and you need the configstorecli command-line utility to work with them. To change a setting, you export it to a JSON file, edit the file, and import it back. Let’s take a look at how this works for the FDM advanced settings after their move to ConfigStore:

For example, export (check out) the FDM/HA settings to a JSON file with the following command:

```shell
configstorecli config current get -g cluster -c ha -k fdm > test.json
```

Change or add advanced parameters in that file as needed. Then import (check in) the file back into ConfigStore:

```shell
configstorecli config current set -g cluster -c ha -k fdm -infile test.json
```
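You may prefer to script the edit step rather than change test.json by hand. A minimal Python sketch is shown below. It assumes only that the export is valid JSON. This article does not show the actual key layout of the FDM settings, so any key path you pass is a placeholder to fill in after inspecting your own test.json. The helper names `set_nested` and `edit_export` are illustrative and are not part of any VMware tooling.

```python
import json
from pathlib import Path
from typing import Any, List


def set_nested(doc: dict, keys: List[str], value: Any) -> dict:
    """Set doc[k1][k2]...[kn] = value, creating intermediate dicts as needed."""
    node = doc
    for key in keys[:-1]:
        node = node.setdefault(key, {})
    node[keys[-1]] = value
    return doc


def edit_export(path: str, keys: List[str], value: Any) -> dict:
    """Load a JSON file exported with `configstorecli ... get`, change one key, save it.

    The key path is hypothetical: check the real structure of your own export first.
    """
    p = Path(path)
    doc = json.loads(p.read_text())  # raises if the export is not valid JSON
    set_nested(doc, keys, value)
    # Write the edited document back; re-import it with `configstorecli ... set -infile`.
    p.write_text(json.dumps(doc, indent=2))
    return doc
```

For example, `edit_export("test.json", ["example_section", "example_option"], "example_value")` uses hypothetical keys. You would follow it with the `set` command above. Keeping a copy of the original export before editing makes it easy to roll back.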

For now, there’s little to no documentation on this utility, but VMware notes on the subject should fill the gap. As another example, here’s how to export the virtual switch settings (no longer stored in esx.conf) to JSON:

```shell
configstorecli config current get -c esx -g network_vss -k switches > vswitch.json
```

Machine Learning Workload Improvements with NVIDIA

The headline improvements in VMware vSphere 7 Update 2 stole the show, so some innovations were overlooked, including those for NVIDIA-based machine learning workloads. Long story short, there are three of them.

- NVIDIA AI Enterprise Suite is now certified for vSphere

The main source of information here is NVIDIA. Thanks to the collaboration between the two companies, NVIDIA’s AI analytics and data science software suite is now fully certified and optimized for vSphere.

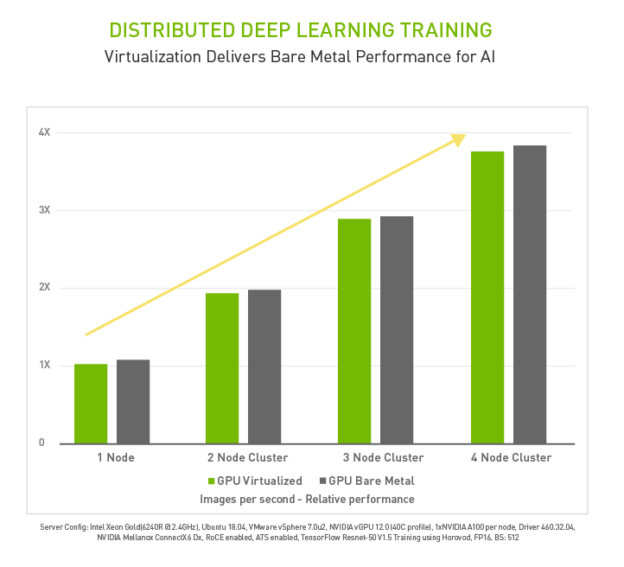

The optimizations cover not only development tools but also deployment and scaling, which are now easy to perform on a virtual platform. As a result, virtualizing machine learning tasks on NVIDIA cards adds practically no overhead.

- vSphere now supports NVIDIA’s latest Ampere-generation GPUs

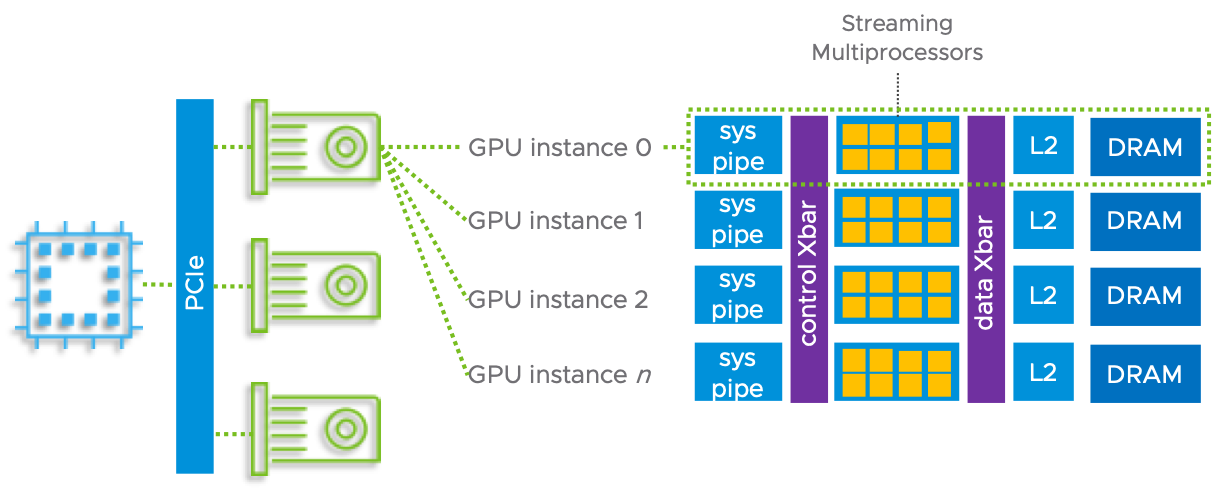

The NVIDIA A100, currently NVIDIA’s most powerful GPU for machine learning, is now fully supported, including its MIG (Multi-Instance GPU) technology (more about this here). vMotion and DRS are also supported for virtual machines using these cards.

The classic time-sliced vGPU approach runs tasks on all GPU cores (streaming multiprocessors, SMs). Execution time is divided between tasks using fair-share, equal-share, or best-effort scheduling (more info here). Naturally, this does not provide complete hardware isolation. Each VM gets its own dedicated slice of framebuffer memory according to the policy, but all VMs share the same SMs and take turns using them.

In Multi-Instance GPU (MIG) mode, by contrast, each VM gets a dedicated set of SMs and a fixed amount of framebuffer memory on the GPU itself. It also gets its own data paths between them (crossbars, caches, etc.).

In this mode, virtual machines are completely isolated at the hardware level. The time-sliced mode, in contrast, is essentially sequential execution of tasks from a common queue.
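To make the difference between the two modes concrete, here is a deliberately simplified Python model. It is not NVIDIA’s actual scheduler. Work is measured in abstract SM-milliseconds. Time-slicing is modeled as equal-share round robin over a common queue, where each turn owns all SMs. MIG is modeled as fixed SM partitions that run concurrently. The SM counts and workloads are made-up illustration values.

```python
from collections import deque
from typing import Dict


def time_sliced(work: Dict[str, float], total_sms: int, slice_ms: float) -> Dict[str, float]:
    """Equal-share round robin: VMs take turns, each turn owning ALL SMs for one slice.

    `work` maps a VM name to the SM-milliseconds its task needs; the result maps
    each VM to the time (ms) at which its task finishes.
    """
    remaining = dict(work)
    queue = deque(work)  # VMs in submission order: the common queue
    capacity = total_sms * slice_ms  # work one full slice can complete
    clock = 0.0
    finished: Dict[str, float] = {}
    while queue:
        vm = queue.popleft()
        if remaining[vm] <= capacity:
            clock += remaining[vm] / total_sms  # task ends part-way through its slice
            finished[vm] = clock
        else:
            remaining[vm] -= capacity
            clock += slice_ms
            queue.append(vm)  # back of the queue for another turn
    return finished


def mig(work: Dict[str, float], partitions: Dict[str, int]) -> Dict[str, float]:
    """MIG: each VM owns a fixed set of SMs and all VMs run concurrently."""
    return {vm: work[vm] / partitions[vm] for vm in work}
```

Take `total_sms=100` and `slice_ms=1`. A VM needing 100 units finishes at 1.0 ms when it runs alone. Queued behind a heavier neighbor (`{"vm-b": 1000, "vm-a": 100}`), it finishes at 2.0 ms. With a 50-SM MIG partition, it finishes at 2.0 ms either way. That is slower in isolation, but unaffected by the neighbor, which is what hardware isolation buys.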

- vSphere also gains further optimizations for device-to-device communication on the PCIe bus, which bring performance benefits for NVIDIA GPUDirect RDMA

Some classes of ML tasks, such as distributed training, outgrow a single graphics card, however powerful. For these, fast communication between adapters on multiple hosts over a high-performance RDMA channel becomes critical.

Direct communication over the PCIe bus is implemented through Address Translation Services (ATS), which is part of the PCIe standard. Basically, it lets the graphics card send data directly to the network adapter, bypassing the CPU and host memory. From there, the data travels over the high-speed GPUDirect RDMA channel.

The receiving side works the same way in reverse. This approach is considerably more efficient than the standard network exchange scheme (more here).
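A rough way to see why skipping host memory helps is to count the hops a buffer takes. The Python sketch below compares two paths using a naive store-and-forward model, in which each hop moves the whole buffer before the next starts. One path is staged through host RAM and the other is a GPUDirect-style peer-to-peer path. The bandwidth figures are illustrative assumptions, not measurements. Real stacks pipeline transfers, so only the relative comparison is meaningful.

```python
# Illustrative bandwidths in GB/s: assumptions for the sketch, not measured values.
PCIE_GBPS = 25.0
NET_GBPS = 12.5  # roughly a 100 Gb/s network link

# Classic path: data is staged through host memory on both sides.
STAGED_PATH = [
    ("sender GPU -> host RAM", PCIE_GBPS),
    ("host RAM -> sender NIC", PCIE_GBPS),
    ("sender NIC -> receiver NIC", NET_GBPS),
    ("receiver NIC -> host RAM", PCIE_GBPS),
    ("host RAM -> receiver GPU", PCIE_GBPS),
]

# GPUDirect RDMA style path: GPU and NIC talk peer-to-peer over PCIe.
GPUDIRECT_PATH = [
    ("sender GPU -> sender NIC (PCIe peer-to-peer)", PCIE_GBPS),
    ("sender NIC -> receiver NIC", NET_GBPS),
    ("receiver NIC -> receiver GPU (PCIe peer-to-peer)", PCIE_GBPS),
]


def transfer_ms(size_gb: float, path) -> float:
    """Store-and-forward estimate: every hop moves the whole buffer before the next starts."""
    return sum(size_gb / bandwidth for _, bandwidth in path) * 1000.0


def hops_through_host_ram(path) -> int:
    """Count hops that read from or write to host memory."""
    return sum(1 for hop, _ in path if "host RAM" in hop)
```

Under these assumed numbers, a 1 GB buffer takes about 240 ms on the staged path (four hops through host RAM) and about 160 ms on the peer-to-peer path (none).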

Precision Time for Windows

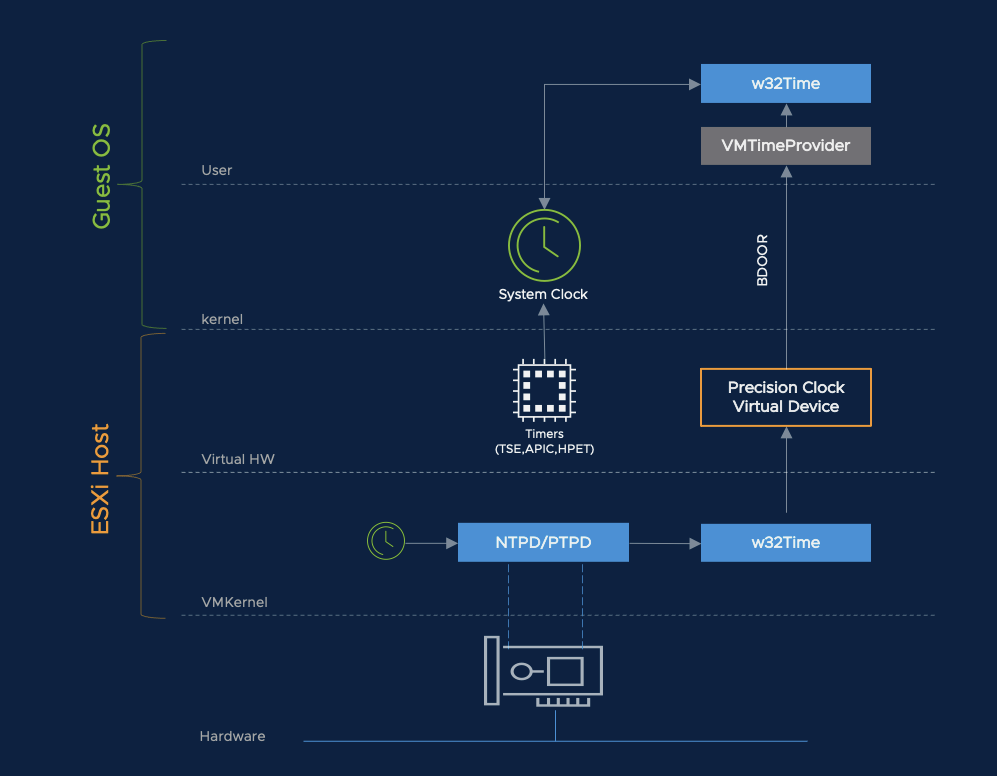

Few people are aware that vSphere 7 Update 2 adds support for the new Precision Time for Windows mechanism. Until now, most Windows VMs synchronized time using the NTP + Active Directory combination. Admittedly, this works fine in the vast majority of cases, since NTP provides millisecond-level accuracy. Workloads that need greater accuracy (for example, financial applications) have relied on special equipment with PTP (Precision Time Protocol) support.

VMware has now made another improvement for users who need more accurate time services. Precision Time for Windows is a mechanism that improves the accuracy of time synchronization in Windows guests.

The Windows Time Service (W32Time) relies on NTP to provide accurate time information to OS clients. It was originally designed to synchronize time between hosts in Active Directory, but it has since grown beyond this task and is available to applications. The W32Time architecture is plugin-based, which means that a DLL can be registered as a time provider. Providers can be purely software solutions or complex systems with special equipment supporting PTP.

The APIs for developing such plugins are publicly available, so anyone can write their own. VMware has done exactly that with the VMware Time Provider (vmwTimeProvider), which ships with VMware Tools for Windows VMs. It receives time from the Precision Clock virtual device (vSphere 7.0 and later). It then passes that time to W32Time over a private, fast paravirtualized channel, bypassing the network stack.
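The plugin idea can be illustrated with a toy Python model: providers register with a time service under a name, and the service polls them and picks one. This is only an analogy. Real W32Time providers are DLLs implementing the Windows time provider API (`TimeProvOpen`, `TimeProvCommand`, `TimeProvClose`), and W32Time applies its own selection rules. This toy simply trusts the provider reporting the smallest error, and the error figures in the usage note are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple


@dataclass(frozen=True)
class TimeSample:
    seconds: float  # time reported by the provider (e.g. Unix seconds)
    error_s: float  # the provider's own estimate of its error, in seconds


class ToyTimeService:
    """Toy stand-in for a plugin-based time service such as W32Time.

    Providers are registered by name, loosely like DLLs registered with W32Time,
    and the service trusts whichever one reports the smallest error.
    """

    def __init__(self) -> None:
        self._providers: Dict[str, Callable[[], TimeSample]] = {}

    def register(self, name: str, provider: Callable[[], TimeSample]) -> None:
        self._providers[name] = provider

    def best_sample(self) -> Tuple[str, TimeSample]:
        if not self._providers:
            raise RuntimeError("no time providers registered")
        samples = {name: poll() for name, poll in self._providers.items()}
        best = min(samples, key=lambda n: samples[n].error_s)
        return best, samples[best]
```

Suppose "NtpClient" is registered reporting a 1 ms error and "vmwTimeProvider" reporting a 1 µs error (hypothetical values). `best_sample()` then returns the vmwTimeProvider sample.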

The vmwTimeProvider is essentially a user-space plugin. In theory, reading such a device would require its own driver. Instead, VMware uses a paravirtualized interface based on memory-mapped I/O (MMIO) that is optimized for read operations.

The Precision Clock device receives time from the hypervisor kernel (VMkernel). One of the kernel’s time sources is the HyperClock device, which provides the system time to the kernel.

To sum up, you can configure VMkernel and HyperClock to receive time via the Precision Time Protocol (PTP). The Precision Clock device can then pass that time on to virtual machines with high accuracy.
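Receiving time via PTP rests on a two-way timestamp exchange. The sketch below shows the standard IEEE 1588 offset and delay calculation, under the usual assumption that the network path is symmetric. It illustrates the math only, not how ESXi implements PTP. The timestamps in the usage note are made-up example values in nanoseconds.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class PtpExchange:
    t1: int  # master sends Sync (master clock, ns)
    t2: int  # slave receives Sync (slave clock, ns)
    t3: int  # slave sends Delay_Req (slave clock, ns)
    t4: int  # master receives Delay_Req (master clock, ns)


def offset_and_delay(ex: PtpExchange) -> Tuple[float, float]:
    """Classic IEEE 1588 end-to-end calculation, assuming a symmetric path."""
    forward = ex.t2 - ex.t1   # path delay + clock offset
    backward = ex.t4 - ex.t3  # path delay - clock offset
    offset = (forward - backward) / 2
    delay = (forward + backward) / 2
    return offset, delay


def corrected_slave_time(slave_ns: float, offset: float) -> float:
    """Remove the measured offset so the slave clock agrees with the master."""
    return slave_ns - offset
```

Take a slave clock running 50 ns ahead over a 100 ns path, with timestamps `PtpExchange(t1=1000, t2=1150, t3=1300, t4=1350)`. The function recovers an offset of 50 ns and a delay of 100 ns.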

Finally, note that vmwTimeProvider isn’t installed by default, since systems without special timekeeping requirements don’t need it.

The vSphere Virtual Machine Service (VM Service) in vSphere 7 Update 2

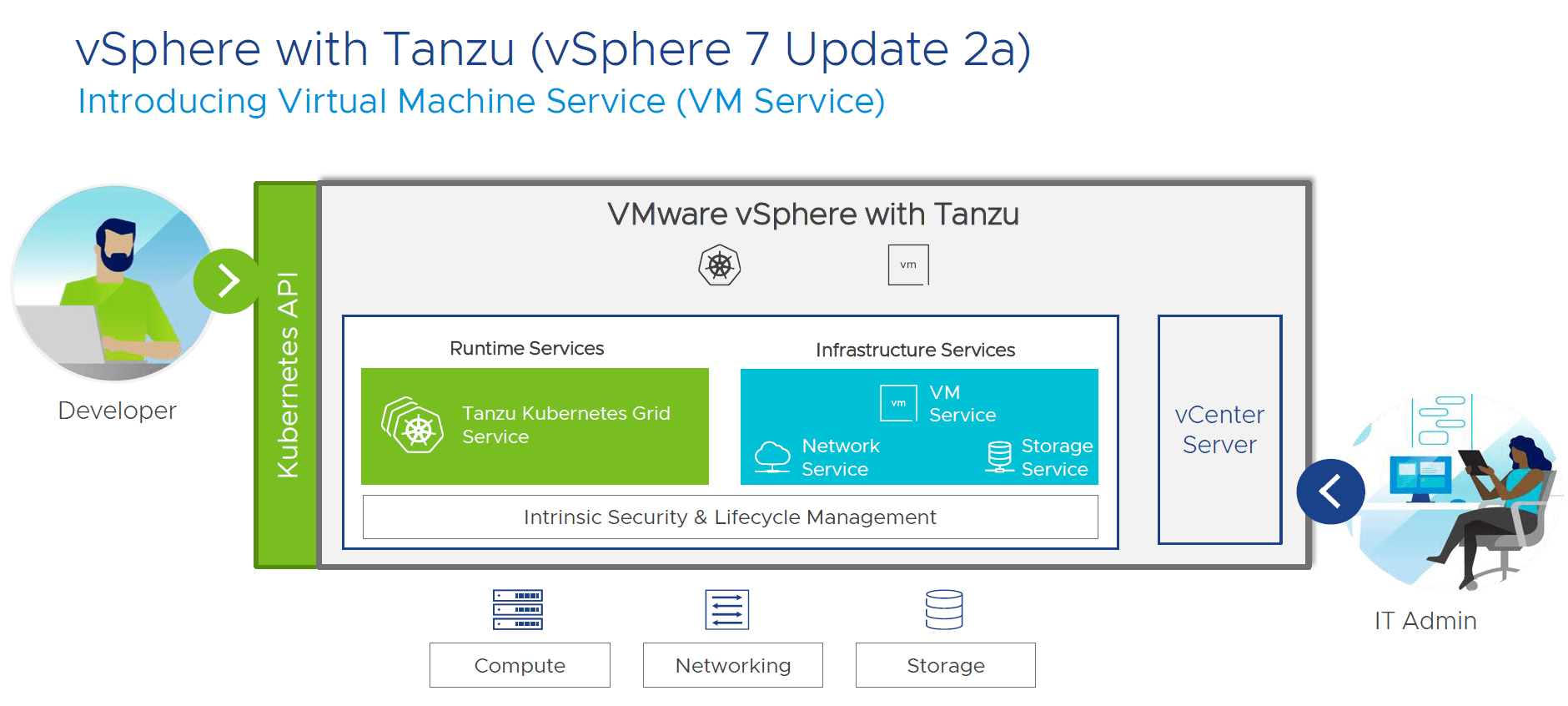

This feature arrived in Update 2a. It deserves close attention from system admins and anyone who works with the Kubernetes container environment in vSphere with Tanzu. Why? The vSphere Virtual Machine Service (a.k.a. VM Service) lets these developers and admins deploy VMs.

It allows DevOps teams to manage both VM and container infrastructure through standard Kubernetes APIs. That way, deploying new services and making the infrastructure for them available becomes a single, seamless process.

The VM Service complements the previously announced Network Service and Storage Service. These provide API management of networking and storage, respectively, in a vSphere with Tanzu environment. Let’s take a brief look at how it fits together:

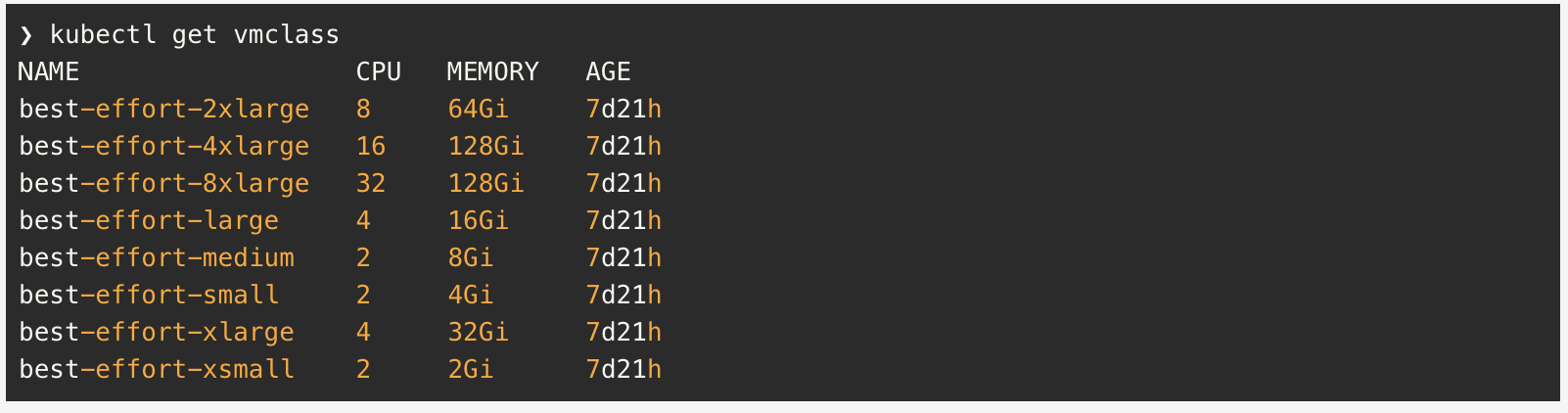

On the vSphere side, the service is built directly into vCenter. There you manage VM images (VM Images / Content Libraries) and VM classes (VM Classes / VM sizing).

On the Kubernetes side, the component is called VM Operator. It creates and maintains Kubernetes custom resources (CRs, defined by CRDs) and communicates with the vSphere-side component.
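VM Operator follows the usual Kubernetes operator pattern: it compares the desired state declared in custom resources with the actual state and acts on the difference. The toy `reconcile` function below illustrates that pattern in Python. It is not VM Operator’s code. It models only VM existence and power state, and its action tuples are hypothetical.

```python
from typing import Dict, List, Tuple

Spec = Dict[str, str]


def reconcile(desired: Dict[str, Spec], actual: Dict[str, Spec]) -> List[Tuple[str, ...]]:
    """Return the actions needed to move `actual` toward `desired`.

    `desired` stands for the VirtualMachine custom resources; `actual` stands for
    the VMs this operator previously created in vSphere (not every VM in vCenter).
    """
    actions: List[Tuple[str, ...]] = []
    for name in sorted(desired):
        want = desired[name].get("powerState", "poweredOff")
        have = actual.get(name)
        if have is None:
            actions.append(("create", name))
            have = {"powerState": "poweredOff"}  # assume new VMs start powered off
        if have.get("powerState") != want:
            actions.append(("set_power", name, want))
    # Managed VMs whose custom resource was deleted are removed as well.
    for name in sorted(set(actual) - set(desired)):
        actions.append(("delete", name))
    return actions
```

Running `reconcile` again after the actions have been applied yields an empty list. This idempotence is what lets an operator loop run continuously without side effects.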

VM Service will provide companies with the following benefits:

- Kubernetes developers no longer need to file VM creation requests with administrators;

- Admins can easily preconfigure which VM classes are available to developers, limiting their resources and keeping them protected and isolated from the production environment;

- Containerized apps can, for example, use a database hosted in a VM. The developer can describe such a service in YAML and maintain the resulting setup on their own;

- Because the service is open source, you can also extend it and build new services to meet the needs of large teams. The repository for the VM Operator component is here.
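As an illustration of the YAML specification mentioned above, the Python sketch below builds a VirtualMachine custom resource as a dictionary. It then serializes it to JSON, which `kubectl apply -f` also accepts. The field names follow the VM Operator v1alpha1 API as I understand it: apiVersion `vmoperator.vmware.com/v1alpha1` with `imageName`, `className`, `storageClass`, and `powerState`. Verify them against the CRD installed in your environment. All values (VM name, namespace, image, class, storage class) are hypothetical placeholders, and networking and guest customization are omitted.

```python
import json


def vm_manifest(name: str, namespace: str, image: str, vm_class: str,
                storage_class: str, power_state: str = "poweredOn") -> dict:
    """Build a VirtualMachine custom resource modelled on the VM Operator v1alpha1 API.

    Field names are an assumption to check against your installed CRD.
    """
    return {
        "apiVersion": "vmoperator.vmware.com/v1alpha1",
        "kind": "VirtualMachine",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "imageName": image,            # an image published via a Content Library
            "className": vm_class,         # a VM class the admin made available
            "storageClass": storage_class, # a storage class bound to the namespace
            "powerState": power_state,
        },
    }


def to_json(manifest: dict) -> str:
    """Serialize for `kubectl apply -f <file>.json`."""
    return json.dumps(manifest, indent=2)
```

For example, `to_json(vm_manifest("orders-db", "dev-team", "example-image", "best-effort-small", "example-storage"))` could be saved to a file and applied with kubectl. All of these names are hypothetical.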

Suspend-to-Memory While Updating VMware ESXi Hosts

As you already know, you can update ESXi hosts with vSphere Lifecycle Manager (vLCM). When you do, each host in an HA/DRS cluster is put into maintenance mode, which means evacuating its virtual machines to other hosts with vMotion. Once the host upgrade is finished, the host exits maintenance mode and DRS gradually moves the VMs back. Depending on the workload, this can take anywhere from several minutes to several hours, and not everybody is happy about it.

The alternative is shutting down the VMs, updating ESXi, and powering the VMs back on. That isn’t acceptable either, since it causes long downtime for the VM services. On top of the host update itself, you need time to shut down and restart your VMs, or to go through a slow suspend-to-disk.

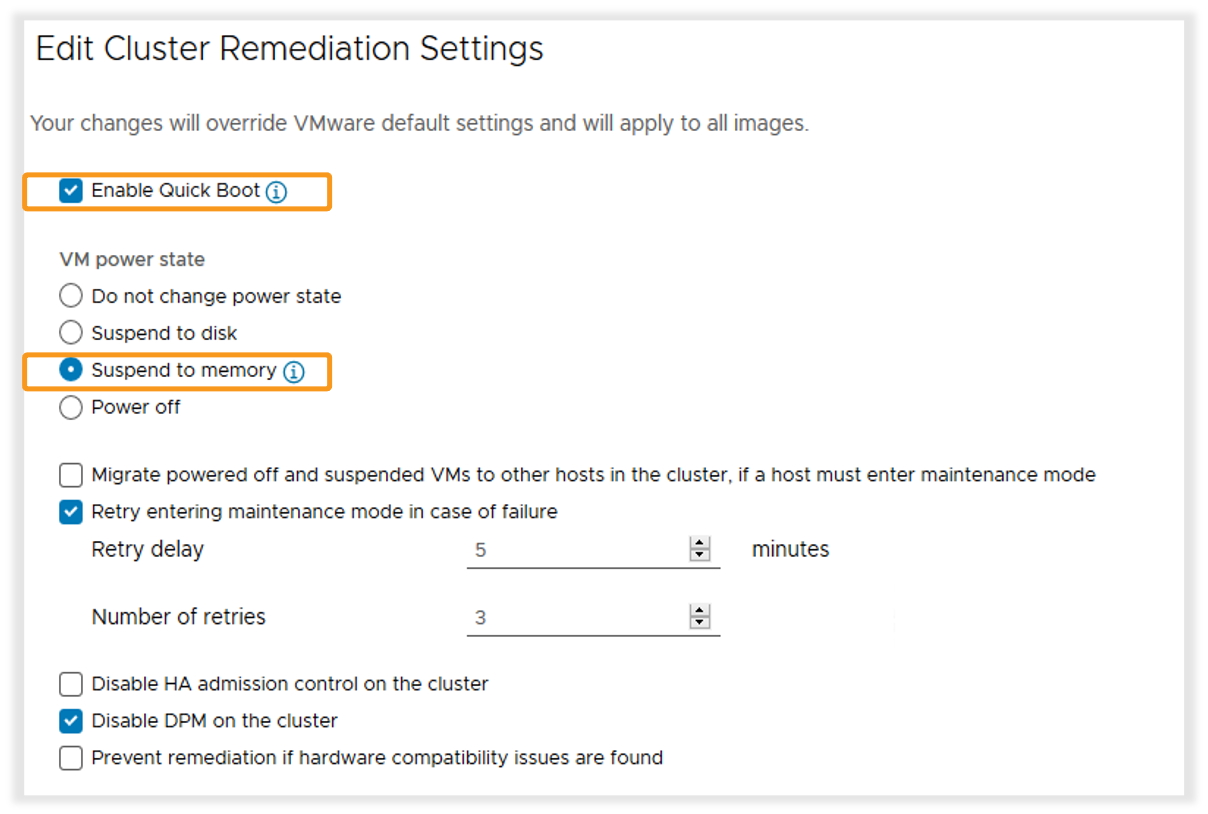

To deal with this obstacle, VMware introduced Suspend-to-Memory in VMware vSphere 7 Update 2. The idea is simple: while ESXi is updated, its VMs are suspended and their state is kept in RAM. Naturally, a full host reboot would wipe that memory. That’s why the feature is only used together with ESXi Quick Boot, which updates the hypervisor without a full hardware reboot of the server.

Keep in mind, though, that Quick Boot is not available on ALL servers. More detailed information on which server systems support it is here. The following server vendor pages cover the technology in detail:

- Quick Boot on Dell EMC

- Quick Boot on HPE

- Quick Boot on Cisco

- Quick Boot on Fujitsu

- Quick Boot on Lenovo

By default, the cluster remediation setting for VM power state is “Do not change power state”, which means the VMs are migrated to other hosts with vMotion. To use this feature, you must change it to “Suspend to memory”, as in the picture above.

With this setting, vLCM tries to suspend the virtual machines to memory. If that fails (for example, because there isn’t enough memory), the host simply won’t enter maintenance mode.
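The remediation behavior described in this section boils down to a small decision table. The Python sketch below encodes it as a toy model, not vLCM code. The power-action strings are hypothetical labels for the two UI options. The `enough_memory` flag is a simple boolean stand-in for whatever checks vLCM actually performs.

```python
from typing import Tuple


def plan_remediation(power_action: str, quick_boot: bool, enough_memory: bool) -> Tuple[bool, str]:
    """Return (enters_maintenance_mode, description) for one host.

    Toy model of the behavior described in the article; not vLCM code.
    """
    if power_action == "do_not_change_power_state":
        # Default: VMs are evacuated with vMotion before maintenance mode.
        return True, "migrate VMs with vMotion, then update"
    if power_action == "suspend_to_memory":
        if not quick_boot:
            # Suspended state lives in RAM, so a full hardware reboot would lose it.
            return False, "Quick Boot required for suspend to memory"
        if not enough_memory:
            # If suspending fails, the host does not enter maintenance mode.
            return False, "suspend to memory failed, host left as is"
        return True, "suspend VMs to RAM, update with Quick Boot, resume VMs"
    raise ValueError(f"unknown power action: {power_action!r}")
```

Keeping the memory check as a single flag avoids guessing at vLCM’s internal sizing rules. The model only captures the order of decisions the article describes.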

On another note, Suspend-to-Memory supports vSAN and also works with products such as vSphere with Tanzu and NSX-T.


Conclusion

As I said at the start, some impressive innovations in VMware vSphere 7 Update 2 were overshadowed by its major breakthroughs, and these are the most important of them. Each of these features can greatly improve performance or operations in certain use cases. And what about you? Did you know about all these new features in VMware vSphere 7 Update 2?