Introduction

A standard SMB share leverages caching to make things work fluently. Hiccups are hidden and file processing appears to go fast, as caching often makes things look faster than they are under the hood. For the vast majority of knowledge worker applications that support working against a file share, e.g. Word, Excel, PowerPoint and many other tools, this works fine. For software that depends on an absolute guarantee that data is consistent and persisted at all times, this is not enough. Backups to file shares are one such use case. If the SMB share “lies” to the SMB client that everything is written to storage while it is actually still cached, things can go wrong. Most of the time, even with a small hiccup, things still go well, but that is not guaranteed. You might have an application think that all data is persisted without that ever having happened.

With highly available SMB shares that leverage continuous availability, this is taken care of by design. See “SMB Transparent Failover – making file shares continuously available”. This comes at the cost of being less performant. But less does not mean insufficient, as the underlying storage might well be fast enough to perform well. That is often the case and that’s why I build highly available and continuously available file share solutions with SMB 3 clusters and clients. I have written a blog post on this potential problem, which you can read here: “When using file shares as backup targets you should leverage continuous available SMB 3 file shares”.

Not every solution requires highly available storage. But that doesn’t mean it can afford to lose data and not know about it. For those use cases we have been urging Microsoft to add functionality to SMB 3 that gives us the option not to leverage any OS caching. That option has arrived in Windows Server 2019. Let’s take a look at it and see how usable it is.

The fix is to use writethrough

If you have a use case where consistency and reliability are the prime directive and the use case tolerates no data loss, the fix is as simple as it sounds. Disable caching and avoid ever telling an application that data has been written before it actually has.

In Windows Server 2019 (1809) and Windows 10 1809 you’ll find a new option, -UseWriteThrough, in the New-SmbMapping cmdlet. It does exactly what it says and doesn’t use caching when set to $True. The data will actually have been written before the application is told so. It was introduced to give users the option to prefer reliability over performance in non-continuously available file share scenarios. As you can see, this is set from the client. It should even work against SMB 2 file shares on other OS versions.

It looks like this:

    New-SmbMapping -LocalPath 'S:' -RemotePath '\\DidierTest06\FastShare' -UseWriteThrough $True
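Because the parameter only exists on clients running 1809 or later, a script that has to run on mixed OS versions may want to check for it before relying on it. The following is a minimal sketch of such a check. The helper name Test-CommandParameter is hypothetical and not part of the SMB module; it only uses Get-Command to inspect the parameters a command exposes on the local machine.

```powershell
# Hypothetical helper (an assumption, not part of the original post):
# returns $true when the named command exists locally and exposes the parameter.
function Test-CommandParameter {
    param(
        [Parameter(Mandatory)] [string] $Command,
        [Parameter(Mandatory)] [string] $Parameter
    )
    # SilentlyContinue: a missing command yields nothing instead of an error.
    $cmd = Get-Command -Name $Command -ErrorAction SilentlyContinue
    if (-not $cmd -or -not $cmd.Parameters) { return $false }
    return $cmd.Parameters.ContainsKey($Parameter)
}

# $true on a client that offers -UseWriteThrough, $false otherwise.
Test-CommandParameter -Command 'New-SmbMapping' -Parameter 'UseWriteThrough'
```

If the check returns $False, the client cannot request writethrough for a mapping and you would have to fall back to another approach, such as a continuously available share.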

The drawback is that this can impact performance negatively. Well, actually it reveals the real performance of the underlying storage of the SMB target. This can be a bit of a shock to the system, so to speak. If that loss in performance makes the copy process too slow, you can break an otherwise acceptable solution. Hence you have to test the impact and act accordingly. It might mean your storage solution needs to be improved, or that while the performance has dropped it is still plenty fast for the use case.

Stating that using writethrough is just too slow would be unfair. If that were the case, highly available general-purpose file shares with continuous availability would be too slow by default. They are not. They do have the overhead of having to maintain and ensure consistency and integrity of data over anything else.

The real issue here is that caching gives people an illusion of sufficient speed where there is none. An SMB3 file share to a folder stored on a couple of HDDs might work just fine and you won’t think twice about it. When you take away that caching, you are confronted with the real performance (or lack thereof) of those couple of (too few) HDDs, and that can lead to a disappointing experience or render your previously working solution completely useless. Naturally, vendors focus on avoiding such scenarios, so they’ll warn about this. But don’t conclude you should avoid it. It means you should be aware of it and make sure your solution still performs well when you set the -UseWriteThrough parameter to $True.

The performance when using writethrough might very well be more than sufficient if you have enough HDDs providing the IOPS and latency required. The same goes when you have tiered storage, all-flash arrays or storage arrays with built-in caching for acceleration that is transparent to SMB3.

To showcase this we’ll create a quick and dirty test setup with a file share server, a VM named DidierTest06 with decent storage, where we create FASTSHARE on a volume. We’ll then add two SMB mappings to this file server on another VM, DidierTest05: one without and one with writethrough set. We’ll then compare the speed when moving some data in the form of an 8.5 GB ISO file.

    New-SmbMapping -LocalPath 'F:' -RemotePath '\\DidierTest06\FASTSHARE' -UseWriteThrough $False
    New-SmbMapping -LocalPath 'S:' -RemotePath '\\DidierTest06\FASTSHARE' -UseWriteThrough $True

First, we test our SMB mapping with -UseWriteThrough set to $False.

    $TimeFastWriteThrough = Measure-Command -Expression {Copy-Item "C:\Sysadmin\SUU-WIN64_18.04.200.246.ISO" -Destination "F:"}
    $TimeFastWriteThrough

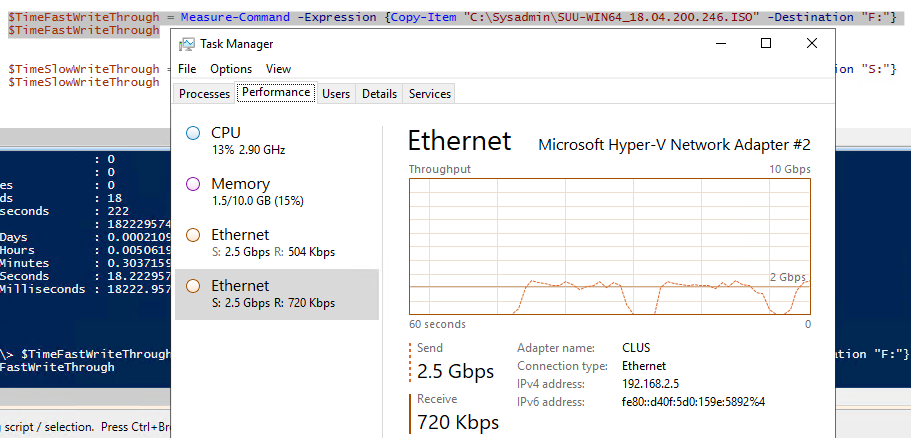

As you can see, we’re getting 2*2.5 Gbps as copy speed. That’s pretty decent. You might also notice we snuck in some SMB multichannel action as well just for fun.

Now we test the SMB Mapping with -UseWriteThrough set to $True.

    $TimeSlowWriteThrough = Measure-Command -Expression {Copy-Item "C:\Sysadmin\SUU-WIN64_18.04.200.246.ISO" -Destination "S:"}
    $TimeSlowWriteThrough

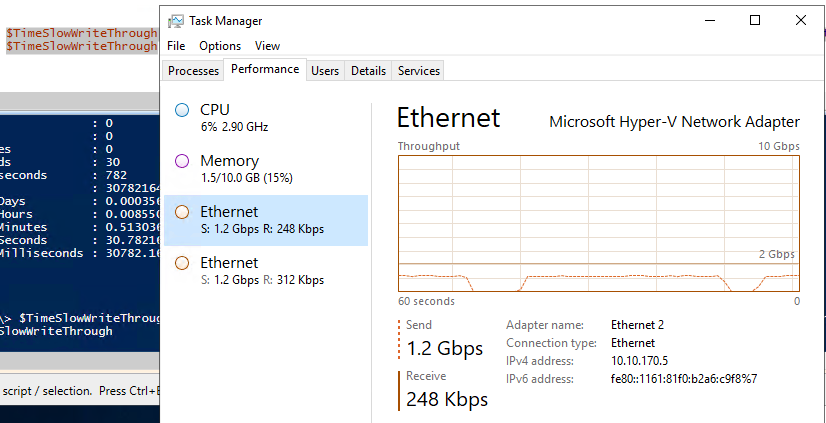

What you see here is basically a reduction of 50 percent. Repeated testing puts the reduction anywhere between 40% and a maximum of 50% for this particular setup. Your mileage may vary. It is early days yet and I have not used this at scale with various storage solutions. If you need this, you’ll want excellent performance from your storage array, and as such VSAN from StarWind would be a great choice in value for money.
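To put a number on the difference for your own setup, the two Measure-Command results can be turned into throughput figures. The following is a minimal sketch and not part of the original test run. The function name Get-WriteThroughPenalty and its parameters are hypothetical, and it assumes both copies moved the same file.

```powershell
# Hypothetical helper (an assumption, not from the original post): compares
# the copy time of the same file with and without writethrough.
function Get-WriteThroughPenalty {
    param(
        [Parameter(Mandatory)] [TimeSpan] $Cached,        # timing of the cached copy
        [Parameter(Mandatory)] [TimeSpan] $WriteThrough,  # timing of the writethrough copy
        [Parameter(Mandatory)] [double]   $SizeBytes      # size of the copied file in bytes
    )
    # Throughput in MB/s for each run.
    $cachedMBps = $SizeBytes / 1MB / $Cached.TotalSeconds
    $wtMBps     = $SizeBytes / 1MB / $WriteThrough.TotalSeconds

    [pscustomobject]@{
        CachedMBps       = [math]::Round($cachedMBps, 1)
        WriteThroughMBps = [math]::Round($wtMBps, 1)
        # Relative drop in throughput caused by writethrough.
        ReductionPercent = [math]::Round((1 - $wtMBps / $cachedMBps) * 100, 1)
    }
}
```

With the variables from the test above, a call could look like `Get-WriteThroughPenalty -Cached $TimeFastWriteThrough -WriteThrough $TimeSlowWriteThrough -SizeBytes (Get-Item "C:\Sysadmin\SUU-WIN64_18.04.200.246.ISO").Length`. Running it several times and comparing ReductionPercent gives a feel for the spread on your storage.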

What does this mean

The results are clear. Using writethrough reduces the speed significantly. OUCH! That is too much, too slow, too painful to bear… or is it? Again, what is more important, speed or reliability, is totally dependent on the use case and you’ll have to design your storage solution for that. Checks and balances.

The balanced statement here is that when using writethrough, the performance will always be less than with caching enabled. That difference might be acceptable for the use case as other parts are slower anyway or the loss in performance doesn’t matter much. When using modern state of the art storage solutions (SSD, NVMe) it might not matter at all. Sure, it is clearly measurable but that alone doesn’t make something relevant. Slower doesn’t necessarily mean too slow. You must, as always, consider your use case.

Let’s look at an example for backups. You might have 4 servers with target shares that ingest backups simultaneously. As reliability is more important, we might find that even though the raw speed is significantly lower, it is still fast enough for the purpose, or you might decide to add backup targets or improve the storage performance of those 4 targets.
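Whether the lower speed is “fast enough” comes down to simple arithmetic: how much data each target must ingest, at what measured writethrough throughput, within which backup window. The sketch below illustrates that reasoning. The function name Test-BackupWindow and every number in it are made up for illustration and are not measurements from this test.

```powershell
# Hypothetical sizing helper; names and numbers are illustrative assumptions.
function Test-BackupWindow {
    param(
        [Parameter(Mandatory)] [double] $DataGB,            # data one target must ingest
        [Parameter(Mandatory)] [double] $WriteThroughMBps,  # measured writethrough throughput
        [Parameter(Mandatory)] [double] $WindowHours        # available backup window
    )
    # GB -> MB, divided by MB/s gives seconds; divided by 3600 gives hours.
    $hoursNeeded = ($DataGB * 1024) / $WriteThroughMBps / 3600

    [pscustomobject]@{
        HoursNeeded = [math]::Round($hoursNeeded, 2)
        FitsWindow  = ($hoursNeeded -le $WindowHours)
    }
}

# Illustrative only: 2 TB per target at 300 MB/s with an 8 hour window.
Test-BackupWindow -DataGB 2048 -WriteThroughMBps 300 -WindowHours 8
```

If FitsWindow comes back $False for your real numbers, that is the signal to add backup targets or improve the storage behind them, not necessarily to give up on writethrough.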

Conclusion

This option to disable caching with SMB mappings is a welcome addition and helps out in certain scenarios. I would, however, like to see another option: writethrough that does not require an SMB mapping, but that an application interface can use when connecting to an SMB 3 share via a UNC path, or that an SMB3 share can force for any SMB 3 client connecting. Let’s face it, mappings are a bit old school and not the most automation friendly. I am advocating this with Microsoft and I would think it would make SMB more complete, as we would have the choice in any scenario. I’m in the business of building rock solid solutions that range from highly available to continuously available. This means I’m always looking into the benefits and drawbacks of design choices. I study, test, measure and verify what I design and build in the lab as well. I don’t do “Paper Proof of Concepts” or assumptions I cannot confirm. I highly recommend it.