A closer look at the competing RDMA flavors for use with SMB Direct

InfiniBand

InfiniBand works, it’s fast and it’s well established. For many years (since 1999) it ruled the High-Performance Computing realm of the IT world. In 2012 it was one of the better value propositions for SMB Direct in terms of available bandwidth and low latency for the money. It is a bit of an outlier, as it exists within its own non-routable InfiniBand network fabric. It does require a subnet manager (typically running on the switch), but that’s about it. The entire lossless network challenge is dealt with for you by the InfiniBand switches. Depending on your needs, it might be a good and cost-effective choice. As long as Windows Server drivers are available and you don’t need any of the benefits Ethernet brings, you’ll do fine. Think about it: your FC fabric, or even your iSCSI fabric, lives or lived in isolation, and in many cases that was a good thing, just not for convergence. Convergence is great, but it is, or can be, complex. We’re getting better at managing that complexity, Ethernet dominates the world and times have changed, so you owe it to yourself to explore the other options. InfiniBand can be a bit of a hard sell if you don’t already have it, as its association with HPC might scare some people away. This doesn’t mean InfiniBand is bad. There is an interesting market for it, and Intel seems to want to compete for it with Omnipath. But it is less common for Wintel SMB Direct use cases and I do not see that growing.

RoCE

We said InfiniBand is solid, respected and well established. It’s not Ethernet, however, and as such it is a bit of an outlier as the datacenter drums beat the virtues of Ethernet ever louder. The InfiniBand Trade Association (IBTA) figured they could fix that. RoCE came about in 2010, when the IBTA implemented IB on Ethernet. That was RoCEv1, and it works at layer 2, which means it is not routable. Lacking an InfiniBand fabric, we need to achieve a “lossless” network some other way. That is where DCB comes in, of which you must at least implement Priority Flow Control (PFC). This requires VLAN tagging to carry the priority. ETS is used for QoS. In 2014 we got RoCEv2, which moved from the IB network layer to UDP/IP (not TCP/IP) and thereby enabled layer 3 routing and ECMP load balancing.
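To make the PFC mechanics a little more concrete, here is a minimal Python sketch, assuming standard IEEE 802.1Q/802.1Qbb framing: the priority travels in the 3-bit PCP field of the VLAN tag’s 16-bit Tag Control Information (TCI), and a PFC pause is expressed per priority in quanta of 512 bit times. The function names and the priority 3 / VLAN 100 values are illustrative only.

```python
PAUSE_QUANTUM_BITS = 512  # one PFC pause quantum = 512 bit times


def pcp_from_tci(tci: int) -> int:
    """Return the 3-bit Priority Code Point (0-7) from a 16-bit 802.1Q TCI."""
    return (tci >> 13) & 0x7


def vid_from_tci(tci: int) -> int:
    """Return the 12-bit VLAN ID from a 16-bit 802.1Q TCI."""
    return tci & 0x0FFF


def pause_duration_ns(quanta: int, link_gbps: float) -> float:
    """How long a PFC pause of `quanta` quanta stops one priority on a link."""
    if not 0 <= quanta <= 0xFFFF:
        raise ValueError("PFC pause time is a 16-bit field")
    # 1 Gbit/s is 1 bit per nanosecond, so bits / (Gbit/s) gives nanoseconds.
    return quanta * PAUSE_QUANTUM_BITS / link_gbps


if __name__ == "__main__":
    tci = (3 << 13) | 100  # priority 3, DEI 0, VLAN 100 (example values)
    print(pcp_from_tci(tci), vid_from_tci(tci))  # 3 100
    print(pause_duration_ns(1, 10))       # 51.2 ns per quantum at 10 Gbps
    print(pause_duration_ns(0xFFFF, 10))  # 3355392.0 ns, about 3.4 ms
```

An untagged frame has no TCI and therefore no PCP telling the switch which priority it belongs to, which is exactly the constraint that bites with PXE boot later on.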

Remember that in Part II we talked about QCN in DCB, how that was layer 2 only and basically somewhat of a unicorn? We now also have DCQCN, which is something you can thank or blame Microsoft for.

Let’s dive into some history.

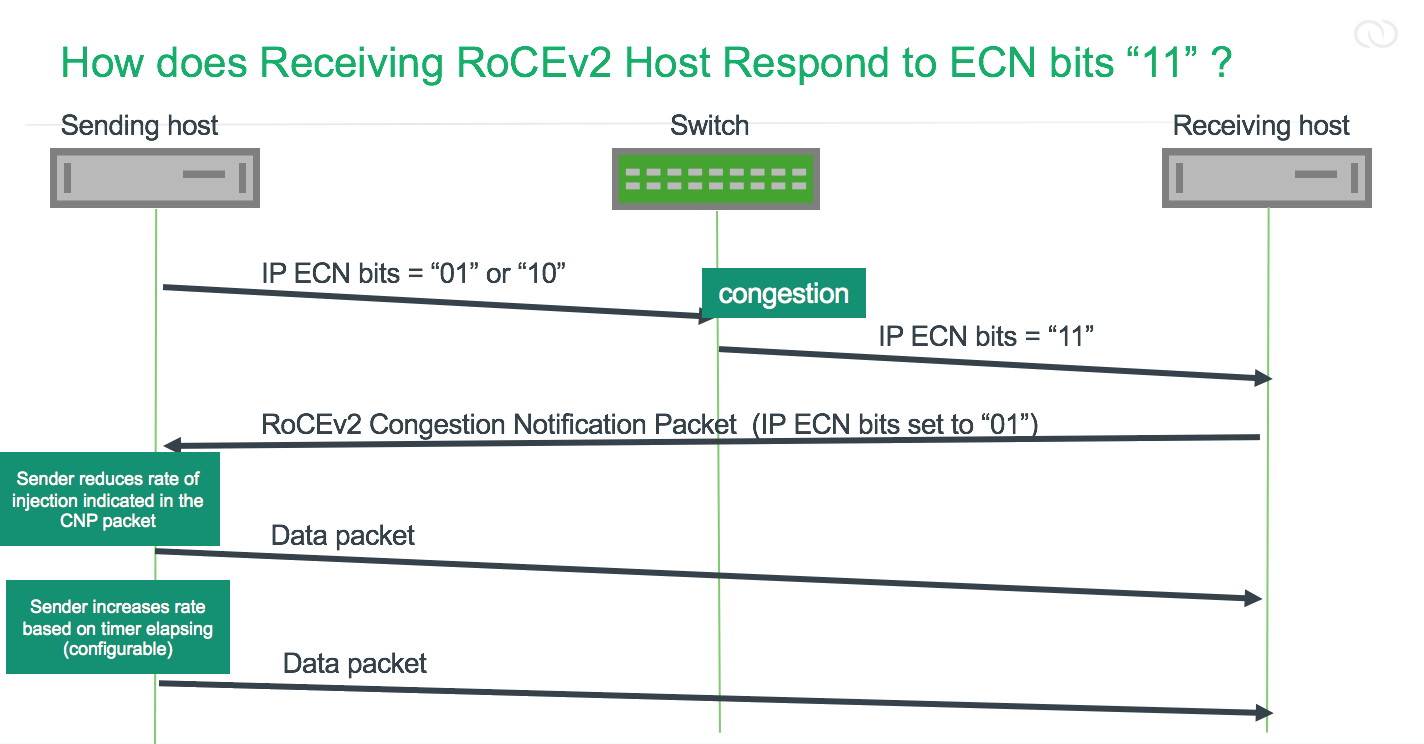

RoCEv2 included an optional RoCE Congestion Management protocol (RCM). This leverages Explicit Congestion Notification (ECN) to enable end to end congestion notification.

ECN Image courtesy of Cumulus Networking Inc.
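As a rough illustration of what ECN gives RCM to work with, here is a minimal Python sketch based on the ECN codepoints of RFC 3168, which live in the two low-order bits of the IPv4 TOS / IPv6 Traffic Class byte. A congested switch marks ECN-capable packets as Congestion Experienced (CE) instead of dropping them, and the receiver reflects that back to the sender. The single queue-depth threshold is a simplifying assumption (real switches typically use a RED/WRED-style marking profile), and the function names are hypothetical.

```python
NOT_ECT = 0b00  # sender is not ECN-capable
ECT_1 = 0b01    # ECN-capable transport (1)
ECT_0 = 0b10    # ECN-capable transport (0)
CE = 0b11       # congestion experienced


def ecn_bits(tos: int) -> int:
    """ECN field: the two low-order bits of the TOS / Traffic Class byte."""
    return tos & 0b11


def mark_if_congested(tos: int, queue_depth: int, threshold: int) -> int:
    """Return the TOS byte a congestion point would forward.

    ECN-capable packets are marked CE once the queue exceeds `threshold`;
    other packets are left untouched (a real switch might queue or drop them).
    """
    if queue_depth > threshold and ecn_bits(tos) in (ECT_0, ECT_1):
        return (tos & 0xFC) | CE  # keep the DSCP bits, set ECN to CE
    return tos


if __name__ == "__main__":
    tos = (26 << 2) | ECT_0  # example: DSCP 26, ECN-capable
    print(ecn_bits(mark_if_congested(tos, queue_depth=120, threshold=100)))  # 3 (CE)
    print(ecn_bits(mark_if_congested(tos, queue_depth=80, threshold=100)))   # 2 (ECT(0))
```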

RCM is nice, but it’s a concept that says nothing about the protocol/algorithm used to determine congestion at the congestion point and to notify the sender to reduce its transmission rate by x % for y amount of time.
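DCQCN, mentioned earlier, is one algorithm that fills this gap. The Python sketch below covers only its sender-side (reaction point) logic as described in the published DCQCN design: on each Congestion Notification Packet (CNP) the current rate is cut by a factor that depends on a running congestion estimate alpha, alpha decays while no CNPs arrive, and the rate then recovers towards a remembered target rate. The parameter values are illustrative assumptions, not vendor defaults, the class name is hypothetical, and hyper-increase, the actual timers and the minimum rate are left out.

```python
class DcqcnSender:
    """Simplified DCQCN reaction point; rates are in Gbit/s."""

    def __init__(self, line_rate: float, g: float = 1 / 256,
                 additive_step: float = 0.04, fast_recovery_steps: int = 5) -> None:
        self.line_rate = line_rate
        self.g = g                                      # gain for alpha (illustrative)
        self.additive_step = additive_step              # additive increase (illustrative)
        self.fast_recovery_steps = fast_recovery_steps  # steps before additive increase
        self.rate = line_rate         # current rate (RC)
        self.target_rate = line_rate  # target rate (RT)
        self.alpha = 1.0              # congestion estimate
        self.increase_events = 0

    def on_cnp(self) -> None:
        """Congestion notification received: remember the rate, then cut it."""
        self.target_rate = self.rate
        self.rate *= 1 - self.alpha / 2
        self.alpha = (1 - self.g) * self.alpha + self.g
        self.increase_events = 0

    def on_alpha_timer(self) -> None:
        """No CNP during the last alpha update period: decay the estimate."""
        self.alpha *= 1 - self.g

    def on_increase_timer(self) -> None:
        """Periodic increase: fast recovery first, then additive increase."""
        self.increase_events += 1
        if self.increase_events > self.fast_recovery_steps:
            self.target_rate = min(self.target_rate + self.additive_step, self.line_rate)
        self.rate = (self.target_rate + self.rate) / 2


if __name__ == "__main__":
    sender = DcqcnSender(line_rate=100.0)
    sender.on_cnp()
    print(sender.rate)  # 50.0: first CNP with alpha = 1 halves the rate
    sender.on_increase_timer()
    print(sender.rate)  # 75.0: fast recovery halfway back to the target
```

The design choice worth noticing is that the size of the cut is not fixed: the more CNPs a sender keeps receiving, the higher alpha stays and the harder it backs off.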

Meanwhile, Microsoft had this little cloud project called Azure in full swing, which really needed RDMA for all that East-West traffic and leveraged RoCEv2 to get it. They ran into some issues and, while they were at it, worked with their RoCE vendor to work them out. DCQCN was introduced as an algorithm on top of RCM (2015). Microsoft leverages PXE boot to deploy hosts, and PXE boot is broken by the VLAN tagging needed to carry the priority for PFC/ETS (DCB). They dealt with this by removing the VLAN tagging requirement and leveraging DSCP, which already exists for that purpose.
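The difference between trusting the VLAN priority and trusting DSCP can be sketched in a few lines of Python. DSCP lives in the upper six bits of the IP TOS / Traffic Class byte, so it survives on untagged access ports such as those used for PXE boot, whereas the PCP only exists when a VLAN tag is present. The DSCP-to-priority table and the `classify` function are hypothetical; which values you map is a policy choice that must match on hosts and switches.

```python
from typing import Dict, Optional

# Hypothetical policy: DSCP 26 -> lossless priority 3 (SMB Direct),
# DSCP 48 -> priority 6; everything else is best effort (priority 0).
DSCP_TO_PRIORITY: Dict[int, int] = {26: 3, 48: 6}


def dscp_from_tos(tos: int) -> int:
    """DSCP: the six high-order bits of the TOS / Traffic Class byte."""
    return (tos >> 2) & 0x3F


def classify(vlan_pcp: Optional[int], tos: int, trust: str) -> int:
    """Pick the priority (0-7) a switch port would assign to a packet.

    vlan_pcp is None for untagged frames, which carry no PCP at all.
    """
    if trust == "pcp":
        return vlan_pcp if vlan_pcp is not None else 0
    if trust == "dscp":
        return DSCP_TO_PRIORITY.get(dscp_from_tos(tos), 0)
    raise ValueError(f"unknown trust mode: {trust!r}")


if __name__ == "__main__":
    untagged_tos = 26 << 2  # DSCP 26 on an untagged (PXE-friendly) port
    print(classify(None, untagged_tos, trust="pcp"))   # 0: priority is lost
    print(classify(None, untagged_tos, trust="dscp"))  # 3: priority preserved
```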

If you think MSFT is the only one working on improving RoCE, you’re wrong. Other vendors are as well, and L3QCN (Huawei) is one such example. Then there is the discussion around improving RoCE on the NICs themselves via Improved RoCE NICs (IRN). Improved RoCE NICs deal with packet loss and don’t require PFC.

RoCE players

Mellanox cards are today among the most widely used RDMA cards out there. Mellanox overcame early issues with firmware and drivers, which I guess were due to a learning curve with the Windows OS. As long as you can handle PFC configuration correctly in smaller environments, you’re good. In large, more traffic-intensive environments DCQCN might need to come into play, and that adds to the complexity and the learning curve. But if you need it, you can handle it; if you can’t, you probably don’t need it, or you need to buy it as a solution. Think about what you did when you implemented FCoE. You bought a converged stack, didn’t you? My experience with Mellanox has been good, it’s the most common deployment out there, and I find many of my colleagues deploy it as well. But the complexity of lossless Ethernet remains a concern and must improve.

Broadcom (Emulex) has a RoCE offering, but in the field things have not gone smoothly and support issues arose. When it comes to Ethernet, Emulex has been in rough waters (remember the yearlong VMQ issues?), and in my humble opinion they’ll need to step up their game to overcome the negative perception. Once bitten, twice shy.

Cavium (QLogic) also has a RoCE (v1 and v2) offering. It’s special, as you’ll find Cavium in the iWarp section as well. They do what they call Universal RDMA: you can have both RoCE and iWarp running concurrently. Cavium seems to be a company that focuses on the strengths of its offerings, sees the value in all arguments and has decided to serve both iWarp and RoCE RDMA solutions to the world. More on that later. If the quality and support of their offering are as good as their eloquence and manners, this is a company to watch. The only “odd” thing is that RoCE configuration requires us to dive into the BIOS, which is not optimal from my point of view and which I’d like to see addressed. I also wonder how you would set two or three lossless priorities that way. I’d love to see one consistent way of configuring RoCE on the client across all vendors.

iWarp

Historically, iWarp has had to deal with some hard technological challenges in making RDMA over TCP/IP scale and perform. Today it seems to be doing that very well, and iWarp is in a stronger position than it was 4 years ago. At that time Intel fell off the iWarp bandwagon and Chelsio was left as the only vendor of iWarp cards. Chelsio also had some issues with drivers, some price hikes, and concerns around support and the shorter life cycles of its cards.

Technically, iWarp is not easy to do. Doing RDMA over TCP/IP as efficiently as over InfiniBand (or RoCE) is quite a challenge. But that’s for the iWarp vendors to handle, and it should not be our concern, especially as they have done a great job at it. It is interesting to see that some people figured out that iWarp could be improved by not dragging the entire TCP/IP stack onto the card but limiting it to UDP (iWarp over datagrams). The idea is to make iWarp consume less memory and scale better in HPC environments, which makes you think of how RoCEv2 (UDP) does things. I don’t know whether anyone ever took this idea any further.

The lure of iWarp for SMB Direct is that it doesn’t require DCB, or maybe even ECN (DCQCN), to work. With RoCE, at least DCB for PFC is a hard requirement. In that regard, iWarp is a lot more plug and play, and it will integrate a bit better into smaller brownfield scenarios where you might not have complete and final control over the network.

While big players like Azure leverage RoCEv2, we mere mortals do not normally deal with environments at that hyper-scale. Many of us talk about 4 to a couple of dozen servers, maybe a few hundred, and some of us perhaps thousands. So even if iWarp were not able to match the latency numbers that InfiniBand or RoCE achieve, does it matter? Depending on which test results you read, the difference is small, negligible or nonexistent.

If you need to get below 2 µs, does it matter whether you can do 600 ns? When is very low latency just bragging rights? What if those bragging rights just cause you headaches, and give Microsoft a bloody nose as they get support calls about anything to do with SMB 3 that are potentially just DCB configuration issues? There is a certain lure to that proposal, right? The danger I see is that saying iWarp just works because it’s TCP/IP might be too simplistic: you need to avoid congestion in the fabric as well to get good performance. On top of that, when NVDIMMs come into play, ultra-low latency becomes more and more important. You could conclude that you have to look at the use case when picking an RDMA technology. That’s sound advice for most technologies.
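The “does it matter?” question can be framed as simple arithmetic: what fraction of an I/O’s latency is spent on the network? The Python sketch below uses purely hypothetical latencies (2 µs versus 0.6 µs for the network, 100 µs for a flash device, 1 µs for an NVDIMM-class device) and ignores software stack overhead. These are not measurements, just numbers that show the shape of the argument.

```python
def network_share(network_us: float, device_us: float) -> float:
    """Fraction of total I/O latency spent on the network (software ignored)."""
    return network_us / (network_us + device_us)


# Hypothetical latencies in microseconds, chosen only to illustrate the point.
DEVICES = {"flash": 100.0, "nvdimm": 1.0}
FABRICS = {"2 us fabric": 2.0, "0.6 us fabric": 0.6}

if __name__ == "__main__":
    for device, device_us in DEVICES.items():
        for fabric, network_us in FABRICS.items():
            share = network_share(network_us, device_us)
            print(f"{device:7} over {fabric:14}: network is {share:.1%} of the I/O")
```

Under these assumptions the two fabrics are lost in the noise with flash (about 2% versus 0.6% of the I/O), while with a device that answers in about a microsecond the network dominates (about 67% versus 37.5%), which is why the NVDIMM point matters.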

Now that Intel is back in the iWarp game, that message is reinforced, because they are not doing RoCE. Do note that Intel competes with InfiniBand via Omnipath, so RoCE might be less attractive for them to do.

The current popular new kid on the iWarp block is Cavium (which bought QLogic). Yes, QLogic: the ones that sold their TrueScale tech to Intel and that are traditionally a strong FC player. However, with the acquisition of the Broadcom Ethernet business they gained a stronger Ethernet presence, and so far their firmware and drivers have been better than the Broadcom ones were in the past. Cavium brings RDMA to the table, and because the cards can do both RoCE and iWarp, they give people some investment protection should they change their mind about which flavor of RDMA they want or need to use. Maybe they need to use both (brownfield, where not every solution bought from a vendor gives you a choice). For storage vendors, it would allow storage arrays to talk to clusters that use either iWarp or RoCE.

Do note that because RoCE and iWarp cannot talk to each other, you have to be consistent within a solution. If you mix both, you create “RDMA silos” where the iWarp silo cannot talk RDMA to the RoCE silo. I said that Cavium lets you use both at the same time, but that doesn’t mean iWarp can talk to RoCE or vice versa.
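A quick way to reason about these silos is to treat each host as the set of RDMA transports its NICs speak and intersect those sets pairwise. The Python sketch below does exactly that; the host names and transport sets are hypothetical, with one dual-mode host standing in for a card that can do both RoCE and iWarp. Keeping RoCEv1 and RoCEv2 as separate entries is deliberate, since their wire formats differ (RoCEv1 runs directly on Ethernet, RoCEv2 over UDP/IP).

```python
from itertools import combinations
from typing import Dict, FrozenSet, Tuple

# Hypothetical inventory: host -> RDMA transports its NIC(s) can speak.
HOSTS: Dict[str, FrozenSet[str]] = {
    "hv01": frozenset({"RoCEv2"}),
    "hv02": frozenset({"RoCEv2"}),
    "hv03": frozenset({"iWarp"}),
    "array01": frozenset({"RoCEv2", "iWarp"}),  # dual-mode NIC
}


def rdma_paths(hosts: Dict[str, FrozenSet[str]]) -> Dict[Tuple[str, str], FrozenSet[str]]:
    """Transports two hosts share; an empty set means no RDMA between them."""
    return {
        (a, b): hosts[a] & hosts[b]
        for a, b in combinations(sorted(hosts), 2)
    }


if __name__ == "__main__":
    for (a, b), common in rdma_paths(HOSTS).items():
        status = ", ".join(sorted(common)) if common else "no RDMA (TCP only)"
        print(f"{a} <-> {b}: {status}")
```

The dual-mode host bridges both silos for its own traffic, but hv01 and hv03 still cannot do RDMA with each other.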

One thing is clear: Microsoft likes Cavium and iWarp right now. This shows in “Storage Spaces Direct with Cavium FastLinQ® 41000” and in “The Evolution of RDMA in Windows: now extended to Hyper-V Guests”. It gives them a better SMB Direct experience and potentially mitigates the need for, and complexity of, extending DCB into the guests.

Microsoft is doing business with Cavium on multiple fronts, by the way (Project Olympus and “Cavium™ FastLinQ® Ethernet First to Achieve Microsoft Windows Server SDDC Premium Certification”), so there is some love and mutual benefit at work here. What would be interesting is whether Azure is willing to run the experiment of using iWarp at public cloud scale.

If you’re confused about who’s buying what company or part of the product portfolio, don’t feel bad. It’s a bit of a confusing landscape after many years of (partial) acquisitions, sales etc., especially when this includes rights to some of the brand and product names.

Concerns around RoCE/iWarp: FUD or reality?

DCB & ECN (+ variants) for RoCE are complex

There is no doubt that DCB & ECN are configurations that will trip up (many) people. Depending on your use case, you might use iWarp to avoid that configuration when possible. Complexity is always a concern, and there is value in “Everything Should Be Made as Simple as Possible, But Not Simpler”. Do note that converging everything onto the same Ethernet fabric does not make things less complex, and that problems in converged environments can have a bigger blast radius and more fallout.
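Many of those trip-ups are simple mismatches between what a host and its switch port believe. As a hedged illustration of the kind of check automation can run, here is a Python sketch with a hypothetical data model: it flags lossless (PFC) priorities that differ between the two ends of a link and ETS reservations that add up to more than 100%. It is not a vendor tool; a real check would read these values from the hosts and switches rather than from hand-written objects.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List


@dataclass
class DcbConfig:
    """Hypothetical snapshot of one end of a link's DCB settings."""

    name: str
    pfc_priorities: FrozenSet[int]  # priorities configured as lossless
    ets_percent: Dict[int, int]     # priority -> ETS bandwidth reservation (%)


def check_link(host: DcbConfig, port: DcbConfig) -> List[str]:
    """Return human-readable problems; an empty list means none were found."""
    problems: List[str] = []
    if host.pfc_priorities != port.pfc_priorities:
        problems.append(
            f"PFC mismatch: {host.name} {sorted(host.pfc_priorities)} "
            f"vs {port.name} {sorted(port.pfc_priorities)}"
        )
    for cfg in (host, port):
        total = sum(cfg.ets_percent.values())
        if total > 100:
            problems.append(f"{cfg.name}: ETS reservations add up to {total}%")
        invalid = sorted(p for p in cfg.pfc_priorities | set(cfg.ets_percent)
                         if not 0 <= p <= 7)
        if invalid:
            problems.append(f"{cfg.name}: invalid priorities {invalid}")
    return problems


if __name__ == "__main__":
    host = DcbConfig("hv01-nic1", frozenset({3}), {3: 50})
    port = DcbConfig("tor1-port1", frozenset({3, 4}), {3: 50, 4: 60})
    for problem in check_link(host, port):
        print(problem)
```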

DCB & ECN for RoCE do not scale

True, in the sense that ultra-high throughput and very low latency are at odds with each other, and making that scale is a challenge to say the least. It requires a “near lossless” environment to pull off under heavy load, and whether we use RoCE or iWarp, making that happen is a complex matter. The iWarp benefit here is that TCP/IP is built to cope with lossy environments, recovering from dropped packets through retransmission. But whether that still works well enough for (hyper-)converged use cases when congestion gets out of hand in large-scale deployments is another matter. Right now, RDMA scales by having many smaller units of deployment, and that model will remain for a while. The real challenge is inter-DC communications.

Trade association versus Standards body

InfiniBand and RoCE come out of the Mellanox camp, and their standards are defined by the InfiniBand Trade Association. iWarp is an IETF standard. Some people prefer the fact that the IETF is a standards organization over the IBTA (a trade association). In practice, I’m a realist and I tend to focus on what matters and works. There are vendor SQL dialects (T-SQL, PL/SQL) and standard ANSI SQL, and the reality in the field is that people use what works for them. So, to me, this is not by definition a problem.

FUD, Infomercials & Marchitecture: extra work, expensive switches & complex configurations

Now all vendors involved have “infomercials” and “marchitecture” that play to their strengths but also bash the competition. Some do this a bit more aggressively than others, but for a European this style of marketing leaves a bad taste and raises my suspicion of foul play.

When I see the argument being made that 20 lines of PowerShell cause an enormous overhead, and as such scalability issues, due to the DCB configuration needed on every single Windows host, I laugh. In a highly automated environment, 20 extra lines of PowerShell will not kill the deployment process, nor will they hold back software-defined networking at scale.

The remark that RoCE requires more expensive switches with more advanced features is only valid for small-scale environments built with the cheapest possible, low port count 10Gbps switches. In any other setup, the higher-end commodity switches have the capabilities on board: Juniper, Mellanox, Force10, Cumulus, Cisco, …

When it comes to the extra configuration needed on switches, as well as the complexity of configuring them, there is indeed a challenge. Networking has traditionally been a slow adopter of true automation. But let’s face it, many other complex switch configurations exist as well. When doing networking at scale, handling automation and complexity is a need that has to be addressed; Software Defined Networking is here for a reason. For TCP/IP you might very well have to deal with DCB, ECN and DCTCP configurations on switches too, which also add complexity. Claiming “we have won, we are right & you are wrong” isn’t very helpful. Research into dealing with congestion is a “hot” subject in data center technology. All big players (Azure: https://www.microsoft.com/en-us/research/wp-content/uploads/2016/11/rdma_sigcomm2016.pdf, Google: http://conferences.sigcomm.org/sigcomm/2015/pdf/papers/p537.pdf) are working on this, as are many academics.
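For comparison, DCTCP (RFC 8257) is the TCP-side counterpart: it also relies on switches marking packets with ECN, and the sender shrinks its congestion window in proportion to how many packets were marked, rather than halving it like classic TCP. Below is a minimal Python sketch of that per-window reaction, using g = 1/16 as recommended in the RFC and a starting alpha of 1 as a conservative choice. Window growth, timers and slow start are deliberately omitted, and the class name is hypothetical.

```python
class DctcpSender:
    """Simplified DCTCP sender reaction; congestion window in segments."""

    def __init__(self, cwnd: float, g: float = 1 / 16) -> None:
        self.cwnd = cwnd
        self.g = g
        self.alpha = 1.0  # running estimate of the fraction of marked packets

    def end_of_window(self, acked: int, ce_marked: int) -> None:
        """Update alpha once per window of data and react to ECN marks."""
        if acked <= 0:
            return
        marked_fraction = ce_marked / acked
        self.alpha = (1 - self.g) * self.alpha + self.g * marked_fraction
        if ce_marked:
            # Cut in proportion to the measured congestion, never below 1 segment.
            self.cwnd = max(1.0, self.cwnd * (1 - self.alpha / 2))


if __name__ == "__main__":
    sender = DctcpSender(cwnd=100.0)
    sender.end_of_window(acked=100, ce_marked=10)
    print(round(sender.alpha, 5), round(sender.cwnd, 4))  # 0.94375 52.8125
```

After many windows without marks, alpha decays towards zero, so a later, isolated mark only trims the window slightly. The point for the iWarp versus RoCE debate is that this behavior also depends on ECN being configured on the switches.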

Mudslinging and FUD by vendors make their other valid concerns seem less trustworthy. It’s not a good approach. If anything, it could be a sign of how afraid they are of each other or how cutthroat the competition is.

Consider your use case

I’m not comfortable stating that either RoCE or iWarp is better suited to every use case at any scale. I will leave that to storage vendors (NVMe over Fabrics), cloud providers like Azure, AWS & Google, and OEMs to decide. Maybe Azure could switch to iWarp, perhaps at a certain cost in some respects, if the benefits gained outweigh that cost. I don’t know. At such scale, engineering issues will pop up. At my scale, RoCE has served me well, just like iWarp has.

My biggest concern: Vendor interoperability and a lack of competition at the NIC side

The most valid concern is whether RDMA will work properly between different vendors, both for switches and NICs. While I have done PFC between different switch types, I have always kept the RDMA NICs limited to a single vendor. One reason is to avoid interoperability issues; the other is that the choice was limited and not all vendors had a truly usable product out there. Because there are not that many vendors, that concern remains under control, but that might change faster as RDMA becomes ever more common. One test I am working on is letting Cavium RoCE and Mellanox RoCE cards communicate with each other in a cluster, or even between clusters.

Where are things heading or do I have a crystal ball?

In the future, with the complexity of RoCE not diminishing but seemingly increasing, and with it the confusion, it could very well be that iWarp steals the show in the SMB Direct space in the coming years. One reason is that any vendor leveraging SMB Direct doesn’t need or want the hassle of support calls about their applications that are actually DCB/ECN configuration issues or design mistakes. Complexity tends to drive up the number of those support calls. The other reason is that two vendors will challenge the current players for dominance in the iWarp market: Cavium, which has its products ready now, and probably Intel, which needs to speed up its efforts to compete.

None of the competing vendors will call it quits. InfiniBand is the odd one out in Wintel shops, so while it might be a good and relatively easy-to-implement option, it is a harder sell. It’s a separate fabric that doesn’t talk to other fabrics (it’s like FC in that respect), and in a world that has crowned Ethernet king due to its ubiquity and fast-evolving capabilities (200Gbps and beyond), it’s not seen as the way forward. I very much doubt it will be in the SMB Direct space.

RoCE has had a few years of perceived dominance since Intel dropped out of the iWarp business. The only vendor left at that time suffered from some early bad customer experiences, a bit of price gouging, ill-advised marketing techniques and longevity concerns, but they have since brought iWarp to 100Gbps at latencies that are very impressive nowadays.

Meanwhile, Mellanox did deliver with RoCE, but had scalability issues and suffered from the challenges associated with lossless Ethernet configurations. Time will tell whether they can address these. Early on they also had the usual driver/firmware issues (not unlike any other NIC in that respect). Some of the competing RoCE offerings were a disaster. Emulex created a bad RoCE experience, and their support statement around it is confusing to me. In the eyes of many, they’d better focus on FC, where they were the gold standard for a very long time. But they are under increased pressure from their competitors and they operate in a non-growth market, Fibre Channel. As such, moving into Ethernet was a smart move, but the execution was rather poor, resulting in some damage to their reputation.

Intel now backs iWarp, but the Omnipath roadmap is confusing a lot of people and it’s not yet a mainstream choice when you want to do RDMA. On the iWarp front, they’ll now deliver LOMs with iWarp at 10Gbps, which are bound to replace the standard 1Gbps LOMs over the years. That might give iWarp a boost in the server room once economies of scale come into play. But in the long term they’ll need to move beyond 10Gbps and deal with the concern about offloading CPU cycles. Intel seems to have an entry-level RDMA play and an HPC play competing with InfiniBand. This leaves plenty of space for companies that focus more on RDMA over Ethernet.

When I look at RDMA / SMB Direct to the guest, nested virtualization and containers, iWarp could very well have the advantage here, as getting DCB down to that level could be a lot more involved. Whether this will happen, and whether it could slow down RoCE adoption relative to iWarp in this area, remains to be seen. In terms of problems and support, the favor seems to be turning a bit towards iWarp. If that’s good enough and the support issues disappear, that’s understandable.

As a result, Cavium may be the proverbial dog that runs away with the bone while the others are fighting over it in the SME space. That is, if they execute well and the whole mood around RDMA flavors doesn’t change again. Even if it does, they have both horses in their stable, and they seem to be doing well at building relationships and making friends. But do not dismiss Mellanox and RoCE: they have been very active in Open Networking and in collaborating on SONiC (Microsoft’s Software for Open Networking in the Cloud) when it comes to RDMA. Cavium is also supporting this initiative.

Image courtesy of Microsoft

Conclusion

My personal score so far: since 2012 I have been running Mellanox RoCE in production, and I still do. I have tested other vendors for both RoCE and iWarp, but they did not last long. Basically, they made it to the lab but ended up in the cupboard, or were removed from production when Windows Server 2016 went RTM (longevity issue). Even with the DCB requirement, RoCEv1/RoCEv2 has worked very well for me, but I do control the entire stack in such cases. My efforts have, somewhere along the way, earned me the nickname “RoCE Balboa, the Belgian Bear”. Still, I’d hate to count on some mediocre service provider to get it all sorted out for me. iWarp could be the way out in such scenarios as well.

With regard to both iWarp & RoCE and getting some more competing offerings, I have Cavium cards in the lab right now for testing. It’s good to re-evaluate vendors and options every now and then. When I use iWarp, my DCB skills are not lost, just not needed in those environments. They do come in handy when I use RoCE or build converged networks. For smaller server deployments, RoCE with just PFC/ETS has not failed me at all for many workloads. With HCI in the picture and 25/50/100Gbps speeds, DCQCN/ECN complexity concerns are added to the mix. The entire learning curve and the bloody nose people got with PFC/ETS could repeat itself here. But let’s be honest: technologies are also needed to deal with TCP/IP issues under congestion (PFC, ETS, ECN, DCTCP, …), and not all of them are plug and play on the switch side either.

It might well be worth noting that the main focus of many people in the Wintel world is SMB Direct, and for good reasons: SMB Direct has many applications in the Windows OS. Do note, however, that the entire discussion around RDMA extends well beyond those use cases, and those can have other concerns & priorities than users of SMB Direct when it comes to choosing an RDMA flavor. RDMA is a technology growing in importance, and we have seen various points of view on which approach is superior. For SMB Direct with S2D, I can see people/OEMs sway to iWarp, if only to avoid the issues & support calls. Manage the complexity when needed, and avoid it when possible, if that can be done without problems.

You’ve read enough of my musings for now. That’s all this is, actually: thinking out loud and not accepting statements at face value. We’ll see what happens in the next 12 to 24 months. Algorithms & technology keep evolving. All I do is look around and map out the landscape to see what best fits the needs and requirements.

Image courtesy of Geralt at https://pixabay.com/photo-3213676/

I’ll keep my environments and solutions well connected and running. That’s where the value I provide lies, in delivering solutions and serving needs to make business run in a connected world. I make things work, the tools I use are selected for the job at hand.