This is the final installment of a four-part blog series on data management. The length of the series reflects the “mission-critical” nature of the subject: the effective management of data. For organizations confronting a tsunami of new data, estimated at between 10 and 60 zettabytes by 2020, data management is nothing less than a survival strategy. Yet few IT professionals know what data management is, let alone how to implement it sustainably. Hopefully, this series will help planners reach a new level of understanding and begin preparing for a cognitive data management practice.

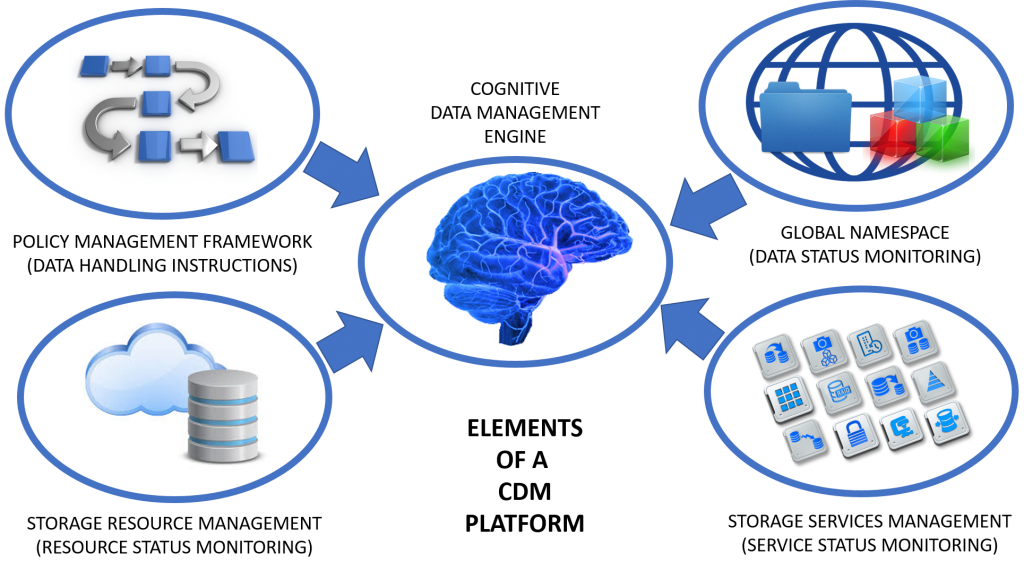

In previous installments of this series, we deconstructed the idea of cognitive data management (CDM) to identify its “moving parts” and to define what each part contributes to a holistic process for managing both files and more structured content.

First and foremost, CDM requires a Policy Management Framework that identifies classes of data and specifies the hosting, protection, preservation and privacy requirements of each class over its useful life. This component reflects the nature of data itself, whose access requirements and protection priorities tend to change over time.

Management policies that are defined in the Framework ultimately serve as the instructions or rules for a Cognitive Data Management Engine, providing direction regarding what data to move, the circumstances under which it must be moved, the characteristics of a suitable hosting target at a given time in the life of the data, and what services should be allocated to the data and/or target environment to fulfill data stewardship requirements at a given stage. A well-defined policy framework is critical for data management strategies to succeed.
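To make the idea of time-varying requirements concrete, here is a minimal sketch of how a policy framework might record one data class as a sequence of life-cycle stages, each with its own hosting tier and required services. Everything here is an assumption for illustration: the class names `StagePolicy` and `DataClassPolicy`, the age thresholds, and the tier and service labels are hypothetical and do not describe any particular product.

```python
from dataclasses import dataclass, field
from typing import FrozenSet, List

@dataclass(frozen=True)
class StagePolicy:
    # Requirements that apply once data reaches min_age_days (until the next stage begins).
    min_age_days: int
    tier: str                                   # hypothetical hosting tier label
    services: FrozenSet[str] = frozenset()      # hypothetical service labels

@dataclass
class DataClassPolicy:
    name: str
    stages: List[StagePolicy] = field(default_factory=list)

    def stage_for(self, age_days: int) -> StagePolicy:
        # The applicable stage is the one with the largest threshold the data has reached.
        reached = [s for s in self.stages if age_days >= s.min_age_days]
        if not reached:
            raise ValueError(f"no stage of {self.name!r} covers age {age_days}")
        return max(reached, key=lambda s: s.min_age_days)

# Example: a hypothetical "finance-records" class with three life-cycle stages.
finance = DataClassPolicy("finance-records", [
    StagePolicy(0, "flash", frozenset({"snapshot", "encryption"})),
    StagePolicy(90, "capacity-disk", frozenset({"encryption"})),
    StagePolicy(365, "archive", frozenset({"encryption", "worm"})),
])

print(finance.stage_for(10).tier)               # flash
print(finance.stage_for(100).tier)              # capacity-disk
print(sorted(finance.stage_for(400).services))  # ['encryption', 'worm']
```

Expressing the policy as data rather than code is what lets a separate engine consume it as "instructions": the engine only has to ask which stage applies to a given piece of data right now.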

The second and third components of a CDM model are Storage Resource Management (SRM) and Storage Services Management (SSM) engines. Storage resources comprise the physical storage kit and the physical links and interconnects that provide access to storage media so that data can be read and written. Storage Resource Management entails the ongoing measurement and reporting of capacity and performance details for each storage resource/target. Measurements are taken using whatever proprietary or de jure (standards-based) data collection methods the storage kit supports.

Support for the broadest range of storage resource status collection methods is key to a robust CDM strategy, since virtually no storage infrastructure is homogeneous (that is, built entirely from the same storage products from the same vendor). In fact, heterogeneity has become even more commonplace with the advent of proprietary storage silos promoted by flash array vendors and by many software-defined/hyper-converged infrastructure appliance vendors.

Heterogeneous infrastructure components may present many different protocols and methods for providing status information to an SRM collector. Some are standards-based while others are proprietary. A good SRM engine supports as many status collection methods as possible.
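One common way to support many collection methods is an adapter registry: each method gets its own collector that queries a target and normalizes the answer into one common status record. The sketch below assumes that design; `SRMEngine`, `ResourceStatus`, the method names and the canned values are hypothetical stand-ins for real device queries, not any vendor's interface.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

@dataclass
class ResourceStatus:
    target: str
    capacity_total_gb: float
    capacity_used_gb: float
    latency_ms: float

    @property
    def utilization(self) -> float:
        return self.capacity_used_gb / self.capacity_total_gb

# A collector is any callable that queries one target and normalizes the result.
Collector = Callable[[str], ResourceStatus]

class SRMEngine:
    def __init__(self) -> None:
        self._collectors: Dict[str, Collector] = {}

    def register(self, method: str, collector: Collector) -> None:
        # One adapter per collection method (standards-based or proprietary).
        self._collectors[method] = collector

    def poll(self, targets: List[Tuple[str, str]]) -> Tuple[List[ResourceStatus], List[str]]:
        # targets: (target_name, collection_method) pairs.
        statuses: List[ResourceStatus] = []
        blind_spots: List[str] = []
        for name, method in targets:
            collector = self._collectors.get(method)
            if collector is None:
                blind_spots.append(name)   # no adapter: the engine cannot see this target
                continue
            statuses.append(collector(name))
        return statuses, blind_spots

# Hypothetical adapters returning canned values in place of real device queries.
engine = SRMEngine()
engine.register("standard", lambda name: ResourceStatus(name, 1000.0, 250.0, 0.5))
engine.register("vendor-x-api", lambda name: ResourceStatus(name, 4000.0, 3000.0, 4.0))

statuses, blind = engine.poll([
    ("array-a", "standard"),
    ("hci-node-1", "vendor-x-api"),
    ("flash-silo-7", "vendor-y-cli"),   # no adapter registered
])
for s in statuses:
    print(s.target, f"{s.utilization:.0%}")   # array-a 25%, then hci-node-1 75%
print("unmonitored:", blind)                  # unmonitored: ['flash-silo-7']
```

The `blind_spots` list illustrates the point about breadth of support: every collection method the engine lacks an adapter for becomes a target whose capacity and performance the CDM engine simply cannot take into account.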

A Storage Services Management (SSM) facility administers the delivery of, and monitors the status of, special storage services, ranging from backup, snapshot, data reduction and migration to digital rights management, encryption, etc. These services may be provided as on-controller storage array functionality, as software-defined services operated under the auspices of an operating system or hypervisor, or as third-party software services instantiated as free-standing application software packages.

Often, storage services are what were previously referred to as “value-add” array functionality, typically used by storage hardware vendors to differentiate their array products from their competitors’ or to justify a higher price for their kit. Like storage resources, services are provided via “brokers” (usually software programs) that can themselves become saturated with requests or otherwise overwhelmed by demand. To avoid routing every request for a particular service to a single broker, and to balance requests across multiple brokers, status monitoring is required. That is what SSM is all about.
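As an illustration of how monitored broker status can drive load balancing, the following minimal sketch routes each service request to the least-loaded broker that offers the service and is not saturated. The `Broker` fields, the saturation threshold and the least-loaded strategy are all assumptions chosen for clarity; a real SSM facility might use other balancing rules.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Broker:
    name: str
    service: str           # e.g. "snapshot" or "encryption" (hypothetical labels)
    active_requests: int   # current demand, as reported by status monitoring
    max_requests: int      # point at which the broker is considered saturated

    @property
    def load(self) -> float:
        return self.active_requests / self.max_requests

def pick_broker(brokers: List[Broker], service: str) -> Optional[Broker]:
    # Consider only unsaturated brokers that offer the requested service.
    candidates = [b for b in brokers
                  if b.service == service and b.active_requests < b.max_requests]
    if not candidates:
        return None          # service currently unavailable; caller must wait or re-plan
    return min(candidates, key=lambda b: b.load)   # least-loaded broker wins

def dispatch(brokers: List[Broker], service: str) -> Optional[str]:
    broker = pick_broker(brokers, service)
    if broker is None:
        return None
    broker.active_requests += 1   # record the new request so later picks see it
    return broker.name

brokers = [
    Broker("array-snap", "snapshot", 4, 10),
    Broker("hypervisor-snap", "snapshot", 2, 10),
    Broker("backup-app", "snapshot", 10, 10),   # saturated, never chosen
]
picks = [dispatch(brokers, "snapshot") for _ in range(4)]
print(picks)   # ['hypervisor-snap', 'hypervisor-snap', 'array-snap', 'hypervisor-snap']
print(dispatch(brokers, "encryption"))   # None
```

Because `dispatch` updates the broker's request count, repeated calls spread work across brokers instead of piling onto whichever one looked best at the start.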

Taken together, SRM and SSM update the “knowledge” of the Cognitive Data Management Engine about its environment so that specific data can be directed to the right target based on the access performance characteristics (IO/response time, latency, capacity, etc.), as well as current load and the availability of appropriate services. The CDM facility takes the “instructions” of the Policy Management Framework and the status information maintained by the SRM and SSM components to identify options for data hosting and migration and ultimately to move the right data to the right place at the right time.
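The placement step can be sketched as a filter followed by a preference. In this minimal example, the policy supplies the required tier, services and latency ceiling; SRM-style status supplies free capacity and latency; SSM-style status supplies which services are currently available on each target. The `Target` record, the numbers and the "most free capacity, then lowest latency" preference are hypothetical choices for illustration only.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional

@dataclass
class Target:
    name: str
    tier: str
    free_gb: float             # from SRM capacity reporting
    latency_ms: float          # from SRM performance reporting
    services: FrozenSet[str]   # services with an available broker, per SSM

def choose_target(size_gb: float, tier: str, needed: FrozenSet[str],
                  max_latency_ms: float, targets: List[Target]) -> Optional[Target]:
    # Policy supplies tier, needed services and latency ceiling; SRM/SSM supply the rest.
    fits = [t for t in targets
            if t.tier == tier
            and t.free_gb >= size_gb
            and t.latency_ms <= max_latency_ms
            and needed <= t.services]          # subset test: every needed service present
    if not fits:
        return None
    # Among acceptable targets, prefer the most free capacity, then the lowest latency.
    return max(fits, key=lambda t: (t.free_gb, -t.latency_ms))

targets = [
    Target("cap-1", "capacity", 500.0, 6.0, frozenset({"encryption"})),
    Target("cap-2", "capacity", 2000.0, 9.0, frozenset({"encryption", "dedupe"})),
    Target("cap-3", "capacity", 3000.0, 25.0, frozenset({"encryption"})),  # too slow
]
choice = choose_target(100.0, "capacity", frozenset({"encryption"}), 10.0, targets)
print(choice.name if choice else None)   # cap-2
```

Returning `None` when nothing fits matters: it tells the engine that, under current conditions, no option satisfies policy, which is itself useful input for re-planning.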

To do this efficiently, the CDM engine also needs to monitor the status of the data (the files and objects) that it is acting upon. A global namespace of some sort is created in which metadata about files and objects is stored, together with the current location of each file or object. Periodic or continuous scanning of this indexed namespace alerts the CDM engine when “date last accessed,” “date last modified” or other metadata attributes signal the need to migrate data to a new location, or to add or delete certain protection, preservation or privacy services in accordance with policy.
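A periodic scan of such an index can be as simple as comparing each entry's timestamps against a threshold. The sketch below assumes a namespace entry holds a logical path, the current physical location, and last-accessed and last-modified times; the `NamespaceEntry` shape, the 90-day window and the sample paths and dates are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Iterable, Iterator

@dataclass
class NamespaceEntry:
    path: str                # logical name in the global namespace
    location: str            # current physical target
    last_accessed: datetime
    last_modified: datetime

def stale_entries(index: Iterable[NamespaceEntry], now: datetime,
                  idle_after: timedelta) -> Iterator[NamespaceEntry]:
    # Flag entries neither read nor written within the idle window.
    for entry in index:
        last_touch = max(entry.last_accessed, entry.last_modified)
        if now - last_touch >= idle_after:
            yield entry

now = datetime(2024, 1, 31)   # fixed "current time" so the example is repeatable
index = [
    NamespaceEntry("/projects/q4/report.docx", "flash-01",
                   datetime(2024, 1, 30), datetime(2024, 1, 15)),
    NamespaceEntry("/projects/2021/scan.tif", "flash-01",
                   datetime(2023, 6, 1), datetime(2021, 3, 2)),
]
for e in stale_entries(index, now, timedelta(days=90)):
    print(e.path, "on", e.location)   # /projects/2021/scan.tif on flash-01
```

Because the scan only reads the index, it never touches the data itself; the flagged entries are handed to the engine, which decides what, if anything, to do with them.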

In operation, the CDM engine assesses the current state of data on infrastructure and applies policies for re-hosting data in response to changes in its access and update characteristics and requirements. In so doing, it generally migrates data onto less performant, more capacious and lower-cost media over time, freeing expensive high-performance capacity for new data, and moves older files and objects into archives or the trash bin, depending on policy. In this way, CDM can optimize storage utilization efficiency automatically.

That’s the goal, at least. The reality is that cognitive processing is still very much a work in progress. Some CDM solutions use comparatively simple and straightforward Boolean logic or similar rules engines. Over time, as cognitive computing matures, more sophisticated analytical engines will be assigned the task of evaluating what data to move and where to move it.
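For a sense of what the simpler end of that spectrum looks like, here is a minimal first-match rules engine built from plain Boolean predicates. It encodes the kind of tiering behavior described earlier (demote idle data, archive cold data, purge expired archives, never touch data under legal hold). The `FileFacts` fields, rule names, thresholds and action labels are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

@dataclass
class FileFacts:
    tier: str
    days_since_access: int
    days_since_modified: int
    legal_hold: bool

@dataclass
class Rule:
    name: str
    condition: Callable[[FileFacts], bool]   # a plain Boolean predicate
    action: str                              # hypothetical action label

def evaluate(rules: List[Rule], facts: FileFacts) -> Optional[str]:
    # First matching rule wins, so more specific or protective rules go first.
    for rule in rules:
        if rule.condition(facts):
            return rule.action
    return None   # no rule fired: leave the data where it is

RULES = [
    Rule("honor-legal-hold", lambda f: f.legal_hold, "retain"),
    Rule("purge-expired", lambda f: f.tier == "archive" and f.days_since_modified > 7 * 365, "delete"),
    Rule("archive-cold", lambda f: f.tier == "capacity" and f.days_since_access > 180, "move:archive"),
    Rule("demote-idle", lambda f: f.tier == "performance" and f.days_since_access > 30, "move:capacity"),
]

print(evaluate(RULES, FileFacts("performance", 45, 45, False)))  # move:capacity
print(evaluate(RULES, FileFacts("archive", 10, 3000, True)))     # retain
```

The limitation is visible in the code: every threshold is fixed by hand, and the engine cannot learn that, say, a file accessed every quarter-end should stay put. That is the gap more sophisticated analytical engines would aim to close.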

For now, the best practice is to build a capability in a modular way, using the best components available. That way, advances in technology can be integrated with the platform as they become available. The time to start is now.