Let’s look at some performance tips for hardware that runs VMware ESXi. When installing the ESXi hypervisor on new (or older) hardware, you have to think about how to achieve the best possible performance, because the system will run many virtual machines (VMs) and needs to be tuned accordingly.

The usual advice is to use only VMware-compatible hardware, because hardware that is not on the Hardware Compatibility List (HCL), or is a bit outdated, might not be able to run the latest ESXi hypervisor. If this is the case, don’t worry. You can still try to install an older version of ESXi and use it for workloads that are not business-critical, such as infrastructure monitoring.

For a production cluster, however, you should use only VMware-certified hardware, that is, hardware you can find on the VMware HCL page. All server components, such as the storage controller, network interface cards (NICs), and CPU, should be listed on the HCL.

BIOS settings

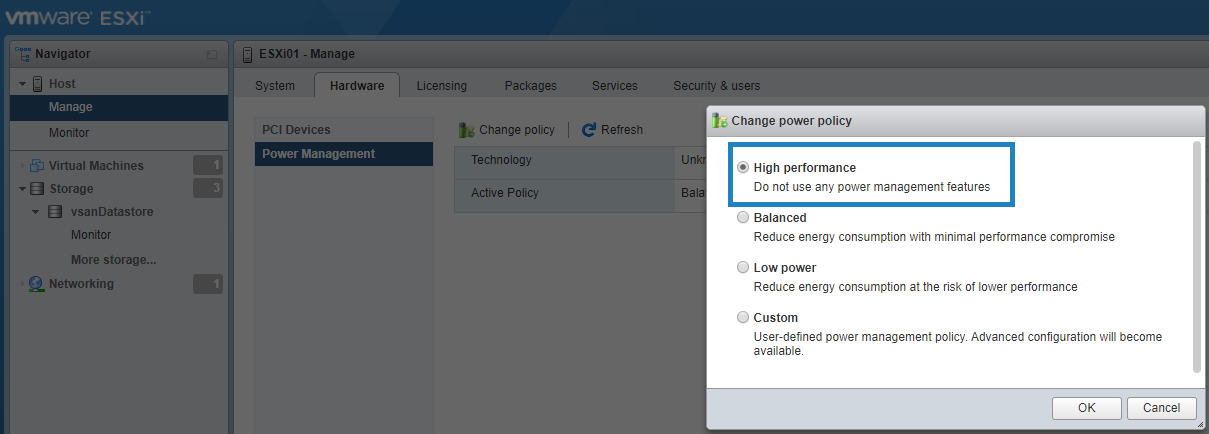

The first thing to look at when setting up a system is the BIOS of the machine. For the CPU power states, you should configure it in “performance” mode and not in “balanced” mode.

Another thing is unused hardware. There might be some COM ports, LPT ports, USB controllers, floppy drives, optical disk drives, NICs, or storage controllers which you won’t be using. After all, the server was made to be compatible with many different operating systems, but the VMware virtualization layer provides everything the VMs need as virtual hardware.

So, after installing ESXi, you might go back to the BIOS and disable your USB ports completely (unless ESXi itself boots from a USB stick). Power saving modes should also be disabled.

While you’re in the BIOS, also look at the hyperthreading setting, which you should enable, as well as VT-x, AMD-V, EPT, RVI, etc. The latter are hardware virtualization extensions for x86 CPUs.

Use High Performance Settings

Storage Configuration

Storage is a vast topic with enormous possibilities for improvement. If you care about performance, go all-flash. Storage performance depends on workload, hardware, vendor, RAID level, cache size, stripe size, and so on.

You should check documentation from VMware as well as the storage vendor for tips related to the specific hardware you’re planning to use. Many workloads are very sensitive to the latency of I/O operations.

Unless ESXi uses local storage, the performance of the storage network is what matters. Usually, ESXi is connected to an external storage array via iSCSI, NFS, or Fibre Channel, so the local disks aren’t used for workloads. Local storage is usually used for the ESXi installation itself, if the hypervisor is not installed on a USB stick or a SATADOM.

If you’re selecting a storage array (new or old), consider choosing storage hardware that supports VMware vStorage APIs for Array Integration (VAAI). This allows some operations to be offloaded to the storage hardware instead of being performed in ESXi.

VAAI can improve storage scalability, can reduce storage latency for several types of storage operations, can reduce the ESXi host CPU utilization for storage operations, and can reduce storage network traffic. When you clone,

Note: StarWind Virtual SAN has VAAI as one of the storage features. VAAI support allows StarWind to offload multiple storage operations from the VMware hosts to the storage array itself.

Some Network tips

You might want to use server-class NICs that support checksum offloading, TCP segmentation offloading, 64-bit DMA addresses, and jumbo frames. These features allow vSphere to make use of its built-in advanced networking support.
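To give a feel for what jumbo frames change, here is a small back-of-the-envelope sketch. It assumes plain TCP over IPv4 without header options and the standard Ethernet per-frame overhead; the function names are made up for this illustration and real-world gains depend on the workload and the whole network path.

```python
# Per-frame overhead on the wire for Ethernet carrying TCP over IPv4.
# Assumption: no VLAN tag and no IP/TCP header options.
ETHERNET_OVERHEAD = 8 + 14 + 4 + 12  # preamble+SFD, header, FCS, inter-frame gap
IP_TCP_HEADERS = 20 + 20             # IPv4 header + TCP header


def payload_efficiency(mtu: int) -> float:
    """Fraction of the bytes on the wire that carry application payload."""
    payload = mtu - IP_TCP_HEADERS
    on_wire = mtu + ETHERNET_OVERHEAD
    return payload / on_wire


def frames_needed(total_bytes: int, mtu: int) -> int:
    """Number of frames needed to move total_bytes of application payload."""
    payload = mtu - IP_TCP_HEADERS
    return -(-total_bytes // payload)  # ceiling division


for mtu in (1500, 9000):
    print(mtu, round(payload_efficiency(mtu), 4), frames_needed(10**9, mtu))
```

The efficiency gain per frame is modest, but the number of frames (and therefore the per-frame processing work) drops by roughly a factor of six, which is where most of the benefit tends to come from.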

For the configuration in vSphere, you can take advantage of best practices from VMware, which has published many whitepapers on this topic. Here are just a few examples that should be applied after installation. One of them is NIC teaming, where you can set up a failover order and benefit from a smart failover plan that automatically switches a failed NIC for a working one.

A NIC team can share the traffic load between physical and virtual networks among all of its members (or just certain ones), and also provide passive failover in the event of a hardware failure or network outage.
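As a rough illustration of how a team can both fail over and spread load, the sketch below models two uplink selection strategies: an explicit failover order and a simplified IP-hash scheme. This is only a toy model, not ESXi's actual implementation; the function names are hypothetical, and the hash (XOR of the two addresses, modulo the number of uplinks) is a simplification chosen for readability.

```python
import ipaddress
from typing import Dict, Optional, Sequence


def pick_by_failover_order(uplinks: Sequence[str],
                           link_up: Dict[str, bool]) -> Optional[str]:
    """Explicit failover order: use the first listed uplink whose link is up."""
    for name in uplinks:
        if link_up.get(name, False):
            return name
    return None  # no working uplink left


def pick_by_ip_hash(src: str, dst: str, uplinks: Sequence[str]) -> str:
    """Simplified IP-hash model: XOR both addresses, modulo the uplink count."""
    key = int(ipaddress.ip_address(src)) ^ int(ipaddress.ip_address(dst))
    return uplinks[key % len(uplinks)]


team = ["vmnic0", "vmnic1"]

# vmnic0 has failed, so traffic moves to the next uplink in the order.
print(pick_by_failover_order(team, {"vmnic0": False, "vmnic1": True}))

# Different source/destination pairs can land on different uplinks.
print(pick_by_ip_hash("10.0.0.1", "10.0.0.2", team))
print(pick_by_ip_hash("10.0.0.1", "10.0.0.3", team))
```

The point of the model is that a single conversation always maps to the same uplink, while many conversations between different endpoints spread across the team.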

NIC teaming can provide redundancy and load-balance the traffic. It can be configured in four different ways: route based on originating virtual port, route based on IP hash, route based on source MAC hash, or use explicit failover order. I invite you to read the detailed whitepaper from VMware called

VM optimization – Using VMXNET3 as the virtual adapter for your VMs reduces the overhead required for network traffic to pass between the virtual machines and the physical network. You might be surprised if you check some of your older VMs and see which adapter types they’re using. Perhaps you’ll find some E1000, E1000e, VMXNET, or VMXNET 2 adapters, which are the old, legacy ones.

TIP: RVTools is a great tool for scanning your virtual infrastructure and checking which of your VMs still have an old NIC type.
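If you export your inventory to CSV, a few lines of Python can flag the VMs that still use a legacy adapter. The sketch below is only an example: the column names `VM` and `Adapter` are assumptions about the export layout, so adjust them to whatever your file actually contains.

```python
import csv
import io

# Adapter types treated as legacy in this article; VMXNET3 is the preferred one.
LEGACY_ADAPTERS = {"e1000", "e1000e", "vmxnet", "vmxnet2"}


def normalize(adapter: str) -> str:
    """Lower-case and drop spaces/dashes so 'VMXNET 2' and 'Vmxnet2' match."""
    return adapter.lower().replace(" ", "").replace("-", "")


def find_legacy_nics(csv_text, vm_col="VM", adapter_col="Adapter"):
    """Return (vm, adapter) pairs whose adapter type is considered legacy.

    vm_col and adapter_col are hypothetical column names; change them to
    match the header row of your own export.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    return [
        (row[vm_col], row[adapter_col])
        for row in reader
        if normalize(row[adapter_col]) in LEGACY_ADAPTERS
    ]


sample = "VM,Adapter\nweb01,Vmxnet3\ndb01,E1000\nold01,VMXNET 2\n"
for vm, adapter in find_legacy_nics(sample):
    print(f"{vm}: {adapter} -> consider switching to VMXNET3")
```

Keep in mind that changing the adapter type requires the VMXNET3 driver (VMware Tools) inside the guest, so plan the switch per VM rather than in bulk.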

Replace old hardware

Old hardware usually consumes too much power and does not have enough performance to handle today’s workloads. Therefore, it’s important to plan for and replace aging hardware.

Too often, admins try to optimize their infrastructure and software when it is actually the hardware that has reached its limits.

New server hardware usually provides multi-core CPUs (8, 16, or 32 cores per CPU) with a lot of RAM. It is not uncommon to find 256 GB, 512 GB, or even 1 TB of RAM.

Physical RAM is usually the first resource to reach its limit, and only then do the VMware memory optimization techniques kick in. Hundreds of VMs configured with lots of vRAM can usually run on top of a system with a lot of physical RAM. The VMware license does not limit the amount of physical RAM.
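A quick way to see how close a host is to that limit is to compare the vRAM configured across all VMs with the physical RAM available. The helper below is a minimal sketch with made-up example numbers; the function name and the optional `reserved_gb` parameter (RAM set aside for the hypervisor itself) are assumptions for illustration.

```python
def overcommit_ratio(vm_vram_gb, host_ram_gb, reserved_gb=0.0):
    """Ratio of configured vRAM to physical RAM usable by VMs.

    A value above 1.0 means more vRAM is configured than the host
    physically has, so memory reclamation may be needed under load.
    """
    usable = host_ram_gb - reserved_gb
    if usable <= 0:
        raise ValueError("no physical RAM left for VMs")
    return sum(vm_vram_gb) / usable


# Example: 200 VMs with 4 GB of vRAM each on a host with 512 GB of RAM.
vms = [4] * 200
print(overcommit_ratio(vms, host_ram_gb=512))
```

A ratio somewhat above 1.0 is often fine because VMs rarely touch all of their configured memory at once, but the higher it goes, the more the host has to rely on techniques such as ballooning, compression, and swapping.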

When it comes to the choice of CPU, this might be a different story with the latest CPUs from AMD. It seems that AMD is really getting back in the race and provides a pretty good alternative to expensive Xeon CPUs. In fact, their EPYC 7002 series has set new world records in the VMware VMmark benchmark. You can read more details here.

Final words

We could write more on optimization tips for VMware ESXi, and there are a lot of topics that could be covered. Hardware, networking, and storage are the most important ones, as they form the base layer where the workloads run. Another optimization topic could be the virtual infrastructure itself: how to configure VMs, with which options, how many vCPUs, how much vRAM, etc. But we might save this for next time.