I have been exploring containers and the wider serverless ecosystem for about a year now and I was quite pleased when Amazon offered not only an EC2 containerisation system but also a serverless one.

You may well be asking what is so special about running a container using AWS's dedicated container wizards. After all, it is possible to just spin up an EC2 VM, install Docker and run a container on that, and this is certainly true. However, the AWS containerisation system offers some very neat features that help fold the whole stack into the DevOps world in terms of automation, elastic scale-out and so on. In this blog, I will be taking a look at the basics of Fargate: what it is and how to get a container up and running.

The first thing to understand is that Amazon offer two types of container system. With the first one, they will set up an EC2-based cluster for you via a wizard.

This means that not only do you pay for anything that the container may do (network traffic, storage, etc.) but you also pay for the EC2 resources. That may be perfectly fine if, for example, you need full control over such a cluster, but it may not suit test or dev cycles, or you may just have a small project that does not justify the expense of a full EC2-based Docker cluster. For those sorts of things, the second type, Fargate, is a very handy solution.

Under the hood, Fargate itself sits on an Amazon controlled EC2 cluster but this cluster is shared by multiple customers.

Amazon are understandably quiet on the tech, but I suspect that they have some level of dynamic scaling of the underlying VMs to ensure that there is always resource available to the containers that are running.

Something I found important to bear in mind with Fargate is that it is essentially a Docker engine. Anything you run on Fargate should be tested locally first to ensure that it works the way you expect it to. Fargate offers little more than a log in terms of troubleshooting tools to find out why a container isn’t working, and there is no way to interact with that container, so local testing is paramount. For example, if a container stops running, Fargate will remove it. With a local setup you can at least examine the container in more detail, get a bash prompt into it and so on. These options are not there with Fargate, which is why I strongly advocate testing a container locally first and only using Fargate to host a known, working solution.

To get a Fargate-based container up and running, there are a few prerequisites:

- A VPC needs to be set up with an internal IP range/subnet, etc.

- A Fargate cluster needs to be created

- A Fargate task definition needs to be set up

I always treat the VPC as just another site in terms of IP addresses. This makes things a lot easier should I need to set up a site-to-site VPN to that VPC, or should I need any sort of routing between VPCs later on.
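
To make the "just another site" idea concrete, here is a minimal sketch that checks whether a proposed VPC range clashes with ranges already in use elsewhere. The site names and CIDR blocks are made up for illustration; only the standard library `ipaddress` module is used.

```python
import ipaddress

# Hypothetical address plan: these site names and ranges are illustrative only.
EXISTING_SITES = {
    "head-office": "10.0.0.0/16",
    "branch": "10.1.0.0/16",
}


def overlapping_sites(vpc_cidr, sites):
    """Return the names of existing sites whose range overlaps the proposed VPC CIDR."""
    vpc_net = ipaddress.ip_network(vpc_cidr)
    return [
        name
        for name, cidr in sites.items()
        if vpc_net.overlaps(ipaddress.ip_network(cidr))
    ]
```

Calling `overlapping_sites("10.2.0.0/16", EXISTING_SITES)` returns an empty list, which suggests that range would be safe to route to later, whereas a range inside `10.1.0.0/16` would be flagged against the branch site.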

The Fargate task definition will tell Fargate which image to use and is used for scale out and other tasks.

For this blog, I will be focusing on just the Fargate side of things.

Create the Fargate Cluster

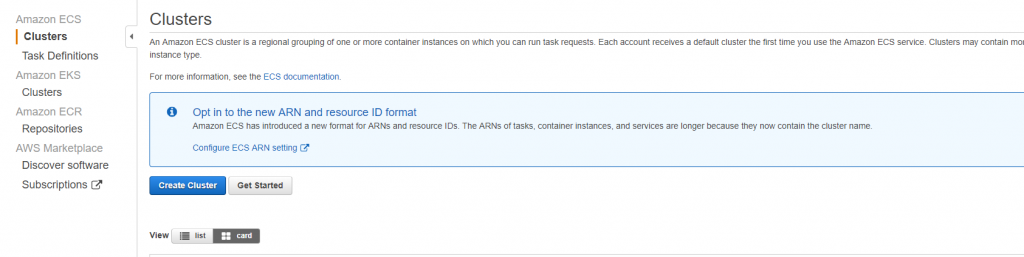

Under Amazon ECS, click on “Create Cluster”

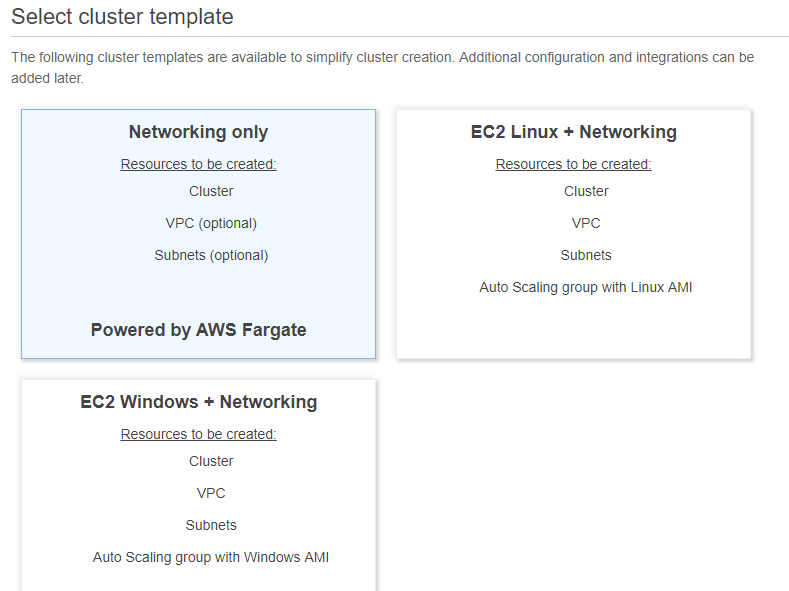

You will have a few options. Notice that the top left option is the only one that runs on Fargate itself; the other two create EC2-based virtual machines that can land you with a hefty bill. For this example, I am going for the networking only option, as I want Amazon to host the cluster for me and have this as a serverless setup.

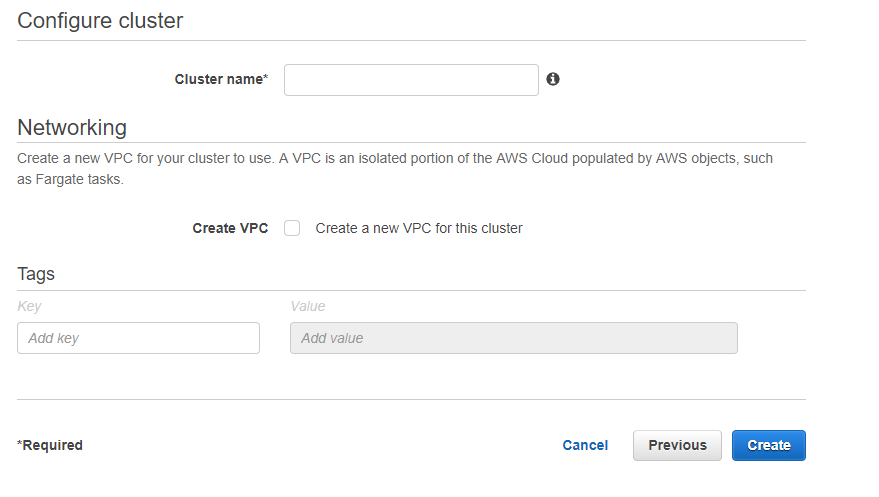

The final part is to give the cluster a name and state whether you need to create a VPC or not. As I already have an existing VPC, I will just give the cluster a name and hit create.

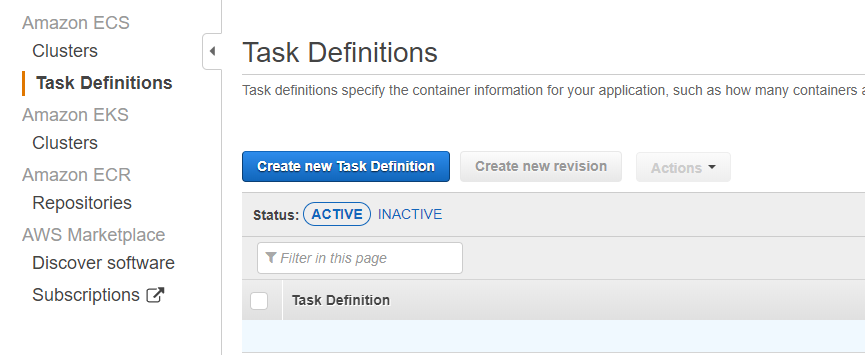
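
For anyone who prefers scripting to the wizard, the same step can be sketched in Python. This is only an outline under assumptions: the function name is mine, and `ecs_client` is expected to behave like `boto3.client("ecs")`, whose `create_cluster` call takes a `clusterName` and returns the new cluster's details.

```python
def create_fargate_cluster(ecs_client, name):
    """Create an ECS cluster and return its ARN.

    ecs_client is assumed to behave like boto3.client("ecs").
    No EC2 instances are registered, so tasks must later be
    launched with the FARGATE launch type.
    """
    response = ecs_client.create_cluster(clusterName=name)
    return response["cluster"]["clusterArn"]
```

With boto3 installed and credentials configured, this would be called as `create_fargate_cluster(boto3.client("ecs"), "my-cluster")`, where the cluster name is whatever you would have typed into the wizard.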

Create the task definition

Now that I have the cluster set up, I need to create a task definition to execute on the cluster. To do this, click into Task Definitions, then Create new Task Definition. At the next screen, click on Fargate and then next step.

The main task definition screen needs a few parameters to be filled out. Most can be left at their defaults, but you will need to give the task a name and select a task role. For this example, the default of ‘ecsTaskExecutionRole’ will work fine.

You will also need to allocate RAM and vCPU. For a simple container, 1 GB RAM and 0.5 vCPU will be enough. It is easy to update the task definition to grow the vCPU and RAM should it be required.

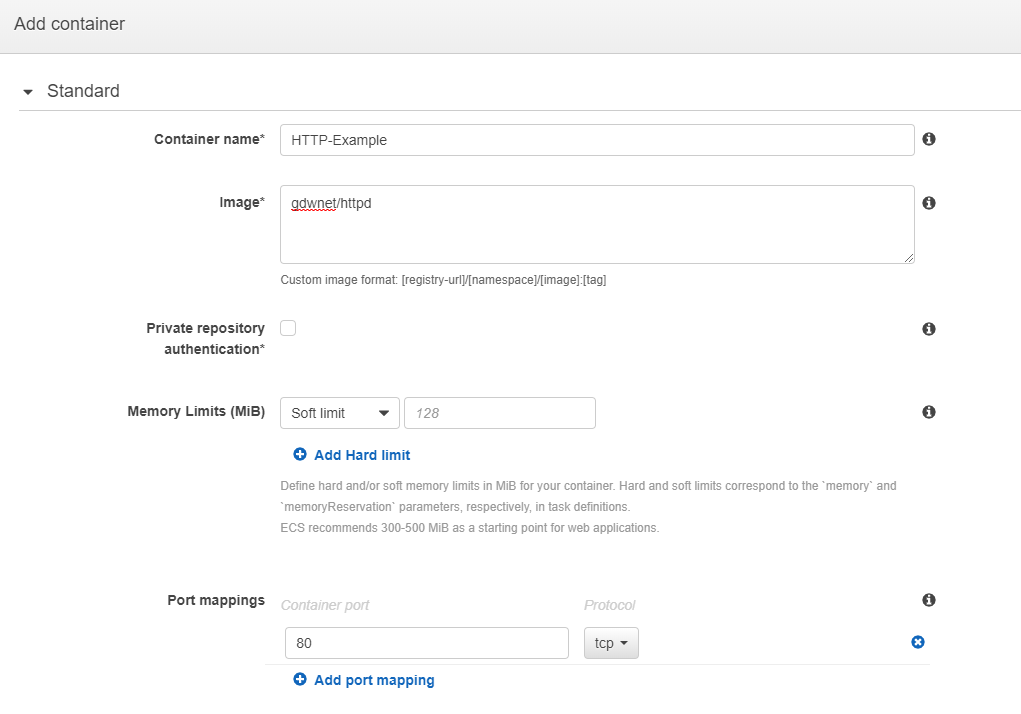
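
Fargate only accepts certain pairings of vCPU and RAM, which is why the console offers them as fixed choices. The sketch below encodes the pairings up to 4 vCPU as I understand them from the AWS documentation; treat the table as an assumption to check against the current docs, since larger sizes have been added over time. CPU is expressed in units where 1024 is one vCPU, and memory in MiB.

```python
# CPU units (1024 = 1 vCPU) mapped to the memory sizes in MiB allowed with them.
# Assumed from the AWS documentation for sizes up to 4 vCPU; verify before relying on it.
FARGATE_SIZES = {
    256: [512, 1024, 2048],
    512: list(range(1024, 4096 + 1, 1024)),
    1024: list(range(2048, 8192 + 1, 1024)),
    2048: list(range(4096, 16384 + 1, 1024)),
    4096: list(range(8192, 30720 + 1, 1024)),
}


def is_valid_fargate_size(cpu_units, memory_mib):
    """Return True if the CPU/memory pair appears in the table above."""
    return memory_mib in FARGATE_SIZES.get(cpu_units, [])
```

The 0.5 vCPU and 1 GB pairing used in this example corresponds to `is_valid_fargate_size(512, 1024)`, which the table accepts.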

The key part of the task definition is the ‘add container’ section, as this is where you define the details of the container that you wish to deploy to Fargate.

The Image is the container image that will be pulled down into Fargate. The one I am using here is hosted on Docker Hub in my own repository.

The final part is the port mappings. In this case, I will map port 80 externally to port 80 inside the container. When done, click on “add” and you should get a “succeeded” message.
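
Put together, the wizard fields above map onto a task definition document. The sketch below builds one as a plain Python dictionary using the field names that boto3's `register_task_definition` accepts; the function name, the family name, the image name and the role ARN are placeholders of mine rather than values from this walkthrough.

```python
import json


def build_task_definition(family, image, execution_role_arn,
                          cpu="512", memory="1024", port=80):
    """Build a Fargate task definition payload for a single container.

    cpu and memory are strings (CPU units and MiB), as the ECS API expects.
    """
    return {
        "family": family,
        "networkMode": "awsvpc",  # the network mode Fargate tasks use
        "requiresCompatibilities": ["FARGATE"],
        "cpu": cpu,
        "memory": memory,
        "executionRoleArn": execution_role_arn,
        "containerDefinitions": [
            {
                "name": family,
                "image": image,
                "essential": True,
                # In awsvpc mode the container port is exposed directly on the task's IP.
                "portMappings": [{"containerPort": port, "protocol": "tcp"}],
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder values for illustration only.
    payload = build_task_definition(
        "web-demo",
        "example/web-demo:latest",
        "arn:aws:iam::123456789012:role/ecsTaskExecutionRole",
    )
    print(json.dumps(payload, indent=2))
```

With boto3 available, the dictionary could be passed as `boto3.client("ecs").register_task_definition(**payload)`. Keeping the payload as data also makes it easy to store alongside the project and review changes to it.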

Run the container

Now that the ECS Fargate cluster, the VPC and the task definition are all set up, it is time to run the container.

To do this, click on clusters, cluster name, tasks and Run new Task:

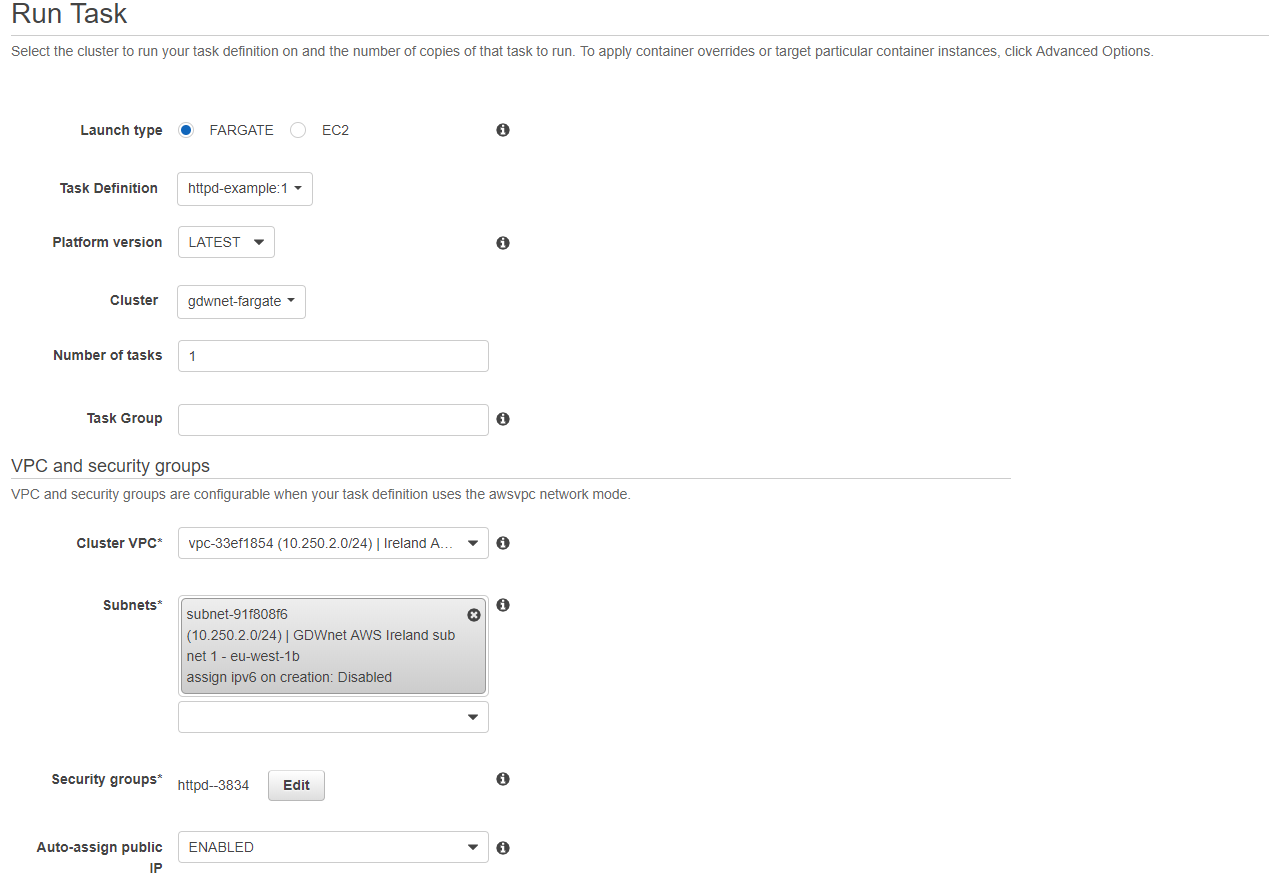

Again, there is a wizard to fill out. Most of the options are just drop downs that link into things like the cluster you wish to use, the task definition you want to launch and so on.

For this container, I am going to give it a public IP so it is reachable from the outside world. Note that the VPC MUST have an internet gateway connected, otherwise you may find that it cannot download the image from the container repository. This is true even if the repository is Amazon's own Elastic Container Registry and even if you give the container a public IP. The reason for this is that the public IP only applies to the container, and before that IP can be applied, the image must be downloaded.

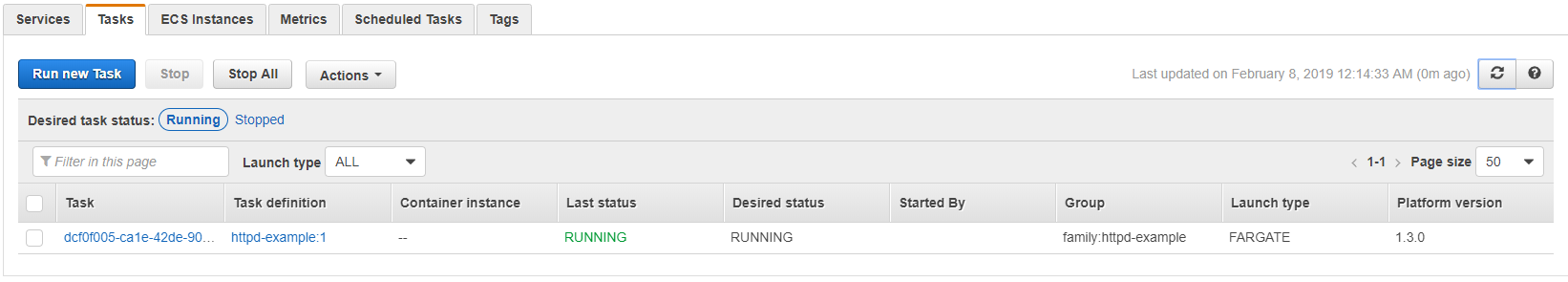
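
The choices made in this wizard correspond to the arguments of boto3's `run_task` call. As a rough sketch, the helper below (my own naming) assembles those arguments; the subnet and security group IDs you pass in would come from your own VPC.

```python
def build_run_task_request(cluster, task_definition, subnets,
                           security_groups, public_ip=True):
    """Assemble keyword arguments for an ECS run_task call on Fargate."""
    return {
        "cluster": cluster,
        "taskDefinition": task_definition,
        "launchType": "FARGATE",
        "count": 1,
        "networkConfiguration": {
            "awsvpcConfiguration": {
                "subnets": list(subnets),
                "securityGroups": list(security_groups),
                # The API expects the strings ENABLED or DISABLED here.
                "assignPublicIp": "ENABLED" if public_ip else "DISABLED",
            }
        },
    }
```

The result could then be used as `boto3.client("ecs").run_task(**request)`. Note that `assignPublicIp` only controls the address given to the task; the subnet still needs a route out of the VPC for the image pull to succeed.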

Finally, click on “run task” and the AWS console will step through several status changes of Provisioning, Pending and Running.

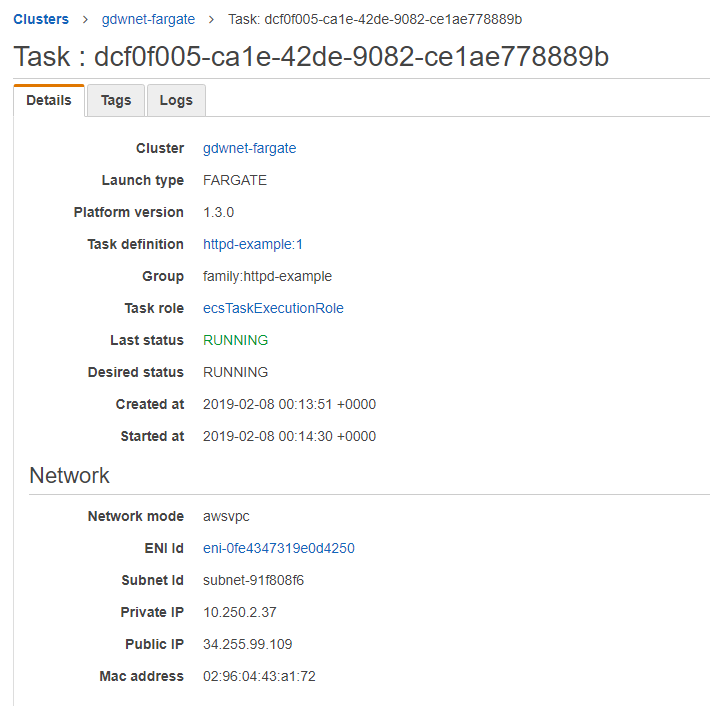
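
The same status progression can be watched from a script. This is a minimal polling sketch, assuming `ecs_client` behaves like `boto3.client("ecs")`, whose `describe_tasks` response reports each task's `lastStatus`; the function name and the polling defaults are my own choices.

```python
import time


def wait_until_running(ecs_client, cluster, task_arn,
                       poll_seconds=5, max_polls=60):
    """Poll a task until it reports RUNNING.

    Returns True once the task is RUNNING, and False if it reaches
    STOPPED or the polling budget runs out first.
    """
    for _ in range(max_polls):
        response = ecs_client.describe_tasks(cluster=cluster, tasks=[task_arn])
        tasks = response.get("tasks", [])
        if tasks:
            status = tasks[0]["lastStatus"]
            if status == "RUNNING":
                return True
            if status == "STOPPED":
                return False
        time.sleep(poll_seconds)
    return False
```

A `False` result is the cue to go and read the task's logs, since Fargate will not keep a stopped container around for inspection.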

Clicking on the task ID will show details about the container, including the allocated public IP.

As you can see, there are a few hoops to jump through in order to get a container up and running, but once the VPCs and task definitions are set up, launching the container is easy. A lot of the hoops are there to provide a level of flexibility around the container and the ability to run that container on either your own EC2 Docker cluster, the Fargate cluster or even an EKS cluster. This article really is just scratching the surface of what can be done with containers, and I do hope to touch on a few more aspects in future articles.