Introduction

These days, many organizations keep huge amounts of data on premises. Things worked like that from the very beginning of IT era when data was stored and processed in one place. In our fast-pacing world, we must take into consideration all possible ways to improve the operational cycle and bring innovation into it. You don’t move forward – you move backward.

You want to minimize storage and maintenance costs? Wanna get an offsite disaster recovery storage solution with five nines availability? Need an affordable and easily accessible cold storage? You can consider migrating to a cloud! The migration to the cloud is a logical way of IT infrastructure evolution.

Many companies provide cloud services but there are few leaders that have huge research centers and that are pioneers in the cloud storage sphere. One of the available solutions on the market is Amazon Web Services (AWS). It offers a cloud storage service, called Amazon S3, for managing data on a sliding scale—from small businesses to big data applications. Amazon S3 is an object storage that can store and retrieve any amount of data from anywhere.

Challenges during data migration process

When we’re ready to move to the cloud, how do we get this data to Amazon S3?

You can upload data to Amazon S3 or add records to Amazon Dynamo with standard tools using AWS CLI or from the AWS GUI. It is a very easy and convenient way to do things but what to do if you need to upload terabytes of data to the cloud through a limited bandwidth network interface? For example, if you have 50Mbps channel, uploading 20TB to the cloud will take about 5-8 days if everything goes as expected, with no interruptions and close-to-maximum upload speed. Sure, you can wait one week, if it is acceptable for you, but how would you upload hundreds of terabytes of data? What about petabytes? In this case, you should probably wait for years! Luckily, there are ways to speed up the process.

AWS Direct Connect

The first and the most logical solution would be extending the bandwidth of the network channel to the cloud and here Amazon offers AWS Direct Connect. With AWS Direct Connect, you get the private, dedicated, and consistent network path between your local data center and AWS. It simply establishes a physical network interface between your data center and an AWS Direct Connect router.

Depending on how much data you want to transfer to and out of the cloud, Direct Connect can decrease your overall bandwidth costs, compared with transferring the same amount through a regular internet connection, and of course, using AWS Direct Connect will speed up the transferring process.

Amazon S3 Transfer Acceleration

Another interesting solution is Amazon S3 Transfer Acceleration. Let’s say you have a centralized S3 bucket located in some region, and clients from all over the world upload their data to this bucket. So, here is the idea: the clients can upload data through the AWS Edge Location, then this data is routed to Amazon S3 bucket over an optimized network path. If you regularly transfer GBs of data across continents, you can greatly speed up the process.

Still, if you’re looking for a one-time large-scale data transfer and don’t need a persistent direct connection to AWS, take a look at AWS Snowball.

AWS Snowball

When it comes to transferring data to the cloud, especially in very large quantities, latency and bandwidth restrictions are the main concerns.

Actually, the network bandwidth is almost always a bottleneck in communication with a cloud. Sometimes, we must look back to basics, when people used pigeon post and some delivery services later to transfer data. In fact, physically moving huge amounts of data stored on hard drives can be more efficient and less time consuming than moving that same data over the network.

For just these sort of situations, AWS provides AWS Snowball. Snowball is a petabyte-scale data transport solution that uses secure appliances to transfer data into and out of the AWS cloud. It is a physical device that will be shipped to you after your request. Thus, you can receive your data from S3 bucket or export data to AWS by simply loading your data on a device and shipping it back to AWS.

While sending terabytes of your valuable data through any delivery service, you must be sure that it is protected, so one of the AWS requirements is data encryption. Snowball requires that you encrypt the data to be transferred and provides its own encryption tools and private decryption keys.

AWS recommends that if an expected data transfer over your network interface is going to take longer than a week, you might be interested in Snowball. By the way, you can use Snowball for transporting exabytes(!) of data. In this case, AWS will send you a semi-truck.

AWS Storage Gateway

It is a well-known fact that AWS S3 is an object storage with one global namespace and it can be a bit challenging to integrate it with other systems since it doesn’t present a traditional file system interface.

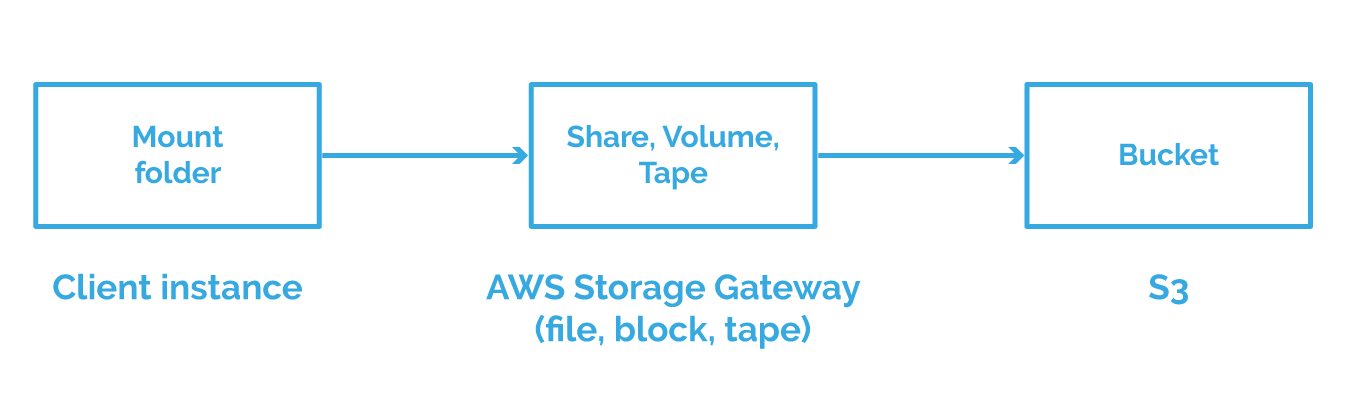

You can interact with object storage via restful HTTP calls, AWS CLI commands or via the AWS web console. But what if there was a way to make S3 mount for as a file system, for example? It can be configured to provide file (NFS), volume (iSCSI) or tape (VTL) interfaces.

That’s where AWS Storage Gateway comes in. You can consider Storage Gateway as an additional layer between your on-premise and Amazon S3. Simply speaking, Storage Gateway presents a front end to Amazon S3 and Glacier Storage.

So, as a file gateway, AWS Storage Gateway can present an NFS file system that is backed remotely by an S3 bucket. Volume gateway uses an iSCSI interface. Tape gateway presents a virtual tape library or VTL interface.

The problem is that AWS Storage Gateway doesn’t allow you to apply different retention policies between on-premises, fast cloud, and archival cloud. It is often a challenging task for the IT staff to properly select and deploy the required software or hardware and integrate separate components into a single system.

The problem is that AWS Storage Gateway doesn’t allow you to apply different retention policies between on-premises, fast cloud, and archival cloud. It is often a challenging task for the IT staff to properly select and deploy the required software or hardware and integrate separate components into a single system.

AWS Storage GatewayOf course, there are 3rd party companies that offer solutions intended to simplify and automate the data transferring process.

One of them is StarWind Cloud VTL for AWS. Besides the fact that it simulates physical tape storage so that you can create a tape storage pool on SAS/SATA drives, it can also automate tape offloading to Amazon S3 and its optional de-staging to Glacier. Moreover, you don’t have to make a special EC2 instance or download and configure a special ESXi/Hyper-V VM images as in case of AWS Storage Gateway. StarWind VTL is a native Windows application that requires very little system resources. It doesn’t have any storage limitations and works with many backup software solutions. StarWind Cloud VTL allows configuring a schedule for offloading tapes with a retention policy. This process is absolutely automated and secure and doesn’t require user access to AWS to move tapes to Amazon S3 and Glacier.

Conclusion

AWS offers various ways to make your data transfer to or from the cloud fast and convenient. That depends on your needs and requirements for the timeframes.

You can use standard tools like AWS CLI and AWS web Console or consider case-specific solutions.

For example, if you are not satisfied with your uplink throughput to AWS, you might want to consider using AWS Direct Connect. If you need to transfer huge amounts of data that even AWS Direct Connect won’t deal with, you are a candidate for using AWS Snowball. Transfer Acceleration is designed to optimize transfer speeds from across the world into S3 buckets. AWS Storage Gateway is a way to work with Amazon S3 object storage as with file, block or tape storage.

StarWind Cloud VTL can be used as an alternative to AWS Storage Gateway. With the configuration of StarWind VTL, the demand for options like AWS Snowball and Storage Gateway can be eliminated almost completely. The need for Direct Connect between the on-premises infrastructure and AWS would not be as high as previously.

So, I hope you will find this information useful when you need to upload data to AWS S3. With all these tools, transferring huge amounts of data become a breeze!

- StarWind Cloud VTL for AWS and Veeam

- AWS wants your Databases in the Cloud: Amazon Aurora offering up 5X Better Performance and PostgreSQL Compatibility