Introduction

In the previous material, we examined whether NVMe-oF can be used effectively to share fast storage over a network and how it affects performance. In practice, we established that both the Linux NVMe-oF Initiator and the StarWind NVMe-oF Initiator can operate with almost no performance decrease. That answers the question about the prospects of using an NVMe-oF initiator in real-life circumstances, but, like any benchmark, it does not cover every point and leaves many questions unanswered.

Today we are going to find answers to some of those.

Purpose

We are going to continue benchmarking the Linux NVMe-oF Initiator and the StarWind NVMe-oF Initiator in more depth, which should make the results more useful to system administrators. This time, we compare their performance over both TCP and RDMA using an Intel® Optane™ SSD DC P4800X, a setup that comes close to an actual business IT infrastructure.

Benchmarking Details & Results

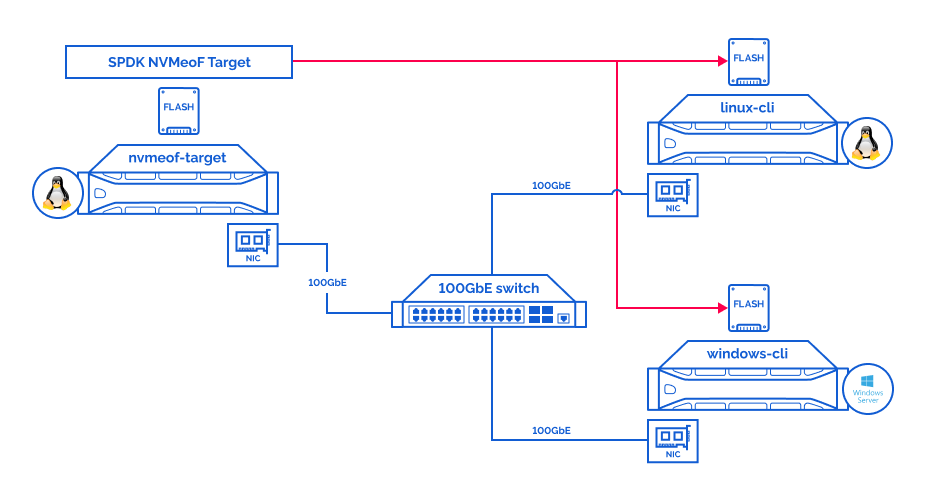

Testbed:

Hardware:

| nvmeof-target | Supermicro 2029UZ-TR4+ |
| --- | --- |
| CPU | 2x Intel® Xeon® Platinum 8268 Processor @ 2.90GHz |
| Sockets | 2 |
| Cores/Threads | 48/96 |
| RAM | 96 GB |
| Storage | 1x (NVMe) – Intel® Optane™ SSD DC P4800X Series (375GB) |
| NIC | Mellanox ConnectX-5 (100 GbE) |

Software:

| Component | Version |
| --- | --- |
| OS | Ubuntu 20.04.3 (5.4.0-90-generic) |
| SPDK | 21.07 |
| FIO | 3.16 |

Hardware:

| linux-cli, windows-cli | Supermicro 2029UZ-TR4+ |
| --- | --- |
| CPU | 2x Intel® Xeon® Platinum 8268 Processor @ 2.90GHz |
| Sockets | 2 |
| Cores/Threads | 48/96 |
| RAM | 96 GB |
| NIC | Mellanox ConnectX-5 (100 GbE) |

Software linux-cli:

| Component | Version |
| --- | --- |
| OS | Ubuntu 20.04.3 (5.4.0-90-generic) |
| FIO | 3.16 |
| nvme-cli | 1.9 |

Software windows-cli:

| Component | Version |
| --- | --- |
| OS | Windows Server 2019 Standard Edition (Version 1809) |
| FIO | 3.27 |
| StarWind NVMe-oF Initiator | 1.9.0.0 |
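Each run in the results is described by a pattern, a `numjobs` value, and an `iodepth` value. As a rough illustration only, the sketch below shows how such a run could be turned into an fio command line. The original does not list the exact fio options used, so the I/O engine (`libaio`), the 60-second runtime, direct I/O, and the device path `/dev/nvme0n1` are all assumptions, and "read 1M" and "write 1M" are assumed to be sequential.

```python
import shlex

# Pattern label from the result tables -> (fio --rw value, fio --bs value).
# Treating "read 1M" / "write 1M" as sequential is an assumption.
PATTERNS = {
    "random read 4k": ("randread", "4k"),
    "random write 4k": ("randwrite", "4k"),
    "random read 64K": ("randread", "64k"),
    "random write 64K": ("randwrite", "64k"),
    "read 1M": ("read", "1m"),
    "write 1M": ("write", "1m"),
}


def fio_command(pattern, numjobs, iodepth, filename,
                ioengine="libaio", runtime_s=60):
    """Build an fio argument list for one row of the result tables.

    ioengine and runtime_s are illustrative defaults, not values
    taken from the article.
    """
    rw, bs = PATTERNS[pattern]
    return [
        "fio",
        f"--name={rw}-{bs}",
        f"--filename={filename}",
        f"--rw={rw}",
        f"--bs={bs}",
        f"--numjobs={numjobs}",
        f"--iodepth={iodepth}",
        f"--ioengine={ioengine}",
        "--direct=1",
        "--time_based",
        f"--runtime={runtime_s}",
        "--group_reporting",
    ]


# Example: the local 4K random read run (numjobs=6, iodepth=4).
# /dev/nvme0n1 is a hypothetical device path.
cmd = fio_command("random read 4k", 6, 4, "/dev/nvme0n1")
print(shlex.join(cmd))
```

On Windows, fio would need a different engine (for example `windowsaio`) and a Windows-style device name. Write patterns pointed at a raw device destroy the data on it, so a command like this belongs on a test drive only.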

Benchmarking Results:

over TCP:

Local NVMe performance (Linux) vs. Linux native NVMe-oF Initiator (comparison columns give the second as a percentage of the first):

| pattern | Local numjobs | Local iodepth | Local IOPs | Local MiB/s | Local lat (ms) | Local CPU usage | Linux numjobs | Linux iodepth | Linux IOPs | Linux MiB/s | Linux lat (ms) | Linux CPU usage | Comparison IOPs | Comparison MiB/s | Comparison lat | Comparison CPU usage |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| random read 4k | 6 | 4 | 584000 | 2282 | 0.04 | 2.00% | 8 | 16 | 548000 | 2140 | 0.23 | 7.00% | 93.84% | 93.78% | 582.50% | 350.00% |
| random write 4k | 6 | 4 | 536000 | 2095 | 0.04 | 2.00% | 8 | 16 | 512000 | 2001 | 0.25 | 5.60% | 95.52% | 95.51% | 565.91% | 280.00% |
| random read 64K | 2 | 2 | 40400 | 2526 | 0.10 | 0.20% | 4 | 2 | 39900 | 2492 | 0.20 | 1.70% | 98.76% | 98.65% | 203.06% | 850.00% |
| random write 64K | 2 | 2 | 33700 | 2107 | 0.12 | 0.20% | 4 | 2 | 31500 | 1968 | 0.25 | 1.60% | 93.47% | 93.40% | 214.41% | 800.00% |
| read 1M | 1 | 2 | 2591 | 2591 | 0.77 | 0.10% | 1 | 2 | 2523 | 2523 | 0.79 | 0.60% | 97.38% | 97.38% | 102.59% | 600.00% |
| write 1M | 1 | 2 | 2072 | 2072 | 0.96 | 0.10% | 1 | 2 | 1948 | 1948 | 1.03 | 0.40% | 94.02% | 94.02% | 106.33% | 400.00% |

Local NVMe performance (Linux) vs. StarWind NVMe-oF Initiator for Windows (comparison columns give the second as a percentage of the first):

| pattern | Local numjobs | Local iodepth | Local IOPs | Local MiB/s | Local lat (ms) | Local CPU usage | StarWind numjobs | StarWind iodepth | StarWind IOPs | StarWind MiB/s | StarWind lat (ms) | StarWind CPU usage | Comparison IOPs | Comparison MiB/s | Comparison lat | Comparison CPU usage |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| random read 4k | 6 | 4 | 584000 | 2282 | 0.04 | 2.00% | 8 | 16 | 577000 | 2253 | 0.21 | 13.00% | 98.80% | 98.73% | 517.50% | 650.00% |
| random write 4k | 6 | 4 | 536000 | 2095 | 0.04 | 2.00% | 8 | 16 | 531000 | 2076 | 0.21 | 11.00% | 99.07% | 99.09% | 475.00% | 550.00% |
| random read 64K | 2 | 2 | 40400 | 2526 | 0.10 | 0.20% | 4 | 2 | 39100 | 2445 | 0.20 | 2.00% | 96.78% | 96.79% | 207.14% | 1000.00% |
| random write 64K | 2 | 2 | 33700 | 2107 | 0.12 | 0.20% | 4 | 2 | 31600 | 1972 | 0.25 | 2.00% | 93.77% | 93.59% | 213.56% | 1000.00% |
| read 1M | 1 | 2 | 2591 | 2591 | 0.77 | 0.10% | 1 | 2 | 2471 | 2471 | 0.81 | 1.00% | 95.37% | 95.37% | 104.80% | 1000.00% |
| write 1M | 1 | 2 | 2072 | 2072 | 0.96 | 0.10% | 1 | 2 | 1974 | 1974 | 1.01 | 1.00% | 95.27% | 95.27% | 104.88% | 1000.00% |

Linux native NVMe-oF Initiator vs. StarWind NVMe-oF Initiator for Windows (comparison columns give the second as a percentage of the first):

| pattern | Linux numjobs | Linux iodepth | Linux IOPs | Linux MiB/s | Linux lat (ms) | Linux CPU usage | StarWind numjobs | StarWind iodepth | StarWind IOPs | StarWind MiB/s | StarWind lat (ms) | StarWind CPU usage | Comparison IOPs | Comparison MiB/s | Comparison lat | Comparison CPU usage |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| random read 4k | 8 | 16 | 548000 | 2140 | 0.23 | 7.00% | 8 | 16 | 577000 | 2253 | 0.21 | 13.00% | 105.29% | 105.28% | 88.84% | 185.71% |
| random write 4k | 8 | 16 | 512000 | 2001 | 0.25 | 5.60% | 8 | 16 | 531000 | 2076 | 0.21 | 11.00% | 103.71% | 103.75% | 83.94% | 196.43% |
| random read 64K | 4 | 2 | 39900 | 2492 | 0.20 | 1.70% | 4 | 2 | 39100 | 2445 | 0.20 | 2.00% | 97.99% | 98.11% | 102.01% | 117.65% |
| random write 64K | 4 | 2 | 31500 | 1968 | 0.25 | 1.60% | 4 | 2 | 31600 | 1972 | 0.25 | 2.00% | 100.32% | 100.20% | 99.60% | 125.00% |
| read 1M | 1 | 2 | 2523 | 2523 | 0.79 | 0.60% | 1 | 2 | 2471 | 2471 | 0.81 | 1.00% | 97.94% | 97.94% | 102.15% | 166.67% |
| write 1M | 1 | 2 | 1948 | 1948 | 1.03 | 0.40% | 1 | 2 | 1974 | 1974 | 1.01 | 1.00% | 101.33% | 101.33% | 98.63% | 250.00% |
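The comparison columns in the TCP tables above appear to be the second configuration's value expressed as a percentage of the first. A minimal sketch of that arithmetic, using the 4K random read row of the local vs. Linux native initiator table, could look like this. The function and dictionary names are illustrative, not taken from the original.

```python
def relative_percent(baseline: float, measured: float) -> float:
    """Return `measured` as a percentage of `baseline`, rounded to 2 decimals."""
    return round(measured / baseline * 100, 2)


# 4K random read over TCP, values copied from the table:
# local NVMe (baseline) vs. Linux native NVMe-oF Initiator (measured).
local = {"iops": 584000, "mib_s": 2282, "lat_ms": 0.04, "cpu_pct": 2.0}
linux_tcp = {"iops": 548000, "mib_s": 2140, "lat_ms": 0.23, "cpu_pct": 7.0}

comparison = {key: relative_percent(local[key], linux_tcp[key]) for key in local}

for key, value in comparison.items():
    print(f"{key}: {value:.2f}%")
```

The IOPs, MiB/s, and CPU figures computed this way match the table (93.84%, 93.78%, 350.00%). The latency figure comes out at 575% rather than the listed 582.50%, presumably because the table's percentage was calculated from latencies that had not yet been rounded to two decimals.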

over RDMA:

Local NVMe performance (Linux) vs. Linux native NVMe-oF Initiator (comparison columns give the second as a percentage of the first):

| pattern | Local numjobs | Local iodepth | Local IOPs | Local MiB/s | Local lat (ms) | Local CPU usage | Linux numjobs | Linux iodepth | Linux IOPs | Linux MiB/s | Linux lat (ms) | Linux CPU usage | Comparison IOPs | Comparison MiB/s | Comparison lat | Comparison CPU usage |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| random read 4k | 6 | 4 | 584000 | 2282 | 0.04 | 2.00% | 6 | 4 | 583000 | 2279 | 0.04 | 3.20% | 99.83% | 99.87% | 101.75% | 160.00% |
| random write 4k | 6 | 4 | 536000 | 2095 | 0.04 | 2.00% | 6 | 4 | 533000 | 2084 | 0.04 | 2.50% | 99.44% | 99.47% | 100.00% | 125.00% |
| random read 64K | 2 | 2 | 40400 | 2526 | 0.10 | 0.20% | 2 | 2 | 40400 | 2525 | 0.10 | 0.40% | 100.00% | 99.96% | 100.00% | 200.00% |
| random write 64K | 2 | 2 | 33700 | 2107 | 0.12 | 0.20% | 2 | 2 | 34400 | 2147 | 0.12 | 0.40% | 102.08% | 101.90% | 98.31% | 200.00% |
| read 1M | 1 | 2 | 2591 | 2591 | 0.77 | 0.10% | 1 | 2 | 2560 | 2560 | 0.78 | 0.10% | 98.80% | 98.80% | 101.17% | 100.00% |
| write 1M | 1 | 2 | 2072 | 2072 | 0.96 | 0.10% | 1 | 2 | 2082 | 2082 | 0.96 | 0.10% | 100.48% | 100.48% | 99.59% | 100.00% |

Local NVMe performance (Linux) vs. StarWind NVMe-oF Initiator for Windows (comparison columns give the second as a percentage of the first):

| pattern | Local numjobs | Local iodepth | Local IOPs | Local MiB/s | Local lat (ms) | Local CPU usage | StarWind numjobs | StarWind iodepth | StarWind IOPs | StarWind MiB/s | StarWind lat (ms) | StarWind CPU usage | Comparison IOPs | Comparison MiB/s | Comparison lat | Comparison CPU usage |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| random read 4k | 6 | 4 | 584000 | 2282 | 0.04 | 2.00% | 8 | 4 | 574000 | 2241 | 0.05 | 11.00% | 98.29% | 98.20% | 130.00% | 550.00% |
| random write 4k | 6 | 4 | 536000 | 2095 | 0.04 | 2.00% | 8 | 4 | 513000 | 2005 | 0.06 | 11.00% | 95.71% | 95.70% | 134.09% | 550.00% |
| random read 64K | 2 | 2 | 40400 | 2526 | 0.10 | 0.20% | 2 | 2 | 40200 | 2511 | 0.10 | 1.00% | 99.50% | 99.41% | 100.00% | 500.00% |
| random write 64K | 2 | 2 | 33700 | 2107 | 0.12 | 0.20% | 2 | 2 | 34500 | 2155 | 0.12 | 1.00% | 102.37% | 102.28% | 97.46% | 500.00% |
| read 1M | 1 | 2 | 2591 | 2591 | 0.77 | 0.10% | 1 | 2 | 2589 | 2589 | 0.77 | 1.00% | 99.92% | 99.92% | 100.00% | 1000.00% |
| write 1M | 1 | 2 | 2072 | 2072 | 0.96 | 0.10% | 1 | 2 | 2095 | 2095 | 0.95 | 1.00% | 101.11% | 101.11% | 98.86% | 1000.00% |

Linux native NVMe-oF Initiator vs. StarWind NVMe-oF Initiator for Windows (comparison columns give the second as a percentage of the first):

| pattern | Linux numjobs | Linux iodepth | Linux IOPs | Linux MiB/s | Linux lat (ms) | Linux CPU usage | StarWind numjobs | StarWind iodepth | StarWind IOPs | StarWind MiB/s | StarWind lat (ms) | StarWind CPU usage | Comparison IOPs | Comparison MiB/s | Comparison lat | Comparison CPU usage |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| random read 4k | 6 | 4 | 583000 | 2279 | 0.04 | 3.20% | 8 | 4 | 574000 | 2241 | 0.05 | 11.00% | 98.46% | 98.33% | 127.76% | 343.75% |
| random write 4k | 6 | 4 | 533000 | 2084 | 0.04 | 2.50% | 8 | 4 | 513000 | 2005 | 0.06 | 11.00% | 96.25% | 96.21% | 134.09% | 440.00% |
| random read 64K | 2 | 2 | 40400 | 2525 | 0.10 | 0.40% | 2 | 2 | 40200 | 2511 | 0.10 | 1.00% | 99.50% | 99.45% | 100.00% | 250.00% |
| random write 64K | 2 | 2 | 34400 | 2147 | 0.12 | 0.40% | 2 | 2 | 34500 | 2155 | 0.12 | 1.00% | 100.29% | 100.37% | 99.14% | 250.00% |
| read 1M | 1 | 2 | 2560 | 2560 | 0.78 | 0.10% | 1 | 2 | 2589 | 2589 | 0.77 | 1.00% | 101.13% | 101.13% | 98.85% | 1000.00% |
| write 1M | 1 | 2 | 2082 | 2082 | 0.96 | 0.10% | 1 | 2 | 2095 | 2095 | 0.95 | 1.00% | 100.62% | 100.62% | 99.27% | 1000.00% |

RDMA vs TCP:

StarWind NVMe-oF Initiator for Windows over RDMA vs. StarWind NVMe-oF Initiator for Windows over TCP (comparison columns give the second as a percentage of the first):

| pattern | RDMA numjobs | RDMA iodepth | RDMA IOPs | RDMA MiB/s | RDMA lat (ms) | RDMA CPU usage | TCP numjobs | TCP iodepth | TCP IOPs | TCP MiB/s | TCP lat (ms) | TCP CPU usage | Comparison IOPs | Comparison MiB/s | Comparison lat | Comparison CPU usage |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| random read 4k | 8 | 4 | 574000 | 2241 | 0.05 | 11.00% | 8 | 16 | 577000 | 2253 | 0.21 | 13.00% | 100.52% | 100.54% | 398.08% | 118.18% |
| random write 4k | 8 | 4 | 513000 | 2005 | 0.06 | 11.00% | 8 | 16 | 531000 | 2076 | 0.21 | 11.00% | 103.51% | 103.54% | 354.24% | 100.00% |
| random read 64K | 2 | 2 | 40200 | 2511 | 0.10 | 1.00% | 4 | 2 | 39100 | 2445 | 0.20 | 2.00% | 97.26% | 97.37% | 207.14% | 200.00% |
| random write 64K | 2 | 2 | 34500 | 2155 | 0.12 | 1.00% | 4 | 2 | 31600 | 1972 | 0.25 | 2.00% | 91.59% | 91.51% | 219.13% | 200.00% |
| read 1M | 1 | 2 | 2589 | 2589 | 0.77 | 1.00% | 1 | 2 | 2471 | 2471 | 0.81 | 1.00% | 95.44% | 95.44% | 104.80% | 100.00% |
| write 1M | 1 | 2 | 2095 | 2095 | 0.95 | 1.00% | 1 | 2 | 1974 | 1974 | 1.01 | 1.00% | 94.22% | 94.22% | 106.09% | 100.00% |

Conclusion

Overall, both initiators showed impressive results, as expected. In IOPs and throughput, each initiator is on par with the other, whether you use RDMA or TCP. However, as always, there are a couple of nuances.

In general, the results are close: across all tested patterns, IOPs and throughput over either transport stayed within roughly 7% of local NVMe performance. As for the basic advantages of SPDK (Storage Performance Development Kit), such as running in user space and a polling-based asynchronous I/O model, both RDMA and TCP benefit from them. With StarWind NVMe-oF Initiator, you can use either transport and get comparable throughput.

In particular, if your NICs support RoCE, then RDMA is the better choice. Why? The answer is simple: latency. Even though in both cases you can reap the benefits for your storage, RDMA moves your data with less latency than TCP. In the 4K random tests, latency over RDMA stayed at 0.04 to 0.06 ms, against 0.21 to 0.25 ms over TCP (where the runs also used a deeper queue). That difference is not critical for most workloads, but if you run applications that are more latency-sensitive than usual, you will be better off with RDMA.
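One way to see why the higher TCP latency did not cost throughput in these tests is Little's law: sustained IOPs is roughly the number of outstanding requests divided by the mean latency. The sketch below applies it to the StarWind 4K random read rows. It assumes that `numjobs` × `iodepth` requests are kept in flight for the whole run and uses the rounded latencies from the tables, so the outputs are rough estimates rather than a reproduction of the measurements. The function names are illustrative.

```python
def expected_iops(numjobs: int, iodepth: int, latency_ms: float) -> float:
    """Little's law estimate: outstanding requests / mean latency (in seconds)."""
    outstanding = numjobs * iodepth
    return outstanding / (latency_ms / 1000.0)


def required_outstanding(target_iops: float, latency_ms: float) -> float:
    """Requests that must be in flight to sustain target_iops at this latency."""
    return target_iops * latency_ms / 1000.0


# StarWind NVMe-oF Initiator, 4K random read, values from the tables.
rdma_estimate = expected_iops(numjobs=8, iodepth=4, latency_ms=0.05)
tcp_estimate = expected_iops(numjobs=8, iodepth=16, latency_ms=0.21)

print(f"RDMA estimate: {rdma_estimate:,.0f} IOPs (measured: 574,000)")
print(f"TCP estimate:  {tcp_estimate:,.0f} IOPs (measured: 577,000)")

# Queue depth needed to reach the measured TCP result at TCP latency.
print(f"In-flight requests needed over TCP: "
      f"{required_outstanding(577000, 0.21):.0f}")
```

Under these assumptions, TCP reaches about the same IOPs as RDMA only by keeping roughly four times as many requests in flight (128 vs. 32). An application that cannot issue that many parallel requests would see the latency gap directly, which is the case where RDMA pays off.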

This material has been prepared in collaboration with Viktor Kushnir, Technical Writer with almost 3 years of experience at StarWind.