As most of you are probably aware, GitLab is, in part, a source code hosting service, and it suffered a major outage just a few weeks ago. Unusually, they posted a very full and frank report on what actually happened. It’s very rare for a company to write this kind of report, and even rarer for it to be made public. I wish more companies would do this. Even an internal review that puts the blame on the process would at least highlight where the weaknesses in the infrastructure are.

The GitLab outage document (https://about.gitlab.com/2017/02/01/gitlab-dot-com-database-incident/) is worth a read. The root cause of the outage was a very simple mistake that we’ve probably all made at one time or another:

> YP thinks that perhaps pg_basebackup is being super pedantic about there being an empty data directory, decides to remove the directory. After a second or two he notices he ran it on db1.cluster.gitlab.com, instead of db2.cluster.gitlab.com

The first thing that stands out is that this was simple human error.

It’s the sort of error that can easily occur because of the similar names in use. It is very easy to forget that you’re on a production system, and while trying to fix a problem it’s just as easy to trash production data.

Where I work, we’ve had the same sort of problems in the past. To rectify this, we’ve been experimenting with scripting on Linux and GPOs on Windows to make it obvious which system is production and which is UAT. On production Linux servers, scripts update the bashrc file so that the shell clearly shows the server is live.
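As a rough idea of what such a bashrc fragment could look like, here is a minimal sketch. It is not our actual script: the `SERVER_ENV` variable and the `env_label` and `env_colour` helpers are names I have made up for illustration, and it assumes something else (for example a deployment script) sets `SERVER_ENV` to `production` or `uat` on each host.

```shell
# Hypothetical: SERVER_ENV is set per host (for example in /etc/environment)
# to "production" or "uat". The variable name is an assumption for this sketch.
env_label() {
    case "${SERVER_ENV:-unknown}" in
        production) printf 'LIVE' ;;
        uat)        printf 'UAT' ;;
        *)          printf 'UNKNOWN' ;;
    esac
}

env_colour() {
    # ANSI background colours: 41 = red, 42 = green, 43 = yellow.
    case "$(env_label)" in
        LIVE) printf '41' ;;
        UAT)  printf '42' ;;
        *)    printf '43' ;;
    esac
}

# Only touch the prompt in interactive shells.
case "$-" in
    *i*)
        # Bold white label on a coloured background, then the usual user@host:dir.
        PS1="\[\e[1;37;$(env_colour)m\][$(env_label)]\[\e[0m\] \u@\h:\w\\$ "
        ;;
esac
```

With this in place, a production shell starts every prompt with a red `[LIVE]` tag, while a host with no `SERVER_ENV` at all shows a yellow `[UNKNOWN]` rather than silently looking safe.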

Over on the Windows side, GPOs update the desktop background via BgInfo to show whether the server is live or not:

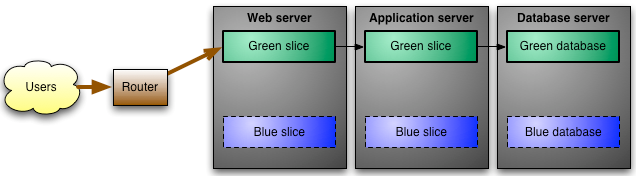

Along with marking servers as live/UAT, we’ve also been marking them with environment variables to say whether they are blue or green. This is part of a blue/green software deployment process. It works very well for web servers, SQL servers, and other application servers. Essentially, for a period of time (normally two weeks), the live servers are “blue” and the UAT servers are “green”. This means that software and other updates can be deployed to the “green” environment, and when all tests pass the environments can be swapped over with a few scripts that update the environment variables. It’s easier to show with a diagram:

In the above diagram, you can see that the router is sending all traffic to the green slice, which leaves the blue slice available for updates. Once the blue slice is ready to become production, it’s just a matter of running a script to cut things over and have the router send the traffic to the blue slice. If there are problems, it’s easy to roll back by pointing things back to the green slice, then reviewing what was missed, updating the documentation and checks, and trying again.
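To make the cut-over and roll-back idea a little more concrete, here is a minimal sketch of a swap script. It only records which slice is live in a state file; the file path, the `LIVE_SLICE_FILE` variable and the function names are all hypothetical, and a real script would also have to tell the router or load balancer about the change.

```shell
# Hypothetical state file read by whatever configures the router.
LIVE_SLICE_FILE="${LIVE_SLICE_FILE:-/tmp/live_slice}"

current_slice() {
    # Default to green if nothing has been recorded yet.
    if [ -f "$LIVE_SLICE_FILE" ]; then
        cat "$LIVE_SLICE_FILE"
    else
        printf 'green\n'
    fi
}

other_slice() {
    case "$1" in
        blue)  printf 'green\n' ;;
        green) printf 'blue\n' ;;
        *)     printf 'unknown slice: %s\n' "$1" >&2; return 1 ;;
    esac
}

cut_over() {
    # Swap live traffic to the idle slice; running it again rolls back.
    from="$(current_slice)"
    to="$(other_slice "$from")" || return 1
    printf '%s\n' "$to" > "$LIVE_SLICE_FILE"
    printf 'live slice: %s -> %s\n' "$from" "$to"
}
```

Because the swap is symmetric, rolling back is the same operation as cutting over: calling `cut_over` a second time points things back at the slice that was live before.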

One of the big issues with this type of environment is the duplication of servers. It basically doubles everything, and that can have a big impact on resources and licenses, but it is often the best way to ensure that there are options to recover from a bad deployment and to test out a new deployment in a like-for-like environment.

Another key thing to note is that this type of environment is well suited to a move to containers, which will make deployments even easier and make automatic scale up and scale down a reality, thanks to elastic scaling and container tooling such as Docker and Mesosphere.

Deploying a blue/green style environment does need a fair amount of work to make it a smooth, reliable process, and it really needs front-end servers that are as stateless as possible. It helps if everything that a deployment needs is documented. Once it’s set up, it really shouldn’t need too much administration to keep running. If you have load balancers, some will even dynamically change which slice they send traffic to by querying the host name, an environment variable or some other value. This is something I’ll be covering in a later post, as it’s a very handy way to let developers move servers between slices themselves.

Finally, always add warning signs to show when an environment is production. All of this helps to reduce the chance that someone will run a data-destroying command on a production system, but it will not eliminate it. None of this is a replacement for a good, tested backup regime. The 3-2-1 backup rule (three copies of your data, on two different types of media, with one copy offsite) gives you the best chance to recover should things go wrong.
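One more warning sign worth considering is a confirmation step in front of destructive commands. The sketch below is only an illustration under the same assumption as before, that a hypothetical `SERVER_ENV` variable marks the host as production. The `guard` function name is made up too. On production it makes you type the host name before the command runs, which is exactly the moment you would notice you are on db1 rather than db2.

```shell
# Hypothetical: SERVER_ENV marks the host as "production" or "uat".
guard() {
    # Usage: guard <command> [args...]
    if [ "${SERVER_ENV:-unknown}" = "production" ]; then
        host="$(uname -n)"
        printf 'This is PRODUCTION (%s). Type the host name to continue: ' "$host" >&2
        read -r answer || return 1
        if [ "$answer" != "$host" ]; then
            printf 'Host name did not match; command not run.\n' >&2
            return 1
        fi
    fi
    # Not production, or the host name was confirmed: run the command.
    "$@"
}
```

Something like `guard rm -rf /path/to/data` would then run straight away on UAT but stop and ask on a live server. Like the prompt colours, it reduces the risk rather than removing it.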