I'm primarily interested in comparing performance (i.e. "speed": IOPS, OS install time, file copies, etc.). Dedup will not be part of this test.

After reading the two write-ups linked below, I disagree with the idea of installing on a single HDD. No one who buys these products will ever install on a single hard drive.

http://blogs.jakeandjessica.net/post/20 ... art-1.aspx

http://www.starwindsoftware.com/starwin ... est-report

Questions for Anton & the crew, given the equipment that I have (see list below):

1) To achieve max performance, how should the SSDs be used?

2) Which version of StarWind should I use for testing?

3) Please feel free to add any other relevant info.

Equipment list:

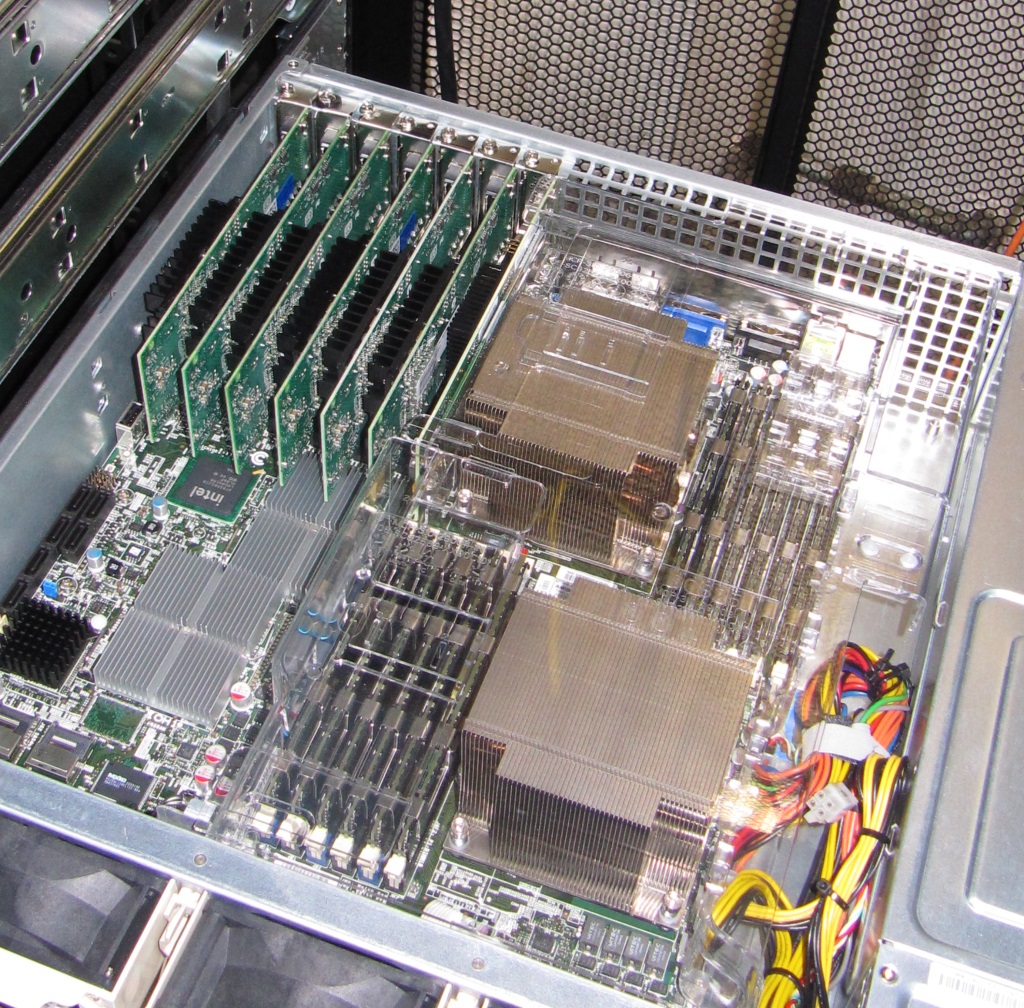

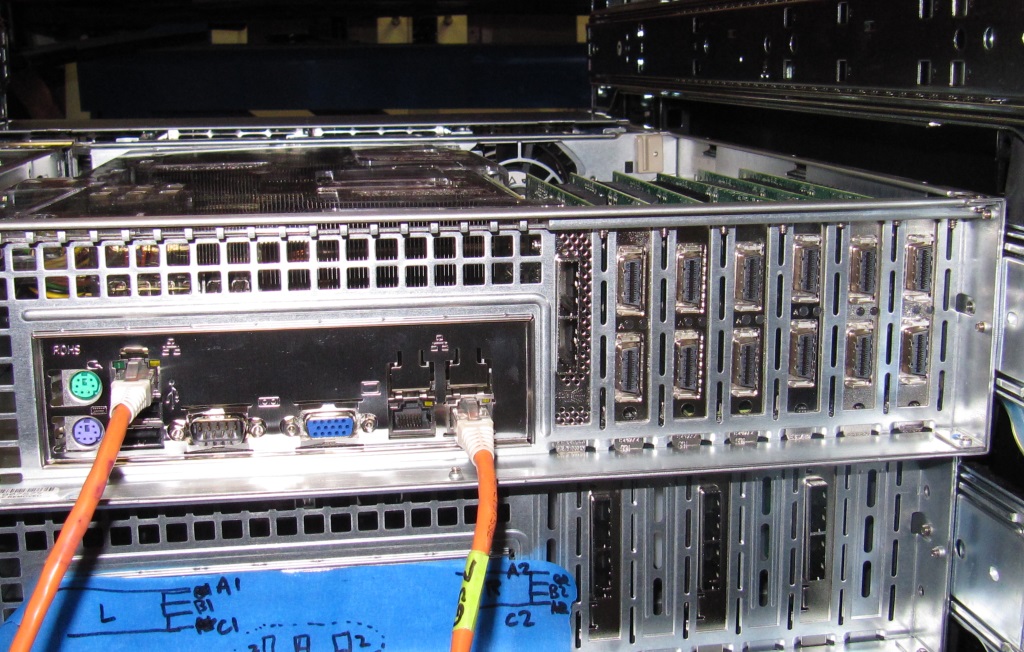

Head unit:

Chassis: SM SC826-R800LPB (No backplane)

Motherboard: SM X8DTH-6F

CPU: 2x Intel Xeon E5645 6 core

HBA: 1x LSI 9280-8E

RAM: 12 x 8GB Crucial ECC

NIC: 6x Intel EXPX9502CX4 10GbE

Boot SSD: 2x Samsung 830 128GB SSD (mirrored)

JBOD 1: SuperMicro SC216E26-R1200LPB

Slot 0: STEC SLC SSD

Slots 1-3: STEC MLC SSD

Slots 4-19: Seagate ST91000640SS (1TB 7200 rpm 6Gb/s SAS 2.5”)

Slots 20-23: reserved for future ST91000640SS (as budget allows)

JBOD 2: SuperMicro SC216E26-R1200LPB

Slots 0-23: reserved for future ST91000640SS (as budget allows)

JBOD 3: SuperMicro SC826E16-R800LPB

Slots 0-11: Seagate ST31000640SS (1TB 7200 rpm 3Gb/s SAS 3.5”)
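
For anyone sizing this up, here is a rough tally of the populated HDD slots as a small Python sketch. The names (`HDD_GROUPS`, `tally`) are made up for illustration only. It counts raw capacity with no RAID overhead, and it leaves out the SSDs because their sizes are not given in the list.

```python
# Populated HDD slots from the equipment list (slot ranges are inclusive).
# Each entry: (enclosure, first_slot, last_slot, capacity in TB per drive)
HDD_GROUPS = [
    ("JBOD 1", 4, 19, 1),  # Seagate ST91000640SS, 2.5" SAS
    ("JBOD 3", 0, 11, 1),  # Seagate ST31000640SS, 3.5" SAS
]


def tally(groups):
    """Return ({enclosure: (drive_count, raw_tb)}, total_drives, total_raw_tb)."""
    per_enclosure = {}
    total_drives = 0
    total_tb = 0
    for name, first, last, tb_each in groups:
        count = last - first + 1  # inclusive slot range
        per_enclosure[name] = (count, count * tb_each)
        total_drives += count
        total_tb += count * tb_each
    return per_enclosure, total_drives, total_tb


per_enclosure, total_drives, total_tb = tally(HDD_GROUPS)
for name, (count, raw_tb) in per_enclosure.items():
    print(f"{name}: {count} drives, {raw_tb} TB raw")
print(f"Total: {total_drives} drives, {total_tb} TB raw")
```

With the list as posted, that comes to 16 drives in JBOD 1 and 12 in JBOD 3, so 28 HDDs and 28 TB raw before any RAID or mirroring is applied.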