Introduction

Network I/O Control (NIOC), a vSphere Distributed Switch (vDS) mechanism for regulating network bandwidth per traffic type, is not widely known among VMware vSphere administrators. The likely reason is that NIOC is a vSphere Enterprise Plus feature. Because that license is quite expensive, many users never get a chance to try it out.

Still, I believe it is good to know about NIOC. Introduced in vSphere 4.1 back in 2010, this technology was a real breakthrough. NIOC appeared when 10G networking was becoming increasingly prevalent. It became clear that, thanks to high network throughput, you could get by with fewer network adapters in ESXi hosts. On the other hand, such a broad channel presented users with another challenge: distributing the massive network bandwidth between various traffic types.

In this article, I take a closer look at NIOC and discuss how to use it.

Getting under the hood of NIOC

NIOC allows system and network administrators to prioritize the following types of traffic for ESXi host network adapters:

- Fault Tolerance traffic

- iSCSI traffic

- vMotion traffic

- Management traffic

- vSphere Replication traffic

- Network file system (NFS) traffic

- Virtual machine (VM) traffic

- User-defined traffic

Since introducing NIOC in vSphere 4.1, VMware has kept working on it. VMware vSphere 6.7 Update 1 now features the third version of NIOC (NIOC v3).

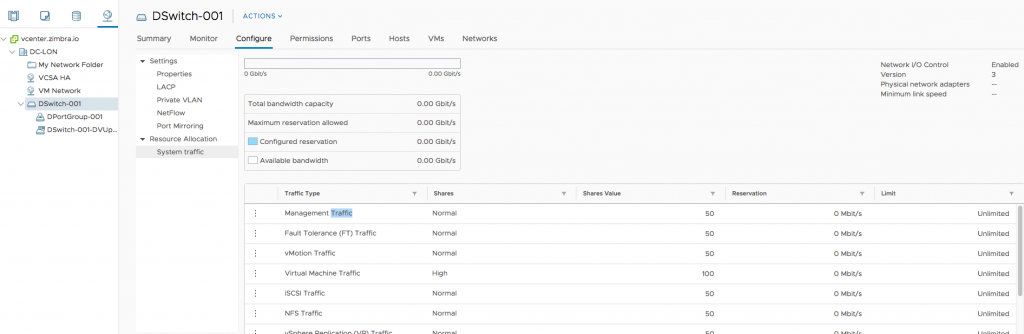

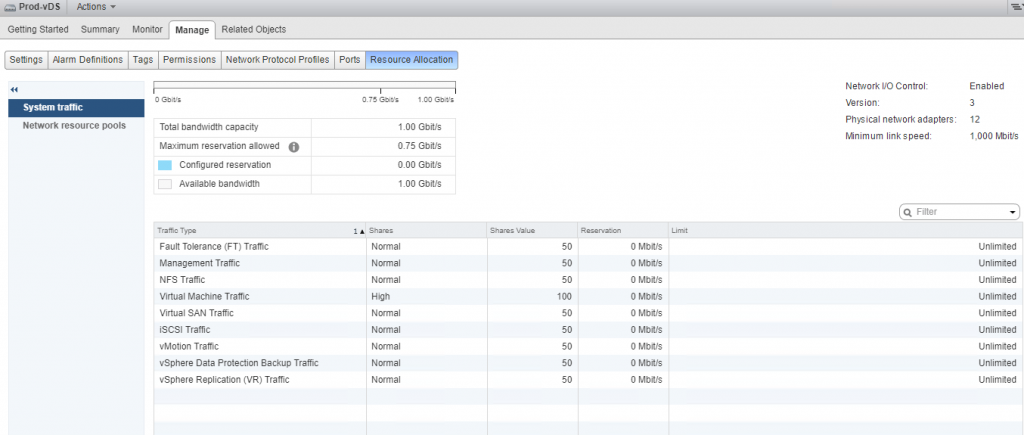

Good news: in vSphere 6.7 Update 1, the HTML5 Client fully supports NIOC workflows. The screenshots above show how you can prioritize all the traffic types on the vDS that I've just listed.

In vSphere Web Client, you can access all those settings at the following address:

vDS > Manage > Resource Allocation > System traffic

NIOC is enabled by default in vSphere 6.0 and higher. As you can see in the screenshots, there are three techniques for traffic regulation: Shares, Limit, and Reservation. The Shares parameter is set by default, while Limit and Reservation are not. All three mechanisms work in exactly the same way as their counterparts for compute and storage resource distribution. Let's go through them anyway.

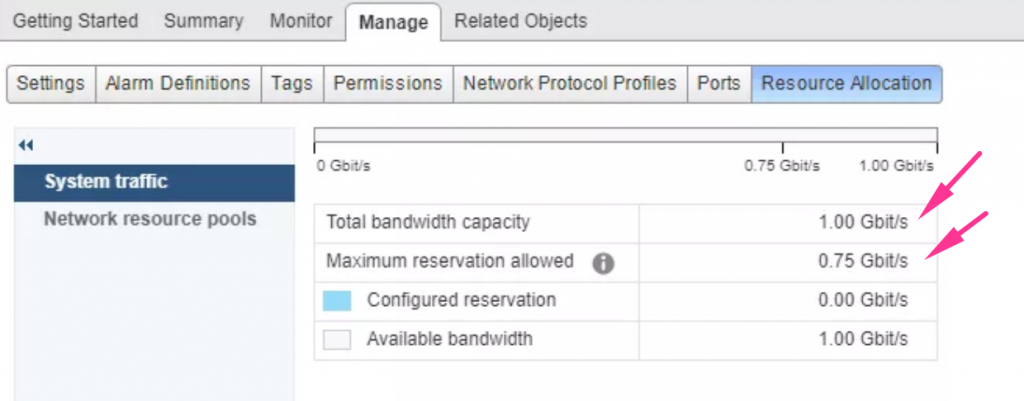

- Reservation guarantees a minimum network bandwidth, in Mb/s, on a single physical adapter. Even though Reservation sets aside part of the adapter's bandwidth, other types of system traffic can use that bandwidth while it is idle. Keep in mind, however, that bandwidth reserved for system traffic cannot be allocated to virtual machines, even when it sits idle. For this reason, always reserve for system traffic only the lowest bandwidth that the service needs to run properly: not the average, not the maximum, but the lowest sufficient value. Another important thing about Reservation: you can reserve at most 75% of the ESXi host network bandwidth. To be more specific, that is 75% of the bandwidth that the lowest-capacity NIC in the port group on that host can provide. The remaining 25% is left to absorb unpredictable events (e.g., management traffic bursts).

- Limit sets the highest network bandwidth that the specific type of traffic can consume.

- Shares set the relative priority of one system traffic type against the others, with values from 1 to 100. For instance, if one traffic type has 100 shares while another has 50, the former gets twice as much bandwidth as the latter. This mechanism distributes the channel only after the Reservation values that you set have been honored.
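To make the interplay of the three parameters more concrete, here is a minimal sketch of how bandwidth on one physical adapter could be divided under contention. It is a simplified model based only on the description above, not VMware's actual scheduler: the names `TrafficType` and `allocate` are hypothetical, every traffic type is assumed to be active and hungry for bandwidth, and limits are handled with a simple redistribution loop.

```python
from typing import Dict, NamedTuple, Optional


class TrafficType(NamedTuple):
    """Hypothetical container for the NIOC settings of one traffic type."""
    shares: int                      # relative priority, 1..100
    reservation: float = 0.0         # guaranteed minimum, Mb/s
    limit: Optional[float] = None    # upper bound, Mb/s (None = unlimited)


def allocate(capacity: float, traffic: Dict[str, TrafficType]) -> Dict[str, float]:
    """Split one adapter's capacity (Mb/s) between active traffic types.

    Simplified model: reservations are honored first, the rest is split
    in proportion to shares, and no type may exceed its limit.
    """
    total_reserved = sum(t.reservation for t in traffic.values())
    # The article states that at most 75% of the adapter can be reserved.
    if total_reserved > 0.75 * capacity:
        raise ValueError("reservations exceed 75% of adapter capacity")

    alloc = {name: t.reservation for name, t in traffic.items()}
    remaining = capacity - total_reserved
    open_names = set(traffic)  # types that can still take more bandwidth

    while remaining > 1e-9 and open_names:
        total_shares = sum(traffic[n].shares for n in open_names)
        capped = set()
        distributed = 0.0
        for name in open_names:
            t = traffic[name]
            offer = remaining * t.shares / total_shares
            room = float("inf") if t.limit is None else max(0.0, t.limit - alloc[name])
            take = min(offer, room)
            alloc[name] += take
            distributed += take
            if take < offer:
                capped.add(name)  # hit its limit; leftover goes to the others
        remaining -= distributed
        if not capped:
            break
        open_names -= capped
    return alloc
```

For example, calling `allocate(10000, {"vmotion": TrafficType(shares=100), "vm": TrafficType(shares=50)})` on a 10G adapter gives vMotion twice the bandwidth of VM traffic, which matches the 100-versus-50 example above.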

Configuring NIOC

The network bandwidth that is not allocated by Reservation is distributed according to the Shares mechanism.

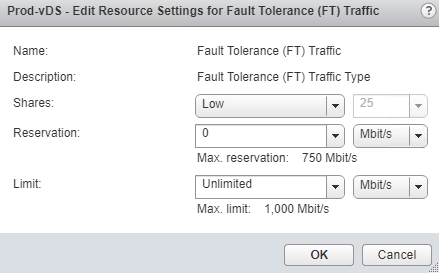

To change the Reservation, Limit, or Shares parameter for a specific type of network traffic (e.g., Fault Tolerance), you just need to open its resource settings.

You can set the value for Shares using the combo box instead of typing a number. Just select one of the following options: Low (25), Normal (50), or High (100). The exact share values are set automatically according to the option you choose. The settings apply to all ESXi host physical adapters that are connected to the vDS in vCenter Server.

Once you specify a value for the Reservation parameter, the aggregate reserved bandwidth equals that value times the number of physical adapters assigned to that traffic.

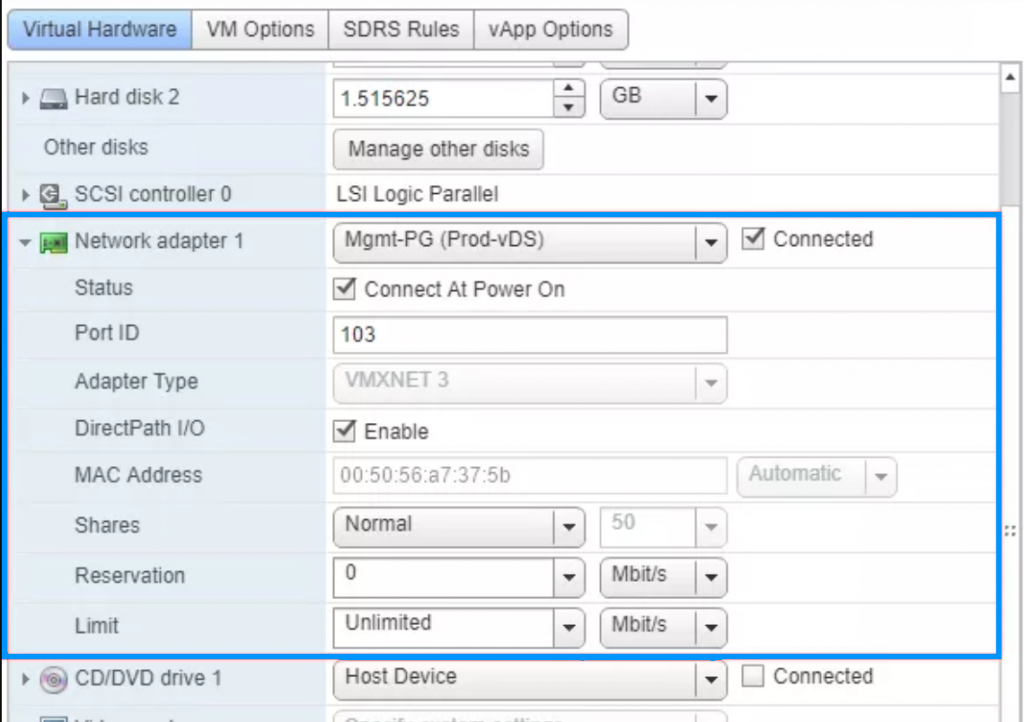

NIOC v3 allows configuring Reservation for individual virtual machines (in vNIC settings) or by creating User-Defined Network Resource Pools, which are a subset of Virtual machine (VM) traffic. Note that for User-Defined Network Resource Pools, you cannot set Limits or Shares, only Reservations.

Once again, Reservation allocates the minimum guaranteed network bandwidth. So even if you set a Reservation for the vNIC of a specific VM, that virtual machine can consume more bandwidth if any is available. The image below shows how to reserve bandwidth for the vNIC:

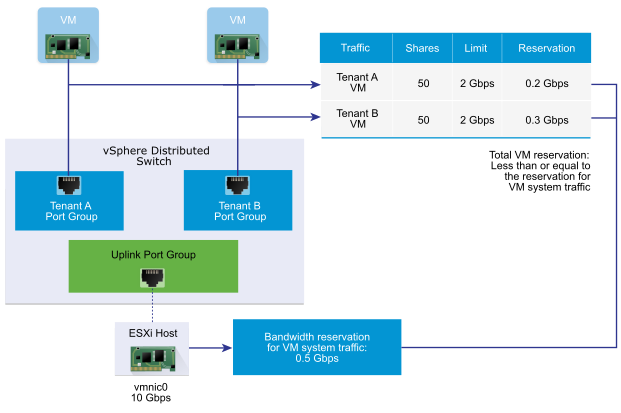

When specifying the Reservation parameter for vNICs, note that the total reserved network bandwidth cannot exceed the VM system traffic reservation on that host:

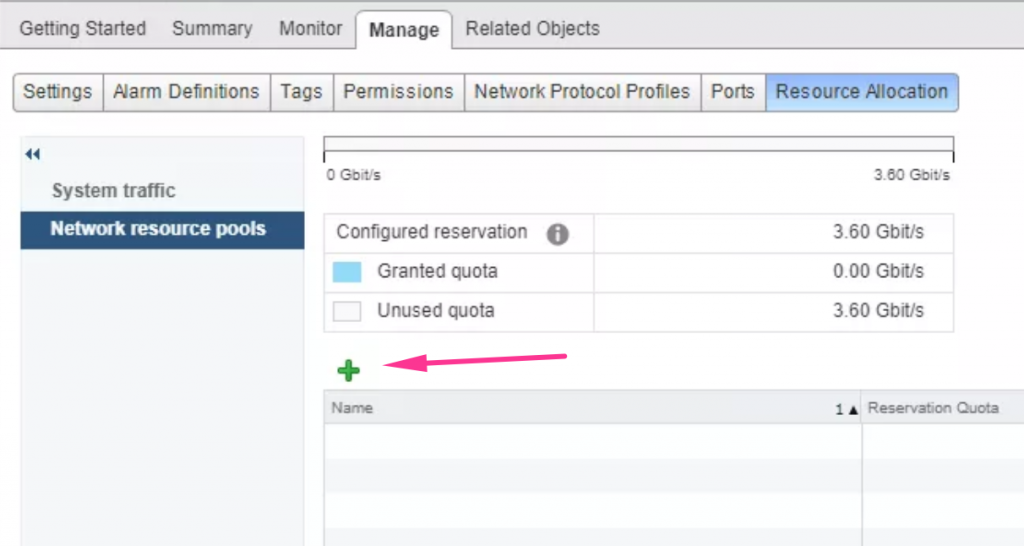

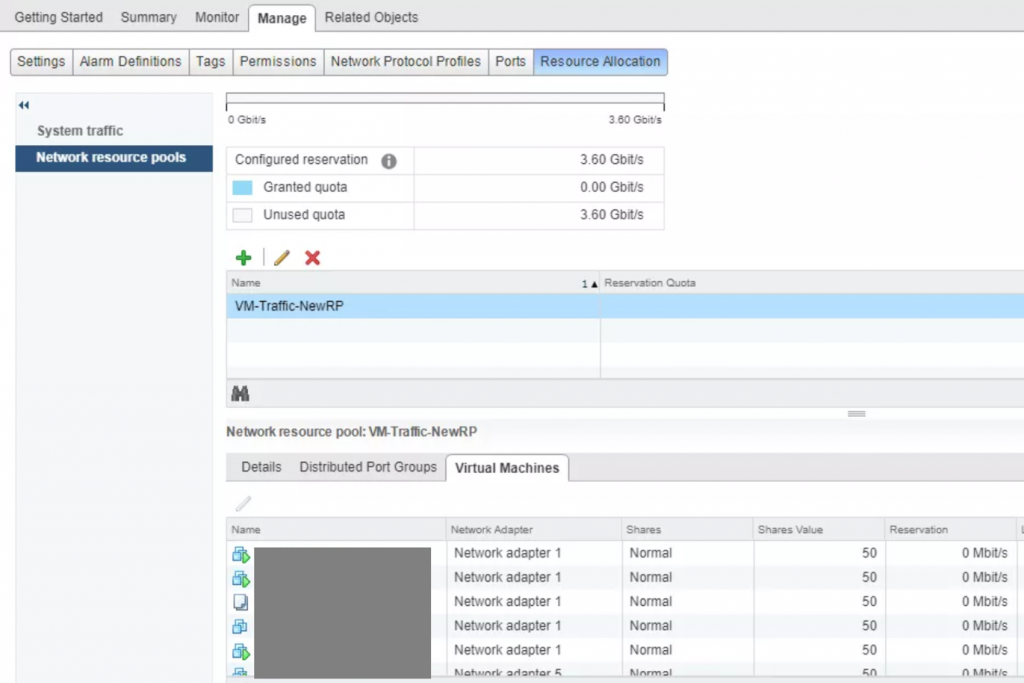

Now, let’s take a look at how you can set User-Defined Network Resource Pools for groups of virtual machines. First, you need to create the pool in vSphere Web Client:

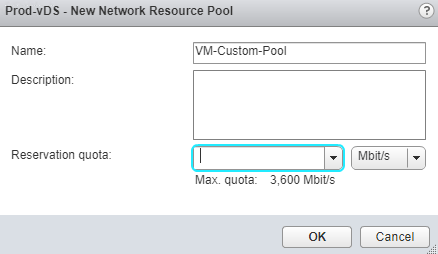

Set the Reservation quota afterward:

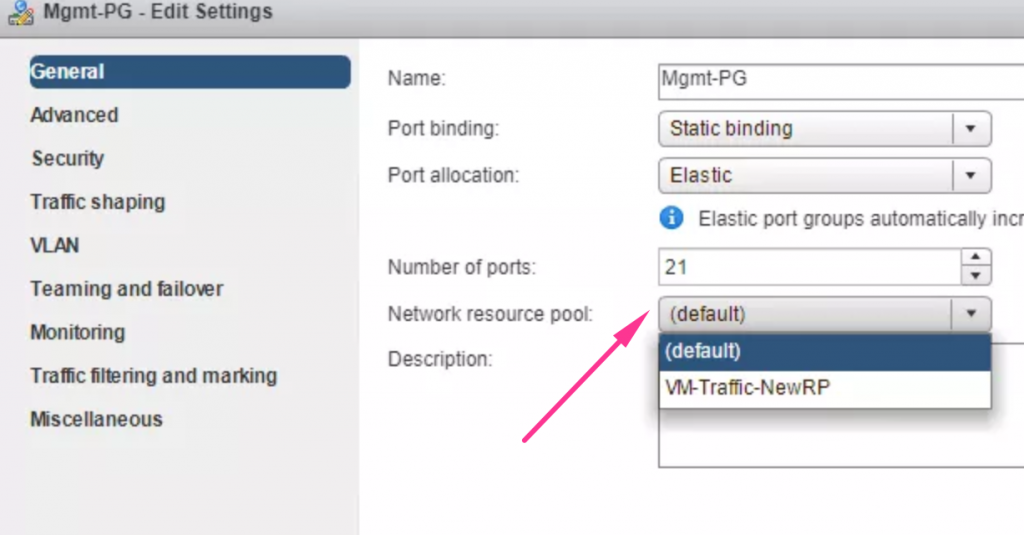

Next, specify this pool when configuring the distributed port group on the vDS:

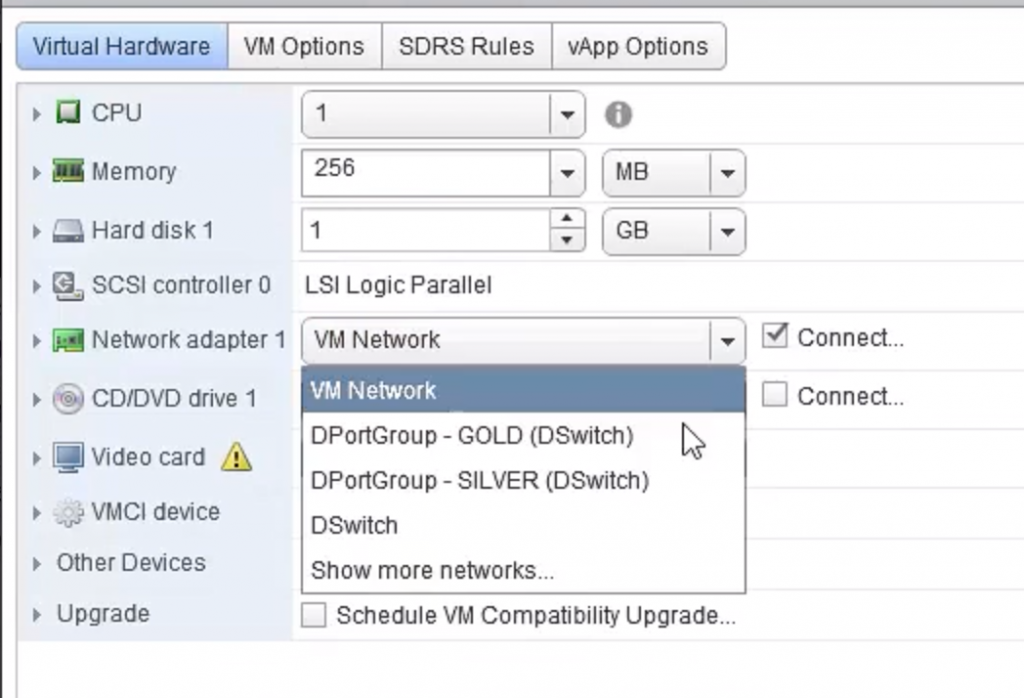

Now, let’s connect the virtual machines that are expected to get this reservation:

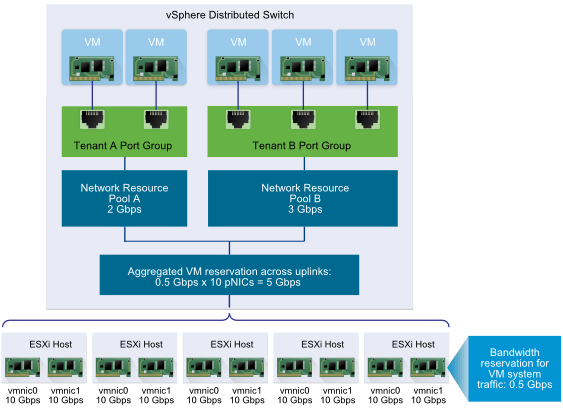

If you reserve 1 Mb/s and the ESXi host has 5 NICs, the distributed port group will get 5 Mb/s of bandwidth.

To see how the maximum network bandwidth is estimated, take a look at the image below:
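The arithmetic behind that figure can be written down as a tiny helper. This is only an illustration of the multiplication described in this article; the function name `aggregate_reservation_mbps` is made up for the example.

```python
def aggregate_reservation_mbps(per_adapter_mbps: float, adapter_count: int) -> float:
    """Total reserved bandwidth when a per-adapter reservation is applied
    to every physical adapter (NIC) of the host."""
    if adapter_count < 1:
        raise ValueError("adapter_count must be at least 1")
    return per_adapter_mbps * adapter_count
```

`aggregate_reservation_mbps(1, 5)` returns 5, the value from the example with 1 Mb/s reserved on a host with 5 NICs.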

After creating a new pool in the Network Resource Pools tab, you can access the list of VMs in it. Just click the Virtual Machines tab:

Conclusion

To wrap up, I'd like to point out that vNIC limits are bounded by the traffic shaping limits of the distributed port groups on the vDS. You can set a bandwidth limit of 200 Mb/s for a vNIC while the bandwidth limit of the whole vDS port group is around 100 Mb/s, but the effective limit for that vNIC will be 100 Mb/s, not 200 Mb/s.
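In other words, the effective limit is the smaller of the two values. A minimal sketch of that rule, using a hypothetical helper `effective_limit_mbps` and treating `None` as "no limit configured" (an assumption made for this example):

```python
from typing import Optional


def effective_limit_mbps(
    vnic_limit_mbps: Optional[float],
    port_group_limit_mbps: Optional[float],
) -> Optional[float]:
    """Return the limit that actually applies to a vNIC.

    The stricter (lower) of the vNIC limit and the port group traffic
    shaping limit wins; None means that no limit is configured.
    """
    limits = [x for x in (vnic_limit_mbps, port_group_limit_mbps) if x is not None]
    return min(limits) if limits else None
```

For the case described above, `effective_limit_mbps(200, 100)` returns 100.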