Introduction

It is no secret that with every major vSphere release, VMware finds room for improvement in vMotion technology. The most recent one, VMware vSphere 7 Update 1, is no exception. You'll see for yourself that a lot has been done to make vMotion faster and to support live migration of even the largest virtual machines.

Another important novelty is the introduction of Enhanced vMotion Compatibility (EVC) for GPUs in vSphere. Why does it matter? Because vMotion is now well suited to clusters whose servers run GPU-based workloads (graphics software, ML/DL workloads, etc.).

What’s New

Let’s take a look.

1. Completely rearchitected memory pre-copy technology and improved performance

In earlier versions, vMotion suffered a considerable performance drop when working with so-called Monster VMs (more than 64 vCPUs and 512 GB of memory). If you want every detail, you can look it up here. For now, let's have a brief look.

First things first: VMware has completely rewritten the vMotion memory pre-copy technology around a new page-tracing mechanism.

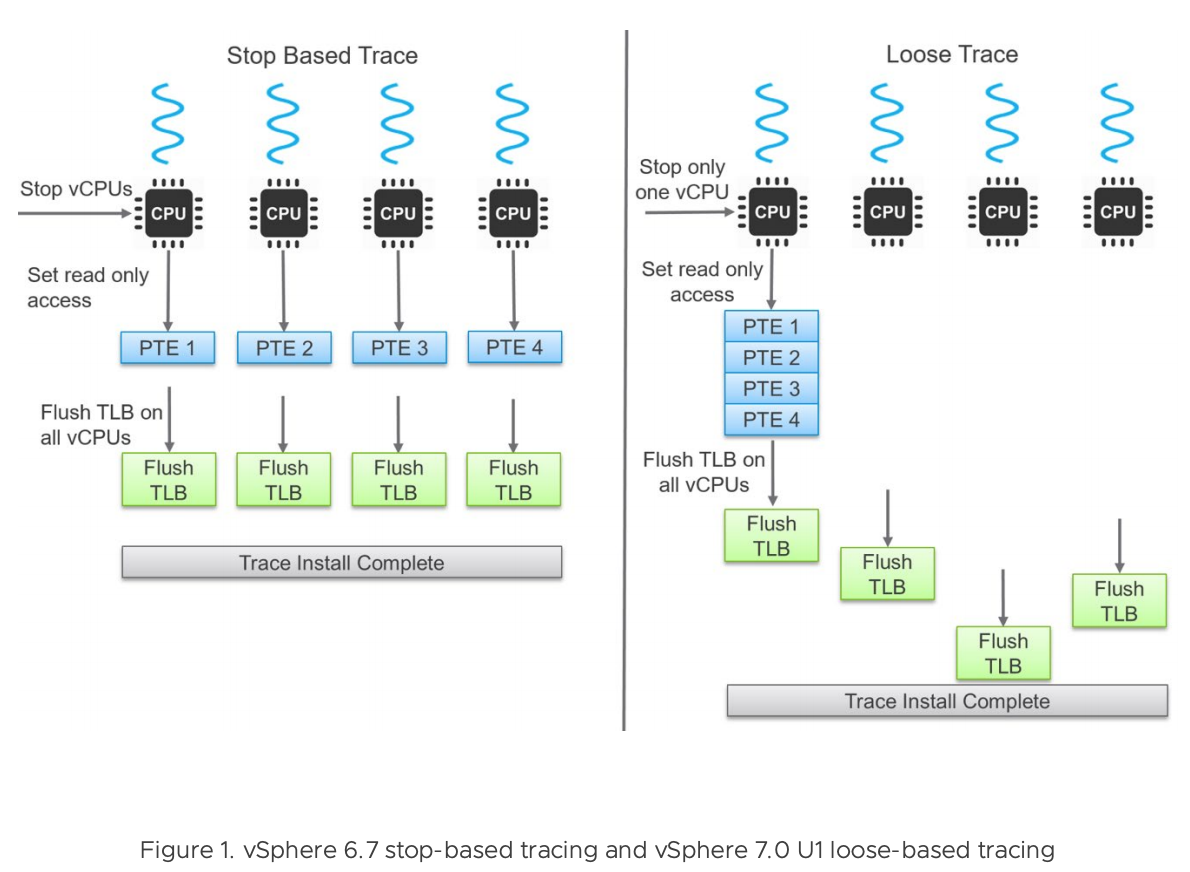

For context, let's dial it back a little. How does installing traces work in vSphere 6.7? All vCPUs stop executing guest instructions, and the work of setting up the traces is distributed across all the vCPUs assigned to the VM. This is called the stop-based trace approach. In vSphere 7 U1, only one vCPU has to be stopped, and this is called the loose page-trace approach.

If an application attempts to write to a memory page protected by a trace, a page fault occurs, and the page is added to the list of pages that need to be resynchronized with the destination host. At the same time, each vCPU flushes its own translation lookaside buffer (TLB), and the work is complete once the last vCPU has finished.

This was never a problem with a small number of vCPUs, because installing traces did not take long. However, with a very large virtual machine with 64 vCPUs, in some cases the migration might not happen at all.
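The trace-and-resend cycle described above can be sketched as a toy model. This is only an illustration under stated assumptions, not VMware's implementation: the function name `precopy`, its parameters, and the uniformly random guest writes are all hypothetical.

```python
import random


def precopy(num_pages, writes_per_round, max_rounds=10,
            switchover_threshold=8, seed=0):
    """Toy pre-copy loop (hypothetical model, not vMotion code).

    Returns (pages sent in each round, pages still dirty at the end).
    """
    rng = random.Random(seed)
    to_send = set(range(num_pages))  # the first pass copies every page
    sent_per_round = []
    for _ in range(max_rounds):
        # "Install traces": from now on, any guest write is recorded.
        dirty = set()
        sent_per_round.append(len(to_send))
        # While the pending pages are copied, the guest keeps writing.
        # A write to a traced page faults, and the page is marked dirty.
        for _ in range(writes_per_round):
            dirty.add(rng.randrange(num_pages))
        to_send = dirty  # dirty pages must be sent again
        if len(to_send) <= switchover_threshold:
            break  # few enough pages left to switch over
    return sent_per_round, to_send


if __name__ == "__main__":
    rounds, remaining = precopy(num_pages=1000, writes_per_round=50)
    print("pages sent per round:", rounds)
    print("pages left for switchover:", len(remaining))
```

In this model, a guest that dirties pages faster than the threshold allows never converges on its own, which is why the cost of every trace-install round matters so much for large VMs.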

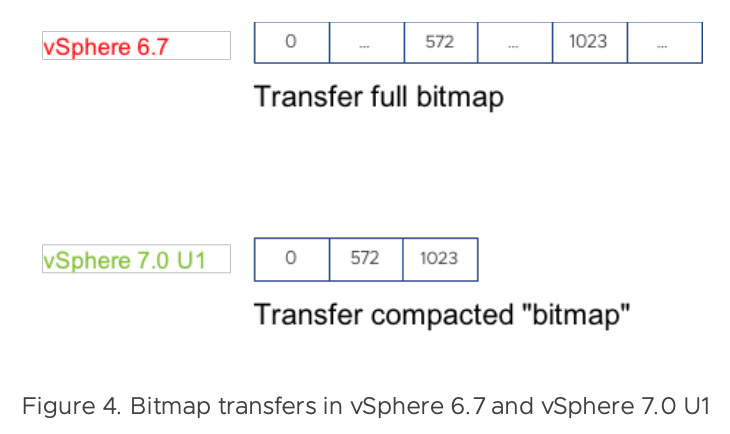

Also, vSphere 7.0 U1 transfers a “compacted” version of the bitmap instead of the whole bitmap, which noticeably reduces the time required to switch over to the VM on the destination host:

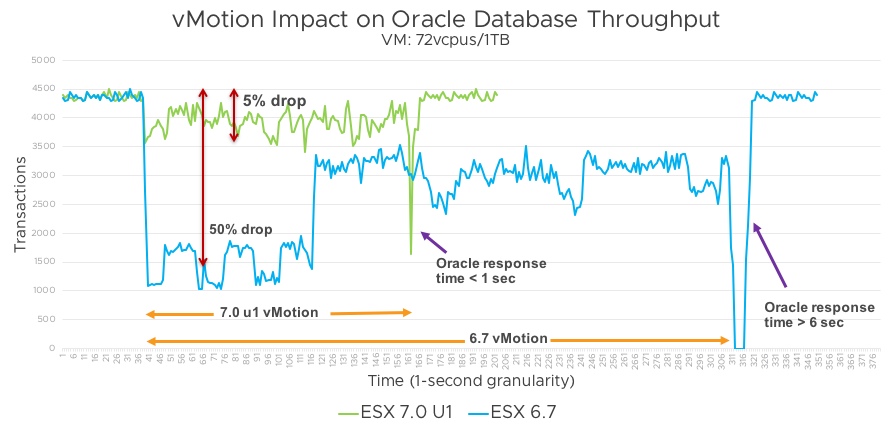
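To get a feel for why a compacted bitmap is cheaper to transfer, here is a minimal sketch. The encoding is an assumption made purely for illustration (only non-zero 64-byte chunks are kept, each tagged with its index); the actual format used by vMotion is not described here, and all function names are hypothetical.

```python
def full_bitmap(num_pages, dirty_pages):
    """One bit per page; a set bit means the page still has to be sent."""
    bitmap = bytearray((num_pages + 7) // 8)
    for page in dirty_pages:
        bitmap[page // 8] |= 1 << (page % 8)
    return bytes(bitmap)


def compact_bitmap(bitmap, chunk_size=64):
    """Keep only the chunks that contain at least one set bit."""
    chunks = []
    for offset in range(0, len(bitmap), chunk_size):
        chunk = bitmap[offset:offset + chunk_size]
        if any(chunk):
            chunks.append((offset // chunk_size, chunk))
    return chunks


def expand_bitmap(chunks, total_len, chunk_size=64):
    """Rebuild the full bitmap on the receiving side."""
    out = bytearray(total_len)
    for index, chunk in chunks:
        start = index * chunk_size
        out[start:start + len(chunk)] = chunk
    return bytes(out)


if __name__ == "__main__":
    bitmap = full_bitmap(1_000_000, {3, 4096, 999_999})
    chunks = compact_bitmap(bitmap)
    payload = sum(len(chunk) for _, chunk in chunks)
    print("full bitmap bytes:", len(bitmap))
    print("compacted payload bytes:", payload)
```

The sparser the bitmap is at switchover time, the larger the saving from sending only the non-empty parts.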

To demonstrate the benefits of the new technology, VMware compared the impact of vMotion on an Oracle DB server running inside a 72-vCPU/1 TB VM. Below, you can see the number of transactions per second before, during, and after vMotion (HammerDB test):

Conclusions:

- The overall migration time has more than halved (from 271 seconds to 120 seconds);

- The time required for installing traces has decreased from 86 seconds to 7.5 seconds (roughly 11 times less);

- The overall throughput during migration dropped by 50% in vSphere 6.7, but by only 5% in vSphere 7.0 Update 1;

- Oracle response time dropped to less than one second, while it used to be at least 6 seconds.

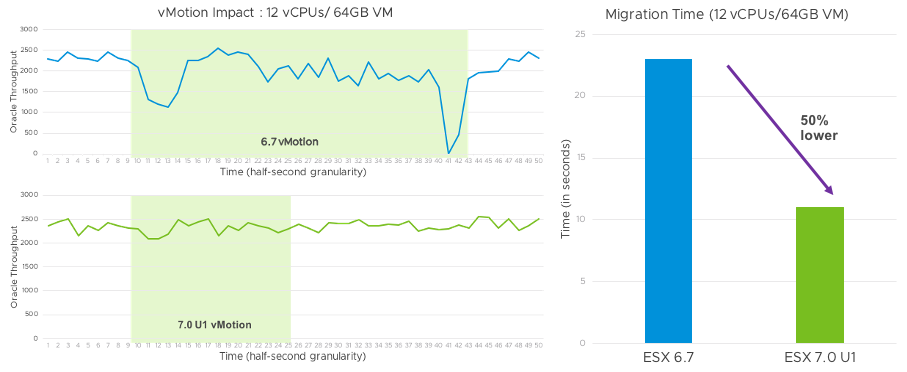

Another test compared the impact of vMotion on an Oracle DB server running inside a 12-vCPU/64 GB VM on both vSphere 6.7 and vSphere 7.0 U1. Below, you can see the number of transactions per second before, during, and after vMotion (HammerDB test):

Conclusions:

- The overall migration time has shortened from 23 seconds in vSphere 6.7 to 11 seconds in vSphere 7.0 U1 (roughly twice as fast);

- The time required for installing traces has fallen from 2.8 seconds to 0.4 seconds (seven times faster);

- The throughput has increased by 45% (from 2403 transactions per second to 3480).
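The ratios quoted in both tests are easy to double-check. The short script below only redoes the arithmetic on the figures listed above; the helper names `speedup` and `percent_change` are made up for this example.

```python
def speedup(before, after):
    """How many times smaller the new value is than the old one."""
    return before / after


def percent_change(before, after):
    """Relative change from the old value to the new one, in percent."""
    return (after - before) / before * 100


# (before, after) pairs in seconds, taken from the two tests above
timings = {
    "72 vCPU / 1 TB: migration time": (271, 120),
    "72 vCPU / 1 TB: trace install time": (86, 7.5),
    "12 vCPU / 64 GB: migration time": (23, 11),
    "12 vCPU / 64 GB: trace install time": (2.8, 0.4),
}

if __name__ == "__main__":
    for name, (before, after) in timings.items():
        print(f"{name}: {speedup(before, after):.2f}x faster")
    change = percent_change(2403, 3480)
    print(f"12 vCPU / 64 GB: throughput change {change:.1f}%")
```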

If you’re looking for even more facts, you can find them here.

2. VMware Enhanced vMotion Compatibility (EVC) for GPU in VMware vSphere 7 Update 1

Most VMware vSphere admins know full well that Enhanced vMotion Compatibility (EVC) mode lets you combine hosts with different CPUs under a single baseline CPU feature set. The technology exists to allow unrestricted vMotion migration between ESXi hosts with different hardware. It achieves this by masking certain CPU features reported through CPUID.
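The baseline idea can be sketched as a set intersection. This is a simplified model: real EVC modes are predefined per processor generation rather than computed on the fly, and the host names and the helpers `evc_baseline` and `masked_features` are hypothetical.

```python
def evc_baseline(hosts):
    """Features that every host in the cluster can expose to its VMs."""
    feature_sets = [set(features) for features in hosts.values()]
    return set.intersection(*feature_sets)


def masked_features(host_features, baseline):
    """Features a host has to hide so that VMs see only the baseline."""
    return set(host_features) - baseline


# Hypothetical cluster: an older and a newer host
hosts = {
    "esx-old": {"sse4.2", "avx"},
    "esx-new": {"sse4.2", "avx", "avx2", "avx512f"},
}

if __name__ == "__main__":
    baseline = evc_baseline(hosts)
    print("baseline:", sorted(baseline))
    for name, features in hosts.items():
        print(name, "masks:", sorted(masked_features(features, baseline)))
```

Because every VM sees only the baseline features, it can move to any host in the cluster without encountering a missing instruction set.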

Many modern applications (for example, machine learning and deep learning) tend to use GPU (graphics processing unit) resources, since the GPU architecture is well suited to such tasks.

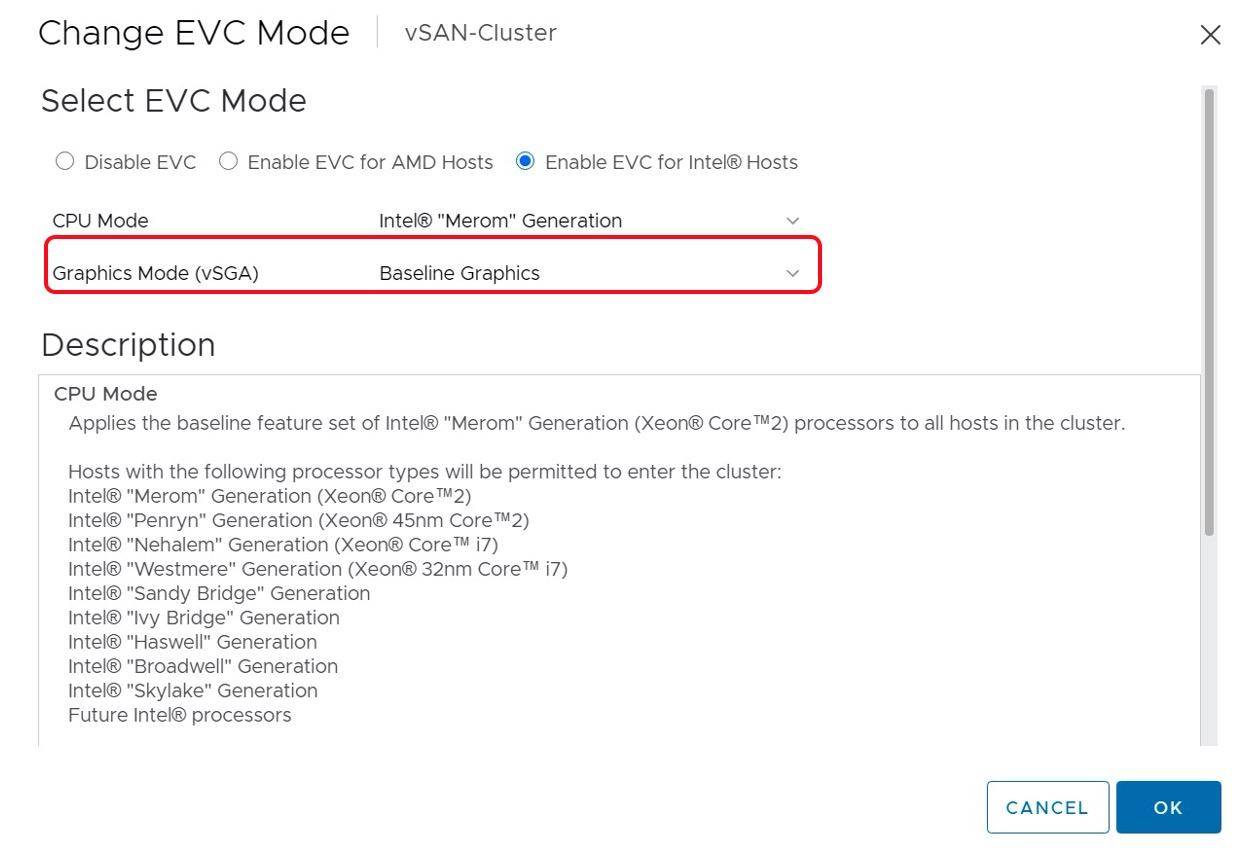

Also, in VMware vSphere 7, along with the respective VM hardware version, vSGA mode (Virtual Shared Graphics Acceleration, which allows multiple vSphere virtual machines to share hardware GPUs) got a performance boost. That's why VMware vSphere 7 Update 1 brought Enhanced vMotion Compatibility mode for GPUs (part of EVC in the vSphere Client).

The VM Features graphics mode covers support for 3D applications at the D3D 10.0 / OpenGL 3.3, D3D 10.1 / OpenGL 3.3, and D3D 11.0 / OpenGL 4.1 library levels. Unfortunately, the baseline feature set is currently available only for D3D 10.1 / OpenGL 3.3 (D3D 11.0 and OpenGL 4.1 will be supported in later releases).

When an ESXi host joins a cluster with EVC for Graphics enabled, vCenter Server verifies whether the host supports the respective library versions. You can add a mix of ESXi 6.5, 6.7, and 7.0 hosts, thanks to their D3D 10.0 and OpenGL 3.3 support.

Just as with the usual EVC, users can set EVC for Graphics at the individual VM level. In this case, before starting a virtual machine on an ESXi host, vCenter Server verifies whether the host supports the respective library versions. This option will come in handy if you ever need to migrate a VM between data centers or to the cloud.

If EVC for Graphics is active, the same checks will take place before migration as well.
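The compatibility check performed at power-on and before migration can be pictured roughly as follows. This is a hedged sketch, not vCenter Server logic: the `GraphicsFeatures` class, the `host_supports` helper, and the two hosts are hypothetical, and library levels are modelled as simple version tuples.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GraphicsFeatures:
    d3d: tuple     # Direct3D version, e.g. (10, 1)
    opengl: tuple  # OpenGL version, e.g. (3, 3)


# Baseline feature set named in the article: D3D 10.1 / OpenGL 3.3
BASELINE = GraphicsFeatures(d3d=(10, 1), opengl=(3, 3))


def host_supports(host, required=BASELINE):
    """True if the host offers at least the required library versions."""
    return host.d3d >= required.d3d and host.opengl >= required.opengl


# Hypothetical hosts with different graphics capabilities
host_a = GraphicsFeatures(d3d=(11, 0), opengl=(4, 1))
host_b = GraphicsFeatures(d3d=(10, 1), opengl=(3, 3))

if __name__ == "__main__":
    vm_requirement = GraphicsFeatures(d3d=(11, 0), opengl=(4, 1))
    print("host_a meets baseline:", host_supports(host_a))
    print("host_b meets baseline:", host_supports(host_b))
    print("VM can run on host_b:", host_supports(host_b, vm_requirement))
```

A VM pinned to the baseline can land on either host, while a VM that needs a higher feature level is limited to hosts that provide it.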

To Sum Up

Overall, vMotion keeps getting better with every new release, and I, for one, am glad that the vendor does not forget about it. It doesn't really matter that, right now, the only virtual machines that will benefit from these improvements are the notorious Monster VMs, because the rearchitecting itself will pave the way for many future updates. Moreover, EVC for GPU will have its moment to shine too: lots of companies are buying more and more hardware, but it is not always possible to keep it all at the same level (GPU cards become obsolete fast).