Introduction

If you read my blog post on Switch Embedded Teaming with RDMA (for SMB Direct), you’ll notice that I set -IeeePriorityTag to “On” on the vNICs that use DCB for QoS. This requires some explanation.

When you configure a Switch Embedded Teaming (SET) vSwitch and define one or more management OS vNICs on which you enable RDMA, you will see that the SMB Direct traffic gets its priority tag set correctly. This happens no matter what you set the -IeeePriorityTag option to: On or Off, it doesn’t make a difference. It works out of the box.

When you have other traffic that needs to be tagged, say backup traffic over TCP/IP, you’ll notice different behavior. You don’t necessarily want to make that traffic lossless via PFC, but you do need the priority tag set to configure ETS (minimum bandwidth) for it. No matter how correctly you have configured your Windows DCB settings, that traffic doesn’t seem to get tagged.

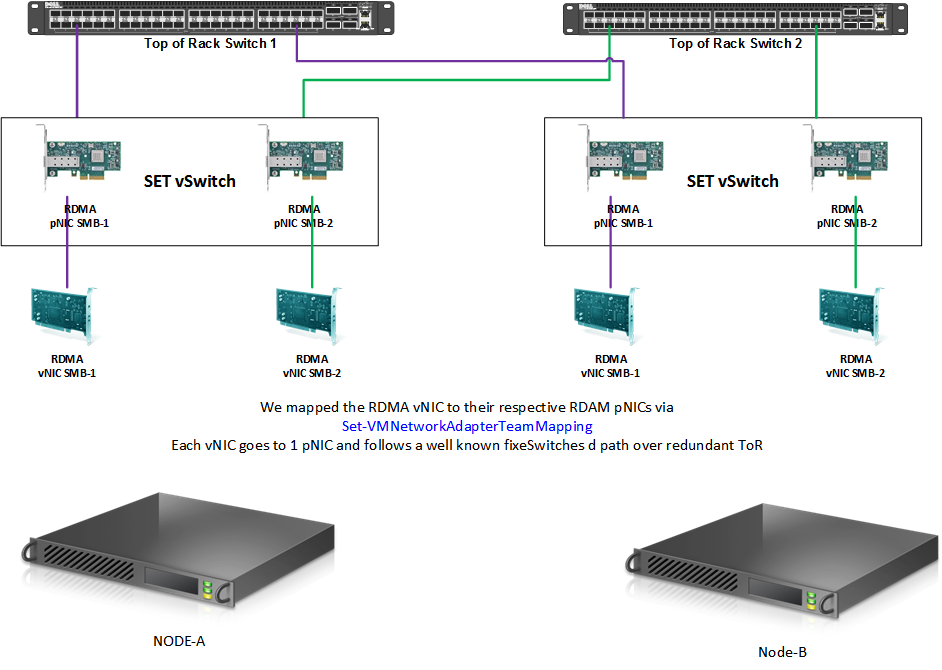

The lab setup

To explain what’s going on, we’ll use a small lab setup to demonstrate a couple of things. This is our 2-node Windows Server 2016 lab cluster with a converged SET vSwitch. You can read more about such a setup in my blog post.

The DCB configuration is as follows on Node-A:

```powershell
# Disable DCBX so the host does not accept DCB settings from the switch
Set-NetQosDcbxSetting -Willing $false

# Create QoS policies and tag each type of traffic with the relevant priority
New-NetQosPolicy "SMB" -NetDirectPortMatchCondition 445 -PriorityValue8021Action 4
New-NetQosPolicy "DEFAULT" -Default -PriorityValue8021Action 0
New-NetQosPolicy "TCP" -IPProtocolMatchCondition TCP -PriorityValue8021Action 1
New-NetQosPolicy "UDP" -IPProtocolMatchCondition UDP -PriorityValue8021Action 1

# Note that in a SET environment with RDMA-enabled vNICs the VLAN tagging happens on the vNICs
Set-VMNetworkAdapterVlan -ManagementOS -VMNetworkAdapterName SMB-1 -Access -VlanId 110
Set-VMNetworkAdapterVlan -ManagementOS -VMNetworkAdapterName SMB-2 -Access -VlanId 110

# Enable Priority Flow Control (PFC) on a specific priority. Disable it for the others
Enable-NetQosFlowControl -Priority 4
Disable-NetQosFlowControl -Priority 0,1,2,3,5,6,7

# Enable QoS on the relevant interfaces
Enable-NetAdapterQos -InterfaceAlias "NODE-A-S4P1-SW12P05-SMB1"
Enable-NetAdapterQos -InterfaceAlias "NODE-A-S4P2-SW13P05-SMB2"

# Optionally, reserve minimum bandwidth with ETS: 90% for SMB (priority 4), 9% for priority 1
New-NetQosTrafficClass "SMB" -Priority 4 -Bandwidth 90 -Algorithm ETS
New-NetQosTrafficClass "Other" -Priority 1 -Bandwidth 9 -Algorithm ETS
```

Don’t forget that this needs to be done on Node-B as well; I hope that is evident.
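To double-check the result on each node, the matching Get- cmdlets of the NetQos and NetAdapter modules show the current state. This is a minimal sketch that reuses Node-A’s interface aliases from the configuration above; on Node-B you would substitute that node’s own adapter names.

```powershell
# Sketch: review the DCB/QoS state on the local node (run it on Node-A and Node-B).

# Willing should be False, so the host keeps its own DCB settings.
Get-NetQosDcbxSetting

# The SMB, DEFAULT, TCP and UDP policies and the 802.1p priority each one applies.
Get-NetQosPolicy

# PFC should show as enabled for priority 4 only.
Get-NetQosFlowControl

# The ETS traffic classes; the [Default] class receives the remaining bandwidth.
Get-NetQosTrafficClass

# The QoS/DCB state as reported by the physical adapters (Node-A names shown).
Get-NetAdapterQos -Name "NODE-A-S4P1-SW12P05-SMB1", "NODE-A-S4P2-SW13P05-SMB2"
```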

What’s going on?

So, what’s going on here? Well, the traffic on the host actually does get tagged as configured by DCB. It’s when the traffic reaches the vSwitch that things become interesting.

Let’s start with the RDMA vNICs where we have not set -IeeePriorityTag to “On”. SMB Direct traffic on the vNIC bypasses the vSwitch by design, so it works as you would expect, with or without -IeeePriorityTag set to “On”.

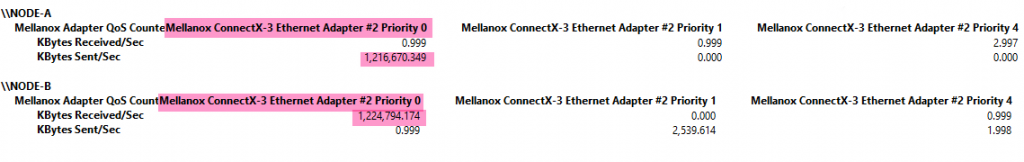

Things are different for non-SMB Direct traffic. If you do not set -IeeePriorityTag to “On”, the priority value is reset to 0. Note that this is not untagged traffic: the VLAN tag is still there, but its priority is set to 0. Below you can see TCP/IP traffic over a tagged vNIC that gets its priority set to 1, but when it hits the vSwitch it’s reset to 0.

If you do set -IeeePriorityTag to “On”, the priority-tagged packets pass through unchanged.

To do so we run:

```powershell
# Get the two RDMA-enabled management OS vNICs
$NicSMB1 = Get-VMNetworkAdapter -Name SMB-1 -ManagementOS
$NicSMB2 = Get-VMNetworkAdapter -Name SMB-2 -ManagementOS

# Let the priority tags on non-SMB Direct traffic pass through the vSwitch unchanged
Set-VMNetworkAdapter -VMNetworkAdapter $NicSMB1 -IeeePriorityTag On
Set-VMNetworkAdapter -VMNetworkAdapter $NicSMB2 -IeeePriorityTag On

# Verify the setting
Get-VMNetworkAdapter -ManagementOS -Name "SMB*" | Format-List Name, SwitchName, IeeePriorityTag, Status
```

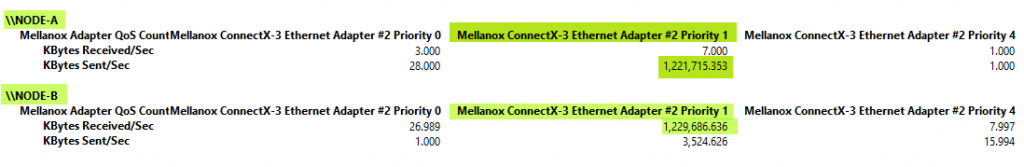

Do remember to do this on all hosts so it works in all directions. Below you can see traffic sent from Node-A, now with its priority tag of 1 intact instead of being reset to 0.

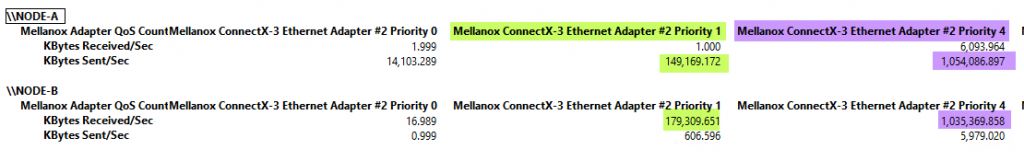
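Because the setting has to be in place on every host, you may prefer to apply and check it from one place. This is a minimal sketch, assuming the cluster nodes are reachable under the host names Node-A and Node-B, that PowerShell remoting (WinRM) is enabled on them, and that both nodes use the vNIC names SMB-1 and SMB-2 from this lab.

```powershell
# Sketch: enable IeeePriorityTag on the SMB vNICs of every node, then report it.
# Assumption: "Node-A" and "Node-B" resolve and accept PowerShell remoting.
$Nodes = "Node-A", "Node-B"

Invoke-Command -ComputerName $Nodes -ScriptBlock {
    # Set-VMNetworkAdapter takes a single vNIC name, so loop over both.
    foreach ($VNicName in "SMB-1", "SMB-2") {
        Set-VMNetworkAdapter -ManagementOS -Name $VNicName -IeeePriorityTag On
    }

    # Return the current state so it can be checked per node.
    Get-VMNetworkAdapter -ManagementOS -Name "SMB*" |
        Select-Object Name, SwitchName, IeeePriorityTag
} | Format-Table PSComputerName, Name, SwitchName, IeeePriorityTag
```

Invoke-Command adds the PSComputerName property to every returned object, which makes it easy to spot a node where the setting is still Off.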

When we now run priority-tagged TCP/IP traffic and SMB Direct traffic from Node-A to Node-B, we see that both get their minimum bandwidth as defined by the DCB/ETS settings: 9% for TCP/IP and 90% for SMB Direct (the remaining 1% goes to the default class).
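To put those percentages in perspective, here is the arithmetic for a hypothetical 10 Gbps port (the link speed is an assumption for illustration, not taken from the lab). Keep in mind that ETS shares are minimum guarantees that matter when the link is congested; a class can use more bandwidth when the other classes leave it unused.

$$
\begin{aligned}
\text{SMB Direct (priority 4):}\quad & 0.90 \times 10\ \text{Gbps} = 9\ \text{Gbps} \\
\text{TCP/UDP (priority 1):}\quad & 0.09 \times 10\ \text{Gbps} = 0.9\ \text{Gbps} \\
\text{Default class (priorities 0, 2, 3, 5, 6, 7):}\quad & 0.01 \times 10\ \text{Gbps} = 0.1\ \text{Gbps} \\
\text{Total:}\quad & 9 + 0.9 + 0.1 = 10\ \text{Gbps}
\end{aligned}
$$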

Conclusion

Unless you really only care about SMB Direct traffic being tagged with a QoS priority, you’ll need to enable IeeePriorityTag on your vNICs. If you don’t, the priority tag for non-SMB Direct traffic will be reset to 0, which means you cannot configure PFC and ETS for that traffic. This is something to keep in mind.

The fact that RDMA traffic on a vNIC bypasses the vSwitch by design, and as such doesn’t have its priority tag reset to 0, confused me initially: I figured the DCB priority tag would not be removed for any type of traffic. That was before I found out that I did indeed still need to turn on IeeePriorityTag on those vNICs. In reality, this is no different from how we deal with classification and tagging for QoS in the management OS and in trusted virtual machines when no hardware-based QoS (NICs and switches using DCB with PFC/ETS) is involved.