Do persistent memory disks matter to you?

Windows Server 2019 with the Hyper-V role brings us support for persistent memory disks inside a virtual machine. That’s great news, right? But any time we get performance that is way better than what we normally use, there is doubt about its real-world usefulness. Do persistent memory disks matter to you?

There is nothing new here. This is a question that always pops up when a new superfast and advanced technology becomes available for the first time. As we didn’t have access to it before, we question why we should buy it now. Remember storage tiering? It works and does help when leveraged correctly. The first time I used that technology was just like when I implemented 10Gbps all over a stack. People questioned the validity of it all at first, but they were very happy to have it once they experienced how it helped them. I made this happen with great value-for-money designs, and today I have moved beyond those solutions as they have been superseded by other approaches. I now use All Flash Arrays and 25Gbps or more. I also leverage NVMe storage where it makes sense. And yes, I also intend to leverage persistent memory where it helps to solve bottlenecks.

Persistent memory disks matter when they deliver value in an efficient and cost-effective manner. Maybe your vendors will do that for you in their storage offerings. In certain cases, you’ll leverage it yourself in your virtual machines. Why? Because you need the very high speed and the very low latency. Still, why do we need or want to map it into a virtual machine? Why not leverage persistent memory just on the storage solution? Well, not all storage solutions integrate persistent memory in their offerings. Offering it via the hypervisor host makes it available even when the “normal storage” does not have it.

Secondly, for workloads that can leverage DAX, this delivers that capability inside a virtual machine. There are two ways to consume persistent memory storage, block mode and Direct Access (DAX):

Block Mode

- No code change, fast I/O device (4K sectors), so compatible with your existing applications!

- Still has the software overhead of the I/O path.

- Still a huge improvement over NVMe.

Direct Access

- Achieve full performance potential of NVDIMM using memory-mapped files on Direct Access volumes (NTFS-DAX).

- There is no I/O path overhead, no queueing, no async reads/writes – the gloves come off, so to speak.

- Requires applications that can leverage DAX (Hyper-V, SQL Server).
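To make the Direct Access idea a bit more concrete, here is a minimal sketch of memory-mapped file access from PowerShell, using the .NET MemoryMappedFile class. The file name P:\dax-demo.bin and the 4KB size are assumptions for illustration only. The same code runs on any volume. The point is that an application written this way reads and writes through a mapped view instead of issuing read/write I/O calls, which is the access pattern a DAX volume is meant to serve.

```powershell
# Hypothetical demo file; P: is assumed to be an NTFS DAX volume.
$path = 'P:\dax-demo.bin'
$size = 4KB

# Map the file into the process address space. [NullString]::Value passes a
# real null map name (PowerShell would otherwise convert $null to '').
$mmf = [System.IO.MemoryMappedFiles.MemoryMappedFile]::CreateFromFile(
    $path, [System.IO.FileMode]::OpenOrCreate, [NullString]::Value, $size)
$view = $mmf.CreateViewAccessor(0, $size)
try {
    $view.Write(0, [byte]42)      # a store into the mapped view
    $value = $view.ReadByte(0)    # a load from the mapped view
    $view.Flush()                 # ask for the change to be written back
    "Read back: $value"
}
finally {
    # Always release the view and the mapping.
    $view.Dispose()
    $mmf.Dispose()
}
```

This is only an illustration of the programming model, not a benchmark and not how Hyper-V or SQL Server implement their DAX support.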

Using persistent memory in a Hyper-V virtual machine

In a previous article “Configure NVDIMM-N on a DELL PowerEdge R740 with Windows Server 2019”, I showed you how to set up persistent memory for use on Windows Server 2019. In this article, we’ll consume that persistent memory disk in a virtual machine running on Hyper-V.

Prepare a persistent memory disk for use by the Hyper-V host

We’ll pick up where we left off in that article. We are going to use the two logical persistent memory disks we created on the 6 interleaved NVDIMM-N modules in our Hyper-V host. That gave us 2 * 48GB of usable persistent memory disk space.

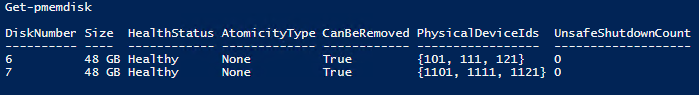

First of all, we look at our available physical persistent memory disks via Get-PmemDisk. Note that the multiple PhysicalDeviceIDs give away that these leverage interleaved NVDIMMs.

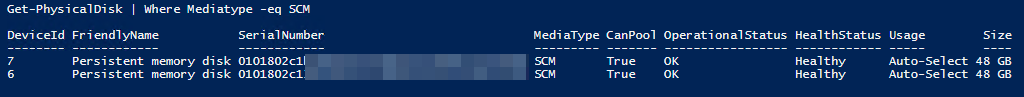

We can grab those via Get-PhysicalDisk as well, filtering on MediaType SCM (Storage Class Memory):

    Get-PhysicalDisk | Where MediaType -eq SCM

We can also use

|

1 |

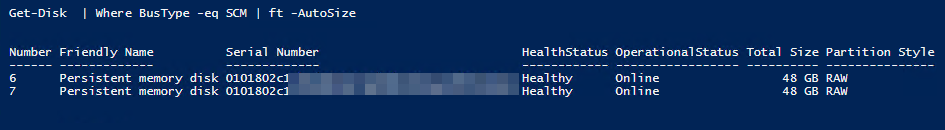

Get-Disk | Where Bustype -eq SCM |

They are already online so we just need to initialize and format them.

We initialize it as a GPT disk. This is the default, but I like to be clear about it.

    Initialize-Disk 6 -PartitionStyle GPT

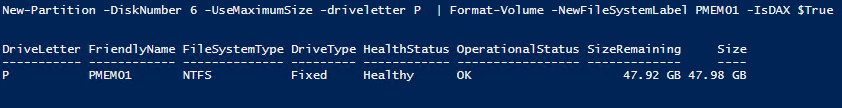

We format it with NTFS DAX.

    New-Partition -DiskNumber 6 -UseMaximumSize -DriveLetter P | Format-Volume -NewFileSystemLabel PMEM01 -IsDAX $True

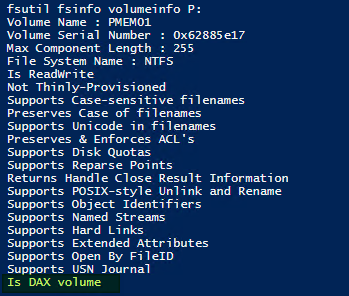

We can verify that it is DAX with fsutil fsinfo volumeinfo P:
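As a small sketch of that check from a PowerShell prompt, assuming the drive letter P used above: the first command filters the fsutil output down to the DAX-related line (the exact wording of that line may differ between builds, so the filter only matches on "DAX"), and the second reads the IsDAX property of the partition object.

```powershell
# Filter the fsutil output down to the DAX-related line(s).
fsutil fsinfo volumeinfo P: | Select-String -Pattern 'DAX'

# The partition object also exposes an IsDAX property.
Get-Partition -DriveLetter P | Select-Object DriveLetter, IsDAX
```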

Consume the persistent memory disk inside a Hyper-V virtual machine

First of all, we just use a normal virtual machine with a VHDX OS disk and potentially one or more virtual data disks (VHDX). Persistent memory is supported with generation 2 virtual machines only. But when top performance is of concern you don’t run generation 1 VMs, do you? We have given the VM 20GB of memory and 48 vCPUs as the persistent memory disk will put a bit of stress on the processors.

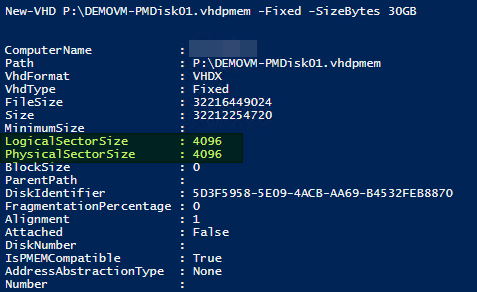

We can now create a new persistent memory virtual disk (file extension “.vhdpmem”) on our persistent memory DAX volume on the Hyper-V host. For this, we use the New-VHD cmdlet to create a persistent memory device for a VM. Some things to take note of:

- The .vhdpmem extension specifies the virtual disk is a persistent memory disk

- You have to create it on an NTFS DAX volume

- For now, only fixed size persistent memory virtual disks are supported

    New-VHD P:\DEMOVM-PMDisk01.vhdpmem -Fixed -SizeBytes 30GB

As you can see highlighted in green, persistent memory is a 4K disk game. This is PowerShell only (for now?). But I am guessing that when you are interested in persistent memory, you’ll be comfortable with some PowerShell cmdlets.

In Windows Server 2019 with the Hyper-V role we have a new cmdlet, Add-VMPmemController, to add a persistent memory controller to a VM. We use it to create a persistent memory controller on our generation 2 virtual machine. To do so, the virtual machine has to be shut down or the command below will throw an error.

    Add-VMPmemController PMEMVM
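If you script this step, a small guard around the command above avoids the error on a running VM. This is a sketch under the assumption that the VM is named PMEMVM as in this article and that a guest shutdown is acceptable at this point. Stop-VM asks the guest to shut down by default and may prompt if it cannot do so cleanly.

```powershell
$vmName = 'PMEMVM'

# The controller can only be added while the VM is off.
$vm = Get-VM -Name $vmName
if ($vm.State -ne 'Off') {
    Stop-VM -Name $vmName    # requests a guest shutdown by default
}

# Add the persistent memory controller to the (now stopped) VM.
Add-VMPmemController -VMName $vmName
```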

We attach the .vhdpmem disk we created earlier to the virtual machine.

    Add-VMHardDiskDrive -VMName PMEMVM -ControllerType PMEM -ControllerLocation 1 -Path P:\DEMOVM-PMDisk01.vhdpmem

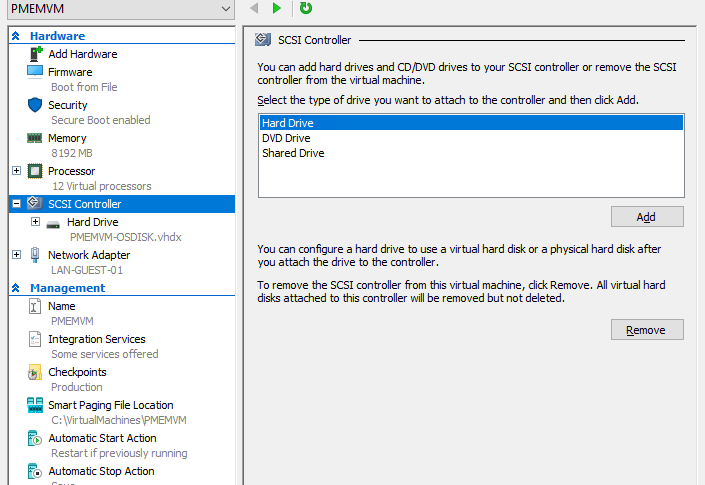

Do note that neither the VMPmemController nor the persistent memory disk is visible in the GUI at all.
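Since the GUI does not show them, PowerShell is the place to confirm the result. The following is a minimal sketch, assuming the VM name PMEMVM used in this article. It lists the persistent memory controller and then filters the VM's disks down to the ones attached to a PMEM controller.

```powershell
# List the persistent memory controller(s) on the VM.
Get-VMPmemController -VMName PMEMVM

# List the attached disks and keep only those on a PMEM controller.
Get-VMHardDiskDrive -VMName PMEMVM |
    Where-Object ControllerType -eq 'PMEM' |
    Select-Object VMName, ControllerType, ControllerLocation, Path
```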

Inside the VM you will now see this newly attached persistent memory disk and you’ll be able to use it as such. The VM is aware it is a persistent memory device. The guest operating system can use the device as a block or DAX volume, just like a physical host, with the same benefits and drawbacks. Which one you’ll use depends on what your application supports and what you need. For example, you can have a SQL Server VM put its log files on a virtual DAX volume and/or its TempDB on a block volume.

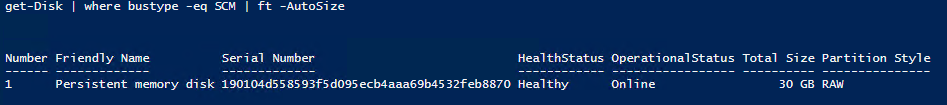

When we run

    Get-Disk | Where BusType -eq SCM

inside the PMEMVM we see our 30GB .vhdpmem disk.

It’s online so we initialize it as a GPT disk.

    Initialize-Disk 1 -PartitionStyle GPT

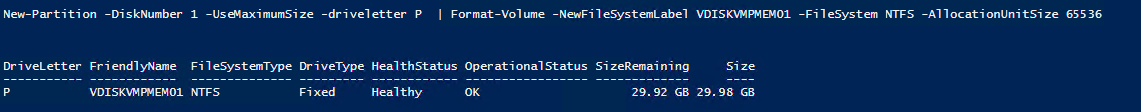

We format it with NTFS as a block volume.

    New-Partition -DiskNumber 1 -UseMaximumSize -DriveLetter P | Format-Volume -NewFileSystemLabel VDISKVMPMEM01 -FileSystem NTFS -AllocationUnitSize 65536

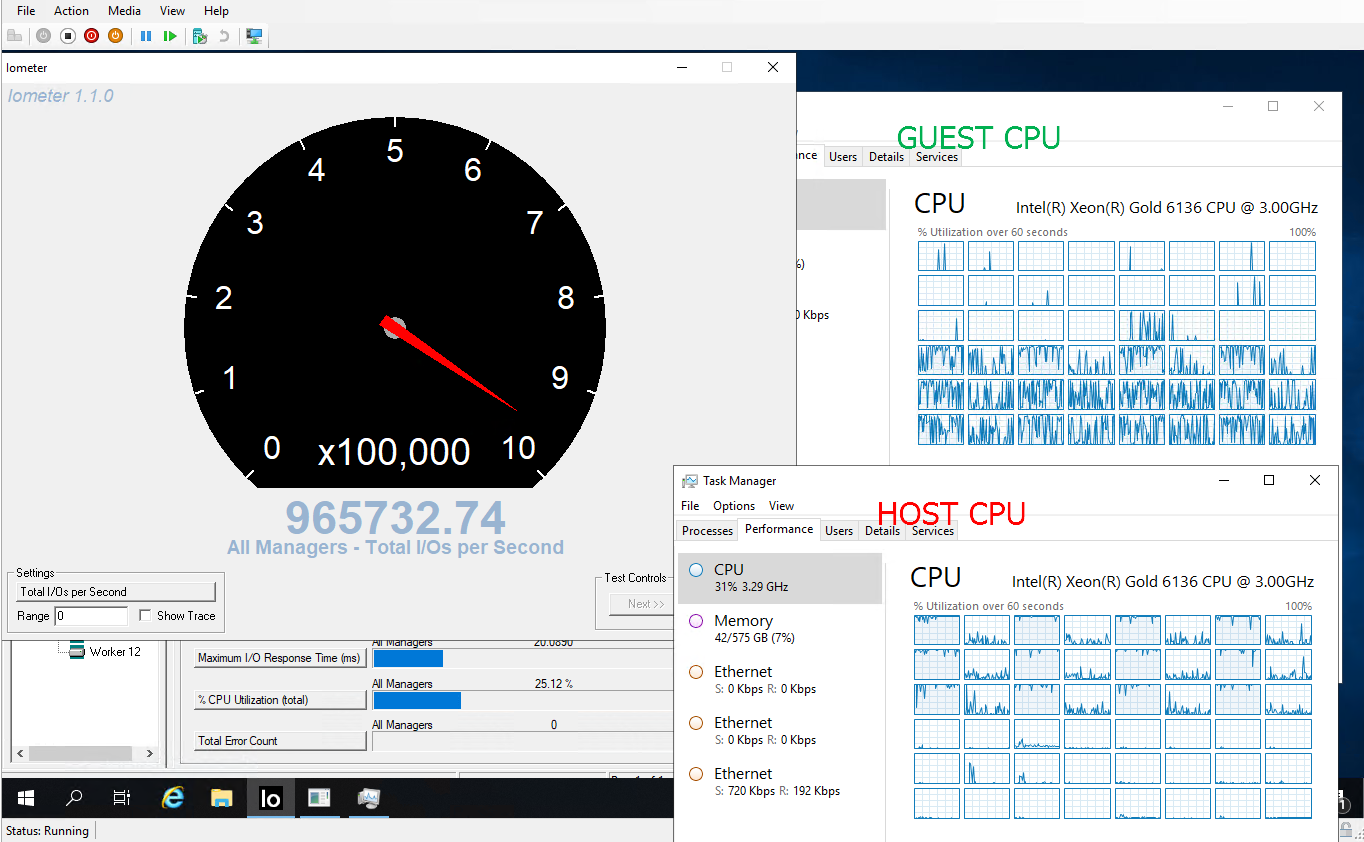

We played around with IOMeter to have some fun. Let me just share one screenshot below to show you the kind of performance we can get from this.

Below is a screenshot of 8K, 70% read, 30% write, 70% sequential, 30% random IO.

Note those poor 12 CPUs that are maxed out. You get the message, I guess? High speed and low latency memory, storage and networking. My dear CPU builders, now it’s up to you, I guess. This is one persistent memory disk inside a virtual machine, block access (not DAX) with 3 interleaved NVDIMM-N modules. These 3 interleaved NVDIMMs are going to one processor socket and that is reflected in the CPU usage.

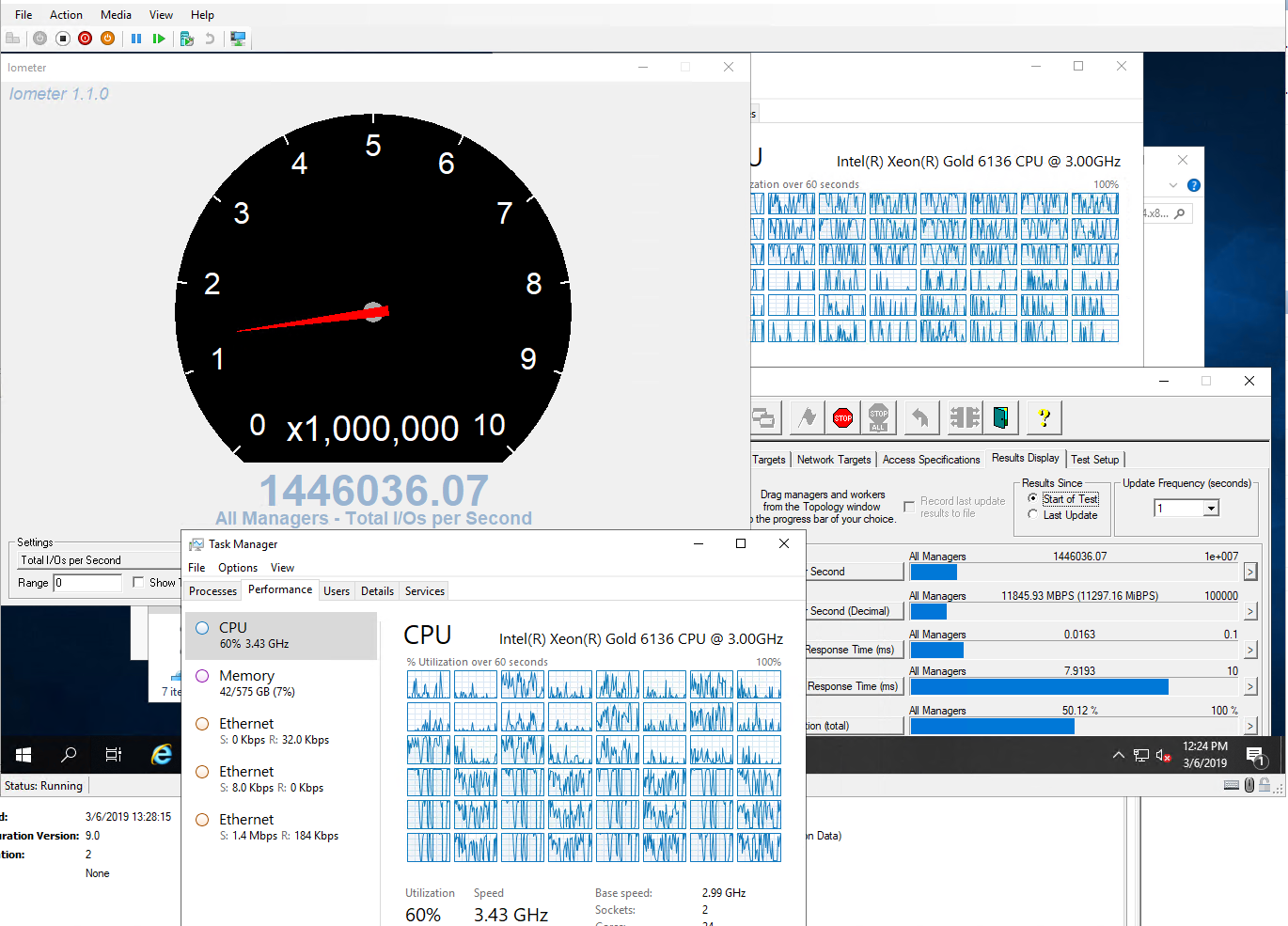

One more run with 2 persistent memory disks now and 24 workers just for fun … again 8K, 70% read, 30% write, 70% sequential, 30% random IO.

Next on the to-do list is to play some more with diskspd and then work together with some DBAs and their fine SQL Server virtual machines to see what we can do for them.
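For reference, a diskspd run in the same spirit as the IOMeter tests could look like the sketch below. Everything specific here is an assumption for illustration: that diskspd.exe sits in the current folder, that P: is the persistent memory volume inside the VM, and the test file name, size, duration, thread count and queue depth. Note that -r makes the run fully random, so it does not reproduce the 70% sequential, 30% random mix used above.

```powershell
# -b8K  : 8K block size            -d60 : run for 60 seconds
# -t12  : 12 threads per target    -o32 : 32 outstanding I/Os per thread
# -w30  : 30% writes, 70% reads    -r   : random I/O
# -Sh   : disable software caching and hardware write caching
# -L    : collect latency statistics
# -c10G : create a 10GB test file
.\diskspd.exe -b8K -d60 -t12 -o32 -w30 -r -Sh -L -c10G P:\diskspd-test.dat
```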

At the Microsoft Ignite Conference 2018, they showcased 12 * 2U servers in an S2D cluster with 2 Intel Optane modules per server as cache and NVMe as capacity drives. They fired up all VMs to deliver 13 million IOPS. This is 1 server, one single virtual machine leveraging NVDIMM-N modules. Optane comes in significantly bigger capacity sizes than NVDIMM-N. For the current lab work conditions, the NVDIMM-N modules made the most sense. I will however be testing Intel Optane modules in the future. It is early days yet but the capabilities are very promising for various scenarios.

Current limitations with Hyper-V

At the time of writing, persistent memory disks are only supported in generation 2 virtual machines and I have no issues with that. This is about performance and generation 1 virtual machines today are about backward compatibility, not speed.

What I found sadly missing in action is support for Live Migration. Now Microsoft has this working in Azure so my guess is that it is coming. Unfortunately, it isn’t here yet. At least not exposed in the GUI or via PowerShell that I know of. VMware is leading on that front.

It is also worth noting that while a virtual machine with persistent memory disks supports production checkpoints, these do not include persistent memory state.

The last two are on my wishlist, but when I have to prioritize, I choose live migration as my highest priority, followed by checkpoint support, as I like to protect my data and services optimally.